Generative AI took the consumer landscape by storm in 2023, reaching over a billion dollars of consumer spend¹ in record time. In 2024, we believe the revenue opportunity will be multiples larger in the enterprise.

生成式人工智能在 2023 年席卷了消费者领域,在创纪录的时间内达到了超过 10 亿美元的消费者支出。到 2024 年,我们相信企业的收入机会将增加数倍。

Last year, while consumers spent hours chatting with new AI companions or making images and videos with diffusion models, most enterprise engagement with genAI seemed limited to a handful of obvious use cases and shipping “GPT-wrapper” products as new SKUs. Some naysayers doubted that genAI could scale into the enterprise at all. Aren’t we stuck with the same 3 use cases? Can these startups actually make any money? Isn’t this all hype?

去年,当消费者花费数小时与新的人工智能伙伴聊天或使用扩散模型制作图像和视频时,大多数企业与genAI的互动似乎仅限于少数明显的用例,并将“GPT包装器”产品作为新的SKU发布。一些反对者怀疑genAI能否扩展到企业。我们不是被困在相同的 3 个用例中吗?这些创业公司真的能赚钱吗?这不都是炒作吗?

Over the past couple of months, we’ve spoken with dozens of Fortune 500 and top enterprise leaders,² and surveyed 70 more, to understand how they’re using, buying, and budgeting for generative AI. We were shocked by how significantly the resourcing and attitudes toward genAI had changed over the last 6 months. Though these leaders still have some reservations about deploying generative AI, they’re also nearly tripling their budgets, expanding the number of use cases that are deployed on smaller open-source models, and transitioning more workloads from early experimentation into production.

在过去的几个月里,我们与数十家财富 500 强企业和顶级企业领导者进行了交谈, 2 并对另外 70 家企业进行了调查,以了解他们如何使用、购买和预算生成式 AI。在过去的 6 个月里,我们对 genAI 的资源和态度发生了如此重大的变化感到震惊。尽管这些领导者仍然对部署生成式人工智能持保留态度,但他们也将预算增加了近两倍,扩大了部署在较小开源模型上的用例数量,并将更多工作负载从早期实验转移到生产中。

This is a massive opportunity for founders. We believe that AI startups that 1) build for enterprises’ AI-centric strategic initiatives while anticipating their pain points, and 2) move from a services-heavy approach to building scalable products will capture this new wave of investment and carve out significant market share.

这对创始人来说是一个巨大的机会。我们相信,如果 1) 为企业以 AI 为中心的战略计划构建,同时预测他们的痛点,以及 2) 从以服务为主的方法转向构建可扩展的产品,人工智能初创公司将抓住这一新一轮投资浪潮并开拓可观的市场份额。

As always, building and selling any product for the enterprise requires a deep understanding of customers’ budgets, concerns, and roadmaps. To clue founders into how enterprise leaders are making decisions about deploying generative AI—and to give AI executives a handle on how other leaders in the space are approaching the same problems they have—we’ve outlined 16 top-of-mind considerations about resourcing, models, and use cases from our recent conversations with those leaders below.

与往常一样,为企业构建和销售任何产品都需要深入了解客户的预算、关注点和路线图。为了让创始人了解企业领导者如何做出有关部署生成式 AI 的决策,并让 AI 高管了解该领域的其他领导者如何处理他们遇到的相同问题,我们从最近与这些领导者的对话中概述了 16 个关于资源、模型和用例的首要考虑因素。

Resourcing: budgets are growing dramatically and here to stay

资源:预算正在急剧增长,并将继续存在

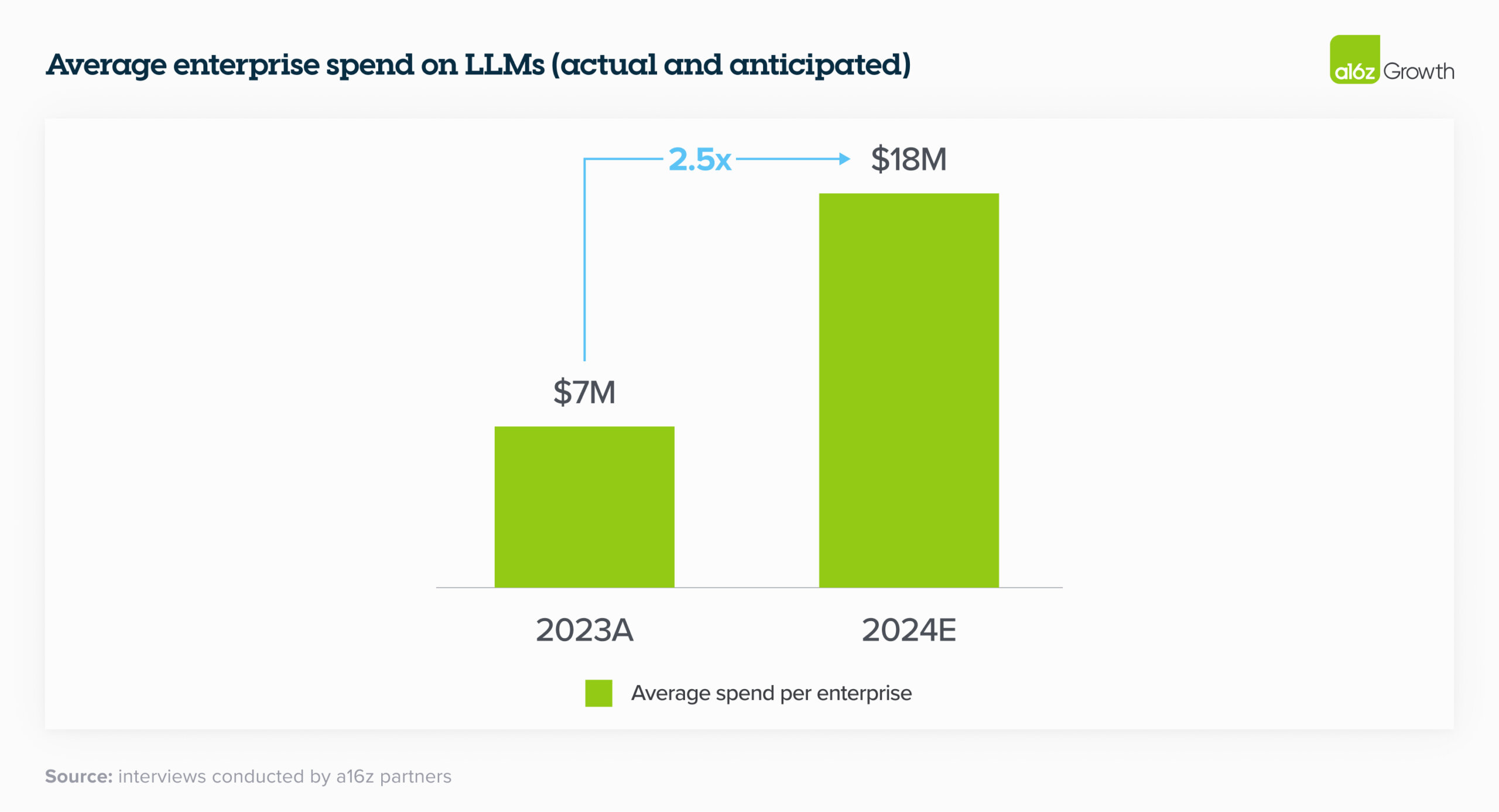

1. Budgets for generative AI are skyrocketing.

1. 生成式人工智能的预算正在飙升。

In 2023, the average spend across foundation model APIs, self-hosting, and fine-tuning models was $7M across the dozens of companies we spoke to. Moreover, nearly every single enterprise we spoke with saw promising early results of genAI experiments and planned to increase their spend anywhere from 2x to 5x in 2024 to support deploying more workloads to production.

2023 年,在我们采访的数十家公司中,基础模型 API、自托管和微调模型的平均支出为 700 万美元。此外,我们采访的几乎每家企业都看到了 genAI 实验的早期成果,并计划在 2024 年将支出增加 2 倍到 5 倍,以支持将更多工作负载部署到生产环境。
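As a rough illustration of what the planned 2x to 5x increase implies, the sketch below applies those multiples to the $7M average. Applying the multiples to the average is a simplifying assumption made here for illustration (individual companies will differ), and the function and constant names are hypothetical.

```python
# Figures taken from the text above.
AVERAGE_2023_SPEND_USD = 7_000_000   # average 2023 spend across interviewed companies
PLANNED_MULTIPLES = (2, 5)           # planned 2024 increase, low and high end


def projected_spend_range(base_spend, multiples):
    """Return the (low, high) spend implied by applying each multiple to the base."""
    low_multiple, high_multiple = multiples
    return base_spend * low_multiple, base_spend * high_multiple


low_2024, high_2024 = projected_spend_range(AVERAGE_2023_SPEND_USD, PLANNED_MULTIPLES)
print(f"Implied 2024 spend: ${low_2024 / 1e6:.0f}M to ${high_2024 / 1e6:.0f}M")
```

Under that assumption, an average company would land somewhere between $14M and $35M in 2024.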

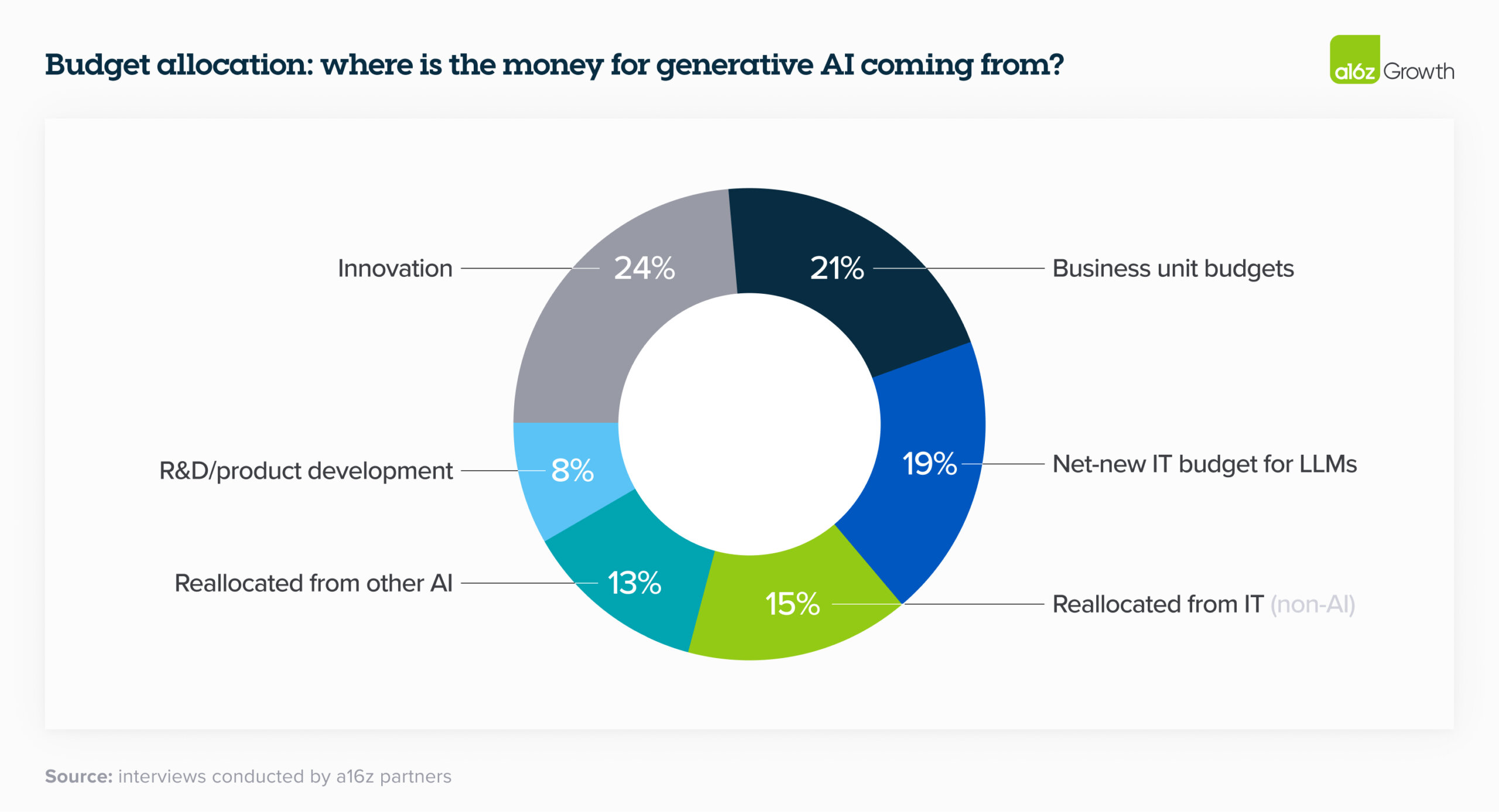

2. Leaders are starting to reallocate AI investments to recurring software budget lines.

2. 领导者开始将人工智能投资重新分配给经常性的软件预算项目。

Last year, much of enterprise genAI spend unsurprisingly came from “innovation” budgets and other typically one-time pools of funding. In 2024, however, many leaders are reallocating that spend to more permanent software line items; fewer than a quarter reported that genAI spend will come from innovation budgets this year. On a much smaller scale, we’ve also started to see some leaders deploying their genAI budget against headcount savings, particularly in customer service. We see this as a harbinger of significantly higher future spend on genAI if the trend continues. One company cited saving ~$6 for each call served by their LLM-powered customer service—for a total of ~90% cost savings—as a reason to increase their investment in genAI eightfold.

不出所料,企业genAI的大部分支出来自“创新”预算和其他典型的一次性资金池。然而,在 2024 年,许多领导者正在将这笔支出重新分配给更持久的软件项目;只有不到四分之一的受访者表示,genAI今年的支出将来自创新预算。在更小的范围内,我们也开始看到一些领导者将他们的 genAI 预算用于节省员工人数,尤其是在客户服务方面。我们认为,如果这种趋势继续下去,这预示着未来在genAI上的支出将大幅增加。一家公司表示,其LLM支持的客户服务为每个呼叫节省了 ~6 美元——总共节省了 ~90% 的成本——这是他们将 genAI 投资增加八倍的原因。
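The customer service figures can be unpacked with a little arithmetic. The sketch below assumes the ~90% saving applies to the cost of each call, which the text does not state explicitly; the function name is hypothetical.

```python
def implied_costs(saving_per_call, saving_fraction):
    """Back out the original and remaining cost per call.

    Assumes saving_per_call = saving_fraction * original_cost.
    """
    original_cost = saving_per_call / saving_fraction
    remaining_cost = original_cost - saving_per_call
    return original_cost, remaining_cost


# ~$6 saved per call, ~90% total cost savings (both from the text).
original_cost, remaining_cost = implied_costs(6.0, 0.90)
print(f"Implied cost per call before: ${original_cost:.2f}")
print(f"Implied cost per call after:  ${remaining_cost:.2f}")
```

Read this way, a call that used to cost roughly $6.67 now costs roughly $0.67, which helps explain why that company was willing to increase its investment eightfold.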

Here’s the overall breakdown of how orgs are allocating their LLM spend:

以下是组织如何分配其LLM支出的总体细分:

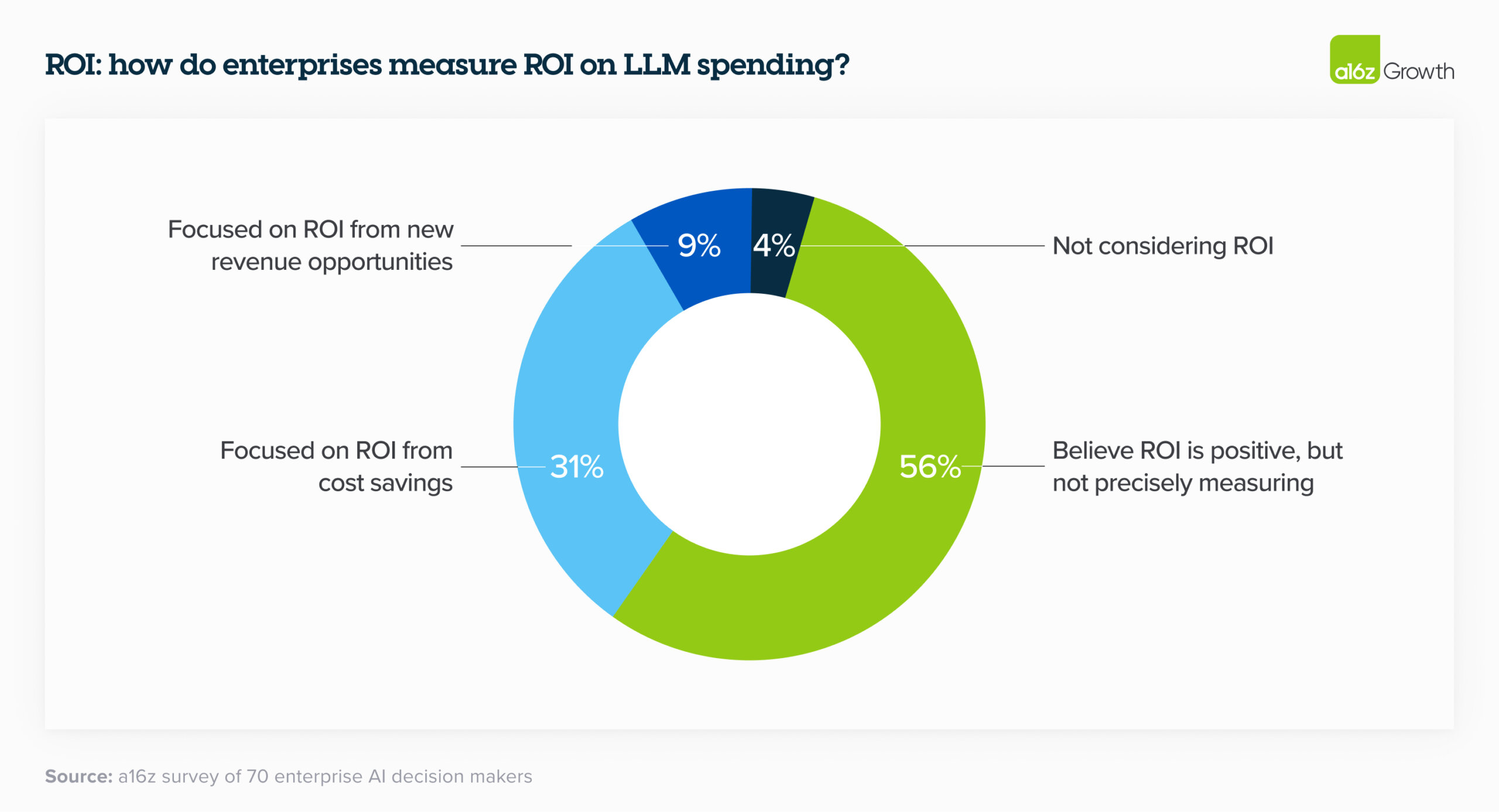

3. Measuring ROI is still an art and a science.

3. 衡量投资回报率仍然是一门艺术和一门科学。

Enterprise leaders currently measure ROI mostly through the productivity gains that AI generates. While they are relying on NPS and customer satisfaction as good proxy metrics, they’re also looking for more tangible ways to measure returns, such as revenue generation, savings, efficiency, and accuracy gains, depending on their use case. In the near term, leaders are still rolling out this tech and figuring out the best metrics to use to quantify returns, but over the next 2 to 3 years ROI will be increasingly important. While leaders are figuring out the answer to this question, many are taking it on faith when their employees say they’re making better use of their time.

目前,企业领导者主要通过提高人工智能产生的生产力来衡量投资回报率。虽然他们依靠 NPS 和客户满意度作为良好的代理指标,但他们也在寻找更切实的方法来衡量回报,例如创收、节省、效率和准确性提高,具体取决于他们的用例。在短期内,领导者仍在推出这项技术,并找出用于量化回报的最佳指标,但在未来 2 到 3 年内,投资回报率将变得越来越重要。虽然领导者正在找出这个问题的答案,但当他们的员工说他们正在更好地利用他们的时间时,许多人会相信这一点。

4. Implementing and scaling generative AI requires the right technical talent, which currently isn’t in-house for many enterprises.

4. 实施和扩展生成式人工智能需要合适的技术人才,而目前许多企业内部并不具备这些人才。

Simply having API access to a model provider isn’t enough to build and deploy generative AI solutions at scale. It takes highly specialized talent to implement, maintain, and scale the requisite computing infrastructure. Implementation alone accounted for one of the biggest areas of AI spend in 2023 and was, in some cases, the largest. One executive mentioned that “LLMs are probably a quarter of the cost of building use cases,” with development costs accounting for the majority of the budget.

仅仅为模型提供程序提供 API 并不足以大规模构建和部署生成式 AI 解决方案。实施、维护和扩展必要的计算基础设施需要高度专业化的人才。仅实施就占了 2023 年人工智能支出的最大领域之一,在某些情况下,也是最大的领域。一位高管提到,“LLMs可能是构建用例成本的四分之一”,开发成本占预算的大部分。

In order to help enterprises get up and running on their models, foundation model providers offered and are still providing professional services, typically related to custom model development. We estimate that this made up a sizable portion of revenue for these companies in 2023 and, in addition to performance, is one of the key reasons enterprises selected certain model providers. Because it’s so difficult to get the right genAI talent in the enterprise, startups who offer tooling to make it easier to bring genAI development in house will likely see faster adoption.

Models: enterprises are trending toward a multi-model, open source world

5. A multi-model future.

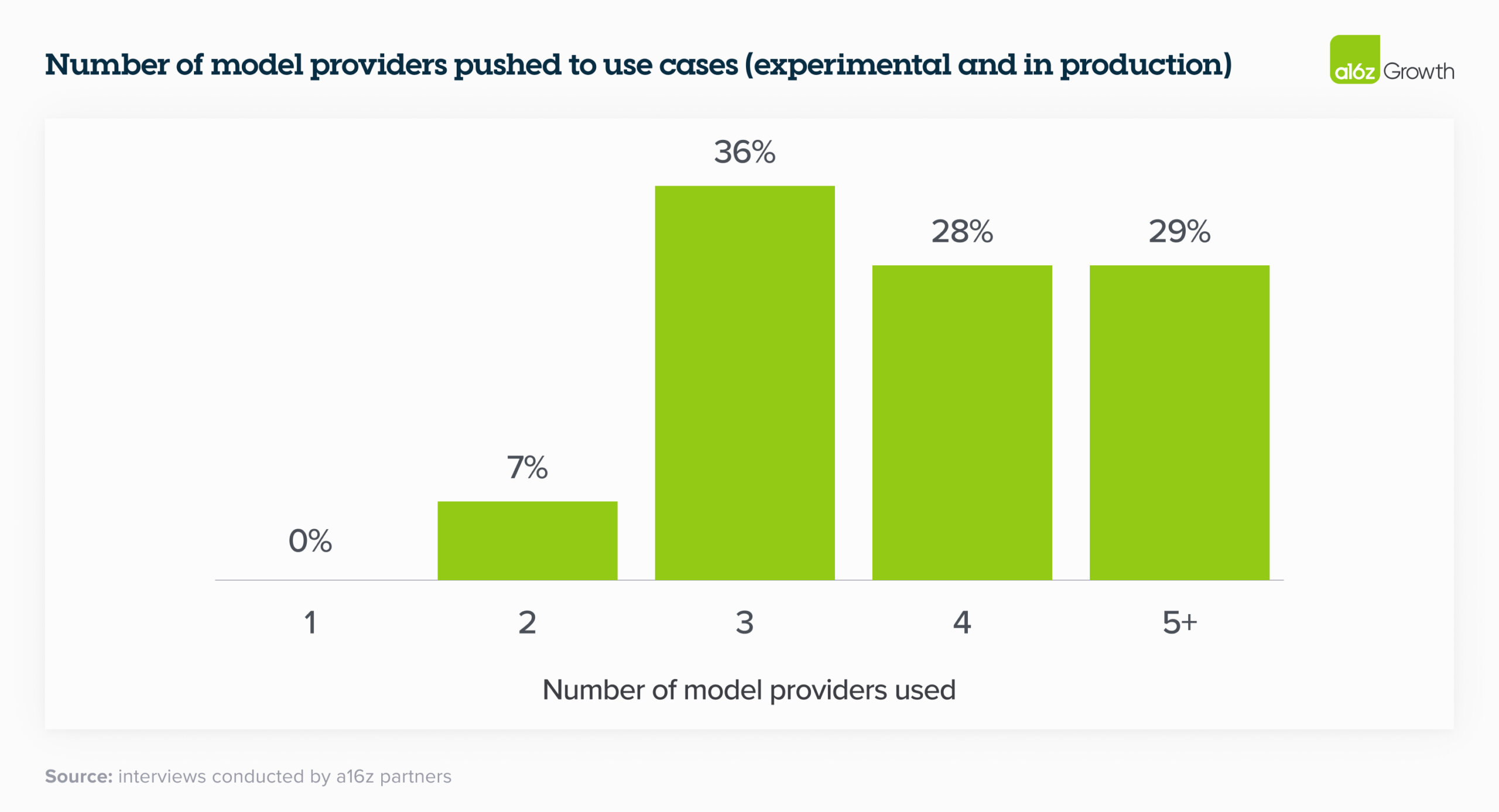

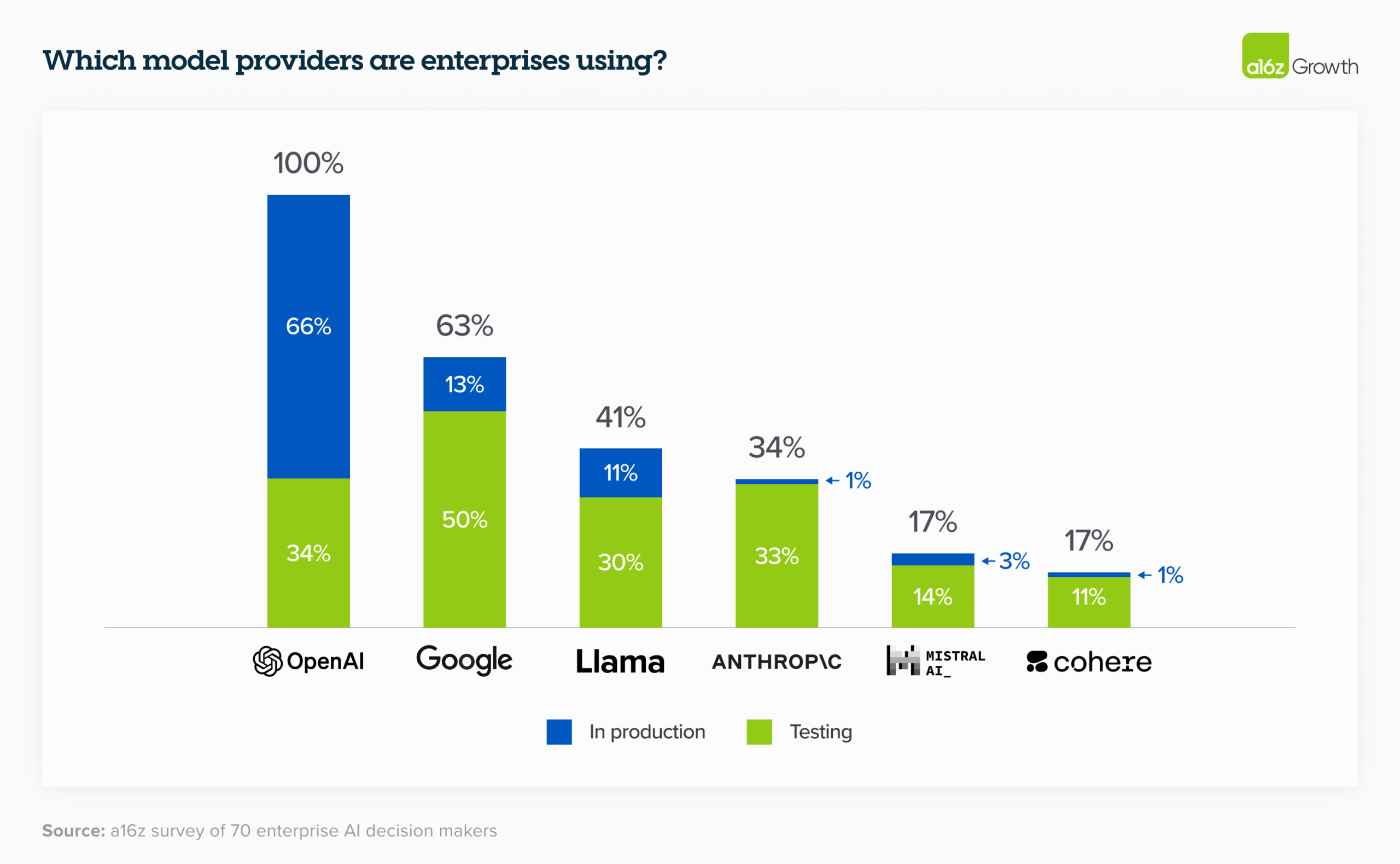

Just over 6 months ago, the vast majority of enterprises were experimenting with 1 model (usually OpenAI’s) or 2 at most. When we talk to enterprise leaders today, they’re all testing multiple models, and in some cases even using them in production, which allows them to 1) tailor to use cases based on performance, size, and cost, 2) avoid lock-in, and 3) quickly tap into advancements in a rapidly moving field. This third point was especially important to leaders, since the model leaderboard is dynamic and companies are excited to incorporate both current state-of-the-art models and open-source models to get the best results.

就在 6 个多月前,绝大多数企业都在试验 1 个模型(通常是 OpenAI 的模型)或最多 2 个模型。当我们今天与企业领导者交谈时,所有测试(在某些情况下,甚至在生产中使用)多个模型,这使他们能够 1) 根据性能、规模和成本定制用例,2) 避免锁定,以及 3) 快速利用快速发展的领域的进步。这第三点对领导者来说尤为重要,因为模型排行榜是动态的,公司很高兴能够同时结合当前最先进的模型和开源模型以获得最佳结果。

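We’ll likely see even more models proliferate. In the table below, drawn from survey data, enterprise leaders reported a number of models in testing, which is a leading indicator of the models that will be used to push workloads to production. For production use cases, OpenAI still has dominant market share, as expected.

我们可能会看到更多的模型激增。在下表中,根据调查数据,企业领导者报告了许多测试中的模型,这是将用于将工作负载推送到生产环境的模型的领先指标。对于生产用例,OpenAI仍然占据主导地位,正如预期的那样。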

6. Open source is booming.

6. 开源正在蓬勃发展。

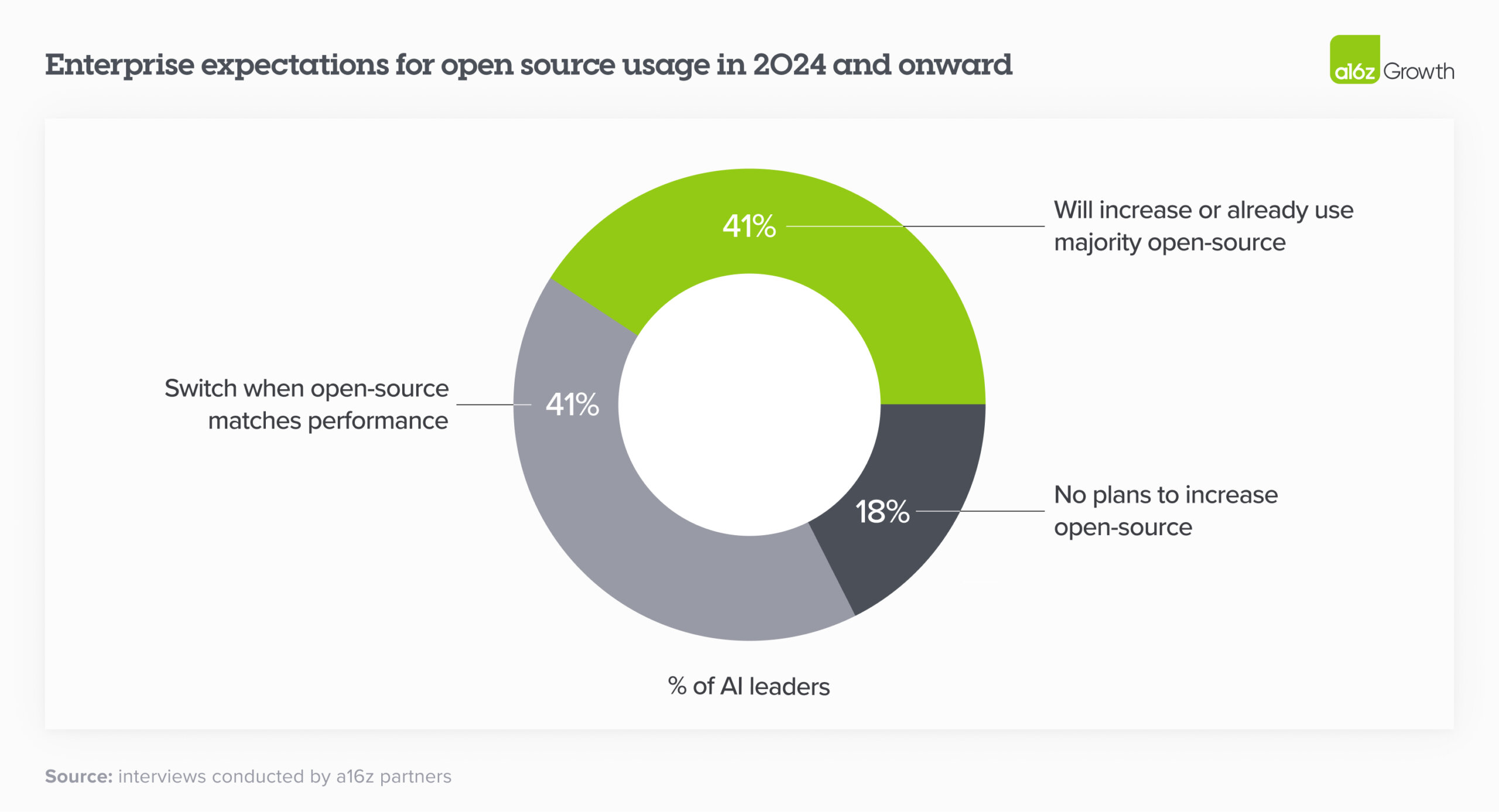

This is one of the most surprising changes in the landscape over the past 6 months. We estimate the market share in 2023 was 80%–90% closed source, with the majority of share going to OpenAI. However, 46% of survey respondents mentioned that they prefer or strongly prefer open source models going into 2024. In interviews, nearly 60% of AI leaders noted that they were interested in increasing open source usage or switching when fine-tuned open source models roughly matched performance of closed-source models. In 2024 and onwards, then, enterprises expect a significant shift of usage towards open source, with some expressly targeting a 50/50 split—up from the 80% closed/20% open split in 2023.

这是过去 6 个月来最令人惊讶的变化之一。我们估计 2023 年的市场份额为 80%–90% 的闭源,其中大部分份额归 OpenAI 所有。然而,46% 的受访者提到,他们更喜欢或非常喜欢 2024 年的开源模型。在采访中,近 60% 的 AI 领导者指出,当微调的开源模型的性能大致匹配时,他们有兴趣增加开源的使用或切换。因此,在 2024 年及以后,企业预计使用将向开源发生重大转变,一些企业明确的目标是 50/50 的拆分——高于 2023 年的 80% 封闭/20% 开放拆分。

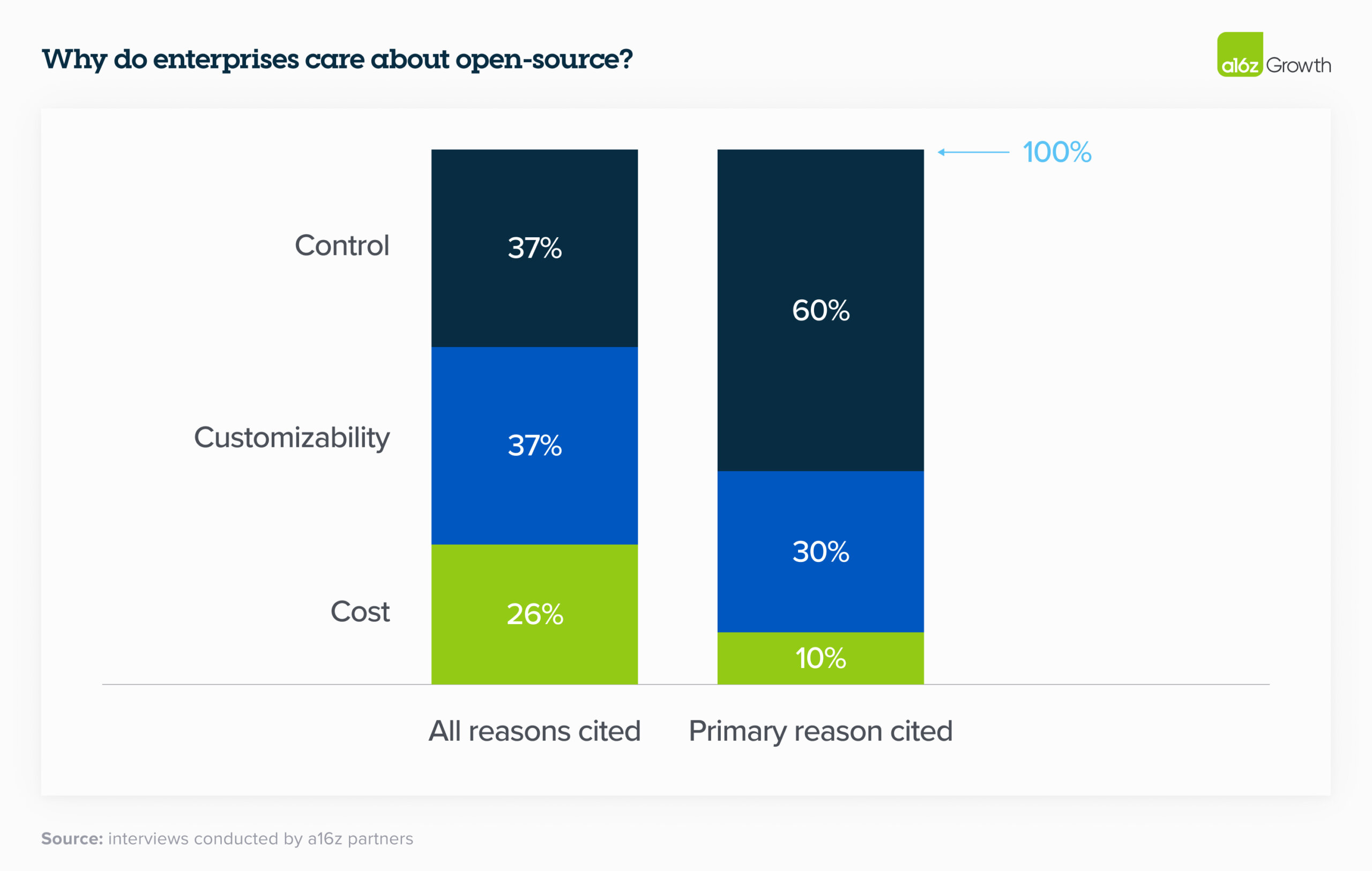

7. While cost factored into open source appeal, it ranked below control and customization as key selection criteria.

7. 虽然成本因素影响了开源的吸引力,但它作为关键的选择标准排在控制和定制之下。

Control (security of proprietary data and understanding why models produce certain outputs) and customization (ability to effectively fine-tune for a given use case) far outweighed cost as the primary reasons to adopt open source. We were surprised that cost wasn’t top of mind, but it reflects the leadership’s current conviction that the excess value created by generative AI will likely far outweigh its price. As one executive explained: “getting an accurate answer is worth the money.”

控制(专有数据的安全性和理解模型产生某些输出的原因)和定制(针对给定用例进行有效微调的能力)远远超过成本,这是采用开源的主要原因。令我们惊讶的是,成本并不是首要考虑因素,但它反映了领导层目前的信念,即生成式人工智能创造的超额价值可能会远远超过其价格。正如一位高管所解释的那样:“得到一个准确的答案是值得的。”

8. Desire for control stems from sensitive use cases and enterprise data security concerns.

8. 对控制的渴望源于敏感的用例和企业数据安全问题。

Enterprises still aren’t comfortable sharing their proprietary data with closed-source model providers out of regulatory or data security concerns—and unsurprisingly, companies whose IP is central to their business model are especially conservative. While some leaders addressed this concern by hosting open source models themselves, others noted that they were prioritizing models with virtual private cloud (VPC) integrations.

出于监管或数据安全方面的考虑,企业仍然不愿意与闭源模型提供商共享其专有数据,不出所料,知识产权对其商业模式至关重要的公司尤其保守。虽然一些领导者通过自己托管开源模型来解决这个问题,但其他人指出,他们正在优先考虑具有虚拟私有云 (VPC) 集成的模型。

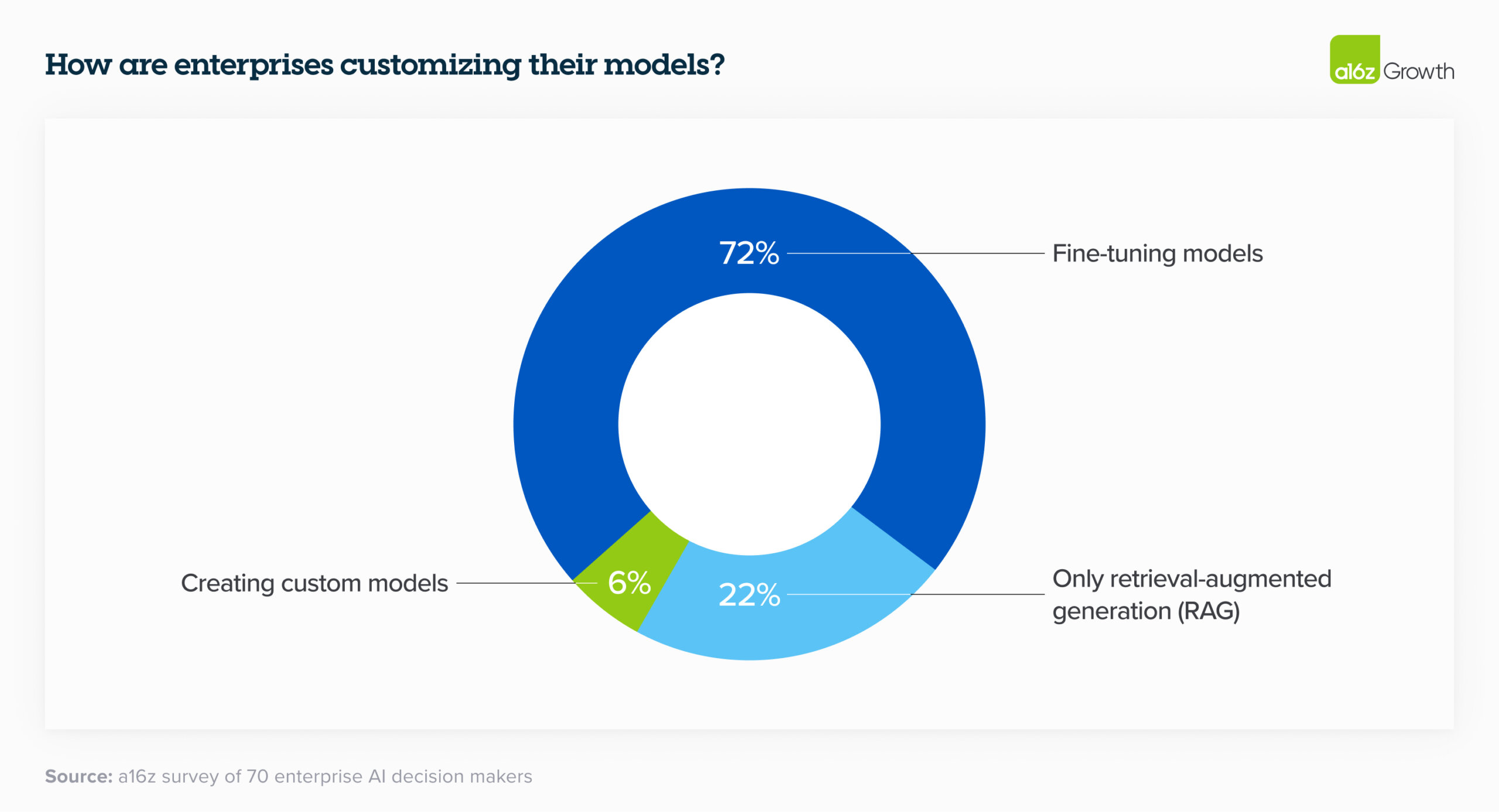

9. Leaders generally customize models through fine-tuning instead of building models from scratch.

9. 领导者通常通过微调来定制模型,而不是从头开始构建模型。

In 2023, there was a lot of discussion around building custom models like BloombergGPT. In 2024, enterprises are still interested in customizing models, but with the rise of high-quality open source models, most are opting not to train their own LLM from scratch and instead use retrieval-augmented generation (RAG) or fine-tune an open source model for their specific needs.

2023 年,围绕构建像 BloombergGPT 这样的自定义模型进行了大量讨论。到 2024 年,企业仍然对定制模型感兴趣,但随着高质量开源模型的兴起,大多数企业选择不从头开始训练自己的LLM模型,而是使用检索增强生成 (RAG) 或根据其特定需求微调开源模型。
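To make the RAG option concrete, here is a deliberately tiny sketch of the pattern: retrieve the most relevant internal document for a question, then place it in the prompt that would be sent to a model. Everything in it is an illustrative assumption. Production systems typically retrieve with vector embeddings rather than the word-overlap score used here, and the sample documents, function names, and prompt layout are all hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query, document):
    """Count the tokens shared by the query and the document (toy relevance score)."""
    shared = Counter(tokenize(query)) & Counter(tokenize(document))
    return sum(shared.values())


def retrieve(query, documents, k=1):
    """Return the k documents with the highest overlap score."""
    ranked = sorted(documents, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Assemble a prompt that grounds the question in the retrieved context."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical internal knowledge base.
DOCS = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
]

question = "How long do refunds take?"
prompt = build_prompt(question, DOCS)
print(prompt)  # this prompt would then be sent to whichever model the enterprise uses
```

The point of the pattern is that the base model stays unchanged: proprietary knowledge is supplied at query time, which is one reason it can be attractive compared with training a model from scratch.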

10. Cloud is still highly influential in model purchasing decisions.

10. 云在模型购买决策中仍然具有很大影响力。

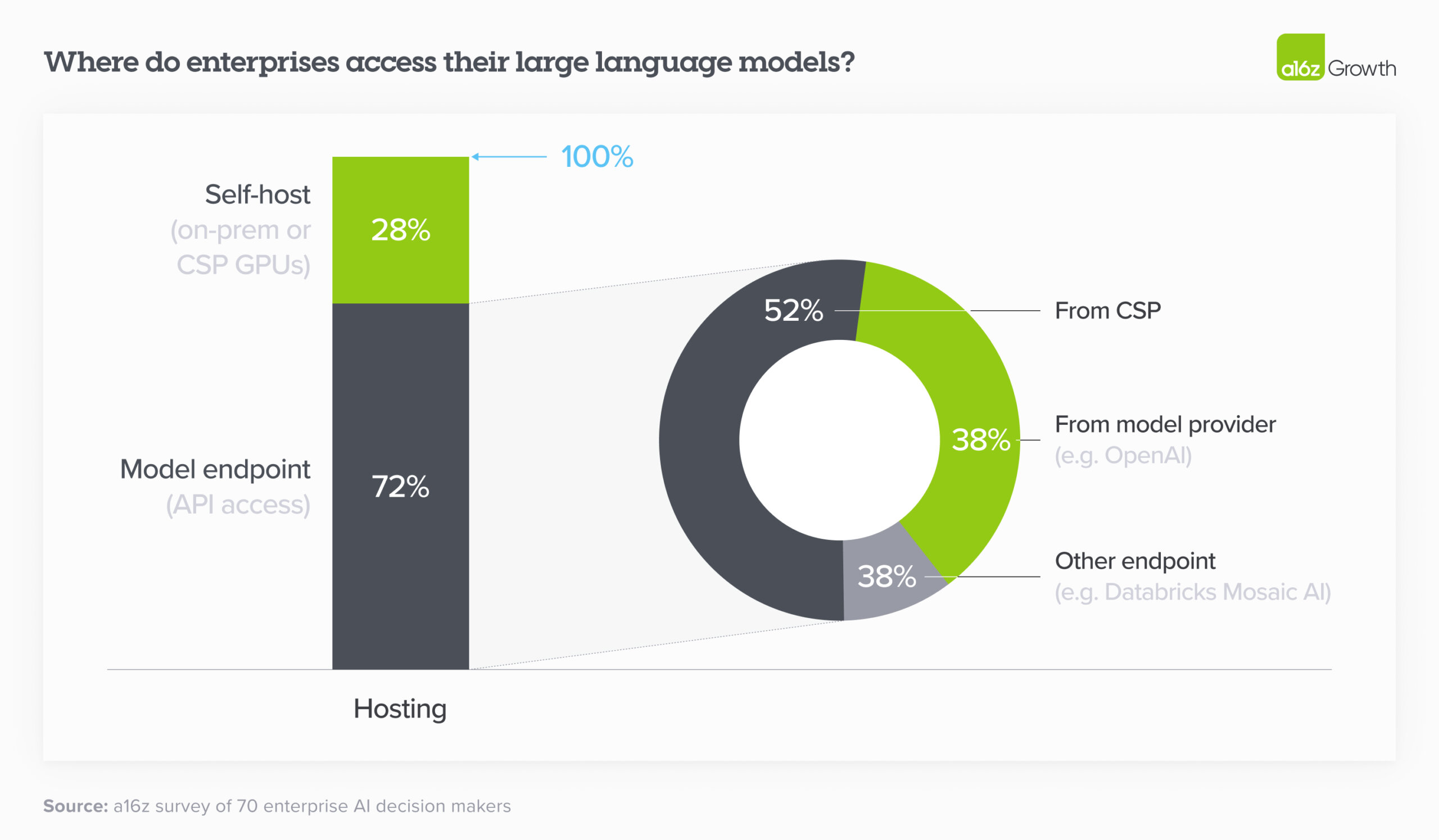

In 2023, many enterprises bought models through their existing cloud service provider (CSP) for security reasons—leaders were more concerned about closed-source models mishandling their data than their CSPs—and to avoid lengthy procurement processes. This is still the case in 2024, which means that the correlation between CSP and preferred model is fairly high: Azure users generally preferred OpenAI, while Amazon users preferred Anthropic or Cohere. As we can see in the chart below, of the 72% of enterprises who use an API to access their model, over half used the model hosted by their CSP. (Note that over a quarter of respondents did self-host, likely in order to run open source models.)

2023 年,出于安全原因,许多企业通过其现有的云服务提供商 (CSP) 购买了模型(领导者更担心闭源模型提供商而不是其 CSP 对数据处理不当),同时也是为了避免冗长的采购流程。2024 年仍然如此,这意味着 CSP 和首选模型之间的相关性相当高:Azure 用户通常更喜欢 OpenAI,而亚马逊用户更喜欢 Anthropic 或 Cohere。如下图所示,在使用 API 访问其模型的 72% 的企业中,超过一半的企业使用其 CSP 托管的模型。(请注意,超过四分之一的受访者确实是自托管的,可能是为了运行开源模型。)

11. Customers still care about early-to-market features.

11. 客户仍然关心早期上市的功能。

While leaders cited reasoning capability, reliability, and ease of access (e.g., on their CSP) as the top reasons for adopting a given model, leaders also gravitated toward models with other differentiated features. Multiple leaders cited the prior 200K context window as a key reason for adopting Anthropic, for instance, while others adopted Cohere because of their early-to-market, easy-to-use fine-tuning offering.

虽然领导者将推理能力、可靠性和易用性(例如,在他们的 CSP 上)作为采用给定模型的主要原因,但领导者也倾向于具有其他差异化特征的模型。例如,多位领导者将之前的 200K 上下文窗口作为采用 Anthropic 的关键原因,而其他人则采用 Cohere,因为他们的早期上市、易于使用的微调产品。

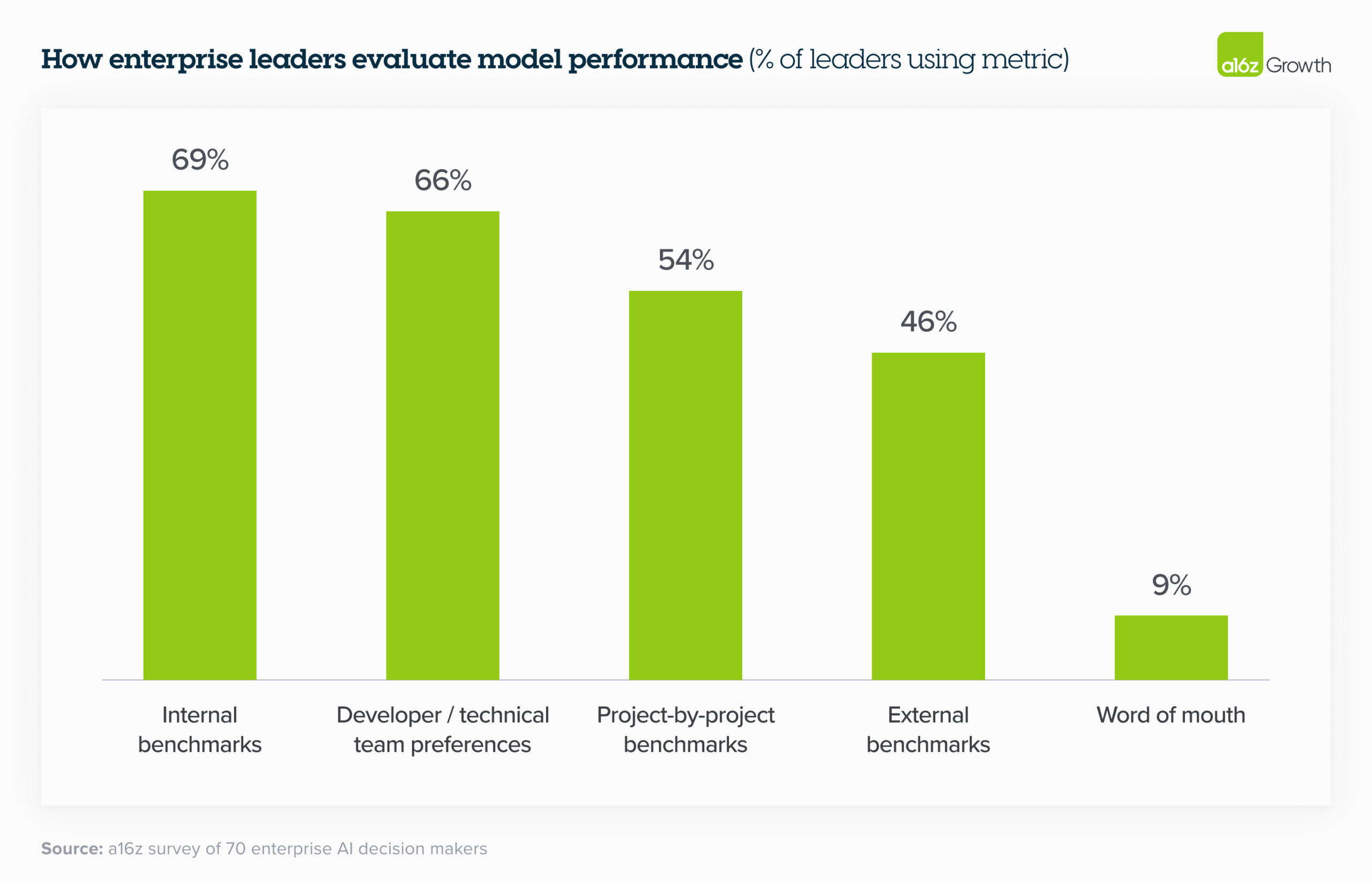

12. That said, most enterprises think model performance is converging.

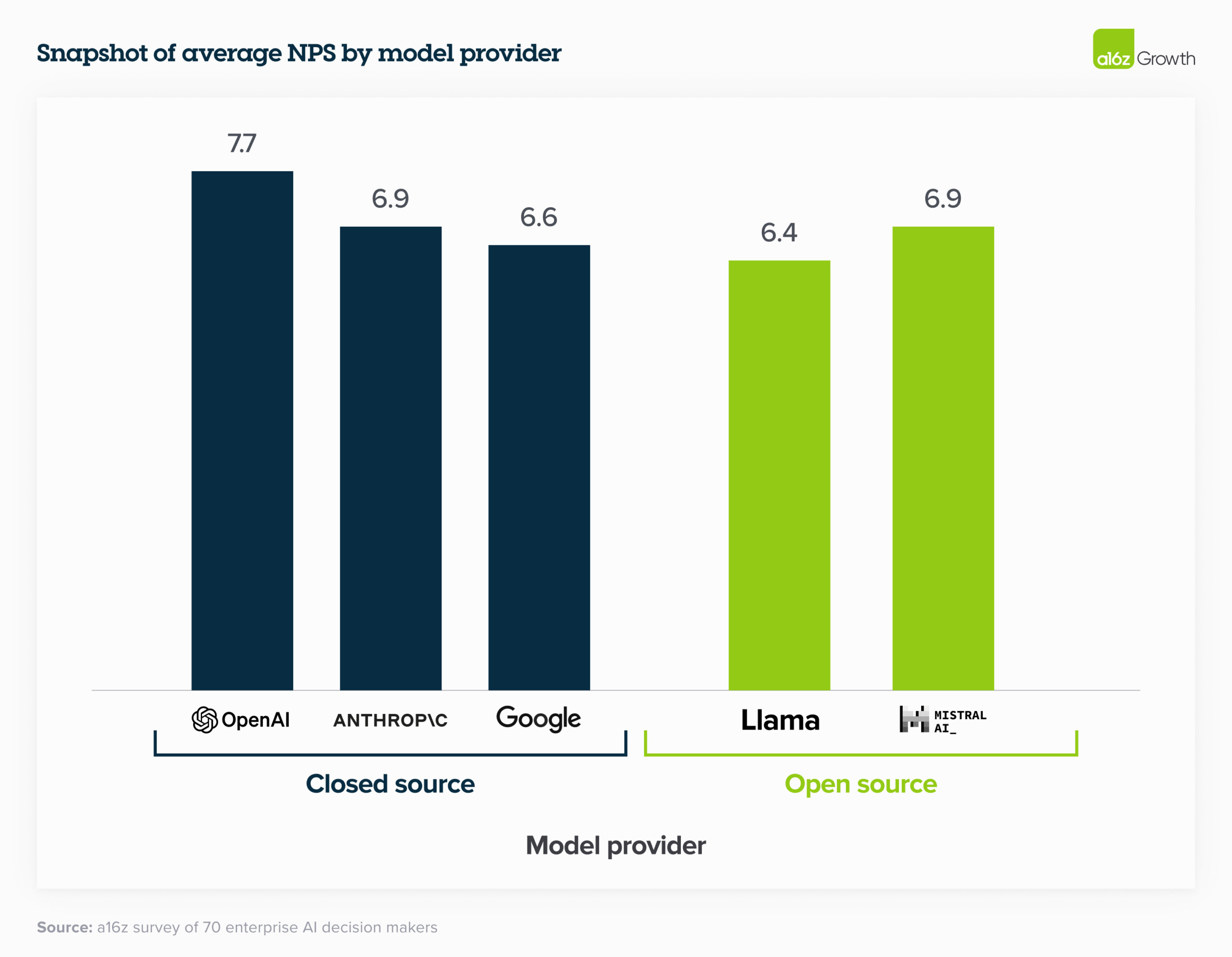

While large swathes of the tech community focus on comparing model performance to public benchmarks, enterprise leaders are more focused on comparing the performance of fine-tuned open-source models and fine-tuned closed-source models against their own internal sets of benchmarks. Interestingly, despite closed-source models typically performing better on external benchmarking tests, enterprise leaders still gave open-source models relatively high NPS (and in some cases higher) because they’re easier to fine-tune to specific use cases. One company found that “after fine-tuning, Mistral and Llama perform almost as well as OpenAI but at much lower cost.” By these standards, model performance is converging even more quickly than we anticipated, which gives leaders a broader range of very capable models to choose from.

虽然大部分技术社区都专注于将模型性能与公共基准进行比较,但企业领导者更专注于将微调的开源模型和微调的闭源模型的性能与他们自己的内部基准集进行比较。有趣的是,尽管闭源模型通常在外部基准测试中表现更好,但企业领导者仍然为开源模型提供了相对较高的 NPS(在某些情况下甚至更高),因为它们更容易针对特定用例进行微调。一家公司发现,“经过微调,Mistral 和 Llama 的性能几乎与 OpenAI 一样好,但成本要低得多。”按照这些标准,模型性能的收敛速度比我们预期的要快,这为领导者提供了更广泛的非常有能力的模型可供选择。

13. Optimizing for optionality.

13. 针对可选性进行优化。

Most enterprises are designing their applications so that switching between models requires little more than an API change. Some companies are even pre-testing prompts so the change happens literally at the flick of a switch, while others have built “model gardens” from which they can deploy models to different apps as needed. Companies are taking this approach in part because they’ve learned some hard lessons from the cloud era about the need to reduce dependency on providers, and in part because the market is evolving at such a fast clip that it feels unwise to commit to a single vendor.
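One way to picture this design is a thin routing layer between applications and models, so that swapping a model is a configuration change rather than a rewrite. The sketch below is a minimal illustration under stated assumptions: the `ModelGarden` class, the app and model names, and the stand-in model functions are all hypothetical, and a real deployment would wrap actual provider clients behind the same interface.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Any model is represented as a function from prompt text to completion text.
CompletionFn = Callable[[str], str]


@dataclass
class ModelGarden:
    """Registry of available models plus a per-app routing table."""

    models: Dict[str, CompletionFn]
    routes: Dict[str, str]  # app name -> name of the model it currently uses

    def complete(self, app: str, prompt: str) -> str:
        """Send the prompt to whichever model the app is currently routed to."""
        model_name = self.routes[app]
        return self.models[model_name](prompt)

    def switch(self, app: str, model_name: str) -> None:
        """Point an app at a different registered model."""
        if model_name not in self.models:
            raise KeyError(f"unknown model: {model_name}")
        self.routes[app] = model_name


# Stand-ins for real provider clients (hypothetical).
def fake_closed_model(prompt: str) -> str:
    return f"[closed-model] {prompt}"


def fake_open_model(prompt: str) -> str:
    return f"[open-model] {prompt}"


garden = ModelGarden(
    models={"closed-model": fake_closed_model, "open-model": fake_open_model},
    routes={"support-bot": "closed-model"},
)

before = garden.complete("support-bot", "Summarize this ticket")
garden.switch("support-bot", "open-model")  # the one-line change described above
after = garden.complete("support-bot", "Summarize this ticket")
print(before)
print(after)
```

Because application code only calls `complete`, pre-tested prompts can be kept per model and the switch itself touches nothing but the routing table.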

Use cases: more migrating to production

用例:更多迁移到生产环境

14. Enterprises are building, not buying, apps—for now.

14. 目前,企业正在构建应用程序,而不是购买应用程序。

Enterprises are overwhelmingly focused on building applications in house, citing the lack of battle-tested, category-killing enterprise AI applications as one of the drivers. After all, there aren’t Magic Quadrants for apps like this (yet!). The foundation models have also made it easier than ever for enterprises to build their own AI apps by offering APIs. Enterprises are now building their own versions of familiar use cases—such as customer support and internal chatbots—while also experimenting with more novel use cases, like writing CPG recipes, narrowing the field for molecule discovery, and making sales recommendations. Much has been written about the limited differentiation of “GPT wrappers,” or startups building a familiar interface (e.g., chatbot) for a well-known output of an LLM (e.g., summarizing documents); one reason we believe these will struggle is that AI further reduced the barrier to building similar applications in-house.

企业压倒性地专注于在内部构建应用程序,理由是缺乏经过实战考验的、杀死类别的企业 AI 应用程序是驱动因素之一。毕竟,像这样的应用程序还没有魔力象限(暂时还没有!)。基础模型还使企业比以往任何时候都更容易通过提供 API 来构建自己的 AI 应用程序。企业现在正在构建自己熟悉的用例版本,例如客户支持和内部聊天机器人,同时也在尝试更新颖的用例,例如编写 CPG 配方、缩小分子发现领域以及提出销售建议。关于“GPT 包装器”的有限差异化,即初创公司为 LLM 的常见输出(例如,总结文档)构建熟悉的界面(例如,聊天机器人),已有很多文章讨论;我们认为这些将陷入困境的一个原因是,人工智能进一步降低了在内部构建类似应用程序的障碍。

However, the jury is still out on whether this will shift when more enterprise-focused AI apps come to market. One leader noted that although they were building many use cases in house, they’re optimistic “there will be new tools coming up” and would prefer to “use the best out there.” Others believe that genAI is an increasingly “strategic tool” that allows companies to bring certain functionalities in-house instead of relying as they traditionally have on external vendors. Given these dynamics, we believe that the apps that innovate beyond the “LLM + UI” formula and significantly rethink the underlying workflows of enterprises or help enterprises better use their own proprietary data stand to perform especially well in this market.

然而,当更多以企业为中心的人工智能应用程序进入市场时,这种情况是否会改变,目前尚无定论。虽然一位领导者指出,尽管他们在内部构建了许多用例,但他们乐观地认为“将会有新的工具出现”,并且更愿意“使用最好的工具”。其他人则认为,genAI是一种越来越“战略工具”,它允许公司将某些功能引入内部,而不是像传统上那样依赖外部供应商。鉴于这些动态,我们相信,超越“LLM+ UI”公式进行创新,并显着重新思考企业的底层工作流程或帮助企业更好地使用自己的专有数据的应用程序将在这个市场中表现得特别好。

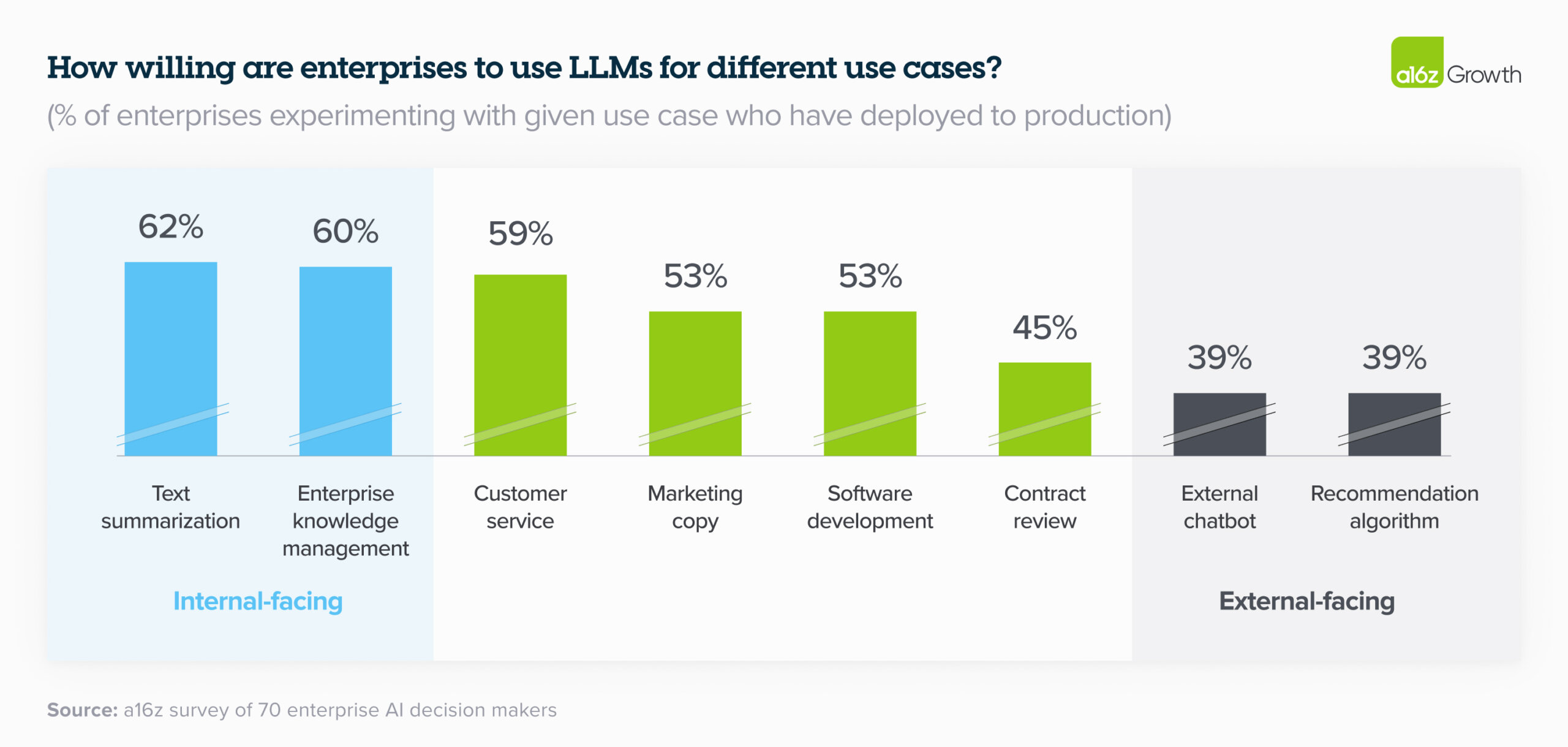

15. Enterprises are excited about internal use cases but remain more cautious about external ones.

15. 企业对内部用例感到兴奋,但对外部用例仍然更加谨慎。

That’s because 2 primary concerns about genAI still loom large in the enterprise: 1) potential issues with hallucination and safety, and 2) public relations issues with deploying genAI, particularly into sensitive consumer sectors (e.g., healthcare and financial services). The most popular use cases of the past year were either focused on internal productivity or routed through a human before getting to a customer—like coding copilots, customer support, and marketing. As we can see in the chart below, these use cases are still dominating in the enterprise in 2024, with enterprises pushing totally internal use cases like text summarization and knowledge management (e.g., internal chatbot) to production at far higher rates than sensitive human-in-the-loop use cases like contract review, or customer-facing use cases like external chatbots or recommendation algorithms. Companies are keen to avoid the fallout from generative AI mishaps like the Air Canada customer service debacle. Because these concerns still loom large for most enterprises, startups who build tooling that can help control for these issues could see significant adoption.

这是因为关于genAI的2个主要问题在企业中仍然很突出:1)幻觉和安全的潜在问题,以及2)部署genAI的公共关系问题,特别是在敏感的消费领域(例如,医疗保健和金融服务)。过去一年中最流行的用例要么专注于内部生产力,要么在到达客户之前通过人工路由,例如编码副驾驶、客户支持和营销。如下图所示,这些用例在 2024 年仍然在企业中占主导地位,企业将文本摘要和知识管理(例如内部聊天机器人)等完全内部用例推向生产的速度远远高于敏感的人机交互用例(如合同审查)或面向客户的用例(如外部聊天机器人或推荐算法)。公司热衷于避免生成式人工智能事故的影响,例如加拿大航空公司客户服务崩溃。由于这些问题对大多数企业来说仍然很突出,因此构建可以帮助控制这些问题的工具的初创公司可能会看到大量的采用。

Size of total opportunity: massive and growing quickly

总机会规模:庞大且快速增长

16. We believe total spend on model APIs and fine-tuning will grow to over $5B run-rate by the end of 2024, and enterprise spend will make up a significant part of that opportunity.

16. 我们认为,到 2024 年底,模型 API 和微调的总支出将增长到 50 亿美元以上,而企业支出将构成这一机会的重要组成部分。

We estimate that the model API (including fine-tuning) market ended 2023 at around $1.5–2B in run-rate revenue, including spend on OpenAI models via Azure. Given the anticipated growth in the overall market and concrete indications from enterprises, spend in this area alone will grow to at least $5B run-rate by year end, with significant upside potential. As we’ve discussed, enterprises have prioritized genAI deployment, increased budgets and reallocated them to standard software lines, optimized use cases across different models, and plan to push even more workloads to production in 2024, which means they’ll likely drive a significant chunk of this growth.

根据我们的计算,我们估计模型 API(包括微调)市场在 2023 年底的运行率收入约为 $1.5–2B,包括通过 Azure 在 OpenAI 模型上的支出。鉴于整体市场的预期增长和企业的具体迹象,到年底,仅这一领域的支出就将增长到至少 50 亿美元的运行率,具有显着的上升潜力。正如我们所讨论的,企业已经优先考虑了genAI的部署,增加了预算并将其重新分配给标准软件产品线,优化了不同模型的用例,并计划在2024年将更多的工作负载推向生产,这意味着他们可能会推动这一增长的很大一部分。
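For a sense of scale, the sketch below computes the growth multiple implied by moving from the estimated 2023 run-rate to the $5B floor. It uses only the figures quoted above; the variable names are illustrative.

```python
# Figures from the text, in US dollars of run-rate revenue.
RUN_RATE_2023_LOW = 1.5e9
RUN_RATE_2023_HIGH = 2.0e9
RUN_RATE_2024_FLOOR = 5.0e9

# A higher starting point implies a smaller multiple, and vice versa.
multiple_low = RUN_RATE_2024_FLOOR / RUN_RATE_2023_HIGH
multiple_high = RUN_RATE_2024_FLOOR / RUN_RATE_2023_LOW

print(f"Implied growth in 2024: {multiple_low:.1f}x to {multiple_high:.1f}x")
```

That works out to roughly 2.5x to 3.3x in a single year, which sits inside the 2x to 5x budget increases that enterprises reported planning.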

Over the past 6 months, enterprises have issued a top-down mandate to find and deploy genAI solutions. Deals that used to take over a year to close are being pushed through in 2 or 3 months, and those deals are much bigger than they’ve been in the past. While this post focuses on the foundation model layer, we also believe this opportunity in the enterprise extends to other parts of the stack—from tooling that helps with fine-tuning, to model serving, to application building, and to purpose-built AI native applications. We’re at an inflection point in genAI in the enterprise, and we’re excited to partner with the next generation of companies serving this dynamic and growing market.

在过去的 6 个月里,企业发布了自上而下的任务,以寻找和部署 genAI 解决方案。过去需要一年多的时间才能完成的交易将在 2 或 3 个月内完成,而且这些交易比过去要大得多。虽然这篇文章的重点是基础模型层,但我们也相信企业中的这种机会会延伸到堆栈的其他部分——从有助于微调的工具到模型服务,到应用程序构建,再到专门构建的 AI 原生应用程序。我们正处于企业中genAI的转折点,我们很高兴能与服务于这个充满活力和不断增长的市场的下一代公司合作。

1 Per SecondMeasure and Yipit estimates.

1 根据 SecondMeasure 和 Yipit 的估算。

2 Industries included: technology, telecom, CPG, banking, payments, healthcare, and energy.

2 行业包括:科技、电信、快消品、银行、支付、医疗保健和能源。