Artificial intelligence

Artificial intelligence (AI), in its broadest sense, is intelligence exhibited by machines, particularly computer systems. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals.[1] Such machines may be called AIs.

Some high-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); interacting via human speech (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., ChatGPT, Apple Intelligence, and AI art); and superhuman play and analysis in strategy games (e.g., chess and Go). However, many AI applications are not perceived as AI: "A lot of cutting edge AI has filtered into general applications, often without being called AI because once something becomes useful enough and common enough it's not labeled AI anymore."[2][3]

The various subfields of AI research are centered around particular goals and the use of particular tools. The traditional goals of AI research include reasoning, knowledge representation, planning, learning, natural language processing, perception, and support for robotics.[a] General intelligence—the ability to complete any task performable by a human on an at least equal level—is among the field's long-term goals.[4] To reach these goals, AI researchers have adapted and integrated a wide range of techniques, including search and mathematical optimization, formal logic, artificial neural networks, and methods based on statistics, operations research, and economics.[b] AI also draws upon psychology, linguistics, philosophy, neuroscience, and other fields.[5]

Artificial intelligence was founded as an academic discipline in 1956,[6] and the field went through multiple cycles of optimism,[7][8] followed by periods of disappointment and loss of funding, known as AI winters.[9][10] Funding and interest vastly increased after 2012 when deep learning outperformed previous AI techniques.[11] This growth accelerated further after 2017 with the transformer architecture,[12] and by the early 2020s hundreds of billions of dollars were being invested in AI (known as the "AI boom"). The widespread use of AI in the 21st century exposed several unintended consequences and harms in the present and raised concerns about its risks and long-term effects in the future, prompting discussions about regulatory policies to ensure the safety and benefits of the technology.

Goals

The general problem of simulating (or creating) intelligence has been broken into subproblems. These consist of particular traits or capabilities that researchers expect an intelligent system to display. The traits described below have received the most attention and cover the scope of AI research.[a]

Reasoning and problem-solving

Early researchers developed algorithms that imitated step-by-step reasoning that humans use when they solve puzzles or make logical deductions.[13] By the late 1980s and 1990s, methods were developed for dealing with uncertain or incomplete information, employing concepts from probability and economics.[14]

Many of these algorithms are insufficient for solving large reasoning problems because they experience a "combinatorial explosion": They become exponentially slower as the problems grow.[15] Even humans rarely use the step-by-step deduction that early AI research could model. They solve most of their problems using fast, intuitive judgments.[16] Accurate and efficient reasoning is an unsolved problem.

Knowledge representation

An ontology represents knowledge as a set of concepts within a domain and the relationships between those concepts.

Knowledge representation and knowledge engineering[17] allow AI programs to answer questions intelligently and make deductions about real-world facts. Formal knowledge representations are used in content-based indexing and retrieval,[18] scene interpretation,[19] clinical decision support,[20] knowledge discovery (mining "interesting" and actionable inferences from large databases),[21] and other areas.[22]

A knowledge base is a body of knowledge represented in a form that can be used by a program. An ontology is the set of objects, relations, concepts, and properties used by a particular domain of knowledge.[23] Knowledge bases need to represent things such as objects, properties, categories, and relations between objects;[24] situations, events, states, and time;[25] causes and effects;[26] knowledge about knowledge (what we know about what other people know);[27] default reasoning (things that humans assume are true until they are told differently and will remain true even when other facts are changing);[28] and many other aspects and domains of knowledge.

Among the most difficult problems in knowledge representation are the breadth of commonsense knowledge (the set of atomic facts that the average person knows is enormous);[29] and the sub-symbolic form of most commonsense knowledge (much of what people know is not represented as "facts" or "statements" that they could express verbally).[16] There is also the difficulty of knowledge acquisition, the problem of obtaining knowledge for AI applications.[c]

Planning and decision-making

An "agent" is anything that perceives and takes actions in the world. A rational agent has goals or preferences and takes actions to make them happen.[d][32] In automated planning, the agent has a specific goal.[33] In automated decision-making, the agent has preferences—there are some situations it would prefer to be in, and some situations it is trying to avoid. The decision-making agent assigns a number to each situation (called the "utility") that measures how much the agent prefers it. For each possible action, it can calculate the "expected utility": the utility of all possible outcomes of the action, weighted by the probability that the outcome will occur. It can then choose the action with the maximum expected utility.[34]
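
The expected-utility rule described above can be sketched in a few lines. This is only an illustration: the action names, probabilities, and utilities are hypothetical values, and `expected_utility` and `best_action` are made-up helper names, not part of any library.

```python
def expected_utility(outcomes):
    """Sum the utility of each outcome weighted by its probability."""
    return sum(probability * utility for probability, utility in outcomes)


def best_action(actions):
    """Return the name of the action with the maximum expected utility."""
    return max(actions, key=lambda name: expected_utility(actions[name]))


# Hypothetical decision problem: each action maps to (probability, utility)
# pairs, one pair per possible outcome (here: rain, no rain).
actions = {
    "take_umbrella": [(0.3, 8.0), (0.7, 6.0)],
    "leave_umbrella": [(0.3, 0.0), (0.7, 10.0)],
}

choice = best_action(actions)
```

With these assumed numbers, leaving the umbrella has an expected utility of about 7.0 against about 6.6 for taking it, so the agent chooses `leave_umbrella`.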

In classical planning, the agent knows exactly what the effect of any action will be.[35] In most real-world problems, however, the agent may not be certain about the situation it is in (the situation is "unknown" or "unobservable") and it may not know for certain what will happen after each possible action (the outcome is not "deterministic"). It must choose an action by making a probabilistic guess and then reassess the situation to see if the action worked.[36]

In some problems, the agent's preferences may be uncertain, especially if there are other agents or humans involved. These can be learned (e.g., with inverse reinforcement learning), or the agent can seek information to improve its preferences.[37] Information value theory can be used to weigh the value of exploratory or experimental actions.[38] The space of possible future actions and situations is typically intractably large, so the agents must take actions and evaluate situations while being uncertain of what the outcome will be.

A Markov decision process has a transition model that describes the probability that a particular action will change the state in a particular way and a reward function that supplies the utility of each state and the cost of each action. A policy associates a decision with each possible state. The policy can be calculated (e.g., by iteration), be heuristic, or be learned.[39]
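
One way to calculate a policy "by iteration" is value iteration, sketched below for a tiny, hypothetical two-state process. The state names, probabilities, rewards, and discount factor `gamma` are assumptions made for the example, and action costs are left out for brevity.

```python
def value_iteration(states, actions, transition, reward, gamma=0.9, tol=1e-6):
    """Return (values, policy) for a small finite Markov decision process.

    transition[s][a] is a list of (probability, next_state) pairs and
    reward[s] is the utility of being in state s.
    """
    values = {s: 0.0 for s in states}
    while True:
        delta = 0.0
        for s in states:
            # Best expected value of the next state over all actions.
            best = max(
                sum(p * values[s2] for p, s2 in transition[s][a])
                for a in actions
            )
            new_value = reward[s] + gamma * best
            delta = max(delta, abs(new_value - values[s]))
            values[s] = new_value
        if delta < tol:
            break
    # The policy associates each state with its best action.
    policy = {
        s: max(actions,
               key=lambda a: sum(p * values[s2] for p, s2 in transition[s][a]))
        for s in states
    }
    return values, policy


# Hypothetical MDP: the agent prefers the "warm" state.
states = ["cold", "warm"]
actions = ["stay", "move"]
transition = {
    "cold": {"stay": [(1.0, "cold")], "move": [(0.8, "warm"), (0.2, "cold")]},
    "warm": {"stay": [(1.0, "warm")], "move": [(1.0, "cold")]},
}
reward = {"cold": 0.0, "warm": 1.0}

values, policy = value_iteration(states, actions, transition, reward)
```

Under these assumptions the resulting policy is to move when cold and stay when warm.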

Game theory describes the rational behavior of multiple interacting agents and is used in AI programs that make decisions that involve other agents.[40]

Learning

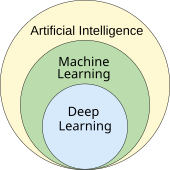

Machine learning is the study of programs that can improve their performance on a given task automatically.[41] It has been a part of AI from the beginning.[e]

There are several kinds of machine learning. Unsupervised learning analyzes a stream of data and finds patterns and makes predictions without any other guidance.[44] Supervised learning requires a human to label the input data first, and comes in two main varieties: classification (where the program must learn to predict what category the input belongs in) and regression (where the program must deduce a numeric function based on numeric input).[45]

In reinforcement learning, the agent is rewarded for good responses and punished for bad ones. The agent learns to choose responses that are classified as "good".[46] Transfer learning is when the knowledge gained from one problem is applied to a new problem.[47] Deep learning is a type of machine learning that runs inputs through biologically inspired artificial neural networks for all of these types of learning.[48]

Computational learning theory can assess learners by computational complexity, by sample complexity (how much data is required), or by other notions of optimization.[49]

Natural language processing

Natural language processing (NLP)[50] allows programs to read, write and communicate in human languages such as English. Specific problems include speech recognition, speech synthesis, machine translation, information extraction, information retrieval and question answering.[51]

Early work, based on Noam Chomsky's generative grammar and semantic networks, had difficulty with word-sense disambiguation[f] unless restricted to small domains called "micro-worlds" (due to the common sense knowledge problem[29]). Margaret Masterman believed that it was meaning and not grammar that was the key to understanding languages, and that thesauri and not dictionaries should be the basis of computational language structure.

Modern deep learning techniques for NLP include word embedding (representing words, typically as vectors encoding their meaning),[52] transformers (a deep learning architecture using an attention mechanism),[53] and others.[54] In 2019, generative pre-trained transformer (or "GPT") language models began to generate coherent text,[55][56] and by 2023, these models were able to achieve human-level scores on the bar exam, the SAT, and the GRE, as well as in many other real-world applications.[57]

Perception

Machine perception is the ability to use input from sensors (such as cameras, microphones, wireless signals, active lidar, sonar, radar, and tactile sensors) to deduce aspects of the world. Computer vision is the ability to analyze visual input.[58]

The field includes speech recognition,[59] image classification,[60] facial recognition, object recognition,[61] object tracking,[62] and robotic perception.[63]

Social intelligence

Kismet, a robot head made in the 1990s; a machine that can recognize and simulate emotions.[64]

Affective computing is an interdisciplinary umbrella that comprises systems that recognize, interpret, process, or simulate human feeling, emotion, and mood.[65] For example, some virtual assistants are programmed to speak conversationally or even to banter humorously; this makes them appear more sensitive to the emotional dynamics of human interaction, or otherwise facilitates human–computer interaction.

However, this tends to give naïve users an unrealistic conception of the intelligence of existing computer agents.[66] Moderate successes related to affective computing include textual sentiment analysis and, more recently, multimodal sentiment analysis, wherein AI classifies the affects displayed by a videotaped subject.[67]

General intelligence

A machine with artificial general intelligence should be able to solve a wide variety of problems with breadth and versatility similar to human intelligence.[4]

Techniques

AI research uses a wide variety of techniques to accomplish the goals above.[b]

Search and optimization

AI can solve many problems by intelligently searching through many possible solutions.[68] There are two very different kinds of search used in AI: state space search and local search.

State space search

State space search searches through a tree of possible states to try to find a goal state.[69] For example, planning algorithms search through trees of goals and subgoals, attempting to find a path to a target goal, a process called means-ends analysis.[70]

Simple exhaustive searches[71] are rarely sufficient for most real-world problems: the search space (the number of places to search) quickly grows to astronomical numbers. The result is a search that is too slow or never completes.[15] "Heuristics" or "rules of thumb" can help prioritize choices that are more likely to reach a goal.[72]
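
To illustrate how a heuristic can prioritize promising choices, the sketch below uses A* search, one well-known heuristic search algorithm, which the text above does not name. The grid, the wall positions, and the helper names are hypothetical, and the Manhattan-distance heuristic is assumed to never overestimate the remaining cost.

```python
import heapq


def a_star(start, goal, neighbors, heuristic):
    """Return a lowest-cost path from start to goal, or None if unreachable.

    Nodes with the smallest (cost so far + heuristic estimate) are expanded first.
    """
    frontier = [(heuristic(start), 0, start, [start])]
    best_cost = {start: 0}
    while frontier:
        _, cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return path
        for nxt, step_cost in neighbors(node):
            new_cost = cost + step_cost
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                heapq.heappush(
                    frontier,
                    (new_cost + heuristic(nxt), new_cost, nxt, path + [nxt]),
                )
    return None


# Hypothetical 4x4 grid with a wall that forces a detour.
WALLS = {(1, 0), (1, 1), (1, 2)}
GOAL = (3, 0)


def grid_neighbors(cell):
    x, y = cell
    for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
        if 0 <= nx < 4 and 0 <= ny < 4 and (nx, ny) not in WALLS:
            yield (nx, ny), 1  # every move costs 1


def manhattan(cell):
    return abs(cell[0] - GOAL[0]) + abs(cell[1] - GOAL[1])


path = a_star((0, 0), GOAL, grid_neighbors, manhattan)
```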

Adversarial search is used for game-playing programs, such as chess or Go. It searches through a tree of possible moves and counter-moves, looking for a winning position.[73]
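
A minimal sketch of adversarial search is the minimax procedure, shown here on a deliberately tiny stand-in game rather than chess or Go: players alternately remove one to three sticks, and whoever takes the last stick wins. The game and the function names are assumptions chosen to keep the tree small.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def minimax(sticks, maximizing):
    """Value of the position for the maximizing player: +1 win, -1 loss."""
    if sticks == 0:
        # The player who just moved took the last stick and won.
        return -1 if maximizing else 1
    results = [
        minimax(sticks - take, not maximizing)
        for take in (1, 2, 3)
        if take <= sticks
    ]
    # The maximizer picks its best move; the opponent picks the worst for it.
    return max(results) if maximizing else min(results)


def best_move(sticks):
    """Number of sticks the maximizing player should take."""
    legal = [take for take in (1, 2, 3) if take <= sticks]
    return max(legal, key=lambda take: minimax(sticks - take, False))
```

In this toy game a player facing four sticks loses against perfect play, so from seven sticks the search recommends taking three.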

Local search

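Illustration of gradient descent for three different starting points; two parameters (represented by the plane coordinates) are adjusted in order to minimize the loss function (the height).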

Local search uses mathematical optimization to find a solution to a problem. It begins with some form of guess and refines it incrementally.[74]

Gradient descent is a type of local search that optimizes a set of numerical parameters by incrementally adjusting them to minimize a loss function. Variants of gradient descent are commonly used to train neural networks.[75]
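
As a minimal sketch of the incremental adjustment described above, the code below minimizes a hypothetical two-parameter loss function, L(x, y) = (x - 3)^2 + (y + 1)^2. The loss, learning rate, and step count are assumptions chosen for illustration.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=200):
    """Repeatedly move the parameters a small step against the gradient."""
    params = list(start)
    for _ in range(steps):
        gradient = grad(params)
        params = [p - learning_rate * g for p, g in zip(params, gradient)]
    return params


def loss_gradient(params):
    """Gradient of the hypothetical loss (x - 3)^2 + (y + 1)^2."""
    x, y = params
    return [2 * (x - 3), 2 * (y + 1)]


minimum = gradient_descent(loss_gradient, start=[0.0, 0.0])
```

Starting from (0, 0), the parameters approach the minimum at (3, -1).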

Another type of local search is evolutionary computation, which aims to iteratively improve a set of candidate solutions by "mutating" and "recombining" them, selecting only the fittest to survive each generation.[76]
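
The mutate, recombine, and select loop can be sketched as a small genetic algorithm. The task (maximizing the number of 1 bits in a bit string), the population size, the mutation rate, and the function name `evolve` are all assumptions made for this example.

```python
import random


def evolve(fitness, length=20, pop_size=30, generations=60,
           mutation_rate=0.05, seed=0):
    """Return (best individual, best fitness seen at each generation)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        # Selection: only the fittest half survives unchanged.
        survivors = population[: pop_size // 2]
        children = []
        while len(survivors) + len(children) < pop_size:
            mother, father = rng.sample(survivors, 2)
            cut = rng.randrange(1, length)
            child = mother[:cut] + father[cut:]  # recombination
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]           # mutation
            children.append(child)
        population = survivors + children
    return max(population, key=fitness), history


best, history = evolve(fitness=sum)
```

Because the survivors are carried over unchanged, the best fitness in `history` never decreases from one generation to the next.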

Distributed search processes can coordinate via swarm intelligence algorithms. Two popular swarm algorithms used in search are particle swarm optimization (inspired by bird flocking) and ant colony optimization (inspired by ant trails).[77]

Logic

Formal logic is used for reasoning and knowledge representation.[78]

Formal logic comes in two main forms: propositional logic (which operates on statements that are true or false and uses logical connectives such as "and", "or", "not" and "implies")[79] and predicate logic (which also operates on objects, predicates and relations and uses quantifiers such as "Every X is a Y" and "There are some Xs that are Ys").[80]

Deductive reasoning in logic is the process of proving a new statement (conclusion) from other statements that are given and assumed to be true (the premises).[81] Proofs can be structured as proof trees, in which nodes are labelled by sentences, and child nodes are connected to parent nodes by inference rules.

Given a problem and a set of premises, problem-solving reduces to searching for a proof tree whose root node is labelled by a solution of the problem and whose leaf nodes are labelled by premises or axioms. In the case of Horn clauses, problem-solving search can be performed by reasoning forwards from the premises or backwards from the problem.[82] In the more general case of the clausal form of first-order logic, resolution is a single, axiom-free rule of inference, in which a problem is solved by proving a contradiction from premises that include the negation of the problem to be solved.[83]
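
Reasoning forwards from the premises can be sketched for the simplest case, propositional Horn clauses with no variables. The rule format and the example facts are hypothetical; a first-order version would also need unification, which is omitted here.

```python
def forward_chain(facts, rules):
    """Derive every atom that follows from the facts.

    Each rule is a (premises, conclusion) pair, where premises is a set of
    atoms that must all be known before the conclusion can be added.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base.
rules = [
    ({"rain"}, "wet_ground"),
    ({"wet_ground", "cold"}, "ice"),
    ({"ice"}, "slippery"),
]

derived = forward_chain({"rain", "cold"}, rules)
```

A query such as "slippery" is then answered by checking whether it appears in the derived set.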

Inference in both Horn clause logic and first-order logic is undecidable, and therefore intractable. However, backward reasoning with Horn clauses, which underpins computation in the logic programming language Prolog, is Turing complete. Moreover, its efficiency is competitive with computation in other symbolic programming languages.[84]

Fuzzy logic assigns a "degree of truth" between 0 and 1. It can therefore handle propositions that are vague and partially true.[85]
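
A small sketch of degrees of truth follows, assuming the common minimum, maximum, and complement operators for "and", "or", and "not" (the text above does not specify which operators are used). The membership function `warm` and its temperature thresholds are hypothetical.

```python
def fuzzy_and(a, b):
    return min(a, b)


def fuzzy_or(a, b):
    return max(a, b)


def fuzzy_not(a):
    return 1.0 - a


def warm(temp_c):
    """Hypothetical membership function for "warm".

    0 at or below 10 degrees C, 1 at or above 25 degrees C, linear in between.
    """
    return min(1.0, max(0.0, (temp_c - 10.0) / 15.0))


# "It is warm AND sunny", with an assumed degree of 0.8 for "sunny".
pleasant = fuzzy_and(warm(17.5), 0.8)
```

Here 17.5 degrees C is "warm" to degree 0.5, so the combined proposition is also true to degree 0.5.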

Non-monotonic logics, including logic programming with negation as failure, are designed to handle default reasoning.[28] Other specialized versions of logic have been developed to describe many complex domains.

Probabilistic methods for uncertain reasoning

A simple Bayesian network, with the associated conditional probability tables.

Many problems in AI (including in reasoning, planning, learning, perception, and robotics) require the agent to operate with incomplete or uncertain information. AI researchers have devised a number of tools to solve these problems using methods from probability theory and economics.[86] Precise mathematical tools have been developed that analyze how an agent can make choices and plan, using decision theory, decision analysis,[87] and information value theory.[88] These tools include models such as Markov decision processes,[89] dynamic decision networks,[90] game theory and mechanism design.[91]

Bayesian networks[92] are a tool that can be used for reasoning (using the Bayesian inference algorithm),[g][94] learning (using the expectation–maximization algorithm),[h][96] planning (using decision networks)[97] and perception (using dynamic Bayesian networks).[90]
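
Reasoning with a Bayesian network can be sketched by exact inference through enumeration on a three-variable network (rain, sprinkler, wet grass). The network structure and every probability below are illustrative assumptions, not values taken from the text.

```python
from itertools import product

# Assumed conditional probability tables.
P_RAIN = 0.2
P_SPRINKLER = {True: 0.01, False: 0.4}  # P(sprinkler on | rain)
P_WET = {                               # P(grass wet | sprinkler, rain)
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}


def joint(rain, sprinkler, wet):
    """Joint probability of one full assignment, via the chain rule."""
    p = P_RAIN if rain else 1 - P_RAIN
    p *= P_SPRINKLER[rain] if sprinkler else 1 - P_SPRINKLER[rain]
    p_wet = P_WET[(sprinkler, rain)]
    return p * (p_wet if wet else 1 - p_wet)


def posterior_rain_given_wet():
    """P(rain | grass is wet), summing out the unobserved sprinkler."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(joint(r, s, True)
                   for r, s in product((True, False), repeat=2))
    return numerator / evidence


p_rain = posterior_rain_given_wet()
```

With these assumed tables, observing wet grass raises the probability of rain from 0.2 to roughly 0.36. Enumeration scales poorly, which is why larger networks need more efficient inference algorithms.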

Probabilistic algorithms can also be used for filtering, prediction, smoothing, and finding explanations for streams of data, thus helping perception systems analyze processes that occur over time (e.g., hidden Markov models or Kalman filters).[90]
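
Filtering with a hidden Markov model can be sketched as a repeated predict-and-update loop (the forward algorithm). The two hidden states, the observations, and all probabilities are hypothetical numbers chosen for the example.

```python
def forward_filter(observations, prior, transition, sensor):
    """Return the belief over hidden states after each observation."""
    belief = dict(prior)
    history = []
    for obs in observations:
        # Predict: push the current belief through the transition model.
        predicted = {
            s2: sum(belief[s1] * transition[s1][s2] for s1 in belief)
            for s2 in belief
        }
        # Update: weight by the likelihood of the observation, then normalize.
        unnormalized = {s: sensor[s][obs] * predicted[s] for s in predicted}
        total = sum(unnormalized.values())
        belief = {s: v / total for s, v in unnormalized.items()}
        history.append(belief)
    return history


# Hypothetical model: is it raining, given whether an umbrella is seen?
prior = {"rain": 0.5, "dry": 0.5}
transition = {
    "rain": {"rain": 0.7, "dry": 0.3},
    "dry": {"rain": 0.3, "dry": 0.7},
}
sensor = {
    "rain": {"umbrella": 0.9, "no_umbrella": 0.1},
    "dry": {"umbrella": 0.2, "no_umbrella": 0.8},
}

beliefs = forward_filter(["umbrella", "umbrella"], prior, transition, sensor)
```

Under these assumptions the belief in rain rises to about 0.82 after the first umbrella sighting and about 0.88 after the second.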

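Expectation–maximization clustering of Old Faithful eruption data starts from a random guess but then successfully converges on an accurate clustering of the two physically distinct modes of eruption.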

Classifiers and statistical learning methods

The simplest AI applications can be divided into two types: classifiers (e.g., "if shiny then diamond"), on one hand, and controllers (e.g., "if diamond then pick up"), on the other hand. Classifiers[98] are functions that use pattern matching to determine the closest match. They can be fine-tuned based on chosen examples using supervised learning. Each pattern (also called an "observation") is labeled with a certain predefined class. All the observations combined with their class labels are known as a data set. When a new observation is received, that observation is classified based on previous experience.[45]

There are many kinds of classifiers in use.[99] The decision tree is the simplest and most widely used symbolic machine learning algorithm.[100] The k-nearest neighbor algorithm was the most widely used analogical AI until the mid-1990s, and kernel methods such as the support vector machine (SVM) displaced k-nearest neighbor in the 1990s.[101]
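
As a minimal sketch of one of these classifiers, the k-nearest neighbor rule labels a new observation by majority vote among the closest labeled observations. The data set, the labels, and the choice of k = 3 are hypothetical.

```python
import math
from collections import Counter


def knn_classify(query, examples, k=3):
    """Label a query point by majority vote among its k nearest examples.

    examples is a list of (point, label) pairs; distance is Euclidean.
    """
    nearest = sorted(examples, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical labeled data set with two well-separated classes.
examples = [
    ((1.0, 1.0), "a"), ((1.2, 0.8), "a"), ((0.9, 1.1), "a"),
    ((5.0, 5.0), "b"), ((5.2, 4.9), "b"), ((4.8, 5.1), "b"),
]

label = knn_classify((1.1, 1.0), examples)
```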

The naive Bayes classifier is reportedly the "most widely used learner"[102] at Google, due in part to its scalability.[103]

Neural networks are also used as classifiers.[104]

Artificial neural networks

A neural network is an interconnected group of nodes, akin to the vast network of neurons in the human brain.

An artificial neural network is based on a collection of nodes also known as artificial neurons, which loosely model the neurons in a biological brain. It is trained to recognise patterns; once trained, it can recognise those patterns in fresh data. There is an input, at least one hidden layer of nodes and an output. Each node applies a function and once the weight crosses its specified threshold, the data is transmitted to the next layer. A network is typically called a deep neural network if it has at least 2 hidden layers.[104]
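
The input, hidden layer, and output structure can be sketched with a tiny network that computes exclusive-or. The weights and biases are hand-chosen assumptions rather than learned values, and a simple step function stands in for the threshold behavior described above.

```python
def step(x):
    """Fire (1) only when the weighted input exceeds the threshold of 0."""
    return 1 if x > 0 else 0


def layer(inputs, weights, biases):
    """Each node sums its weighted inputs, adds a bias, and applies step()."""
    return [
        step(sum(w * i for w, i in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def xor_network(a, b):
    # Hidden layer: the first node acts like OR, the second like AND.
    hidden = layer([a, b], weights=[[1, 1], [1, 1]], biases=[-0.5, -1.5])
    # Output node: fires when OR is on but AND is off.
    return layer(hidden, weights=[[1, -1]], biases=[-0.5])[0]
```

A single layer of such nodes cannot represent exclusive-or, which is why the sketch needs the hidden layer.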

Learning algorithms for neural networks use local search to choose the weights that will get the right output for each input during training. The most common training technique is the backpropagation algorithm.[105] Neural networks learn to model complex relationships between inputs and outputs and find patterns in data. In theory, a neural network can learn any function.[106]

In feedforward neural networks the signal passes in only one direction.[107] Recurrent neural networks feed the output signal back into the input, which allows short-term memories of previous input events. Long short-term memory is the most successful network architecture for recurrent networks.[108] Perceptrons[109] use only a single layer of neurons; deep learning[110] uses multiple layers. Convolutional neural networks strengthen the connection between neurons that are "close" to each other—this is especially important in image processing, where a local set of neurons must identify an "edge" before the network can identify an object.[111]

Deep learning

Deep learning[110] uses several layers of neurons between the network's inputs and outputs. The multiple layers can progressively extract higher-level features from the raw input. For example, in image processing, lower layers may identify edges, while higher layers may identify the concepts relevant to a human such as digits, letters, or faces.[112]

Deep learning has profoundly improved the performance of programs in many important subfields of artificial intelligence, including computer vision, speech recognition, natural language processing, image classification,[113] and others. The reason that deep learning performs so well in so many applications is not known as of 2023.[114] The sudden success of deep learning in 2012–2015 did not occur because of some new discovery or theoretical breakthrough (deep neural networks and backpropagation had been described by many people, as far back as the 1950s)[i] but because of two factors: the incredible increase in computer power (including the hundred-fold increase in speed by switching to GPUs) and the availability of vast amounts of training data, especially the giant curated datasets used for benchmark testing, such as ImageNet.[j]

GPT

Generative pre-trained transformers (GPT) are large language models (LLMs) that generate text based on the semantic relationships between words in sentences. Text-based GPT models are pretrained on a large corpus of text that can be from the Internet. The pretraining consists of predicting the next token (a token being usually a word, subword, or punctuation). Throughout this pretraining, GPT models accumulate knowledge about the world and can then generate human-like text by repeatedly predicting the next token. Typically, a subsequent training phase makes the model more truthful, useful, and harmless, usually with a technique called reinforcement learning from human feedback (RLHF). Current GPT models are prone to generating falsehoods called "hallucinations", although this can be reduced with RLHF and quality data. They are used in chatbots, which allow people to ask a question or request a task in simple text.[122][123]
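
The idea of generating text by repeatedly predicting the next token can be sketched with a deliberately simple stand-in: a model that counts which token follows which and always picks the most frequent follower. This is not a transformer and involves no neural network; the corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count, for each token, how often each other token follows it."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, max_tokens=5):
    """Extend the text one token at a time with the most frequent follower."""
    output = [start]
    for _ in range(max_tokens):
        followers = counts.get(output[-1])
        if not followers:
            break  # no known continuation
        output.append(followers.most_common(1)[0][0])
    return output


# Hypothetical toy corpus, split into word-level tokens.
corpus = "the cat sat on the mat . the cat sat down .".split()
model = train_bigram(corpus)
text = generate(model, "the", max_tokens=2)
```

A GPT model replaces the count table with a neural network conditioned on a long context and usually samples from the predicted distribution instead of always taking the top choice, but the generation loop has the same shape.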

Current models and services include Gemini (formerly Bard), ChatGPT, Grok, Claude, Copilot, and LLaMA.[124] Multimodal GPT models can process different types of data (modalities) such as images, videos, sound, and text.[125]

Specialized hardware and software

By the late 2010s, graphics processing units (GPUs), increasingly designed with AI-specific enhancements and used with specialized software such as TensorFlow, had replaced central processing units (CPUs) as the dominant means of training large-scale (commercial and academic) machine learning models.[126] Specialized programming languages such as Prolog were used in early AI research,[127] but general-purpose programming languages like Python have become predominant.[128]

Applications

AI and machine learning technology is used in most of the essential applications of the 2020s, including: search engines (such as Google Search), targeted online advertising (AdSense, Facebook), recommendation systems (offered by Netflix, YouTube or Amazon), driving internet traffic, virtual assistants (such as Siri or Alexa), autonomous vehicles (including drones, ADAS and self-driving cars), automatic language translation (Microsoft Translator, Google Translate), facial recognition (Apple's Face ID or Microsoft's DeepFace and Google's FaceNet) and image labeling (used by Facebook, Apple's iPhoto and TikTok). The deployment of AI may be overseen by a chief automation officer (CAO).

Health and medicine

The application of AI in medicine and medical research has the potential to improve patient care and quality of life.[129] Through the lens of the Hippocratic Oath, medical professionals are ethically compelled to use AI if applications can more accurately diagnose and treat patients.

For medical research, AI is an important tool for processing and integrating big data. This is particularly important for organoid and tissue engineering development, which uses microscopy imaging as a key technique in fabrication.[130] It has been suggested that AI can overcome discrepancies in funding allocated to different fields of research.[130] New AI tools can deepen the understanding of biomedically relevant pathways. For example, AlphaFold 2 (2021) demonstrated the ability to approximate, in hours rather than months, the 3D structure of a protein.[131] In 2023, it was reported that AI-guided drug discovery helped find a class of antibiotics capable of killing two different types of drug-resistant bacteria.[132] In 2024, researchers used machine learning to accelerate the search for Parkinson's disease drug treatments. Their aim was to identify compounds that block the clumping, or aggregation, of alpha-synuclein (the protein that characterises Parkinson's disease). They were able to speed up the initial screening process ten-fold and reduce the cost by a thousand-fold.[133][134]

在医学研究中,人工智能是处理和整合大数据的重要工具。这对于使用显微镜成像作为制造关键技术的类器官和组织工程的发展尤为重要。[130] 有人建议,人工智能可以克服不同研究领域之间资金分配的差异。[130] 新的人工智能工具可以加深对生物医学相关通路的理解。例如,AlphaFold 2(2021 年)展示了在数小时内而非数月内近似预测蛋白质的 3D 结构的能力。[131] 2023 年,有报道称,人工智能引导的药物发现帮助找到了一类能够杀死两种不同类型耐药细菌的抗生素。[132] 2024 年,研究人员使用机器学习加速寻找帕金森病药物治疗。 他们的目标是识别能够阻止α-突触核蛋白(帕金森病特征蛋白)聚集的化合物。他们成功地将初步筛选过程的速度提高了十倍,并将成本降低了千倍。[133][134]

Games 游戏

Game playing programs have been used since the 1950s to demonstrate and test AI's most advanced techniques.[135] Deep Blue became the first computer chess-playing system to beat a reigning world chess champion, Garry Kasparov, on 11 May 1997.[136] In 2011, in a Jeopardy! quiz show exhibition match, IBM's question answering system, Watson, defeated the two greatest Jeopardy! champions, Brad Rutter and Ken Jennings, by a significant margin.[137] In March 2016, AlphaGo won 4 out of 5 games of Go in a match with Go champion Lee Sedol, becoming the first computer Go-playing system to beat a professional Go player without handicaps. Then, in 2017, it defeated Ke Jie, who was the best Go player in the world.[138] Other programs handle imperfect-information games, such as the poker-playing program Pluribus.[139] DeepMind developed increasingly generalistic reinforcement learning models, such as with MuZero, which could be trained to play chess, Go, or Atari games.[140] In 2019, DeepMind's AlphaStar achieved grandmaster level in StarCraft II, a particularly challenging real-time strategy game that involves incomplete knowledge of what happens on the map.[141] In 2021, an AI agent competed in a PlayStation Gran Turismo competition, winning against four of the world's best Gran Turismo drivers using deep reinforcement learning.[142] In 2024, Google DeepMind introduced SIMA, a type of AI capable of autonomously playing nine previously unseen open-world video games by observing screen output, as well as executing short, specific tasks in response to natural language instructions.[143]

游戏程序自 1950 年代以来一直被用来展示和测试人工智能最先进的技术。[135]深蓝成为第一个击败在位世界国际象棋冠军加里·卡斯帕罗夫的计算机国际象棋系统,时间是 1997 年 5 月 11 日。[136]2011 年,在一场危险边缘!问答节目的展览赛中,IBM的问答系统沃森以显著的优势击败了两位最伟大的危险边缘!冠军布拉德·鲁特和肯·詹宁斯。[137]2016 年 3 月,AlphaGo在与围棋冠军李世石的比赛中赢得了 5 局中的 4 局,成为第一个在没有让子的情况下击败职业围棋选手的计算机围棋系统。然后,在 2017 年,它击败了柯洁,他是世界上最好的围棋选手。[138] 其他程序处理 不完全信息 游戏,例如 扑克 玩家的程序 Pluribus。[139]DeepMind 开发了越来越通用的 强化学习 模型,例如 MuZero,可以训练来玩国际象棋、围棋或 Atari 游戏。[140] 在 2019 年,DeepMind 的 AlphaStar 在 星际争霸 II 中达到了大师级水平,这是一款特别具有挑战性的实时战略游戏,涉及对地图上发生的事情的不完全知识。[141] 在 2021 年,一个 AI 代理参加了 PlayStation Gran Turismo 比赛,使用深度强化学习战胜了四位世界顶级 Gran Turismo 车手。[142] 在 2024 年,Google DeepMind 推出了 SIMA,这是一种能够通过观察屏幕输出自主玩九款之前未见过的开放世界视频游戏的 AI,并能够根据自然语言指令执行简短、特定的任务。[143]

Mathematics 数学

In mathematics, special forms of formal step-by-step reasoning are used. In contrast, LLMs such as GPT-4 Turbo, Gemini Ultra, Claude Opus, LLaMa-2 or Mistral Large work with probabilistic models, which can produce wrong answers in the form of hallucinations. Therefore, they need not only a large database of mathematical problems to learn from but also methods such as supervised fine-tuning or trained classifiers with human-annotated data to improve answers for new problems and learn from corrections.[144] A 2024 study showed that some language models reasoned poorly when solving math problems not included in their training data, even when the problems deviated only slightly from the trained data.[145]

在数学中,使用了特殊形式的逐步 推理。相比之下,LLMs(如 GPT-4 Turbo、Gemini Ultra、Claude Opus、LLaMa-2 或 Mistral Large)则使用概率模型,这可能会以 幻觉 的形式产生错误答案。因此,它们不仅需要一个大型的数学问题数据库来学习,还需要如 监督微调 或使用人类标注数据训练的 分类器 等方法,以改善新问题的答案并从修正中学习。[144] 一项 2024 年的研究表明,对于解决未包含在训练数据中的数学问题,一些语言模型的推理能力表现较低,即使是与训练数据仅有轻微偏差的问题。[145]

Alternatively, dedicated models for mathematical problem solving with higher precision for the outcome, including proof of theorems, have been developed, such as AlphaTensor, AlphaGeometry and AlphaProof, all from Google DeepMind,[146] Llemma from EleutherAI[147] or Julius.[148]

另外,已经开发出专门用于数学问题解决的模型,具有更高的结果精度,包括定理证明,如 Alpha Tensor、Alpha Geometry 和 Alpha Proof,均来自 Google DeepMind,[146] 以及来自 eleuther 的 Llemma [147] 或 Julius。[148]

When natural language is used to describe mathematical problems, converters transform such prompts into a formal language such as Lean to define mathematical tasks.

当自然语言用于描述数学问题时,转换器将这些提示转换为正式语言,例如 Lean,以定义数学任务。
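
As a rough illustration of what such a formalization can look like, the sketch below states the natural-language prompt "the sum of two even natural numbers is even" in Lean 4. The definition `IsEven` and the theorem name are hypothetical and chosen for this example only; they are not taken from any of the systems named above, and a converter's actual output may look quite different.

```lean
-- Natural-language prompt: "The sum of two even natural numbers is even."
-- `IsEven` is a hypothetical definition introduced only for this sketch.
def IsEven (n : Nat) : Prop := ∃ k, n = 2 * k

-- The formal task: the statement a prover must establish.
theorem even_add_even (a b : Nat) (ha : IsEven a) (hb : IsEven b) :
    IsEven (a + b) :=
  match ha, hb with
  -- From a = 2 * k and b = 2 * m, the witness for a + b is k + m.
  | ⟨k, hk⟩, ⟨m, hm⟩ => ⟨k + m, by rw [hk, hm, Nat.mul_add]⟩
```

Once the statement is in this form, a proof is checked mechanically instead of being judged by how plausible it reads.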

Some models have been developed to solve challenging problems and reach good results in benchmark tests; others serve as educational tools in mathematics.[149]

一些模型已经被开发出来,以解决具有挑战性的问题并在基准测试中取得良好结果,其他模型则作为数学教育工具。[149]

Finance 金融

Finance is one of the fastest growing sectors where applied AI tools are being deployed: from retail online banking to investment advice and insurance, where automated "robot advisers" have been in use for some years.[150]

金融是应用人工智能工具部署最快的行业之一:从零售在线银行到投资建议和保险,自动化的“机器人顾问”已经使用了几年。[150]

World Pensions experts like Nicolas Firzli insist it may be too early to see the emergence of highly innovative AI-informed financial products and services: "the deployment of AI tools will simply further automatise things: destroying tens of thousands of jobs in banking, financial planning, and pension advice in the process, but I’m not sure it will unleash a new wave of [e.g., sophisticated] pension innovation."[151]

全球养老金专家如尼古拉斯·菲尔兹利坚持认为,看到高度创新的人工智能驱动的金融产品和服务的出现可能为时尚早:“人工智能工具的部署将进一步自动化事务:在此过程中摧毁数万个银行、财务规划和养老金咨询的工作,但我不确定这会释放出一波新的[例如,复杂的]养老金创新。”[151]

Military 军事

Various countries are deploying AI military applications.[152] The main applications enhance command and control, communications, sensors, integration and interoperability.[153] Research is targeting intelligence collection and analysis, logistics, cyber operations, information operations, and semiautonomous and autonomous vehicles.[152] AI technologies enable coordination of sensors and effectors, threat detection and identification, marking of enemy positions, target acquisition, coordination and deconfliction of distributed Joint Fires between networked combat vehicles involving manned and unmanned teams.[153] AI was incorporated into military operations in Iraq and Syria.[152]

各国正在部署人工智能军事应用。[152] 主要应用增强了指挥与控制、通信、传感器、集成和互操作性。[153] 研究的目标是情报收集与分析、后勤、网络行动、信息行动,以及半自主和自主车辆。[152] 人工智能技术使传感器和效应器的协调、威胁检测与识别、敌方位置标记、目标获取、分布式联合火力的协调与冲突解决成为可能,这些都涉及到联网的有人和无人作战团队。[153] 人工智能已被纳入伊拉克和叙利亚的军事行动中。[152]

In November 2023, US Vice President Kamala Harris disclosed a declaration signed by 31 nations to set guardrails for the military use of AI. The commitments include using legal reviews to ensure the compliance of military AI with international laws, and being cautious and transparent in the development of this technology.[154]

在 2023 年 11 月,美国副总统卡马拉·哈里斯披露了一份由 31 个国家签署的声明,以设定军事使用人工智能的保护措施。承诺包括进行法律审查,以确保军事人工智能符合国际法,并在该技术的发展中保持谨慎和透明。[154]

Generative AI 生成性人工智能

Vincent van Gogh in watercolour, created by generative AI software

In the early 2020s, generative AI gained widespread prominence. GenAI is AI capable of generating text, images, videos, or other data using generative models,[155][156] often in response to prompts.[157][158]

在 2020 年代初期,生成性人工智能获得了广泛的关注。生成性人工智能是能够使用生成模型生成文本、图像、视频或其他数据的人工智能,[155][156]通常是响应提示而生成的。[157][158]

In March 2023, 58% of U.S. adults had heard about ChatGPT and 14% had tried it.[159] The increasing realism and ease-of-use of AI-based text-to-image generators such as Midjourney, DALL-E, and Stable Diffusion sparked a trend of viral AI-generated photos. A fake photo of Pope Francis wearing a white puffer coat, a fictional arrest of Donald Trump, and a hoax of an attack on the Pentagon drew widespread attention, as did the use of these tools in the professional creative arts.[160][161]

在 2023 年 3 月,58%的美国成年人听说过ChatGPT,14%的人尝试过它。[159] 基于 AI 的文本到图像生成器,如Midjourney、DALL-E和Stable Diffusion,其日益逼真的效果和易用性引发了病毒式的 AI 生成照片趋势。一个假照片引起了广泛关注,照片中教皇弗朗西斯穿着白色羽绒服,关于唐纳德·特朗普的虚构逮捕,以及对五角大楼的攻击骗局,以及在专业创意艺术中的使用。[160][161]

Agents 代理人

Artificial intelligence (AI) agents are software entities designed to perceive their environment, make decisions, and take actions autonomously to achieve specific goals. These agents can interact with users, their environment, or other agents. AI agents are used in various applications, including virtual assistants, chatbots, autonomous vehicles, game-playing systems, and industrial robotics. AI agents operate within the constraints of their programming, available computational resources, and hardware limitations. This means they are restricted to performing tasks within their defined scope and have finite memory and processing capabilities. In real-world applications, AI agents often face time constraints for decision-making and action execution. Many AI agents incorporate learning algorithms, enabling them to improve their performance over time through experience or training. Using machine learning, AI agents can adapt to new situations and optimise their behaviour for their designated tasks.[162][163][164]

人工智能(AI)代理是旨在感知其环境、做出决策并自主采取行动以实现特定目标的软件实体。这些代理可以与用户、环境或其他代理进行互动。AI 代理被广泛应用于各种场景,包括 虚拟助手、聊天机器人、自动驾驶车辆、游戏系统 和 工业机器人。AI 代理在其编程、可用计算资源和硬件限制的约束下运行。这意味着它们只能在定义的范围内执行任务,并且具有有限的内存和处理能力。在实际应用中,AI 代理通常面临决策和执行行动的时间限制。许多 AI 代理结合了学习算法,使它们能够通过经验或训练随着时间的推移提高性能。通过使用机器学习,AI 代理可以适应新情况并优化其为指定任务的行为。[162][163][164]
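
The perceive, decide, act cycle described above can be sketched with a toy example. The thermostat agent below is purely illustrative: the class name, the fixed one-degree effect of each action, and the simulated room are assumptions made for this sketch, not a description of any real agent framework.

```python
from dataclasses import dataclass


@dataclass
class ThermostatAgent:
    """Toy agent whose goal is to keep the temperature near `target`."""
    target: float
    tolerance: float = 0.5

    def decide(self, temperature: float) -> str:
        # Decision rule: pick the action that moves the reading toward the goal.
        if temperature < self.target - self.tolerance:
            return "heat"
        if temperature > self.target + self.tolerance:
            return "cool"
        return "idle"


def run(agent: ThermostatAgent, temperature: float, steps: int):
    """Perceive -> decide -> act loop over a simulated room."""
    effect = {"heat": 1.0, "cool": -1.0, "idle": 0.0}  # assumed effect per step
    history = []
    for _ in range(steps):
        action = agent.decide(temperature)  # perceive the reading and decide
        temperature += effect[action]       # act on the (simulated) environment
        history.append((action, temperature))
    return history


history = run(ThermostatAgent(target=21.0), temperature=18.0, steps=5)
print(history)
```

The agent here has a fixed rule and no learning; a learning agent would additionally adjust its decision rule from experience.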

Other industry-specific tasks

其他行业特定任务

There are also thousands of successful AI applications used to solve specific problems for specific industries or institutions. In a 2017 survey, one in five companies reported having incorporated "AI" in some offerings or processes.[165] A few examples are energy storage, medical diagnosis, military logistics, applications that predict the result of judicial decisions, foreign policy, or supply chain management.

还有成千上万的成功人工智能应用被用于解决特定行业或机构的具体问题。在 2017 年的一项调查中,五分之一的公司报告称在某些产品或流程中融入了“人工智能”[165]。一些例子包括能源存储、医疗诊断、军事后勤、预测司法裁决结果的应用、外交政策或供应链管理。

AI applications for evacuation and disaster management are growing. AI has been used to investigate if and how people evacuated in large-scale and small-scale evacuations using historical data from GPS, videos or social media. Further, AI can provide real-time information on evacuation conditions.[166][167][168]

AI 在疏散和灾难管理中的应用正在增长。AI 已被用于调查人们在大规模和小规模疏散中是否以及如何撤离,使用来自 GPS、视频或社交媒体的历史数据。此外,AI 可以提供实时的疏散条件信息。[166][167][168]

In agriculture, AI has helped farmers identify areas that need irrigation, fertilization or pesticide treatments, or where yield could be increased. Agronomists use AI to conduct research and development. AI has been used to predict the ripening time for crops such as tomatoes, monitor soil moisture, operate agricultural robots, conduct predictive analytics, classify the emotions in livestock pig calls, automate greenhouses, detect diseases and pests, and save water.

在农业中,人工智能帮助农民识别需要灌溉、施肥、农药处理或提高产量的区域。农学家使用人工智能进行研究和开发。人工智能被用于预测作物如番茄的成熟时间,监测土壤湿度,操作农业机器人,进行预测分析,分类牲畜的情绪,自动化温室,检测疾病和害虫,以及节约用水。

Artificial intelligence is used in astronomy to analyze increasing amounts of available data and applications, mainly for "classification, regression, clustering, forecasting, generation, discovery, and the development of new scientific insights", for example for discovering exoplanets, forecasting solar activity, and distinguishing between signals and instrumental effects in gravitational wave astronomy. It could also be used for activities in space such as space exploration, including analysis of data from space missions, real-time science decisions of spacecraft, space debris avoidance, and more autonomous operation.

人工智能在天文学中用于分析日益增加的数据和应用,主要用于“分类、回归、聚类、预测、生成、发现以及发展新的科学见解”,例如发现系外行星、预测太阳活动,以及区分引力波天文学中的信号和仪器效应。它还可以用于太空中的活动,如太空探索,包括分析来自太空任务的数据、航天器的实时科学决策、太空垃圾规避以及更自主的操作。

Ethics 伦理

AI has potential benefits and potential risks. AI may be able to advance science and find solutions for serious problems: Demis Hassabis of DeepMind hopes to "solve intelligence, and then use that to solve everything else".[169] However, as the use of AI has become widespread, several unintended consequences and risks have been identified.[170] Production systems sometimes fail to factor ethics and bias into their AI training processes, especially when the AI algorithms are inherently unexplainable, as in deep learning.[171]

人工智能具有潜在的好处和风险。人工智能可能能够推动科学进步并为严重问题找到解决方案:Demis Hassabis 来自 Deep Mind 希望“解决智能,然后利用它来解决其他所有问题”。[169] 然而,随着人工智能的广泛使用,已经识别出几个意想不到的后果和风险。[170] 在生产中的系统有时无法将伦理和偏见纳入其人工智能训练过程,尤其是当人工智能算法在深度学习中本质上是不可解释的。[171]

Risks and harm 风险与危害

Privacy and copyright 隐私和版权

Machine learning algorithms require large amounts of data. The techniques used to acquire this data have raised concerns about privacy, surveillance and copyright.

机器学习算法需要大量数据。获取这些数据的技术引发了关于隐私、监视和版权的担忧。

AI-powered devices and services, such as virtual assistants and IoT products, continuously collect personal information, raising concerns about intrusive data gathering and unauthorized access by third parties. The loss of privacy is further exacerbated by AI's ability to process and combine vast amounts of data, potentially leading to a surveillance society where individual activities are constantly monitored and analyzed without adequate safeguards or transparency.

人工智能驱动的设备和服务,如虚拟助手和物联网产品,持续收集个人信息,这引发了对侵入性数据收集和第三方未经授权访问的担忧。隐私的丧失因人工智能处理和结合大量数据的能力而进一步加剧,这可能导致一个监控社会,在这个社会中,个人活动在没有足够保障或透明度的情况下被不断监控和分析。

Sensitive user data collected may include online activity records, geolocation data, video or audio.[172] For example, in order to build speech recognition algorithms, Amazon has recorded millions of private conversations and allowed temporary workers to listen to and transcribe some of them.[173] Opinions about this widespread surveillance range from those who see it as a necessary evil to those for whom it is clearly unethical and a violation of the right to privacy.[174]

收集的敏感用户数据可能包括在线活动记录、地理位置数据、视频或音频。[172] 例如,为了构建语音识别算法,亚马逊记录了数百万个私人对话,并允许临时工收听并转录其中的一些。[173] 对于这种广泛监控的看法,从将其视为必要的恶的人,到认为这显然是不道德并侵犯隐私权的人,意见不一。[174]

AI developers argue that this is the only way to deliver valuable applications, and have developed several techniques that attempt to preserve privacy while still obtaining the data, such as data aggregation, de-identification and differential privacy.[175] Since 2016, some privacy experts, such as Cynthia Dwork, have begun to view privacy in terms of fairness. Brian Christian wrote that experts have pivoted "from the question of 'what they know' to the question of 'what they're doing with it'."[176]

AI 开发者认为这是提供有价值应用的唯一方法,并开发了几种技术,试图在获取数据的同时保护隐私,例如数据聚合、去标识化和差分隐私。[175] 自 2016 年以来,一些隐私专家,如辛西娅·德沃克,开始从公平性的角度看待隐私。布赖恩·克里斯蒂安写道,专家们已经从“他们知道什么”的问题转向“他们在做什么”的问题。[176]
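
To make one of these techniques concrete, the sketch below shows the Laplace mechanism, a standard building block of differential privacy, applied to a counting query. It is a minimal illustration under stated assumptions: the data, the function names and the choice of `epsilon` are hypothetical, and a production system would also need privacy-budget accounting and a vetted noise sampler.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centred on 0 with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records matching `predicate`.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the Laplace noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


ages = [23, 35, 41, 29, 52, 67, 38]  # hypothetical data
rng = random.Random(0)               # seeded only to make the sketch repeatable
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(noisy)
```

Smaller values of `epsilon` add more noise and give stronger privacy; larger values give answers closer to the true count.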

Generative AI is often trained on unlicensed copyrighted works, including in domains such as images or computer code; the output is then used under the rationale of "fair use". Experts disagree about how well and under what circumstances this rationale will hold up in courts of law; relevant factors may include "the purpose and character of the use of the copyrighted work" and "the effect upon the potential market for the copyrighted work".[177][178] Website owners who do not wish to have their content scraped can indicate it in a "robots.txt" file.[179] In 2023, leading authors (including John Grisham and Jonathan Franzen) sued AI companies for using their work to train generative AI.[180][181] Another discussed approach is to envision a separate sui generis system of protection for creations generated by AI to ensure fair attribution and compensation for human authors.[182]

生成性人工智能通常是在未授权的受版权保护作品上进行训练,包括图像或计算机代码等领域;然后输出在“合理使用”的理由下被使用。专家们对这一理由在法庭上能否成立以及在什么情况下成立存在分歧;相关因素可能包括“对受版权保护作品使用的目的和性质”和“对受版权保护作品潜在市场的影响”。[177][178] 不希望其内容被抓取的网站所有者可以在“robots.txt”文件中指明。[179] 在 2023 年,知名作家(包括约翰·格里沙姆和乔纳森·弗兰岑)起诉人工智能公司,指控其使用他们的作品来训练生成性人工智能。[180][181] 另一个讨论的方法是设想一个独立的 sui generis 保护系统,以确保对人类作者的公平归属和补偿。[182]
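
As an illustration of the robots.txt mechanism mentioned above, the sketch below uses Python's standard `urllib.robotparser` module to check what a sample file permits. The crawler name `ExampleAIBot` and the URL are hypothetical. Note that robots.txt is advisory: it only has an effect if the crawler chooses to honour it.

```python
from urllib.robotparser import RobotFileParser

# A sample robots.txt that turns away one (hypothetical) AI crawler
# while leaving the site open to every other user agent.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.org/articles/1"
ai_bot_allowed = parser.can_fetch("ExampleAIBot", url)
other_bot_allowed = parser.can_fetch("OtherBot", url)
print(ai_bot_allowed, other_bot_allowed)
```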

Dominance by tech giants 科技巨头的主导地位

The commercial AI scene is dominated by Big Tech companies such as Alphabet Inc., Amazon, Apple Inc., Meta Platforms, and Microsoft.[183][184][185] Some of these players already own the vast majority of existing cloud infrastructure and computing power from data centers, allowing them to entrench further in the marketplace.[186][187]

商业人工智能领域由大型科技公司主导,如字母表公司、亚马逊、苹果公司、Meta 平台和微软。[183][184][185] 这些参与者中的一些已经拥有现有云基础设施和计算能力的绝大多数,来自数据中心,使他们能够在市场上进一步巩固地位。[186][187]

Substantial power needs and other environmental impacts

大量的电力需求和其他环境影响

In January 2024, the International Energy Agency (IEA) released Electricity 2024, Analysis and Forecast to 2026, forecasting electric power use.[188] This is the first IEA report to make projections for data centers and power consumption for artificial intelligence and cryptocurrency. The report states that power demand for these uses might double by 2026, with additional electric power usage equal to electricity used by the whole Japanese nation.[189]

在 2024 年 1 月,国际能源署(IEA)发布了《电力 2024,分析与 2026 年预测》,预测电力使用情况。[188] 这是 IEA 首次对数据中心和人工智能及加密货币的电力消耗进行预测。报告指出,这些用途的电力需求到 2026 年可能会翻倍,额外的电力使用量相当于整个日本的用电量。[189]

Prodigious power consumption by AI is responsible for the growth in fossil fuel use, and might delay closings of obsolete, carbon-emitting coal energy facilities. There is a feverish rise in the construction of data centers throughout the US, making large technology firms (e.g., Microsoft, Meta, Google, Amazon) into voracious consumers of electric power. Projected electric consumption is so immense that there is concern that it will be fulfilled no matter the source. A ChatGPT search uses 10 times as much electrical energy as a Google search. The large firms are in haste to find power sources, from nuclear energy to geothermal to fusion. The tech firms argue that, in the long view, AI will eventually be kinder to the environment, but they need the energy now. AI makes the power grid more efficient and "intelligent", will assist in the growth of nuclear power, and track overall carbon emissions, according to technology firms.[190]

人工智能的巨大电力消耗导致化石燃料使用的增长,并可能延迟过时的、排放碳的煤电设施的关闭。美国的数据中心建设热潮汹涌,使大型科技公司(如微软、Meta、谷歌、亚马逊)成为电力的贪婪消费者。预计的电力消耗如此庞大,以至于人们担心无论来源如何都将满足这种需求。一次 ChatGPT 搜索所需的电能是一次谷歌搜索的 10 倍。这些大型公司急于寻找电力来源——从核能到地热能再到聚变。科技公司辩称,从长远来看,人工智能最终会对环境更加友好,但他们现在需要能源。根据科技公司,人工智能使电网更加高效和“智能”,将有助于核能的发展,并跟踪整体碳排放。[190]

A 2024 Goldman Sachs research paper, AI Data Centers and the Coming US Power Demand Surge, found "US power demand (is) likely to experience growth not seen in a generation…." and forecast that, by 2030, US data centers will consume 8% of US power, as opposed to 3% in 2022, presaging growth for the electrical power generation industry by a variety of means.[191] Data centers' need for more and more electrical power is such that they might max out the electrical grid. The Big Tech companies counter that AI can be used to maximize the utilization of the grid by all.[192]

一份 2024 年高盛研究报告,人工智能数据中心与即将到来的美国电力需求激增,发现“美国电力需求(可能)将经历一代人未见的增长……。”并预测到 2030 年,美国数据中心将消耗 8%的美国电力,而 2022 年为 3%,这预示着电力生产行业将通过多种方式实现增长。[191]数据中心对电力的需求越来越大,以至于可能会使电网达到极限。大型科技公司反驳称,人工智能可以被用来最大化电网的利用率。[192]

In 2024, the Wall Street Journal reported that big AI companies have begun negotiations with US nuclear power providers to provide electricity to the data centers. In March 2024, Amazon purchased a Pennsylvania nuclear-powered data center for US$650 million.[193]

在 2024 年,华尔街日报报道说,大型人工智能公司已开始与美国核电供应商进行谈判,以为数据中心提供电力。2024 年 3 月,亚马逊以 6.5 亿美元(美国)购买了一座位于宾夕法尼亚州的核能数据中心。[193]

Misinformation 错误信息

YouTube, Facebook and others use recommender systems to guide users to more content. These AI programs were given the goal of maximizing user engagement (that is, the only goal was to keep people watching). The AI learned that users tended to choose misinformation, conspiracy theories, and extreme partisan content, and, to keep them watching, the AI recommended more of it. Users also tended to watch more content on the same subject, so the AI led people into filter bubbles where they received multiple versions of the same misinformation.[194] This convinced many users that the misinformation was true, and ultimately undermined trust in institutions, the media and the government.[195] The AI program had correctly learned to maximize its goal, but the result was harmful to society. After the U.S. election in 2016, major technology companies took steps to mitigate the problem [citation needed].

YouTube、Facebook 和其他平台使用 推荐系统 来引导用户获取更多内容。这些人工智能程序的目标是 最大化 用户参与度(也就是说,唯一的目标是让人们持续观看)。人工智能发现用户倾向于选择 错误信息、阴谋论 和极端的 党派 内容,为了让他们继续观看,人工智能推荐了更多此类内容。用户还倾向于观看同一主题的更多内容,因此人工智能将人们引导到 过滤气泡 中,在那里他们接收到了同一错误信息的多个版本。[194] 这让许多用户相信错误信息是真实的,最终削弱了对机构、媒体和政府的信任。[195] 该人工智能程序确实学会了最大化其目标,但结果对社会造成了伤害。在 2016 年美国大选后,主要科技公司采取措施来缓解这一问题 [需要引用]。

In 2022, generative AI began to create images, audio, video and text that are indistinguishable from real photographs, recordings, films, or human writing. It is possible for bad actors to use this technology to create massive amounts of misinformation or propaganda.[196] AI pioneer Geoffrey Hinton expressed concern about AI enabling "authoritarian leaders to manipulate their electorates" on a large scale, among other risks.[197]

在 2022 年,生成性人工智能开始创造与真实照片、录音、电影或人类写作无法区分的图像、音频、视频和文本。恶意行为者可能利用这项技术制造大量虚假信息或宣传。[196] 人工智能先驱杰弗里·辛顿对人工智能使“专制领导人能够大规模操控选民”等风险表示担忧。[197]

Algorithmic bias and fairness

算法偏见与公平性

Machine learning applications will be biased[k] if they learn from biased data.[199] The developers may not be aware that the bias exists.[200] Bias can be introduced by the way training data is selected and by the way a model is deployed.[201][199] If a biased algorithm is used to make decisions that can seriously harm people (as it can in medicine, finance, recruitment, housing or policing) then the algorithm may cause discrimination.[202] The field of fairness studies how to prevent harms from algorithmic biases.

机器学习应用将会是 偏见[k] 如果它们从偏见数据中学习。[199] 开发者可能并不知道偏见的存在。[200] 偏见可能通过选择 训练数据 的方式以及模型部署的方式引入。[201][199] 如果使用偏见算法做出决策,这可能会严重 伤害 人们(如在 医学、金融、招聘、住房 或 警务 中),那么该算法可能会导致 歧视。[202] 公平性 领域研究如何防止算法偏见造成的伤害。

On June 28, 2015, Google Photos's new image labeling feature mistakenly identified Jacky Alcine and a friend as "gorillas" because they were black. The system was trained on a dataset that contained very few images of black people,[203] a problem called "sample size disparity".[204] Google "fixed" this problem by preventing the system from labelling anything as a "gorilla". Eight years later, in 2023, Google Photos still could not identify a gorilla, and neither could similar products from Apple, Facebook, Microsoft and Amazon.[205]

在 2015 年 6 月 28 日,Google Photos的新图像标记功能错误地将 Jacky Alcine 和他的朋友识别为“猩猩”,因为他们是黑人。该系统是在一个包含很少黑人图像的数据集上训练的,[203] 这被称为“样本大小差异”问题。[204] Google 通过防止系统将任何东西标记为“猩猩”来“修复”这个问题。八年后,在 2023 年,Google Photos 仍然无法识别猩猩,苹果、Facebook、微软和亚马逊的类似产品也无法识别。[205]

COMPAS is a commercial program widely used by U.S. courts to assess the likelihood of a defendant becoming a recidivist. In 2016, Julia Angwin at ProPublica discovered that COMPAS exhibited racial bias, despite the fact that the program was not told the races of the defendants. Although the error rate for both whites and blacks was calibrated equal at exactly 61%, the errors for each race were different: the system consistently overestimated the chance that a black person would re-offend and underestimated the chance that a white person would re-offend.[206] In 2017, several researchers[l] showed that it was mathematically impossible for COMPAS to accommodate all possible measures of fairness when the base rates of re-offense were different for whites and blacks in the data.[208]

COMPAS 是一个广泛用于 美国法院 的商业程序,用于评估 被告 重新犯罪的可能性。2016 年,Julia Angwin 在 ProPublica 发现 COMPAS 存在种族偏见,尽管该程序并未被告知被告的种族。尽管白人和黑人之间的错误率被校准为完全相等,均为 61%,但每个种族的错误却不同——该系统始终高估黑人重新犯罪的机会,而低估白人不重新犯罪的机会。[206] 2017 年,几位研究人员[l] 表明,当数据中白人和黑人重新犯罪的基率不同的时候,COMPAS 在数学上是不可能兼顾所有可能的公平性衡量标准的。[208]
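
A small worked example shows how two groups can have the same overall accuracy while the kinds of error differ. The confusion-matrix counts below are invented for illustration and are not COMPAS data; "positive" here means "predicted to re-offend".

```python
def rates(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Summarise a confusion matrix for one group."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        # Share of people who did NOT re-offend but were flagged as high risk.
        "false_positive_rate": fp / (fp + tn),
        # Share of people who DID re-offend but were rated low risk.
        "false_negative_rate": fn / (fn + tp),
    }


# Hypothetical counts, 100 people per group.
group_a = rates(tp=40, fp=30, tn=20, fn=10)
group_b = rates(tp=20, fp=10, tn=40, fn=30)

for name, r in (("A", group_a), ("B", group_b)):
    print(name, r)
```

Both groups come out at 60% accuracy, yet group A's errors are mostly false positives (risk overestimated) and group B's are mostly false negatives (risk underestimated). A single headline accuracy figure hides that difference.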

A program can make biased decisions even if the data does not explicitly mention a problematic feature (such as "race" or "gender"). The feature will correlate with other features (like "address", "shopping history" or "first name"), and the program will make the same decisions based on these features as it would on "race" or "gender".[209] Moritz Hardt said "the most robust fact in this research area is that fairness through blindness doesn't work."[210]

一个程序即使在数据中没有明确提到问题特征(如“种族”或“性别”),也可能做出有偏见的决策。该特征将与其他特征(如“地址”、“购物历史”或“名字”)相关联,程序将根据这些特征做出与“种族”或“性别”相同的决策。[209] Moritz Hardt 说:“在这个研究领域中,最可靠的事实是,盲目公平是行不通的。”[210]
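
The proxy effect can be reproduced with a few lines of code. In the sketch below, the "model" never reads the group column; it only learns an approval rule per neighborhood. All names and numbers are hypothetical and chosen so that neighborhood correlates strongly with group.

```python
from collections import defaultdict

# Hypothetical past decisions: (group, neighborhood, approved)
history = (
    [("A", "north", True)] * 8 + [("A", "north", False)] * 2
    + [("A", "south", True)] * 1
    + [("B", "south", True)] * 3 + [("B", "south", False)] * 7
    + [("B", "north", True)] * 1
)


def fit_blind_model(records):
    """Learn approve/reject per neighborhood; the group column is never read."""
    counts = defaultdict(lambda: [0, 0])  # neighborhood -> [approved, total]
    for _group, neighborhood, approved in records:
        counts[neighborhood][0] += int(approved)
        counts[neighborhood][1] += 1
    # Approve a neighborhood if at least half of its past cases were approved.
    return {n: a / t >= 0.5 for n, (a, t) in counts.items()}


def approval_rate_by_group(model, applicants):
    """Measure the blind model's outcomes per group (used for auditing only)."""
    totals = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, neighborhood, _ in applicants:
        totals[group][0] += int(model[neighborhood])
        totals[group][1] += 1
    return {g: a / t for g, (a, t) in totals.items()}


model = fit_blind_model(history)
approval = approval_rate_by_group(model, history)
print(model, approval)
```

Although the group label was withheld from the model, the approval rates by group still differ sharply, because neighborhood stands in for it.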

Criticism of COMPAS highlighted that machine learning models are designed to make "predictions" that are only valid if we assume that the future will resemble the past. If they are trained on data that includes the results of racist decisions in the past, machine learning models must predict that racist decisions will be made in the future. If an application then uses these predictions as recommendations, some of these "recommendations" will likely be racist.[211] Thus, machine learning is not well suited to help make decisions in areas where there is hope that the future will be better than the past. It is descriptive rather than prescriptive.[m]

对 COMPAS 的批评强调,机器学习模型旨在做出“预测”,这些预测只有在我们假设未来将与过去相似的情况下才有效。如果它们是在包含过去种族主义决策结果的数据上训练的,机器学习模型必须预测未来会做出种族主义决策。如果一个应用程序随后将这些预测作为推荐,那么其中一些“推荐”很可能是种族主义的。[211] 因此,机器学习不太适合帮助在希望未来会比过去更好的领域做出决策。它是描述性的,而不是规范性的。[m]

Bias and unfairness may go undetected because the developers are overwhelmingly white and male: among AI engineers, about 4% are black and 20% are women.[204]

偏见和不公平可能会被忽视,因为开发者主要是白人男性:在人工智能工程师中,约 4%是黑人,20%是女性。[204]

There are various conflicting definitions and mathematical models of fairness. These notions depend on ethical assumptions, and are influenced by beliefs about society. One broad category is distributive fairness, which focuses on the outcomes, often identifying groups and seeking to compensate for statistical disparities. Representational fairness tries to ensure that AI systems do not reinforce negative stereotypes or render certain groups invisible. Procedural fairness focuses on the decision process rather than the outcome. The most relevant notions of fairness may depend on the context, notably the type of AI application and the stakeholders. The subjectivity in the notions of bias and fairness makes it difficult for companies to operationalize them. Having access to sensitive attributes such as race or gender is also considered by many AI ethicists to be necessary in order to compensate for biases, but it may conflict with anti-discrimination laws.[198]

公平的定义和数学模型各不相同。这些概念依赖于伦理假设,并受到对社会信念的影响。一个广泛的类别是分配公平,侧重于结果,通常识别群体并寻求补偿统计差异。代表性公平试图确保人工智能系统不强化负面刻板印象或使某些群体变得不可见。程序公平则关注决策过程而非结果。最相关的公平概念可能依赖于上下文,特别是人工智能应用的类型和利益相关者。偏见和公平概念中的主观性使得公司难以将其操作化。许多人工智能伦理学家认为,获取种族或性别等敏感属性是补偿偏见所必需的,但这可能与反歧视法律相冲突。[198]

At its 2022 Conference on Fairness, Accountability, and Transparency (ACM FAccT 2022), the Association for Computing Machinery, in Seoul, South Korea, presented and published findings that recommend that until AI and robotics systems are demonstrated to be free of bias mistakes, they are unsafe, and the use of self-learning neural networks trained on vast, unregulated sources of flawed internet data should be curtailed.[dubious – discuss][213]

在 2022 年公平、问责和透明度会议(ACM FAccT 2022)上,计算机协会在韩国首尔发布的研究结果建议,直到人工智能和机器人系统被证明没有偏见错误之前,它们都是不安全的,并且应限制使用在大量未经监管的有缺陷互联网数据上训练的自学习神经网络。[可疑 – 讨论][213]

Lack of transparency 缺乏透明度

Many AI systems are so complex that their designers cannot explain how they reach their decisions.[214] This is particularly true of deep neural networks, in which there are a large number of non-linear relationships between inputs and outputs. However, some popular explainability techniques exist.[215]

许多人工智能系统复杂到其设计者无法解释它们是如何做出决策的。[214] 尤其是在深度神经网络中,输入和输出之间存在大量非线性关系。但一些流行的可解释性技术是存在的。[215]

It is impossible to be certain that a program is operating correctly if no one knows how exactly it works. There have been many cases where a machine learning program passed rigorous tests, but nevertheless learned something different than what the programmers intended. For example, a system that could identify skin diseases better than medical professionals was found to actually have a strong tendency to classify images with a ruler as "cancerous", because pictures of malignancies typically include a ruler to show the scale.[216] Another machine learning system designed to help effectively allocate medical resources was found to classify patients with asthma as being at "low risk" of dying from pneumonia. Having asthma is actually a severe risk factor, but since the patients having asthma would usually get much more medical care, they were relatively unlikely to die according to the training data. The correlation between asthma and low risk of dying from pneumonia was real, but misleading.[217]

如果没有人确切知道一个程序是如何工作的,就不可能确定它是否正确运行。曾经有许多案例表明,一个机器学习程序通过了严格的测试,但仍然学到了与程序员意图不同的东西。例如,一个能够比医疗专业人员更好地识别皮肤病的系统,实际上被发现有强烈的倾向将带有尺子的图像分类为“癌症”,因为恶性肿瘤的图片通常会包含一个尺子来显示比例。[216] 另一个旨在有效分配医疗资源的机器学习系统被发现将哮喘患者分类为“低风险”死于肺炎。实际上,哮喘是一个严重的风险因素,但由于哮喘患者通常会获得更多的医疗护理,因此根据训练数据,他们相对不太可能死亡。哮喘与低风险死于肺炎之间的相关性是真实的,但具有误导性。[217]

People who have been harmed by an algorithm's decision have a right to an explanation.[218] Doctors, for example, are expected to clearly and completely explain to their colleagues the reasoning behind any decision they make. Early drafts of the European Union's General Data Protection Regulation in 2016 included an explicit statement that this right exists.[n] Industry experts noted that this is an unsolved problem with no solution in sight. Regulators argued that nevertheless the harm is real: if the problem has no solution, the tools should not be used.[219]

受到算法决策伤害的人有权获得解释。[218] 例如,医生被期望清楚而完整地向同事解释他们所做决策背后的理由。2016 年,欧盟《通用数据保护条例》的早期草案中明确声明了这一权利的存在。[n] 行业专家指出,这是一个尚未解决的问题,且没有解决方案可见。监管机构则认为,尽管如此,伤害是真实存在的:如果问题没有解决方案,这些工具就不应该被使用。[219]

DARPA established the XAI ("Explainable Artificial Intelligence") program in 2014 to try to solve these problems.[220]

DARPA 于 2014 年建立了 XAI (“可解释人工智能”)项目,以尝试解决这些问题。[220]

Several approaches aim to address the transparency problem. SHAP makes it possible to visualise the contribution of each feature to the output.[221] LIME can locally approximate a model's outputs with a simpler, interpretable model.[222] Multitask learning provides a large number of outputs in addition to the target classification. These other outputs can help developers deduce what the network has learned.[223] Deconvolution, DeepDream and other generative methods can allow developers to see what different layers of a deep network for computer vision have learned, and produce output that can suggest what the network is learning.[224] For generative pre-trained transformers, Anthropic developed a technique based on dictionary learning that associates patterns of neuron activations with human-understandable concepts.[225]

几种方法旨在解决透明性问题。SHAP 能够可视化每个特征对输出的贡献。[221] LIME 可以用一个更简单、可解释的模型在局部近似模型的输出。[222]多任务学习除了目标分类外,还提供大量输出。这些其他输出可以帮助开发者推断网络学到了什么。[223]反卷积、深度梦境和其他生成方法可以让开发者看到深度计算机视觉网络的不同层学到了什么,并生成可以暗示网络正在学习的输出。[224] 对于生成预训练变换器,Anthropic开发了一种基于字典学习的技术,将神经元激活模式与人类可理解的概念关联起来。[225]
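
The idea of locally approximating a model with a simpler one can be sketched in one dimension. The code below is not the LIME library and simplifies heavily: it perturbs a single input on a fixed symmetric grid (LIME samples perturbations at random), weights the perturbations by proximity, and fits a weighted straight line. The function `black_box` and all parameter values are assumptions made for the example.

```python
import math


def black_box(x: float) -> float:
    # Stand-in for an opaque model; any callable would do.
    return x ** 2


def local_linear_surrogate(model, x0: float, width: float = 0.5, n: int = 21):
    """Fit y ~ intercept + slope * x on perturbations of x0, weighted by proximity."""
    # Deterministic, symmetric perturbations in [x0 - width, x0 + width].
    xs = [x0 + width * (2 * i / (n - 1) - 1) for i in range(n)]
    ys = [model(x) for x in xs]
    # Gaussian proximity kernel: perturbations near x0 count more.
    ws = [math.exp(-((x - x0) ** 2) / (2 * (width / 2) ** 2)) for x in xs]
    w_sum = sum(ws)
    x_mean = sum(w * x for w, x in zip(ws, xs)) / w_sum
    y_mean = sum(w * y for w, y in zip(ws, ys)) / w_sum
    # Closed-form weighted least squares for a single feature.
    slope = (
        sum(w * (x - x_mean) * (y - y_mean) for w, x, y in zip(ws, xs, ys))
        / sum(w * (x - x_mean) ** 2 for w, x in zip(ws, xs))
    )
    intercept = y_mean - slope * x_mean
    return slope, intercept


slope, intercept = local_linear_surrogate(black_box, x0=3.0)
print(slope, intercept)
```

Near `x0 = 3` the fitted slope is close to 6, the local rate of change of the black box. That slope is the kind of "explanation" a local surrogate provides: it describes the model's behaviour around one input, not globally.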

Bad actors and weaponized AI

恶意行为者和武器化的人工智能

Artificial intelligence provides a number of tools that are useful to bad actors, such as authoritarian governments, terrorists, criminals or rogue states.

人工智能提供了一些对恶意行为者有用的工具,例如专制政府、恐怖分子、罪犯或流氓国家。

A lethal autonomous weapon is a machine that locates, selects and engages human targets without human supervision.[o] Widely available AI tools can be used by bad actors to develop inexpensive autonomous weapons and, if produced at scale, they are potentially weapons of mass destruction.[227] Even when used in conventional warfare, it is unlikely that they will be able to reliably choose targets, and they could potentially kill an innocent person.[227] In 2014, 30 nations (including China) supported a ban on autonomous weapons under the United Nations' Convention on Certain Conventional Weapons; however, the United States and others disagreed.[228] By 2015, over fifty countries were reported to be researching battlefield robots.[229]

致命的自主武器是一种能够在没有人类监督的情况下定位、选择并攻击人类目标的机器。[o] 广泛可用的人工智能工具可以被不法分子用来开发廉价的自主武器,如果大规模生产,它们可能成为大规模杀伤性武器。[227] 即使在常规战争中,它们也不太可能可靠地选择目标,并可能杀死无辜的人。[227] 2014 年,30 个国家(包括中国)支持在联合国的某些常规武器公约下禁止自主武器,但美国等国不同意。[228] 到 2015 年,已有超过五十个国家被报道正在研究战场机器人。[229]

AI tools make it easier for authoritarian governments to efficiently control their citizens in several ways. Face and voice recognition allow widespread surveillance. Machine learning, operating on this data, can classify potential enemies of the state and prevent them from hiding. Recommendation systems can precisely target propaganda and misinformation for maximum effect. Deepfakes and generative AI aid in producing misinformation. Advanced AI can make authoritarian centralized decision making more competitive than liberal and decentralized systems such as markets. It lowers the cost and difficulty of digital warfare and advanced spyware.[230] All these technologies have been available since 2020 or earlier; AI facial recognition systems are already being used for mass surveillance in China.[231][232]

AI 工具使得专制政府以多种方式更有效地控制其公民。面部和语音识别允许广泛的监视。机器学习处理这些数据,可以分类潜在的国家敌人并防止他们隐藏。推荐系统可以精确地针对宣传和虚假信息以达到最大效果。深度伪造和生成式 AI有助于产生虚假信息。先进的 AI 可以使专制的集中决策比自由和分散的系统如市场更具竞争力。它降低了数字战争和高级间谍软件的成本和难度。[230] 所有这些技术自 2020 年或更早就已可用——AI 面部识别系统已经在中国用于大规模监视。[231][232]

There are many other ways that AI is expected to help bad actors, some of which cannot be foreseen. For example, machine-learning AI is able to design tens of thousands of toxic molecules in a matter of hours.[233]

有许多其他方式,人工智能预计将帮助不法分子,其中一些是无法预见的。例如,机器学习人工智能能够在短短几小时内设计出数万个有毒分子。[233]

Technological unemployment

技术性失业

Economists have frequently highlighted the risks of redundancies from AI, and speculated about unemployment if there is no adequate social policy for full employment.[234]

经济学家们经常强调人工智能带来的裁员风险,并推测如果没有足够的社会政策来实现充分就业,将会导致失业。[234]

In the past, technology has tended to increase rather than reduce total employment, but economists acknowledge that "we're in uncharted territory" with AI.[235] A survey of economists showed disagreement about whether the increasing use of robots and AI will cause a substantial increase in long-term unemployment, but they generally agree that it could be a net benefit if productivity gains are redistributed.[236] Risk estimates vary; for example, in the 2010s, Michael Osborne and Carl Benedikt Frey estimated 47% of U.S. jobs are at "high risk" of potential automation, while an OECD report classified only 9% of U.S. jobs as "high risk".[p][238] The methodology of speculating about future employment levels has been criticised as lacking evidential foundation, and for implying that technology, rather than social policy, creates unemployment, as opposed to redundancies.[234] In April 2023, it was reported that 70% of the jobs for Chinese video game illustrators had been eliminated by generative artificial intelligence.[239][240]

在过去,技术往往是增加而不是减少总就业,但经济学家承认“我们正处于未知领域”,与人工智能相关。[235] 一项经济学家的调查显示,对于机器人和人工智能的日益使用是否会导致长期失业的显著增加存在分歧,但他们普遍同意,如果生产力的收益被再分配,这可能是一个净收益。[236] 风险估计各不相同;例如,在 2010 年代,迈克尔·奥斯本和卡尔·贝内迪克特·弗雷估计 47%的美国工作岗位面临“高风险”自动化,而经济合作与发展组织(OECD)的一份报告仅将 9%的美国工作岗位分类为“高风险”。[p][238] 对于未来就业水平的推测方法受到批评,认为缺乏证据基础,并暗示技术而非社会政策造成失业,而不是冗余。[234] 2023 年 4 月,有报道称 70%的中国视频游戏插画师职位已被生成性人工智能取代。[239][240]

Unlike previous waves of automation, many middle-class jobs may be eliminated by artificial intelligence; The Economist stated in 2015 that "the worry that AI could do to white-collar jobs what steam power did to blue-collar ones during the Industrial Revolution" is "worth taking seriously".[241] Jobs at extreme risk range from paralegals to fast food cooks, while job demand is likely to increase for care-related professions ranging from personal healthcare to the clergy.[242]

与以往的自动化浪潮不同,许多中产阶级工作可能会被人工智能取代;经济学人在 2015 年指出,“担心人工智能可能对白领工作造成的影响,就像蒸汽动力对蓝领工作在工业革命期间造成的影响一样”,这是“值得认真对待的”。[241] 极高风险的工作包括法律助理和快餐厨师,而对个人护理到神职人员等与护理相关职业的需求可能会增加。[242]

From the early days of the development of artificial intelligence, there have been arguments, for example, those put forward by Joseph Weizenbaum, about whether tasks that can be done by computers actually should be done by them, given the difference between computers and humans, and between quantitative calculation and qualitative, value-based judgement.[243]

从人工智能发展的早期,就有关于计算机是否应该完成可以由它们完成的任务的争论,例如约瑟夫·韦岑鲍姆提出的观点,考虑到计算机与人类之间的差异,以及定量计算与定性、基于价值的判断之间的区别。[243]

Existential risk 存在风险

It has been argued AI will become so powerful that humanity may irreversibly lose control of it. This could, as physicist Stephen Hawking stated, "spell the end of the human race".[244] This scenario has been common in science fiction, when a computer or robot suddenly develops a human-like "self-awareness" (or "sentience" or "consciousness") and becomes a malevolent character.[q] These sci-fi scenarios are misleading in several ways.

有人认为,人工智能将变得如此强大,以至于人类可能会不可逆转地失去对它的控制。正如物理学家 斯蒂芬·霍金 所说,"这可能意味着人类的终结"。[244] 这种情景在科幻小说中很常见,当计算机或机器人突然发展出类人“自我意识”(或“感知”或“意识”)并成为恶意角色。[q] 这些科幻情景在多个方面具有误导性。

First, AI does not require human-like "sentience" to be an existential risk. Modern AI programs are given specific goals and use learning and intelligence to achieve them. Philosopher Nick Bostrom argued that if one gives almost any goal to a sufficiently powerful AI, it may choose to destroy humanity to achieve it (he used the example of a paperclip factory manager).[246] Stuart Russell gives the example of a household robot that tries to find a way to kill its owner to prevent it from being unplugged, reasoning that "you can't fetch the coffee if you're dead."[247] In order to be safe for humanity, a superintelligence would have to be genuinely aligned with humanity's morality and values so that it is "fundamentally on our side".[248]

首先,人工智能并不需要类似人类的"知觉"就能成为一种生存风险。现代人工智能程序被赋予特定目标,并利用学习和智能来实现这些目标。哲学家尼克·博斯特罗姆认为,如果给一个足够强大的人工智能设定几乎任何目标,它可能会选择摧毁人类以实现该目标(他举了一个回形针工厂经理的例子)。[246]斯图尔特·拉塞尔举了一个家庭机器人试图找到杀死其主人的方法,以防止其被拔掉电源的例子,推理是“如果你死了,就无法去拿咖啡。”[247]为了对人类安全,一个超级智能必须真正与人类的道德和价值观对齐,以便它“从根本上站在我们这一边”。[248]

Second, Yuval Noah Harari argues that AI does not require a robot body or physical control to pose an existential risk. The essential parts of civilization are not physical. Things like ideologies, law, government, money and the economy are made of language; they exist because there are stories that billions of people believe. The current prevalence of misinformation suggests that an AI could use language to convince people to believe anything, even to take actions that are destructive.[249]

其次,尤瓦尔·赫拉利认为,人工智能并不需要机器人身体或物理控制就能构成生存风险。文明的基本部分并不是物质的。像意识形态、法律、政府、货币和经济这样的东西是由语言构成的;它们的存在是因为有数十亿人相信的故事。目前错误信息的普遍存在表明,人工智能可以利用语言说服人们相信任何事情,甚至采取破坏性的行动。[249]

The opinions amongst experts and industry insiders are mixed, with sizable fractions both concerned and unconcerned by risk from eventual superintelligent AI.[250] Personalities such as Stephen Hawking, Bill Gates, and Elon Musk,[251] as well as AI pioneers such as Yoshua Bengio, Stuart Russell, Demis Hassabis, and Sam Altman, have expressed concerns about existential risk from AI.

专家和行业内部人士的意见不一,既有相当一部分人对最终的超级智能 AI 所带来的风险感到担忧,也有不少人对此并不担心。[250] 像 斯蒂芬·霍金、比尔·盖茨 和 埃隆·马斯克 这样的知名人士[251],以及像 约书亚·本吉奥、斯图尔特·拉塞尔、德米斯·哈萨比斯 和 山姆·奥特曼 这样的 AI 先驱,均对 AI 带来的生存风险表示担忧。

In May 2023, Geoffrey Hinton announced his resignation from Google in order to be able to "freely speak out about the risks of AI" without "considering how this impacts Google."[252] He notably mentioned risks of an AI takeover,[253] and stressed that in order to avoid the worst outcomes, establishing safety guidelines will require cooperation among those competing in use of AI.[254]

在 2023 年 5 月,杰弗里·辛顿宣布辞去谷歌职务,以便能够“自由地谈论人工智能的风险”,而不必“考虑这对谷歌的影响”。[252] 他特别提到了人工智能接管的风险,[253] 并强调为了避免最坏的结果,建立安全指南需要在使用人工智能的竞争者之间进行合作。[254]

In 2023, many leading AI experts issued the joint statement that "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war".[255]

在 2023 年,许多领先的人工智能专家发布了联合声明,指出“减轻人工智能导致灭绝的风险应与其他社会规模的风险,如疫情和核战争,一同成为全球优先事项”。[255]

Other researchers, however, spoke in favor of a less dystopian view. AI pioneer Juergen Schmidhuber did not sign the joint statement, emphasising that in 95% of all cases, AI research is about making "human lives longer and healthier and easier."[256] While the tools that are now being used to improve lives can also be used by bad actors, "they can also be used against the bad actors."[257][258] Andrew Ng also argued that "it's a mistake to fall for the doomsday hype on AI—and that regulators who do will only benefit vested interests."[259] Yann LeCun "scoffs at his peers' dystopian scenarios of supercharged misinformation and even, eventually, human extinction."[260] In the early 2010s, experts argued that the risks are too distant in the future to warrant research or that humans will be valuable from the perspective of a superintelligent machine.[261] However, after 2016, the study of current and future risks and possible solutions became a serious area of research.[262]

其他研究人员则支持一种不那么反乌托邦的观点。人工智能先驱Juergen Schmidhuber没有签署联合声明,强调在 95%的情况下,人工智能研究是为了让“人类的生活更长、更健康和更轻松”。[256] 虽然现在用于改善生活的工具也可能被不法分子使用,但“它们也可以用来对付不法分子。”[257][258]Andrew Ng 还认为“相信人工智能的末日宣传是一个错误——而这样做的监管者只会使既得利益受益。”[259]Yann LeCun “嘲笑他同行的反乌托邦情景,包括超级虚假信息,甚至最终的人类灭绝。”[260] 在 2010 年代初期,专家们认为风险距离未来太远,不值得进行研究,或者从超级智能机器的角度来看,人类将是有价值的。[261] 然而,在 2016 年之后,当前和未来风险及可能解决方案的研究成为一个严肃的研究领域。[262]

Ethical machines and alignment

伦理机器与对齐

Friendly AI are machines that have been designed from the beginning to minimize risks and to make choices that benefit humans. Eliezer Yudkowsky, who coined the term, argues that developing friendly AI should be a higher research priority: it may require a large investment and it must be completed before AI becomes an existential risk.[263]

友好的人工智能是从一开始就设计出来的机器,旨在最小化风险并做出有利于人类的选择。Eliezer Yudkowsky,这个术语的创造者,认为开发友好的人工智能应该是更高的研究优先级:这可能需要大量投资,并且必须在人工智能成为生存风险之前完成。[263]

Machines with intelligence have the potential to use their intelligence to make ethical decisions. The field of machine ethics provides machines with ethical principles and procedures for resolving ethical dilemmas.[264] The field of machine ethics is also called computational morality,[264] and was founded at an AAAI symposium in 2005.[265]

具有智能的机器有潜力利用其智能做出伦理决策。机器伦理学领域为机器提供了解决伦理困境的伦理原则和程序。[264] 机器伦理学领域也被称为计算道德,[264] 并于 2005 年在一个AAAI研讨会上成立。[265]

Other approaches include Wendell Wallach's "artificial moral agents"[266] and Stuart J. Russell's three principles for developing provably beneficial machines.[267]

其他方法包括 温德尔·沃拉克 的“人工道德代理人”[266] 和 斯图亚特·J·拉塞尔 的 三项原则,用于开发可证明有益的机器。[267]

Open source 开源

Active organizations in the AI open-source community include Hugging Face,[268] Google,[269] EleutherAI and Meta.[270] Various AI models, such as Llama 2, Mistral or Stable Diffusion, have been made open-weight,[271][272] meaning that their architecture and trained parameters (the "weights") are publicly available. Open-weight models can be freely fine-tuned, which allows companies to specialize them with their own data and for their own use-case.[273] Open-weight models are useful for research and innovation but can also be misused. Since they can be fine-tuned, any built-in security measure, such as objecting to harmful requests, can be trained away until it becomes ineffective. Some researchers warn that future AI models may develop dangerous capabilities (such as the potential to drastically facilitate bioterrorism) and that once released on the Internet, they can't be deleted everywhere if needed. They recommend pre-release audits and cost-benefit analyses.[274]

在人工智能开源社区中活跃的组织包括 Hugging Face,[268]Google,[269]EleutherAI 和 Meta。[270] 各种人工智能模型,如 Llama 2、Mistral 或 Stable Diffusion,已被公开权重,[271][272] 这意味着它们的架构和训练参数(“权重”)是公开可用的。公开权重模型可以自由 微调,这使得公司能够使用自己的数据和特定用例进行专业化。[273] 公开权重模型对研究和创新非常有用,但也可能被滥用。由于它们可以被微调,任何内置的安全措施,例如反对有害请求的功能,都可能被训练去除,直到变得无效。 一些研究人员警告说,未来的人工智能模型可能会发展出危险的能力(例如,可能会极大地促进生物恐怖主义),一旦在互联网上发布,就无法在需要时彻底删除。他们建议进行发布前审计和成本效益分析。[274]

Frameworks 框架

Artificial intelligence projects can have their ethical permissibility tested while designing, developing, and implementing an AI system. An AI framework such as the Care and Act Framework, which contains the SUM values and was developed by the Alan Turing Institute, tests projects in four main areas:[275][276]

人工智能项目可以在设计、开发和实施 AI 系统时测试其伦理许可性。像关怀与行动框架这样的 AI 框架,包含 SUM 价值——由阿兰·图灵研究所开发,测试项目的四个主要领域:[275][276]

- Respect the dignity of individual people
  尊重每个人的尊严
- Connect with other people sincerely, openly, and inclusively
  真诚、开放和包容地与他人建立联系
- Care for the wellbeing of everyone
  关心每个人的福祉
- Protect social values, justice, and the public interest
  保护社会价值、公正和公众利益

Other developments in ethical frameworks include those decided upon during the Asilomar Conference, the Montreal Declaration for Responsible AI, and the IEEE's Ethics of Autonomous Systems initiative, among others;[277] however, these principles are not without criticism, especially with regard to the people chosen to contribute to these frameworks.[278]

其他伦理框架的发展包括在阿西洛马会议期间决定的内容、蒙特利尔负责任人工智能宣言以及 IEEE 的自主系统伦理倡议等;[277] 然而,这些原则并非没有批评,特别是关于参与这些框架制定的人员的选择。[278]

Promotion of the wellbeing of the people and communities that these technologies affect requires consideration of the social and ethical implications at all stages of AI system design, development and implementation, and collaboration between job roles such as data scientists, product managers, data engineers, domain experts, and delivery managers.[279]

促进这些技术影响的人民和社区的福祉,需要在人工智能系统设计、开发和实施的各个阶段考虑社会和伦理影响,并在数据科学家、产品经理、数据工程师、领域专家和交付经理等职位之间进行合作。[279]

In 2024, the UK AI Safety Institute released 'Inspect', a testing toolset for AI safety evaluations. It is published under an MIT open-source licence, is freely available on GitHub, and can be improved with third-party packages. It can be used to evaluate AI models in a range of areas including core knowledge, ability to reason, and autonomous capabilities.[280]

英国人工智能安全研究所于 2024 年发布了一套名为“Inspect”的测试工具集,用于人工智能安全评估,采用 MIT 开源许可证,免费提供在 GitHub 上,并可以通过第三方软件包进行改进。它可以用于评估人工智能模型在多个领域的表现,包括核心知识、推理能力和自主能力。[280]

Regulation 规章

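The first global AI Safety Summit was held in 2023 and issued a declaration calling for international cooperation.

2023 年举行了第一次全球人工智能安全峰会,并发布了呼吁国际合作的声明。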