Imagine using tens of thousands of GPUs to train your neural network. Training neural networks on multiple GPUs has become common, and all deep learning frameworks now provide optimized multi-GPU and multi-machine training. Allreduce operations, used to sum gradients across multiple GPUs, have usually been implemented using rings [1] [2] to achieve full bandwidth. The downside of rings is that latency scales linearly with the number of GPUs, which prevents scaling beyond hundreds of GPUs. Enter NCCL 2.4.

Many large-scale experiments have replaced the flat ring with a hierarchical, 2D ring algorithm [3] [4] [5] to get reasonably good bandwidth while lowering latency.

NCCL 2.4 now adds double binary trees, which offer full bandwidth and a logarithmic latency even lower than 2D ring latency.
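To see where the linear and logarithmic latencies come from, here is a rough step-count sketch. It makes two assumptions. The ring allreduce is taken to be the usual reduce-scatter followed by an all-gather, which is 2(p-1) sequential steps. The tree allreduce is taken to be a reduce followed by a broadcast over a tree about log2 p deep. The sketch ignores message size, bandwidth, and pipelining, and the function names are hypothetical.

```c
/* Latency-only model (an assumption): count the sequential communication
 * steps of an allreduce over p ranks, ignoring message size, bandwidth,
 * and pipelining. */

/* Flat ring: a reduce-scatter (p-1 steps) followed by an all-gather
 * (p-1 steps). */
long ring_allreduce_steps(long p) { return 2 * (p - 1); }

/* Binary tree: a reduce up to the root, then a broadcast back down.
 * Each phase takes depth = ceil(log2 p) steps. */
long tree_allreduce_steps(long p) {
  long depth = 0;
  while ((1L << depth) < p) depth++;  /* smallest depth with 2^depth >= p */
  return 2 * depth;
}
```

For p = 24,576 this gives 49,150 ring steps versus 30 tree steps. The model only illustrates the scaling trend and does not predict measured latencies.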

Double binary trees

Double binary trees were introduced in MPI in 2009 [6]. They combine full bandwidth for broadcast and reduce operations (which can be combined into an allreduce by performing a reduce, then a broadcast) with logarithmic latency, enabling good performance on small and medium-sized operations.

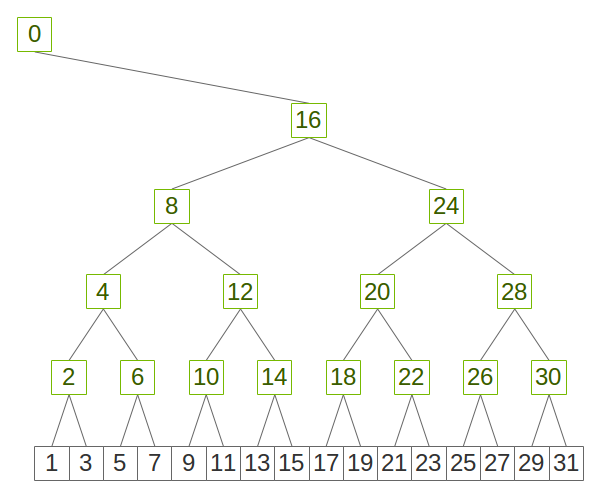

In NCCL, we build binary trees using an easy-to-implement pattern which maximizes locality, as shown in figure 1.

Figure 1. Binary tree using a power-of-two pattern

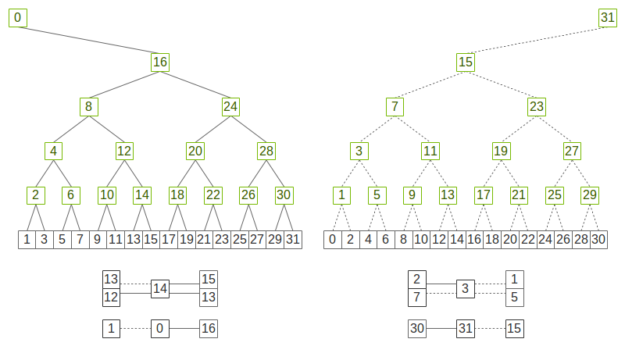

Double binary trees rely on the fact that, in a binary tree, half or fewer of the ranks are nodes (ranks with children) and half or more are leaves. Therefore, for each binary tree we can build a second tree that uses the leaves as nodes and vice versa. There might be one rank that is a leaf in both trees, but no rank is a node in both trees.

Figure 2 shows how we can use the pattern above to build a double binary tree by flipping the tree to invert nodes and leaves.

Figure 2. Two complementary binary trees where each rank is at most a node in one tree and a leaf in the other.
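Here is a minimal C sketch of this construction. It assumes ranks 0..n-1, with rank 0 as the root, and places each rank at the level given by its lowest set bit, so odd ranks are leaves. It follows the power-of-two idea of Figure 1 and the flipping of Figure 2, but it is an illustration, not NCCL's implementation. The names btree, btree_mirror, and no_rank_internal_in_both are hypothetical. Mirroring (r -> n-1-r) only swaps nodes and leaves when n is even, so the sketch covers that case.

```c
#include <stdbool.h>

/* Hypothetical helper: parent and children of rank r in a binary tree over
 * ranks 0..n-1 built from a power-of-two pattern. Rank 0 is the root;
 * -1 means "none". */
void btree(int n, int r, int *parent, int *child0, int *child1) {
  *parent = *child0 = *child1 = -1;
  if (r == 0) {
    /* The root's only child is the largest power of two below n. */
    int top = 1;
    while (2 * top < n) top *= 2;
    if (n > 1) *child1 = top;
    return;
  }
  int b = r & -r;                /* lowest set bit: the rank's level */
  int up = (r ^ b) | (b << 1);   /* usual parent, one level higher */
  if (up >= n) up = r ^ b;       /* past the right edge: attach further up */
  *parent = up;
  if (b > 1) {                   /* odd ranks (b == 1) are leaves */
    *child0 = r - b / 2;         /* left child always exists */
    int step = b / 2;            /* shrink until the right child is < n */
    while (step > 0 && r + step >= n) step /= 2;
    if (step > 0) *child1 = r + step;
  }
}

/* Second tree for an even n: mirror every rank (r -> n-1-r). This swaps
 * even and odd ranks, and therefore swaps nodes and leaves. */
void btree_mirror(int n, int r, int *parent, int *child0, int *child1) {
  int p, c0, c1;
  btree(n, n - 1 - r, &p, &c0, &c1);
  *parent = p < 0 ? -1 : n - 1 - p;
  *child0 = c0 < 0 ? -1 : n - 1 - c0;
  *child1 = c1 < 0 ? -1 : n - 1 - c1;
}

/* Checks the double-tree property: no rank has children in both trees. */
bool no_rank_internal_in_both(int n) {
  for (int r = 0; r < n; r++) {
    int p, a0, a1, b0, b1;
    btree(n, r, &p, &a0, &a1);
    btree_mirror(n, r, &p, &b0, &b1);
    bool node_in_first = a0 >= 0 || a1 >= 0;
    bool node_in_second = b0 >= 0 || b1 >= 0;
    if (node_in_first && node_in_second) return false;
  }
  return true;
}
```

For n = 8, the first tree is 0 → 4 → {2, 6}, with 2 → {1, 3} and 6 → {5, 7}. The mirrored tree is 7 → 3 → {5, 1}, with 5 → {6, 4} and 1 → {2, 0}. The even ranks are the nodes of the first tree and the odd ranks are the nodes of the second.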

If you superimpose the two trees, every rank has two parents and two children, except for the root ranks, which have only one parent and one child. If each of the two trees processes half of the data, each rank receives at most half of the data twice and sends at most half of the data twice, which matches rings in terms of the amount of data sent and received.
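Here is a worked check of this claim. Let S be the message size and p the number of ranks, and count the bytes one rank receives during an allreduce (a reduce, then a broadcast). The comparison assumes the usual ring allreduce, a reduce-scatter followed by an all-gather. In the reduce phase, a rank receives S/2 from each of its (at most) two children. In the broadcast phase, it receives S/2 from each of its two parents. Sends are symmetric.

$$
\text{double tree:}\quad
\underbrace{2\cdot\tfrac{S}{2}}_{\text{reduce}} + \underbrace{2\cdot\tfrac{S}{2}}_{\text{broadcast}} \le 2S,
\qquad
\text{ring:}\quad
\underbrace{\tfrac{p-1}{p}\,S}_{\text{reduce-scatter}} + \underbrace{\tfrac{p-1}{p}\,S}_{\text{all-gather}} = \frac{2(p-1)}{p}\,S \;\xrightarrow{\,p\to\infty\,}\; 2S
$$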

Performance at scale

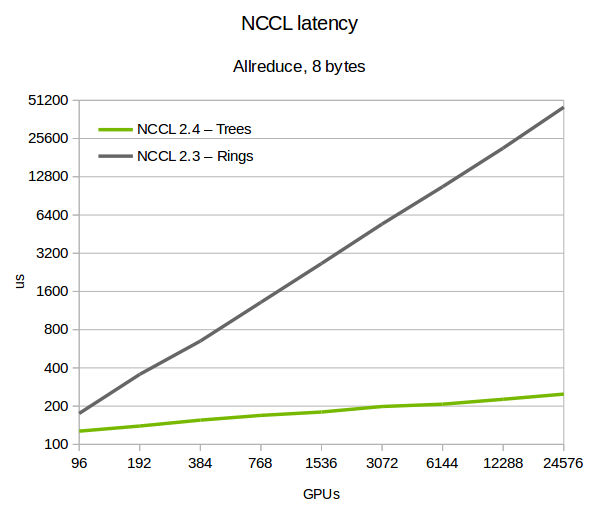

We tested NCCL 2.4 on various large machines, including the Summit [7] supercomputer, with up to 24,576 GPUs. As Figure 3 shows, trees significantly improve latency. The gap relative to rings grows with scale, reaching up to 180x at 24k GPUs.

Figure 3. NCCL latency on up to 24,576 GPUs

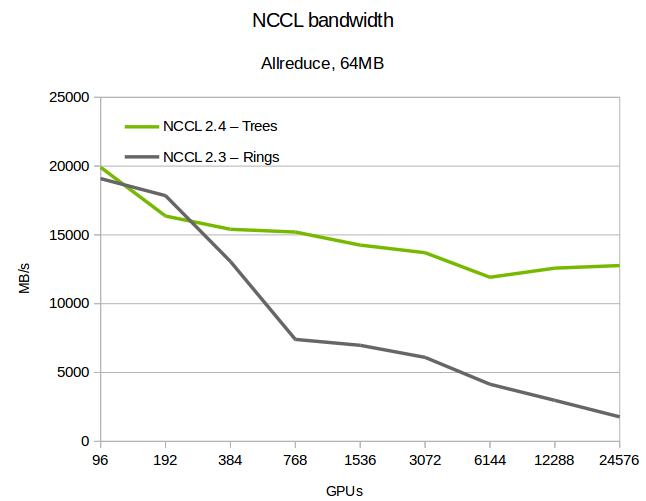

We confirmed that the system maintains full bandwidth with double binary trees. At scale, bandwidth degrades slightly when traffic crosses L3 switches in the InfiniBand fabric, which we believe is due to a mismatch between the NCCL communication pattern and the InfiniBand routing algorithms.

This is not perfect and might be improved in the future. Even so, trees still show a clear advantage when limited in bandwidth, because of their small initial latency. When rings do deliver greater bandwidth, however, NCCL automatically switches back to them.
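NCCL's actual selection logic is not described here. The hypothetical toy alpha-beta model below shows how a latency advantage can outweigh a bandwidth deficit. It rests on several assumptions:

- Each step costs a latency alpha.
- Links have bandwidth bw.
- Trees are fully pipelined but reach only a fraction tree_bw_frac of bw.
- Step counts are 2(p-1) for the ring and 2·ceil(log2 p) for the tree.

All names and parameter values are illustrative.

```c
/* Toy alpha-beta cost model for choosing an allreduce algorithm.
 * This is an assumption for illustration, not NCCL's tuner.
 * alpha: per-step latency (s); bw: link bandwidth (bytes/s);
 * tree_bw_frac: fraction of bw reached by the tree (< 1 models the
 * degradation seen when crossing switches). */
static double log2_ceil(long p) {
  long d = 0;
  while ((1L << d) < p) d++;
  return (double)d;
}

/* Ring: 2(p-1) latency steps; each rank moves 2(p-1)/p of the data. */
double ring_time(long p, double bytes, double alpha, double bw) {
  return 2.0 * (p - 1) * alpha + 2.0 * (p - 1) / p * bytes / bw;
}

/* Pipelined double tree: 2*ceil(log2 p) latency steps; each rank moves
 * about 2x the data at a reduced bandwidth. */
double tree_time(long p, double bytes, double alpha, double bw,
                 double tree_bw_frac) {
  return 2.0 * log2_ceil(p) * alpha + 2.0 * bytes / (tree_bw_frac * bw);
}

typedef enum { ALGO_RING, ALGO_TREE } algo;

/* Picks whichever algorithm the model predicts to be faster. */
algo pick_algo(long p, double bytes, double alpha, double bw,
               double tree_bw_frac) {
  return tree_time(p, bytes, alpha, bw, tree_bw_frac) <
                 ring_time(p, bytes, alpha, bw)
             ? ALGO_TREE
             : ALGO_RING;
}
```

Take p = 1024, alpha = 5 µs, bw = 10 GB/s, and tree_bw_frac = 0.8. With these values the model picks the tree for a 1 kB message and the ring for a 10 GB message. At tree_bw_frac = 1.0 it picks the tree for both.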

Figure 4. NCCL bus bandwidth on up to 24,576 GPUs

Effect on DL training

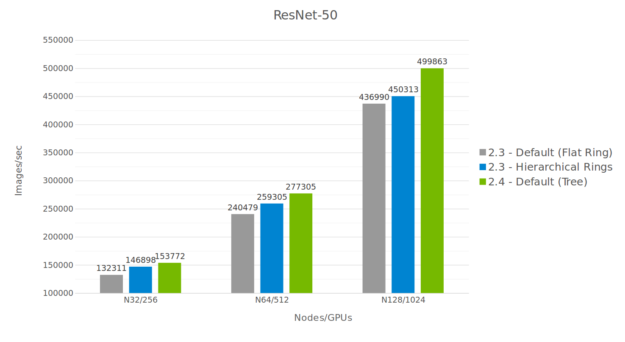

Figure 5 shows that the performance improvement on DL training is significant and grows as we scale to larger numbers of GPUs.

We compared NCCL 2.3 and NCCL 2.4, as well as the 2D hierarchical rings using NCCL 2.3. The hierarchical ring is a 2D ring (intra-node/inter-node being the 2 dimensions) which performs a reduce-scatter operation inside the node, then multiple all-reduce operations between nodes, then an all-gather operation inside the node again.
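This sketch applies latency-only step counting to the hierarchical ring just described, with m nodes of g GPUs each. It assumes bandwidth can be ignored and that the inter-node all-reduces run concurrently, each as a ring over the m nodes. Under those assumptions the hierarchical ring needs 2(g-1) intra-node steps plus 2(m-1) inter-node steps. A flat ring over all p = g·m GPUs needs 2(gm-1). The function names are hypothetical.

```c
/* Latency-only step count (an assumption: ignores bandwidth) of a 2D
 * hierarchical ring allreduce over m nodes with g GPUs each. */
long hier_ring_steps(long g, long m) {
  long intra = 2 * (g - 1);  /* reduce-scatter + all-gather inside a node */
  long inter = 2 * (m - 1);  /* ring all-reduces between nodes, in parallel */
  return intra + inter;
}

/* Flat ring over all p = g * m GPUs: reduce-scatter + all-gather. */
long flat_ring_steps(long g, long m) { return 2 * (g * m - 1); }
```

With g = 8 and m = 1,024 (8,192 GPUs), this gives 2,060 steps versus 16,382 for the flat ring. The ratio approaches g as m grows. In this model the hierarchical ring's latency therefore stays linear in the number of nodes, which is consistent with its advantage remaining roughly constant at scale.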

Figure 5. Performance comparison on ResNet50

While the hierarchical rings perform better than non-hierarchical rings, their advantage at scale remains constant. The tree algorithm, on the other hand, offers an increasing advantage as we scale.

Other features

Network error handling

NCCL operations behave like CUDA kernels. Once an operation is launched on a CUDA stream, the user waits for its completion using stream semantics, e.g. cudaStreamQuery or cudaStreamSynchronize. This is convenient because the NCCL operation can start as soon as the CUDA kernel producing the data completes, but it doesn't let NCCL report errors during communication.

However, once we start using the network between nodes, network errors can occur and prevent the NCCL operation from completing, causing a hang. This becomes increasingly important as we scale up. NCCL 2.4 introduces two new functions to handle this: ncclCommGetAsyncError and ncclCommAbort.

Programs can call ncclCommGetAsyncError in a loop while waiting for operations to complete. If an error occurs, they can abort the application, or abort only the communicator with ncclCommAbort and then create a new communicator with the remaining nodes.

The documentation includes an example that uses these two functions. Here is a simplified example illustrating their usage:

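```c
#include <stdio.h>
#include <cuda_runtime.h>
#include <nccl.h>

// Waits for the work queued on `stream` to complete while polling `comm`
// for asynchronous (e.g. network) errors, so a failure cannot hang forever.
// Returns 0 on success, 1 on an abnormal error, and 2 if the communicator
// was aborted after an async error (the caller may then create a new one).
int ncclStreamSynchronize(cudaStream_t stream, ncclComm_t comm) {
  while (1) {
    cudaError_t cudaErr = cudaStreamQuery(stream);
    ncclResult_t ncclAsyncErr, ncclErr;
    ncclErr = ncclCommGetAsyncError(comm, &ncclAsyncErr);

    if (cudaErr == cudaSuccess)
      return 0;  // Stream work, including the NCCL operation, completed

    if (cudaErr != cudaErrorNotReady || ncclErr != ncclSuccess) {
      printf("CUDA/NCCL Error : %d/%d\n", (int)cudaErr, (int)ncclErr);
      return 1;  // Abnormal error
    }

    if (ncclAsyncErr != ncclSuccess) {
      // Async network error: stop and destroy the communicator
      ncclErr = ncclCommAbort(comm);
      if (ncclErr != ncclSuccess) {
        printf("NCCL Comm Abort error : %d\n", (int)ncclErr);
        return 1;  // Abnormal error
      }
      return 2;  // Normal error: may recreate a new comm
    }
    // Otherwise the operation is still in flight: keep polling
  }
}
```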

This function can be generalized to also poll other asynchronous operations, such as MPI, socket, or other I/O operations.
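Here is a minimal sketch of that generalization. It assumes each pending operation can be wrapped in a non-blocking poll callback. Examples include the cudaStreamQuery/ncclCommGetAsyncError pair from the function above, MPI_Test for an MPI request, or a non-blocking socket check. The types and functions (op_status, pollable, wait_all, countdown, poll_countdown) are hypothetical and not part of NCCL. The countdown poller only stands in for a real operation.

```c
#include <stddef.h>

/* Hypothetical status of one pending asynchronous operation. */
typedef enum { OP_DONE, OP_PENDING, OP_FAILED } op_status;

/* Hypothetical interface: a non-blocking check plus its context
 * (e.g. a CUDA stream and NCCL communicator, an MPI request, a socket). */
typedef struct {
  op_status (*poll)(void *ctx);
  void *ctx;
} pollable;

/* Polls every operation until all are done or one fails.
 * Returns -1 if all completed, otherwise the index of the first failure. */
int wait_all(pollable *ops, size_t n) {
  for (;;) {
    size_t done = 0;
    for (size_t i = 0; i < n; i++) {
      op_status s = ops[i].poll(ops[i].ctx);
      if (s == OP_FAILED) return (int)i;
      if (s == OP_DONE) done++;
    }
    if (done == n) return -1;
  }
}

/* Stand-in poller: reports OP_PENDING for `remaining` polls, then `final`. */
typedef struct { int remaining; op_status final; } countdown;

op_status poll_countdown(void *ctx) {
  countdown *c = ctx;
  if (c->remaining > 0) { c->remaining--; return OP_PENDING; }
  return c->final;
}
```

A production loop would typically yield or sleep between rounds instead of spinning. On failure, it would run the recovery specific to that operation, such as ncclCommAbort for an NCCL communicator.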

Support for more networks

NCCL 2.4 comes with native support for TCP/IP Sockets and InfiniBand Verbs. TCP/IP sockets should work on most networks but can also be bandwidth- and latency-limited due to limitations in the kernel driver. CPU affinity can also be complex to handle.

The InfiniBand verbs library enables an application to bypass the kernel and handle all network communication directly from user space. This is the preferred API on InfiniBand and RDMA over Converged Ethernet (RoCE) capable hardware.

Some other network providers have network APIs that provide better performance than TCP/IP sockets. These vendors can get the best performance from NCCL by implementing an external network plugin, which NCCL uses when it is present. The plugin is provided as a library named libnccl-net.so. NCCL includes an example in ext-net/dummy. For a complete example, see the plugin for the libfabric API.

Get NCCL 2.4 Today

You can start scaling your applications to massive numbers of GPUs today. Pre-built NCCL packages are available from the download page, and the source code is available on GitHub.

References

[1] Baidu Allreduce

[2] Horovod

[3] Xianyan Jia, Shutao Song, Wei He, Yangzihao Wang, Haidong Rong, Feihu Zhou, Liqiang Xie, Zhenyu Guo, Yuanzhou Yang, Liwei Yu, Tiegang Chen, Guangxiao Hu, Shaohuai Shi, Xiaowen Chu; Highly Scalable Deep Learning Training System with Mixed-Precision: Training ImageNet in Four Minutes

[4] Hiroaki Mikami, Hisahiro Suganuma, Pongsakorn U-chupala, Yoshiki Tanaka, Yuichi Kageyama; ImageNet/ResNet-50 Training in 224 Seconds

[5] Chris Ying, Sameer Kumar, Dehao Chen, Tao Wang, Youlong Cheng; Image Classification at Supercomputer Scale

[6] Peter Sanders, Jochen Speck, Jesper Larsson Träff; Two-tree algorithms for full bandwidth broadcast, reduction and scan (2009)

[7] Summit Supercomputer
