AI Advances Are Accelerating, and We’re Not Ready

AI is improving more quickly than we realize. The economic and societal impact could be massive.

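When OpenAI introduced ChatGPT in 2022, people could immediately see that artificial intelligence had made a huge leap. We all speak a language, after all, and could appreciate how the chatbot answered questions in a fluid, close-to-human way. AI has made enormous strides since then, but many of us are, to put it politely, too unsophisticated to notice.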

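Max Tegmark, a physics professor at the Massachusetts Institute of Technology, says the limits of our own expertise make it harder to recognize the unsettling pace of technological progress. Most people aren't math whizzes and may not know that, in just the past few years, AI has gone from mastering high school algebra to ninja-level calculus. Similarly, there are relatively few musical virtuosos in the world, but AI has recently become adept at reading sheet music, understanding music theory and even composing mainstream music. "A lot of people are underestimating how much has happened in a very short time," Tegmark says. "Things are moving very fast now."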

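In San Francisco, still the center of the AI movement, these advances can be tracked through waves of new machine-learning techniques, chatbot features and podcast buzzwords. In February, OpenAI released a tool called Deep Research that works like a resourceful colleague, answering in-depth questions by digging up facts on the web, synthesizing the information and producing reports complete with charts. In another major development, OpenAI and Anthropic (co-founded by Chief Executive Officer Dario Amodei and a group of former OpenAI engineers) have both built tools that let users control whether a chatbot "reasons": users can have the chatbot think about a problem for longer to arrive at a more accurate or more thorough answer.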

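Another popular trend is so-called agentic AI: autonomous programs that can, in theory, carry out tasks such as sending emails or booking restaurant reservations without a user's supervision. Techies are also buzzing about "vibe coding," which isn't a new West Coast meditation practice but the art of sketching out a general idea and letting a popular coding assistant, such as Microsoft Corp.'s GitHub Copilot or Cursor from startup Anysphere Inc., run with it.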

As developers blissfully vibe code, there’s also been an unmistakable vibe shift in Silicon Valley. Just a year ago, breakthroughs in AI were usually accompanied by furrowed brows and wringing hands, as tech and political leaders fretted about the safety implications. That changed sometime around February, when US Vice President JD Vance, speaking at a global summit in Paris focused on mitigating harms from AI, inveighed against any regulation that might impede progress. “I’m not here this morning to talk about AI safety,” he said. “I’m here to talk about AI opportunity.”

When Vance and President Donald Trump took office, they dashed any hope of new government rules that might slow the AI juggernauts. On his third day in office, Trump rescinded an executive order from his predecessor, Joe Biden, that set AI safety standards and asked tech companies to submit safety reports for new products. At the same time, AI startups have softened their calls for regulation. In 2023, OpenAI CEO Sam Altman told Congress that the possibility AI could run amok and hurt humans was among his “areas of greatest concern” and that companies should have to get licenses from the government to operate new models. At the TED Conference in Vancouver this April, he said he no longer favored that approach, because he’d “learned more about how the government works.”

It’s not unusual in Silicon Valley to see tech companies and their leaders contort their ideologies to fit the shifting political winds. Still, the intensity over the past few months has been startling to watch. Many tech companies have stopped highlighting existential AI safety concerns, shed employees focused on the issue (along with diversity, sustainability and other Biden-era priorities) and become less apologetic about doing business with militaries at home and abroad, bypassing concerns from staff about placing deadly weapons in the hands of AI. Rob Reich, a professor of political science and senior fellow at the Institute for Human-Centered AI at Stanford University, says “there’s a shift to explicitly talking about American advantage. AI security and sovereignty are the watchwords of the day, and the geopolitical implications of building powerful AI systems are stronger than ever.”

If Trump’s policies are one reason for the change, another is the emergence of DeepSeek and its talented, enigmatic CEO, Liang Wenfeng. When the Chinese AI startup released its R1 model in the US in January, analysts marveled at the quality of a product from a company that had raised far less capital than its US rivals and was supposedly using data centers with less powerful Nvidia Corp. chips. DeepSeek’s chatbot shot to the top of the charts on app stores, and US tech stocks promptly cratered on the possibility that the upstart had figured out a more efficient way to reap AI’s gains.

The uproar has quieted since then, but Trump has further restricted the sale of powerful American AI chips to China, and Silicon Valley now watches DeepSeek and its Chinese peers with a sense of urgency. “Everyone has to think very carefully about what is at stake if we cede leadership,” says Alex Kotran, CEO of the AI Education Project.

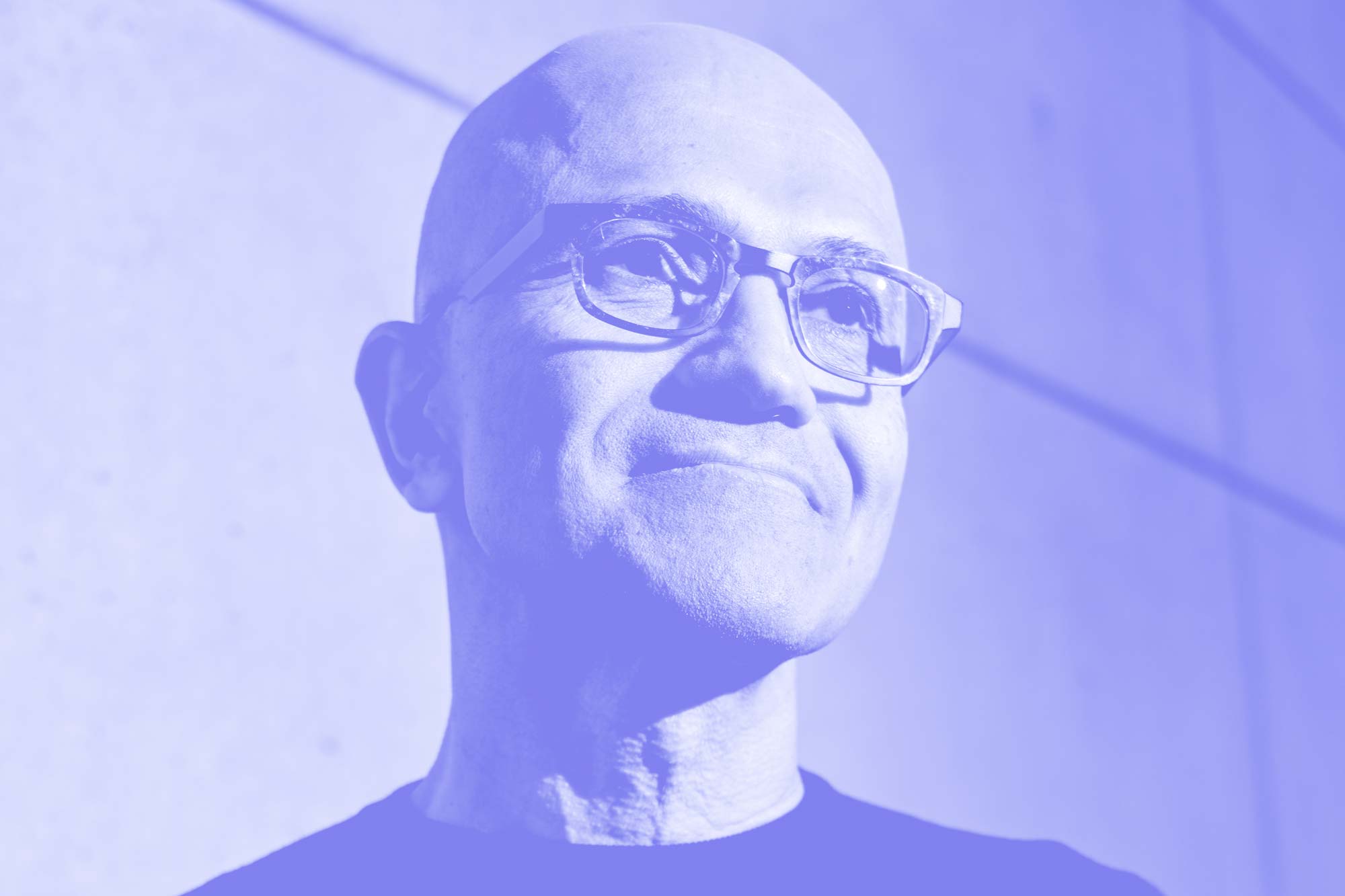

Losing to China isn't the only potential downside, though. AI-generated content is becoming so pervasive online that it could soon sap the web of any practical utility, and the Pentagon is using machine learning to hasten humanity's possible contact with alien life. Let's hope they like us. Nor has this geopolitical footrace calmed the widespread fear of economic damage and job losses. Take just one field: computer programming. Sundar Pichai, CEO of Alphabet Inc., said on an earnings call in April that AI now generates "well over 30%" of all new code for the company's products. Garry Tan, CEO of the startup accelerator Y Combinator, said on a podcast that for a quarter of the startups in his winter program, 95% of their lines of code were AI-generated.

MIT’s Tegmark, who’s also president of an AI safety advocacy organization called the Future of Life Institute, finds solace in his belief that a human instinct for self-preservation will ultimately kick in: Pro-AI business leaders and politicians “don’t want someone to build an AI that will overthrow the government any more than they want plutonium to be legalized.” He remains concerned, though, that the inexorable acceleration of AI development is occurring just outside the visible spectrum of most people on Earth, and that it could have economic and societal consequences beyond our current imagination. “It sounds like sci-fi,” Tegmark says, “but I remind you that ChatGPT also sounded like sci-fi as recently as a few years ago.”