If you enjoy performance engineering and peeling back abstraction layers to ask underlying subsystems to explain themselves, this article’s for you. The context is a chronic Redis latency problem, and you are about to tour a practical example of using BPF and profiling tools in concert with standard metrics to reveal unintuitive behaviors of a complex system.

如果您喜欢性能工程并且喜欢剥开抽象层来询问基础子系统解释自己,请阅读这篇文章。这个背景是关于一个慢性的 Redis 延迟问题,您将要看到一个实际示例,展示如何结合 BPF 和性能分析工具以及标准度量标准来揭示复杂系统的不直觉行为。

Beyond the tools and techniques, we also use an iterative hypothesis-testing approach to compose a behavior model of the system dynamics. This model tells us what factors influence the problem's severity and triggering conditions.

除了工具和技术之外,我们还使用迭代的假设测试方法来构建系统动力学行为模型。这个模型告诉我们哪些因素影响问题的严重程度和触发条件。

Ultimately, we find the root cause, and its remedy is delightfully boring and effective. We uncover a three-phase cycle involving two distinct saturation points and a simple fix to break that cycle. Along the way, we inspect aspects of the system’s behavior using stack sampling profiles, heat maps and flamegraphs, experimental tuning, source and binary analysis, instruction-level BPF instrumentation, and targeted latency injection under specific entry and exit conditions.

最终,我们找到了根本原因,而它的解决方法令人愉快地无聊而有效。我们发现了一个包含两个明显饱和点和一个简单修复以打破这个循环的三阶段周期。在过程中,我们使用堆栈抽样分析、热图和火焰图、实验性调整、源代码和二进制分析、指令级 BPF 工具、以及在特定输入和输出条件下进行有针对性的延迟注入来检查系统行为的各个方面。

If you are short on time, the takeaways are summarized at the end. But the journey is the fun part, so let's dig in!

如果你时间紧迫,总结内容放在最后。但旅程才是有趣的部分,让我们开始吧!

Introducing the problem: Chronic latency

介绍问题:慢性延迟

GitLab makes extensive use of Redis, and, on GitLab.com SaaS, we use separate Redis clusters for certain functions. This tale concerns a Redis instance acting exclusively as a least recently used (LRU) cache.

GitLab 广泛使用 Redis,在 GitLab.com 的 SaaS 中,我们为某些功能使用单独的 Redis 集群。这个故事涉及一个 Redis 实例,专门用作最近最少使用(LRU)缓存。

This cache had a chronic latency problem that started occurring intermittently over two years ago and in recent months had become significantly worse: Every few minutes, it suffered from bursts of very high latency and corresponding throughput drop, eating into its Service Level Objective (SLO). These latency spikes impacted user-facing response times and burned error budgets for dependent features, and this is what we aimed to solve.

这个缓存存在慢性延迟问题,两年前开始间歇性发生,最近几个月变得严重:每隔几分钟,出现非常高的延迟和相应的吞吐量下降,影响了其服务水平目标(SLO)。这些延迟高峰影响了用户响应时间,减少了依赖功能的错误预算,这是我们的解决目标。

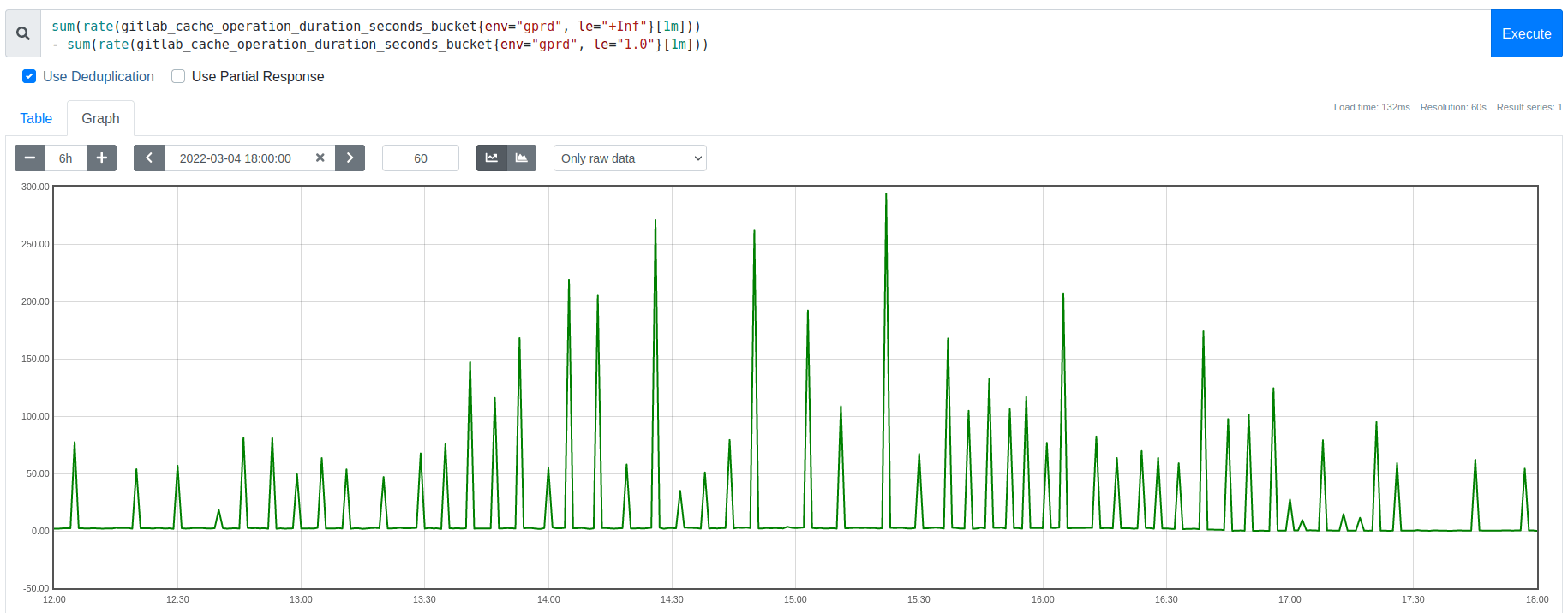

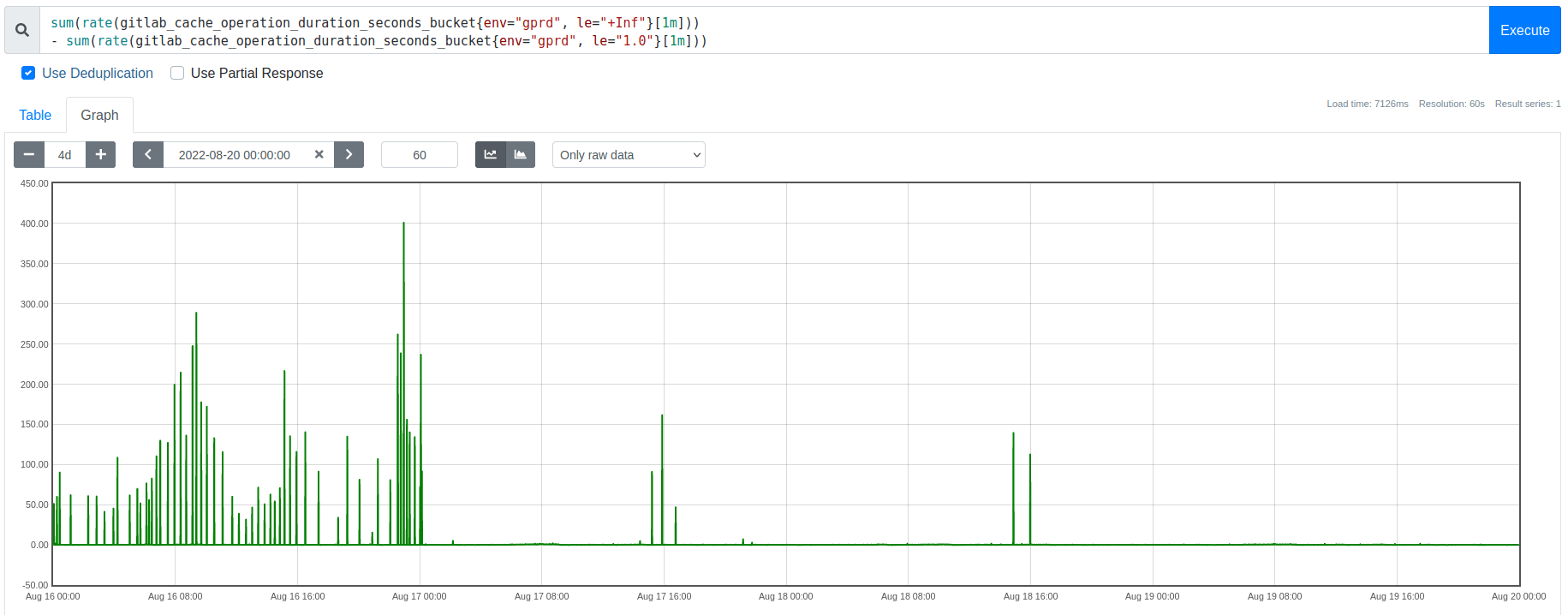

Graph: Spikes in the rate of extremely slow (1 second) Redis requests, each corresponding to an eviction burst

图表:极慢请求速率(1 秒)的波动,每个波动对应一个逐出爆发

In prior work, we had already completed several mitigating optimizations. These sufficed for a while, but organic growth had resurfaced this as an important long-term scaling problem. We had also already ruled out externally triggered causes, such as request floods, connection rate spikes, host-level resource contention, etc. These latency spikes were consistently associated with memory usage reaching the eviction threshold (maxmemory), not by changes in client traffic patterns or other processes competing with Redis for CPU time, memory bandwidth, or network I/O.

在先前的工作中,我们已经完成了几项缓解优化措施。这些措施一度足够,但有机增长导致这个问题再次成为一个重要的长期扩展问题。我们已经排除了外部触发的原因,比如请求洪水、连接速率激增、主机级资源争用等。这些延迟峰值一贯与内存使用达到驱逐阈值( maxmemory )有关,而不是由客户端流量模式的变化或其他进程与 Redis 竞争 CPU 时间、内存带宽或网络 I/O 引起的。

We initially thought that Redis 6.2’s new eviction throttling mechanism might alleviate our eviction burst overhead. It did not. That mechanism solves a different problem: It prevents a stall condition where a single call to performEvictions could run arbitrarily long. In contrast, during this analysis we discovered that our problem (both before and after upgrading Redis) was related to numerous calls collectively reducing Redis throughput, rather than a few extremely slow calls causing a complete stall.

我们最初以为 Redis 6.2 的新驱逐限制机制可能会减轻我们的驱逐爆发开销。但事实并非如此。该机制解决的是一个不同的问题:它防止了一个调用可能运行任意长的停顿条件。相比之下,在此分析期间,我们发现我们的问题(在升级 Redis 之前和之后)与大量调用共同降低了 Redis 的吞吐量有关,而并非少数极慢的调用导致完全停顿。

To discover our bottleneck and its potential solutions, we needed to investigate Redis’s behavior during our workload’s eviction bursts.

为了发现我们的瓶颈及其潜在解决方案,我们需要调查 Redis 在我们的工作负载驱逐阵发生时的行为。

A little background on Redis evictions

关于 Redis 驱逐的一些背景

At the time, our cache was oversubscribed, trying to hold more cache keys than the configured maxmemory threshold could hold, so evictions from the LRU cache were expected. But the dense concentration of that eviction overhead was surprising and troubling.

当时,我们的缓存超额订阅,尝试保存比配置的 maxmemory 阈值更多的缓存键,因此 LRU 缓存预期会发生剔除。但是,该剔除开销的密集集中令人惊讶和令人困扰。

Redis is essentially single-threaded. With a few exceptions, the “main” thread does almost all tasks serially, including handling client requests and evictions, among other things. Spending more time on X means there is less remaining time to do Y, so think about queuing behavior as the story unfolds.

Redis 基本上是单线程的。除了一些特例,"主"线程几乎所有任务都是串行进行,包括处理客户端请求和淘汰等。在 X 上花更多时间意味着剩余时间做 Y 的时间更少,所以在故事逐步展开时考虑排队行为。

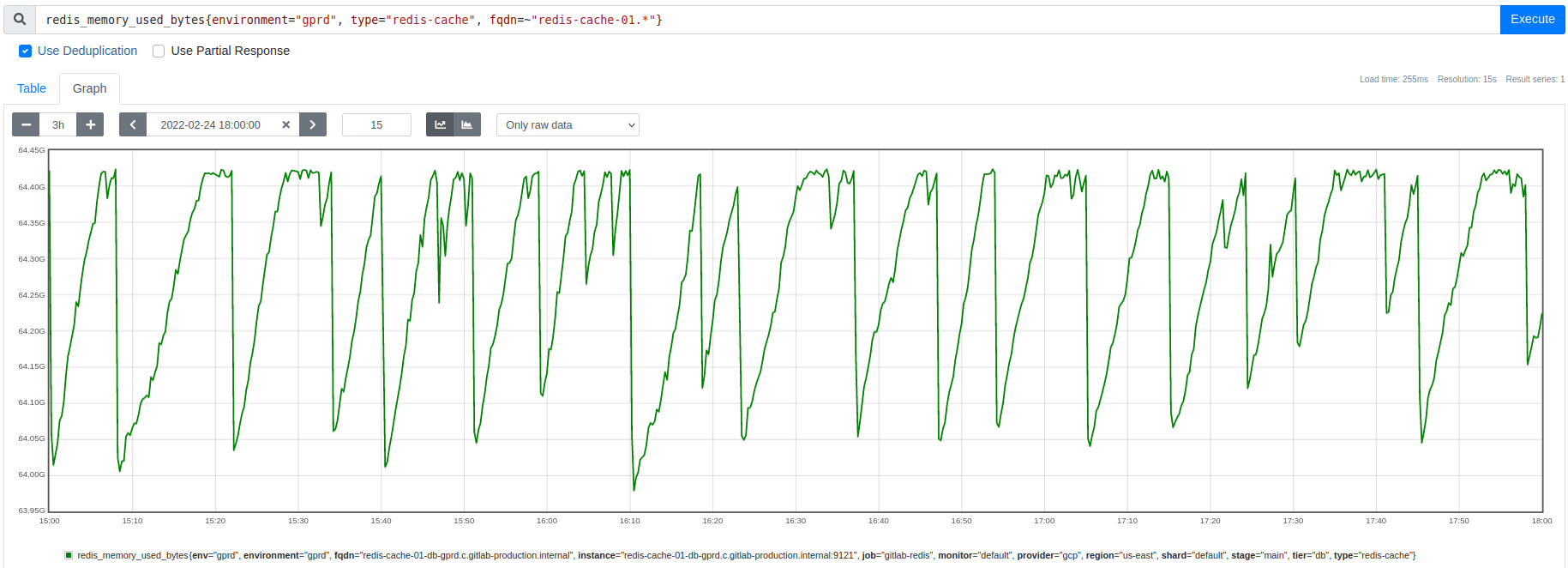

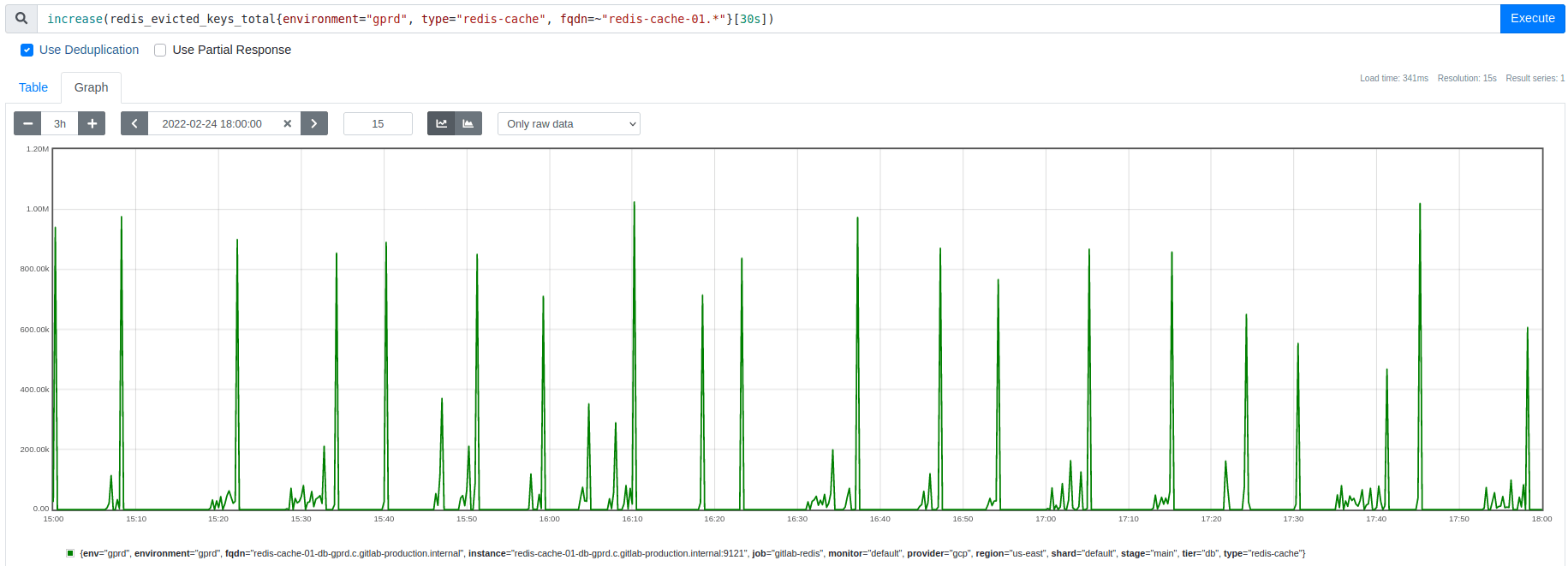

Whenever Redis reaches its maxmemory threshold, it frees memory by evicting some keys, aiming to do just enough evictions to get back under maxmemory. However, contrary to expectation, the metrics for memory usage and eviction rate (shown below) indicated that instead of a continuous steady eviction rate, there were abrupt burst events that freed much more memory than expected. After each eviction burst, no evictions occurred until memory usage climbed back up to the maxmemory threshold again.

每当 Redis 达到 maxmemory 阈值时,它通过驱逐一些键来释放内存,目标是做足够的驱逐以使其恢复到 maxmemory 以下。然而,与预期相反,内存使用和驱逐速率的指标(如下所示)表明,与持续稳定的驱逐速率相反,会发生突然的爆发事件,释放了比预期更多的内存。在每次驱逐突发事件之后,直到内存使用率再次上升到 maxmemory 阈值之前,不会发生任何驱逐。

Graph: Redis memory usage drops by 300-500 MB during each eviction burst:

图表:Redis 在每次驱逐阶段内存使用下降 300-500 MB

Graph: Key eviction spikes match the timing and size of the memory usage dips shown above

图表:关键的驱逐高峰与上面显示的内存使用量下降的时间和规模相匹配

This apparent excess of evictions became the central mystery. Initially, we thought answering that question might reveal a way to smooth the eviction rate, spreading out the overhead and avoiding the latency spikes. Instead, we discovered that these bursts are an interaction effect that we need to avoid, but more on that later.

这种明显过多的驱逐事件成为了中心谜团。最初,我们认为回答这个问题可能会揭示一种平滑驱逐率的方法,将开销分散开来,避免延迟峰值。相反,我们发现这些突发事件是我们需要避免的相互作用效应,但稍后会详细说明。

Eviction bursts cause CPU saturation

驱逐爆炸导致 CPU 饱和

As shown above, we had found that these latency spikes correlated perfectly with large spikes in the cache’s eviction rate, but we did not yet understand why the evictions were concentrated into bursts that last a few seconds and occur every few minutes.

正如上面所示,我们发现这些延迟峰值与缓存驱逐率的大幅波动完美相关,但我们尚不明白为什么这些驱逐操作会集中在持续几秒并每隔几分钟发生一次的突发情况中。

As a first step, we wanted to verify a causal relationship between eviction bursts and latency spikes.

作为第一步,我们希望验证驱逐爆发和延迟峰值之间的因果关系。

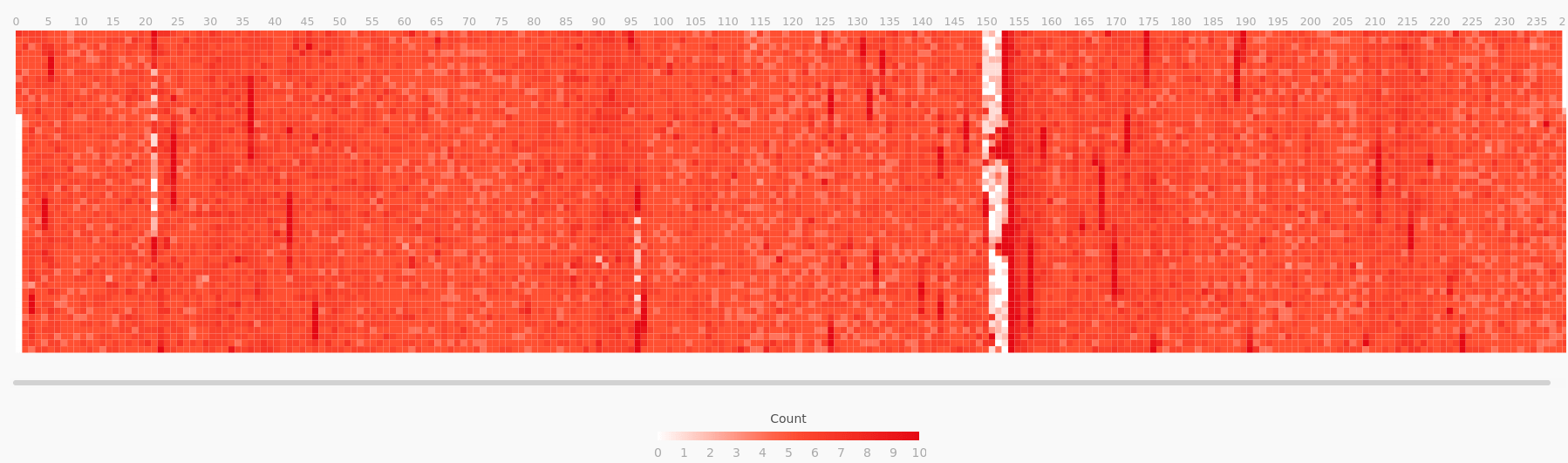

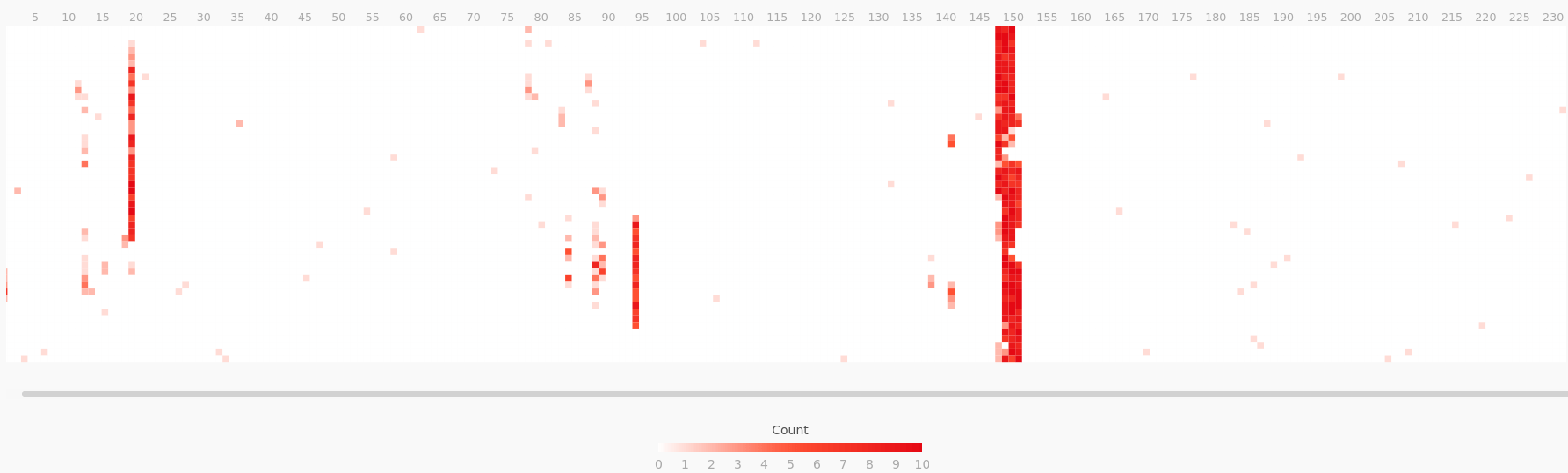

To test this, we used perf to run a CPU sampling profile on the Redis main thread. Then we applied a filter to split that profile, isolating the samples where it was calling the performEvictions function. Using flamescope, we can visualize the profile’s CPU usage as a subsecond offset heat map, where each second on the X axis is folded into a column of 20 msec buckets along the Y axis. This visualization style highlights sub-second activity patterns. Comparing these two heat maps confirmed that during an eviction burst, performEvictions is starving all other main thread code paths for CPU time.

为了测试这一点,我们使用 perf 在 Redis 主线程上运行了 CPU 采样配置文件。然后我们应用了一个过滤器来拆分该配置文件,隔离它调用 performEvictions 函数的样本。使用 flamescope ,我们可以将该配置文件的 CPU 使用率可视化为亚秒偏移热图,其中 X 轴上的每秒被折叠成沿 Y 轴的 20 毫秒桶的列。这种可视化风格突出了亚秒活动模式。比较这两个热度图确认,在驱逐突发期间, performEvictions 正在榨取所有其他主线程代码路径的 CPU 时间。

Graph: Redis main thread CPU time, excluding calls to performEvictions

图表:Redis 主线程 CPU 时间,不包括调用 performEvictions

Graph: Remainder of the same profile, showing only the calls to performEvictions

图表:保留相同概要,仅显示对 performEvictions 的调用

These results confirm that eviction bursts are causing CPU starvation on the main thread, which acts as a throughput bottleneck and increases Redis’s response time latency. These CPU utilization bursts typically lasted a few seconds, so they were too short-lived to trigger alerts but were still user impacting.

这些结果证实了驱逐突发事件导致主线程 CPU 饥饿,这会作为吞吐量瓶颈,并增加 Redis 的响应时间延迟。这些 CPU 利用率突发事件通常持续几秒,因此它们持续时间过短无法触发警报,但仍会影响用户。

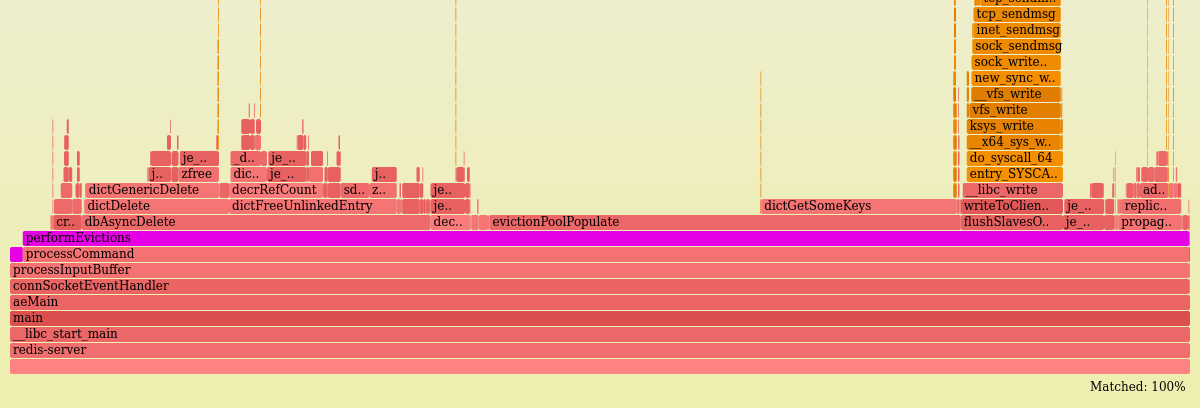

For context, the following flamegraph shows where performEvictions spends its CPU time. There are a few interesting things here, but the most important takeaways are:

为了上下文,以下火焰图显示了 performEvictions 花在 CPU 时间上的位置。这里有一些有趣的地方,但最重要的是:

- It gets called synchronously by

processCommand(which handles all client requests).

它由processCommand(处理所有客户端请求的函数)同步调用。 - It handles many of its own deletes. Despite its name, the

dbAsyncDeletefunction only delegates deletes to a helper thread under certain conditions which turn out to be rare for this workload.

它处理许多自己的删除。尽管其名称如此,dbAsyncDelete函数只在某些情况下将删除操作委托给一个辅助线程,而这些情况对于这个工作负载来说是罕见的。

For more details on this analysis, see the walkthrough and methodology.

有关此分析的更多详细信息,请参阅步骤说明和方法。

How fast are individual calls to performEvictions?

单个 performEvictions 呼叫速度有多快?

Each incoming request to Redis is handled by a call to processCommand, and it always concludes by calling the performEvictions function. That call to performEvictions is frequently a no-op, returning immediately after checking that the maxmemory threshold has not been breached. But when the threshold is exceeded, it will continue evicting keys until it either reaches its mem_tofree goal or exceeds its configured time limit per call.

每个传入的 Redis 请求都通过调用 processCommand 来处理,并最终总是调用 performEvictions 函数结束。对 performEvictions 的调用通常是一个无操作,立即返回,只在检查 maxmemory 阈值未被突破后执行。但当超过阈值时,它将继续驱逐密钥,直到达到 mem_tofree 目标或超过每次调用的配置时间限制。

The CPU heat maps shown earlier proved that performEvictions calls were collectively consuming a large majority of CPU time for up to several seconds.

之前显示的 CPU 热图证明, performEvictions 调用在几秒钟内集体消耗了大部分 CPU 时间。

To complement that, we also measured the wall clock time of individual calls.

为了补充,我们还测量了各个调用的挂钟时间。

Using the funclatency CLI tool (part of the BCC suite of BPF tools), we measured call duration by instrumenting entry and exit from the performEvictions function and aggregated those measurements into a histogram at 1-second intervals. When no evictions were occurring, the calls were consistently low latency (4-7 usecs/call). This is the no-op case described above (including 2.5 usecs/call of instrumentation overhead). But during an eviction burst, the results shift to a bimodal distribution, including a combination of the fast no-op calls along with much slower calls that are actively performing evictions:

使用 funclatency CLI 工具(BCC 工具套件的一部分),我们通过在 performEvictions 函数的进入和退出处插入测量了调用持续时间,并将这些测量值按 1 秒间隔聚合到直方图中。在没有发生驱逐时,调用持续时间一直很低延迟(每个调用 4-7 微秒)。这是上文所描述的无操作案例(包括每个调用 2.5 微秒的插装开销)。但在一次驱逐突发事件期间,结果会转变成双峰分布,其中既包括快速无操作调用,也包括正在执行驱逐的远慢调用。

$ sudo funclatency-bpfcc --microseconds --timestamp --interval 1 --duration 600 --pid $( pgrep -o redis-server ) '/opt/gitlab/embedded/bin/redis-server:performEvictions'

...

23:54:03

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 576 |************ |

4 -> 7 : 1896 |****************************************|

8 -> 15 : 392 |******** |

16 -> 31 : 84 |* |

32 -> 63 : 62 |* |

64 -> 127 : 94 |* |

128 -> 255 : 182 |*** |

256 -> 511 : 826 |***************** |

512 -> 1023 : 750 |*************** |

This measurement also directly confirmed and quantified the throughput drop in Redis requests handled per second: The call rate to performEvictions (and hence to processCommand) dropped to 20% of its norm from before the evictions began, from 25K to 5K calls per second.

这项测量还直接确认和量化了 Redis 每秒处理的请求吞吐量下降:对 performEvictions 的调用率(因此也是对 processCommand 的调用率)从清理操作开始前的正常水平下降到原来的 20%,从每秒 25K 个调用降至每秒 5K 个调用。

This has a huge impact on clients: New requests are arriving at 5x the rate they are being completed. And crucially, we will see soon that this asymmetry is what drives the eviction burst.

这对客户有重大影响:新请求到达的速度是完成速度的 5 倍。关键是,我们很快会看到这种不对称性是导致清除爆发的原因。

For more details on this analysis, see the safety check for instrumentation overhead and the results walkthrough. And for more general reference, the BPF instrumentation overhead estimate is based on these benchmark results.

有关此分析的更多详细信息,请参阅仪器化开销的安全检查和结果演示。另外,有关更一般的参考,BPF 仪器化开销估算基于这些基准测试结果。

Experiment: Can tuning mitigate eviction-driven CPU saturation?

实验:调整是否可以减轻基于驱逐的 CPU 饱和?

The analyses so far had shown that evictions were severely starving the Redis main thread for CPU time. There were still important unanswered questions (which we will return to shortly), but this was already enough info to suggest some experiments to test potential mitigations:

到目前为止的分析显示,驱逐行为严重地剥夺了 Redis 主线程的 CPU 时间。仍然存在一些重要的未解答问题(我们会很快回到这个话题),但这已经足够提供一些实验的信息来测试潜在的缓解措施。

- Can we spread out the eviction overhead so it takes longer to reach its goal but consumes a smaller percentage of the main thread’s time?

我们能否分散逐出开销,使其需要更长的时间才能达到目标,但消耗主线程时间的百分比更小? - Are evictions freeing more memory than expected due to scheduling a lot of keys to be asynchronously deleted by the lazyfree mechanism? Lazyfree is an optional feature that lets the Redis main thread delegate to an async helper thread the expensive task of deleting keys that have more than 64 elements. These async evictions do not count immediately towards the eviction loop’s memory goal, so if many keys qualify for lazyfree, this could potentially drive many extra iterations of the eviction loop.

由于安排了许多密钥通过 lazyfree 机制异步删除,驱逐是否释放的内存超过了预期? Lazyfree 是一个可选功能,允许 Redis 主线程将删除具有超过 64 个元素的密钥的昂贵任务委托给异步辅助线程。这些异步驱逐不会立即计入驱逐循环的内存目标,因此如果许多密钥符合 lazyfree 的条件,这可能会导致驱逐循环的许多额外迭代。

The answers to both turned out to be no:

结果都是"不"

- Reducing

maxmemory-eviction-tenacityto its minimum setting still did not makeperformEvictionscheap enough to avoid accumulating a request backlog. It did increase response rate, but arrival rate still far exceeded it, so this was not an effective mitigation.

将maxmemory-eviction-tenacity减少到最低设置仍然不足以使performEvictions便宜到足以避免积累请求积压。虽然加快了响应速度,但到达速率仍然远远超过它,因此这并不是一种有效的缓解措施。 - Disabling

lazyfree-lazy-evictiondid not prevent the eviction burst from dropping memory usage far belowmaxmemory. Those lazyfrees represent a small percentage of reclaimed memory. This rules out one of the potential explanations for the mystery of excessive memory being freed.

禁用lazyfree-lazy-eviction未能阻止驱逐爆发导致内存使用远远低于maxmemory。那些懒惰地释放的内存只占被回收内存的一小部分。这排除了其中一种对释放过多内存之谜的潜在解释。

Having ruled out two potential mitigations and one candidate hypothesis, at this point we return to the pivotal question: Why are several hundred extra megabytes of memory being freed by the end of each eviction burst?

在排除了两种潜在的缓解措施和一个候选假设之后,我们回到了一个关键问题:为什么在每次驱逐爆发结束时会释放几百兆字节的额外内存?

Why do evictions occur in bursts and free too much memory?

为什么驱逐会成批发生并释放太多内存?

Each round of eviction aims to free just barely enough memory to get back under the maxmemory threshold.

每一轮驱逐操作的目的都是为了释放足够少的内存,以便重新回到阈值以下。

With a steady rate of demand for new memory allocations, the eviction rate should be similarly steady. The rate of arriving cache writes does appear to be steady. So why are evictions happening in dense bursts, rather than smoothly? And why do they reduce memory usage on a scale of hundreds of megabytes rather than hundreds of bytes?

随着对新内存分配的需求稳步增长,驱逐率也应该保持稳定。缓存写入的到达率似乎是稳定的。那么为什么驱逐会突然密集发生,而不是平稳进行?而且为什么它们会导致内存使用减少数百兆字节的规模,而不是数百字节?

Some potential explanations to explore:

一些可能的解释需要探究:

- Do evictions only end when a large key gets evicted, spontaneously freeing enough memory to skip evictions for a while? No, the memory usage drop is far bigger than the largest keys in the dataset.

当一个大的键被驱逐时,才会结束驱逐,从而自动释放足够的内存以跳过一段时间的驱逐吗?不,内存使用下降远远大于数据集中最大的键。 - Do deferred lazyfree evictions cause the eviction loop to overshoot its goal, freeing more memory than intended? No, the above experiment disproved this hypothesis.

延迟惰性剔除是否会导致剔除循环超出目标,释放比预期更多的内存?不会,上述实验证明了这一假设是错误的。 - Is something causing the eviction loop to sometimes calculate an unexpectedly large value for its

mem_tofreegoal? We explore this next. The answer is no, but checking it led to a new insight.

某些原因导致驱逐循环时有时对其目标计算出意外的大值吗?我们接下来会讨论这个问题。答案是否定的,但检查这一点带来了新的见解。 - Is a feedback loop causing evictions to become somehow self-amplifying? If so, what conditions lead to entering and leaving this state? This turned out to be correct.

反馈循环使驱逐情况在某种程度上变得自我放大吗?如果是这样,是什么条件导致进入和离开这种状态?原来是正确的。

These were all plausible and testable hypotheses, and each would point towards a different solution to the eviction-driven latency problem.

这些都是可信且可验证的假设,每一个都会指向驱逐导致的延迟问题的不同解决方案。

The first two hypotheses we have already eliminated.

我们已经排除了前两个假设。

To test the next two, we built custom BPF instrumentation to peek at the calculation of mem_tofree at the start of each call to performEvictions.

为了测试接下来的两个,我们建立了自定义的 BPF 工具来窥探每次调用 performEvictions 开始时的 mem_tofree 计算。

Observing the mem_tofree calculation with bpftrace

观察 mem_tofree 计算与 bpftrace

This part of the investigation was a personal favorite and led to a critical realization about the nature of the problem.

调查的这一部分是我个人最喜欢的,也引发了对问题性质的关键认识。

As noted above, our two remaining hypotheses were:

正如上文所提到的,我们剩下的两个假设是:

- an unexpectedly large

mem_tofreegoal

一个意外的庞大目标 - a self-amplifying feedback loop

自我放大反馈循环

To differentiate between them, we used bpftrace to instrument the calculation of mem_tofree, looking at its input variables and results.

为了区分它们,我们使用 bpftrace 来对 mem_tofree 的计算进行仪器化,查看其输入变量和结果。

This set of measurements directly tests the following:

这组测量直接检验以下内容:

- Does each call to

performEvictionsaim to free a small amount of memory -- perhaps roughly the size of an average cache entry? Ifmem_tofreeever approaches hundreds of megabytes, that would confirm the first hypothesis and reveal what part of the calculation was causing that large value. Otherwise, it rules out the first hypothesis and makes the feedback loop hypothesis more likely.

每次调用performEvictions都旨在释放少量内存吗——也许大致相当于平均缓存条目的大小?如果mem_tofree接近数百兆字节,那将证实第一个假设,并揭示导致该大数值的计算的部分。否则,就排除第一个假设,使反馈循环假设更有可能。 - Does the replication buffer size significantly influence

mem_tofreeas a feedback mechanism? Each eviction adds to this buffer, just like normal writes do. If this buffer grows large (possibly partly due to evictions) and then abruptly shrinks (due to the peer consuming it), that would cause a spontaneous large drop in memory usage, ending evictions and instantly reducing memory usage. This is one potential way for evictions to drive a feedback loop.

复制缓冲区大小是否会显著影响作为反馈机制的mem_tofree?每次驱逐都会增加这个缓冲区,就像常规写入一样。如果这个缓冲区变得很大(可能部分原因是驱逐),然后突然缩小(由于对等方使用它),那将导致内存使用量的急剧下降,终止驱逐并立即减少内存使用量。这是驱逐驱动反馈循环的一种潜在方式。

To peek at the values of the mem_tofree calculation (script), we needed to isolate the correct call from performEvictions to the getMaxmemoryState function and reverse engineer its assembly to find the right instruction and register to instrument for each of the source code level variables that we wanted to capture. From that data we generate histograms for each of the following variables:

为了窥视 mem_tofree 计算(脚本)的值,我们需要从 performEvictions 到 getMaxmemoryState 函数中分离出正确的调用,并反向工程其汇编以找到每个我们想要捕获的源代码级变量的正确指令和寄存器进行检测。从这些数据中,我们为以下每个变量生成直方图:

mem_reported = zmalloc_used_memory() // All used memory tracked by jemalloc

overhead = freeMemoryGetNotCountedMemory() // Replication output buffers + AOF buffer

mem_used = mem_reported - overhead // Non-exempt used memory

mem_tofree = mem_used - maxmemory // Eviction goal

Caveat: Our custom BPF instrumentation is specific to this particular build of the redis-server binary, since it attaches to virtual addresses that are likely to change the next time Redis is compiled. But the approach is able to be generalized. Treat this as a concrete example of using BPF to inspect source code variables in the middle of a function call without having to rebuild the binary. Because we are peeking at the function’s intermediate state and because the compiler inlined this function call, we needed to do binary analysis to find the correct instrumentation points. In general, peeking at a function’s arguments or return value is easier and more portable, but in this case it would not suffice.

注意:我们的自定义 BPF 仪器是针对此特定构建的 redis-server 二进制文件的,因为它连接到虚拟地址,而这些地址在下次编译 Redis 时可能会发生变化。但是这种方法可以泛化。将其视为使用 BPF 检查源代码变量的具体示例,而无需重新构建二进制文件。因为我们正在窥视函数的中间状态,并且编译器内联了此函数调用,所以我们需要进行二进制分析来找到正确的仪器点。一般来说,窥视函数的参数或返回值更容易且更具可移植性,但在这种情况下是不够的。

The results: 结果:

- Ruled out the first hypothesis: Each call to

performEvictionshad a small target value (mem_tofree< 2 MB). This means each call toperformEvictionsdid a small amount of work. Redis’s mysterious rapid drop in memory usage cannot have been caused by an abnormally largemem_tofreetarget evicting a big batch of keys all at once. Instead, there must be many calls collectively driving down memory usage.

排除了第一个假设:每次调用performEvictions都有一个较小的目标值(mem_tofree< 2 MB)。这意味着每次调用performEvictions都只做了少量的工作。Redis 神秘的内存使用量快速下降不能是由于异常大的mem_tofree目标一次性驱逐了大批键。相反,必然是许多调用共同降低了内存使用量。 - The replication output buffers remained consistently small, ruling out one of the potential feedback loop mechanisms.

复制输出缓冲区始终保持很小,排除了潜在的反馈循环机制之一。 - Surprisingly,

mem_tofreewas usually 16 KB to 64 KB, which is larger than a typical cache entry. This size discrepancy hints that cache keys may not be the main source of the memory pressure perpetuating the eviction burst once it begins.

令人意外的是,mem_tofree通常为 16 KB 至 64 KB,比典型的缓存条目大。这种大小差异暗示缓存键可能不是导致内存压力继续进行驱逐爆发的主要原因。

All of the above results were consistent with the feedback loop hypothesis.

以上所有结果与反馈环假设一致。

In addition to answering the initial questions, we got a bonus outcome: Concurrently measuring both mem_tofree and mem_used revealed a crucial new fact – the memory reclaim is a completely distinct phase from the eviction burst.

除了回答最初的问题,我们得到了一个额外的结果:同时测量 mem_tofree 和 mem_used 揭示了一个关键的新事实 - 内存回收是一个完全不同的阶段,与驱逐爆发无关。

Reframing the pathology as exhibiting separate phases for evictions versus memory reclaim led to a series of realizations, described in the next section. From that emerged a coherent hypothesis explaining all the observed properties of the pathology.

将病理学重新界定为对于驱逐和记忆重建展现出不同阶段,引发了一系列的顿悟,详见下一部分内容。由此产生了一个连贯的假设,解释了病理学的所有观察特性。

For more details on this analysis, see methodology notes, build notes supporting the disassembly of the Redis binary, and initial interpretations.

有关此分析的更多详细信息,请参阅方法论注释,构建注释,支持 Redis 二进制文件拆解的信息,以及初步解释。

Three-phase cycle 三相周期

With the above results indicating a distinct separation between the evictions and the memory reclaim, we can now concisely characterize three phases in the cycle of eviction-driven latency spikes.

通过上述结果表明驱逐和内存回收之间的明显分离,我们现在可以简明地描述驱逐驱动的延迟峰值的循环中的三个阶段。

Graph: Diagram (not to scale) comparing memory and CPU usage to request and response rates during each of the three phases

图表:比较三个阶段期间的内存和 CPU 使用率与请求和响应速率的图表(非比例)

Phase 1: Not saturated (7-15 minutes)

阶段 1:未饱和(7-15 分钟)

- Memory usage is below

maxmemory. No evictions occur during this phase.

在这个阶段内,内存使用率低于maxmemory。没有发生清除操作。 - Memory usage grows organically until reaching

maxmemory, which starts the next phase.

内存使用量会有机地增长,直到达到maxmemory,然后开始下一个阶段。

Phase 2: Saturated memory and CPU (6-8 seconds)

阶段 2:内存和 CPU 饱和(6-8 秒)

- When memory usage reaches

maxmemory, evictions begin.

当内存使用量达到,开始执行驱逐。 - Evictions occur only during this phase, and they occur intermittently and frequently.

驱逐仅在这个阶段发生,并且间歇性和频繁发生。 - Demand for memory frequently exceeds free capacity, repeatedly pushing memory usage above

maxmemory. Throughout this phase, memory usage oscillates close to themaxmemorythreshold, evicting a small amount of memory at a time, just enough to get back undermaxmemory.

内存需求经常超出可用容量,不断将内存使用提升到maxmemory以上。在这个阶段,内存使用率在接近maxmemory阈值的范围内波动,每次逐渐释放一小部分内存,使其恢复到maxmemory以下。

Phase 3: Rapid memory reclaim (30-60 seconds)

第三阶段: 快速内存回收(30-60 秒)

- No evictions occur during this phase.

在这个阶段不会发生驱逐。 - During this phase, something that had been holding a lot of memory starts quickly and steadily releasing it.

在这个阶段,一个一直保存了很多记忆的东西开始迅速稳定地释放出来。 - Without the overhead of running evictions, CPU time is again spent mostly on handling requests (starting with the backlog that accumulated during Phase 2).

没有运行驱逐的额外开销,CPU 时间主要再次用于处理请求(从在第二阶段累积的积压开始)。 - Memory usage drops rapidly and steadily. By the time this phase ends, hundreds of megabytes have been freed. Afterwards, the cycle restarts with Phase 1.

内存使用量迅速稳定地下降。到这个阶段结束时,数百兆字节的内存已被释放。之后,循环会重新开始,进入第一阶段。

At the transition between Phase 2 and Phase 3, evictions abruptly ended because memory usage stays below the maxmemory threshold.

在第 2 阶段到第 3 阶段的过渡时,驱逐行为突然结束,因为内存使用率保持在阈值以下。

Reaching that transition point where memory pressure becomes negative signals that whatever was driving the memory demand in Phase 2 has started releasing memory faster than it is consuming it, shrinking the footprint it had accumulated during the previous phase.

一旦达到转折点,使内存压力变为负数,这意味着在第二阶段推动内存需求的因素已经开始释放内存,超过了内存的使用速度,缩小了在前一阶段积累的占用空间。

What is this mystery memory consumer that bloats its demand during Phase 2 and frees it during Phase 3?

在第二阶段膨胀需求并在第三阶段释放需求的神秘内存消费者是什么?

The mystery revealed 揭示的谜团

Modeling the phase transitions gave us some useful constraints that a viable hypothesis must satisfy. The mystery memory consumer must:

建模相变让我们得到了一些有用的约束条件,一个可行的假设必须满足。神秘的内存消耗者必须:

- quickly bloat its footprint to hundreds of megabytes on a timescale of less than 10 seconds (the duration of Phase 2), under conditions triggered by the start of an eviction burst

在触发逐出爆发开始的条件下,快速使其占地面积膨胀到几百兆字节,在不到 10 秒的时间尺度内(第 2 阶段的持续时间) - quickly release its accumulated excess on a timescale of just tens of seconds (the duration of Phase 3), under the conditions immediately following an eviction burst

在驱逐突发事件后的即刻条件下,快速释放其积累的多余量,时间尺度仅为几十秒(第 3 阶段的持续时间)

The answer: The client input/output buffers meet those constraints to be the mystery memory consumer.

答案是:客户端输入/输出缓冲区符合这些约束,成为神秘的内存消耗者。

Here is how that hypothesis plays out:

这就是假设的实际表现方式:

- During Phase 1 (healthy state), the Redis main thread’s CPU usage is already fairly high. At the start of Phase 2, when evictions begin, the eviction overhead saturates the main thread’s CPU capacity, quickly dropping response rate below the incoming request rate.

在阶段 1(健康状态)时,Redis 主线程的 CPU 使用率已经相当高。在阶段 2 开始时,当驱逐开始时,驱逐开销饱和了主线程的 CPU 容量,迅速将响应速率降低到低于传入请求速率以下。 - This throughput mismatch between arrivals versus responses is itself the amplifier that takes over driving the eviction burst. As the size of that rate gap increases, the proportion of time spent doing evictions also increases.

到达和响应之间的吞吐量不匹配是推动驱逐突发的放大器。随着速率差距的增加,花费在执行驱逐操作的时间比例也会增加。 - Accumulating a backlog of requests requires memory, and that backlog continues to grow until enough clients are stalled that the arrival rate drops to match the response rate. As clients stall, the arrival rate falls, and with it the memory pressure, eviction rate, and CPU overhead begin to reduce.

积累请求积压需要内存,直到足够多的客户端停滞,使到达速率降至响应速率。随着客户端的停滞,到达速率下降,随之内存压力、清除速率和 CPU 开销开始减少。 - At the equilibrium point when arrival rate falls to match response rate, memory demand is satisfied and evictions stop (ending Phase 2). Without the eviction overhead, more CPU time is available to process the backlog, so response rate increases above request arrival rate. This recovery phase steadily consumes the request backlog, incrementally freeing memory as it goes (Phase 3).

在平衡点时,到达速率下降以匹配响应速率,内存需求得到满足,剔除停止(结束第 2 阶段)。没有剔除开销,更多的 CPU 时间可用于处理积压,因此响应速率超过请求到达速率。该恢复阶段稳步消耗请求积压,并在进行中逐步释放内存(第 3 阶段)。 - Once the backlog is resolved, the arrival and response rates match again. CPU usage is back to its Phase 1 norm, and memory usage has temporarily dropped in proportion to the max size of Phase 2’s request backlog.

一旦积压问题解决,到达和响应速率再次匹配。 CPU 使用率恢复到 Phase 1 的正常水平,并且内存使用量暂时降低,与 Phase 2 请求积压的最大大小成比例。

We confirmed this hypothesis via a latency injection experiment showing that queuing alone explains the pathology. This outcome supports the conclusion that the extra memory demand originates from response rate falling below request arrival rate.

通过延迟插入实验,我们证实了这一假设,结果显示仅排队就可以解释这种病理。这一结果支持了额外内存需求源自响应速度低于请求到达速度的结论。

Remedies: How to avoid entering the eviction burst cycle

解决办法:如何避免进入驱逐潮周期

Now that we understand the dynamics of the pathology, we can draw confident conclusions about viable solutions.

现在我们了解了这种病理学的动态,我们可以得出关于可行解决方案的自信结论。

Redis evictions are only self-amplifying when all of the following conditions are present:

Redis 清除只有在满足以下所有条件时才会自我放大:

- Memory saturation: Memory usage reaches the

maxmemorylimit, causing evictions to start.

内存饱和:内存使用达到maxmemory限制,导致开始驱逐。 - CPU saturation: The baseline CPU usage by the Redis main thread’s normal workload is close enough to a whole core that the eviction overhead pushes it to saturation. This reduces the response rate below request arrival rate, inducing self-amplification via increased memory demand for request buffering.

CPU 饱和:Redis 主线程正常工作负载的基准 CPU 使用率接近整个核心,导致驱逐开销使其饱和。这会将响应速率降低到请求到达速率以下,并通过增加请求缓冲的内存需求诱导出自我放大效应。 - Many active clients: The saturation only lasts as long as request arrival rate exceeds response rate. Stalled clients no longer contribute to that arrival rate, so the saturation lasts longer and has a greater impact if Redis has many active clients still sending requests.

许多活跃客户端:饱和仅持续到请求到达速率超过响应速率的时候。停滞的客户端不再对该到达速率做出贡献,因此,如果 Redis 仍有许多活跃客户端发送请求,那么饱和将持续更长时间并产生更大影响。

Viable remedies include:

可行的疗法包括:

- Avoid memory saturation by any combination of the following to make peak memory usage less than the

maxmemorylimit:

通过以下任意组合避免内存饱和,使峰值内存使用量小于maxmemory限制:- Reduce cache time to live (TTL)

减少缓存时间存活期(TTL) - Increase

maxmemory(and host memory if needed, but watch out fornuma_balancingCPU overhead on hosts with multiple NUMA nodes)

增加maxmemory(如有需要,还需增加主机内存,但要小心对于带有多个 NUMA 节点的主机可能会带来的numa_balancingCPU 开销) - Adjust client behavior to avoid writing unnecessary cache entries

调整客户端行为,避免写入不必要的缓存条目 - Split the cache among multiple instances (sharding or functional partitioning, helps avoid both memory and CPU saturation)

将缓存在多个实例之间分配(分片或功能分区,有助于避免内存和 CPU 饱和)

- Reduce cache time to live (TTL)

- Avoid CPU saturation by any combination of the following to make peak CPU usage for the workload plus eviction overhead be less than 1 CPU core:

通过以下任意组合来避免 CPU 饱和,使工作负载加上驱逐开销的峰值 CPU 使用率低于 1 个 CPU 核心:- Use the fastest processor available for single-threaded instructions per second

使用可用的最快处理器来执行单线程指令每秒 - Isolate the redis-server process (particularly its main thread) from any other competing CPU-intensive processes (dedicated host, taskset, cpuset)

将 redis-server 进程(特别是其主线程)与任何其他竞争性 CPU 密集型进程(专用主机、taskset、cpuset)隔离 - Adjust client behavior to avoid unnecessary cache lookups or writes

调整客户端行为,以避免不必要的缓存查找或写入 - Split the cache among multiple instances (sharding or functional partitioning, helps avoid both memory and CPU saturation)

将缓存分配给多个实例(分片或功能分区,有助于避免内存和 CPU 饱和) - Offload work from the Redis main thread (io-threads, lazyfree)

从 Redis 主线程卸载工作(io-线程,lazyfree) - Reduce eviction tenacity (only gives a minor benefit in our experiments)

减小驱逐坚韧性(在我们的实验中只带来轻微好处)

- Use the fastest processor available for single-threaded instructions per second

More exotic potential remedies could include a new Redis feature. One idea is to exempt ephemeral allocations like client buffers from counting towards the maxmemory limit, instead applying that limit only to key storage. Alternatively, we could limit evictions to only consume at most a configurable percentage of the main thread’s time, so that most of its time is still spent on request throughput rather than eviction overhead.

更多的异国潜在疗法可能包括新的 Redis 功能。一个想法是将短暂分配(如客户端缓冲区)排除在 maxmemory 限制范围之外,而将该限制仅应用于键存储。另一种选择是将驱逐限制为消耗主线程时间的最多可配置百分比,以便大部分时间仍然用于请求吞吐量而不是驱逐开销。

Unfortunately, either of those features would trade one failure mode for another, reducing the risk of eviction-driven CPU saturation while increasing the risk of unbounded memory growth at the process level, which could potentially saturate the host or cgroup and lead to an OOM, or out of memory, kill. That trade-off may not be worthwhile, and in any case it is not currently an option.

不幸的是,以上两个功能中的任一个都会将一种故障模式换成另一种,降低了因驱逐驱动的 CPU 饱和风险,同时增加了进程级别内存增长无限风险,并有可能使主机或 cgroup 饱和并导致 OOM,或内存不足,终止。这种权衡可能不值得,而且无论如何,目前也没有选择。

Our solution 我们的解决方案

We had already exhausted the low-hanging fruit for CPU efficiency, so we focused our attention on avoiding memory saturation.

我们已经挖掘了 CPU 效率的低 hanging fruit,所以我们把注意力集中在避免内存饱和上。

To improve the cache’s memory efficiency, we evaluated which types of cache keys were using the most space and how much IDLETIME they had accrued since last access. This memory usage profile identified some rarely used cache entries (which waste space), helped inform the TTL, or time to live, tuning by first focusing on keys with a high idle time, and highlighted some useful potential cutpoints for functionally partitioning the cache.

为了提高缓存的内存效率,我们评估了哪些类型的缓存键占用了最多的空间,以及自上次访问以来它们积累了多少空间。这种内存使用配置文件确定了一些很少使用的缓存条目(浪费空间),帮助调整 TTL(存活时间) tuning,首先关注空闲时间较长的键,并突出了一些有用的潜在划分缓存功能的切入点。

We decided to concurrently pursue several cache efficiency improvements and opened an epic for it. The goal was to avoid chronic memory saturation, and the main action items were:

我们决定同时推进几项缓存效率的改进,并为此开展了一个史诗级项目。目标是避免持续的内存饱和,主要行动项包括:

- Iteratively reduce the cache’s default TTL from 2 weeks to 8 hours (helped a lot!)

将缓存的默认 TTL 从 2 周逐步减少到 8 小时(效果很好!) - Switch to client-side caching for certain cache keys (efficiently avoids spending shared cache space on non-shared cache entries)

为某些缓存键切换到客户端缓存(有效地避免在非共享缓存条目上浪费共享缓存空间) - Partition a set of cache keys to a separate Redis instance

将一组缓存键分区到一个单独的 Redis 实例

The TTL reduction was the simplest solution and turned out to be a big win. One of our main concerns with TTL reduction was that the additional cache misses could potentially increase workload on other parts of the infrastructure. Some cache misses are more expensive than others, and our metrics are not granular enough to quantify the cost of cache misses per type of cache entry. This concern is why we applied the TTL adjustment incrementally and monitored for SLO violations. Fortunately, our inference was correct: Reducing TTL did not significantly reduce the cache hit rate, and the additional cache misses did not cause noticeable impact to downstream subsystems.

TTL 的缩减是最简单的解决方案,结果证明是一个巨大的胜利。我们对 TTL 减少的主要担忧之一是,额外的缓存未命中可能会增加基础设施的其他部分的工作量。一些缓存未命中比其他的更昂贵,而我们的指标不够精细,无法量化每种类型缓存条目的缓存未命中成本。这个担忧是我们逐渐应用 TTL 调整并监视 SLO 违规的原因。幸运的是,我们的推断是正确的:减少 TTL 并没有显著降低缓存命中率,额外的缓存未命中也没有对下游子系统产生明显影响。

The TTL reduction turned out to be sufficient to drop memory usage consistently a little below its saturation point.

TTL 减少后,内存使用量一直保持在饱和点以下。

Increasing maxmemory had initially not been feasible because the original peak memory demand (prior to the efficiency improvements) was expected to be larger than the max size of the VMs we use for Redis. However, once we dropped memory demand below saturation, then we could confidently provision headroom for future growth and re-enable saturation alerting.

增加初始不可行,因为原始峰值内存需求(在效率改善之前)预计会大于我们用于 Redis 的 VM 的最大尺寸。但是,一旦我们将内存需求降低到饱和以下,那么我们就可以为未来增长提供充足的余地,并重新启用饱和警报。

Results 结果

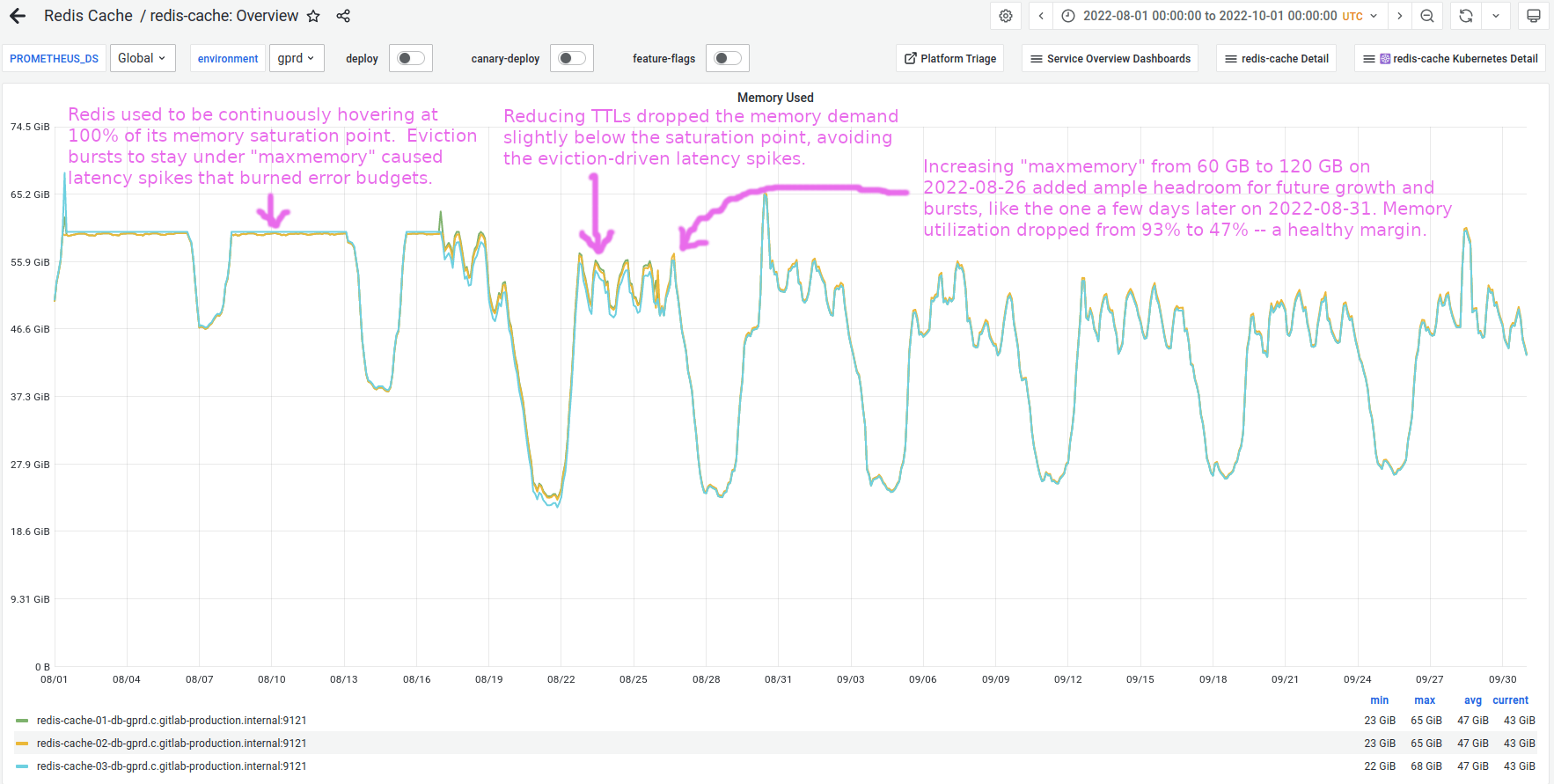

The following graph shows Redis memory usage transitioning out of its chronically saturated state, with annotations describing the milestones when latency spikes ended and when saturation margin became wide enough to be considered safe:

以下图表显示了 Redis 内存使用情况从其长期饱和状态转变的过程,注释描述了延迟峰值结束和饱和度余量变得足够大以被认为是安全的里程碑时刻:

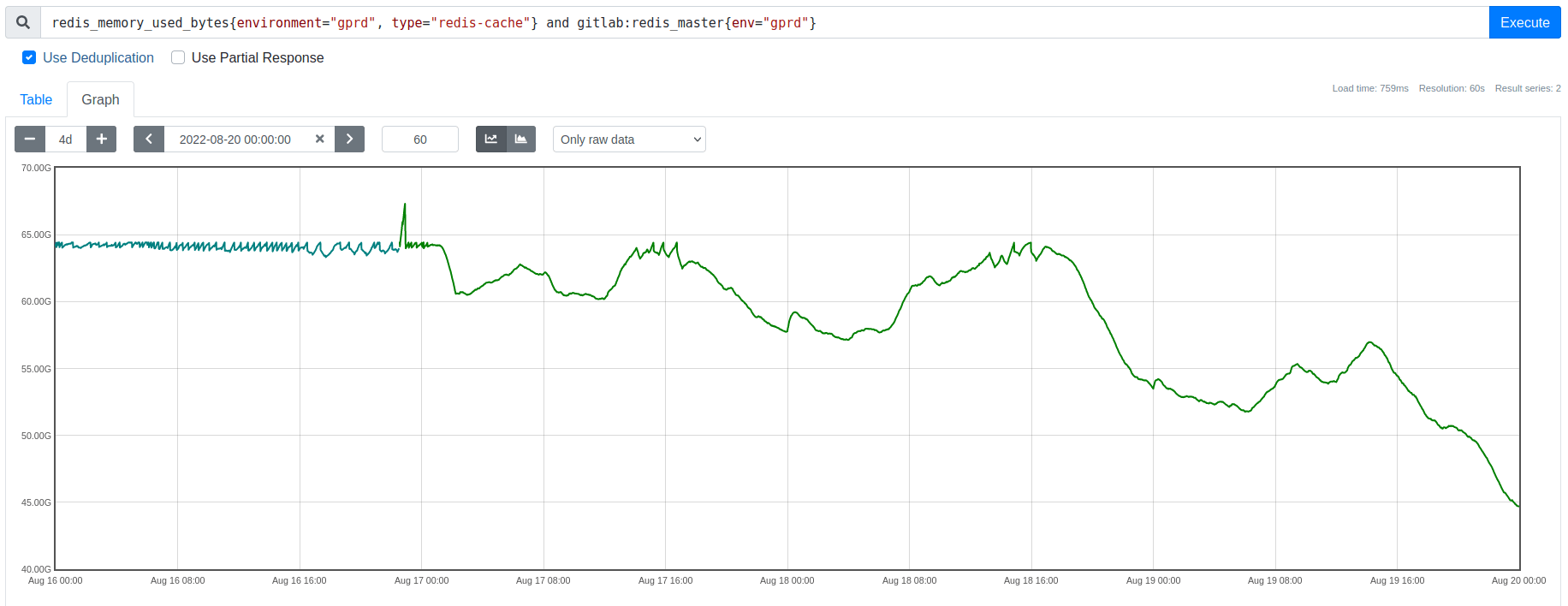

Zooming into the days when we rolled out the TTL adjustments, we can see the harmful eviction-driven latency spikes vanish as we drop memory usage below its saturation point, exactly as predicted:

当我们推出 TTL 调整的日子放大到细节,我们可以看到由于内存使用降至饱和点以下,那种有害的基于驱逐的延迟峰值消失,正如预测的那样:

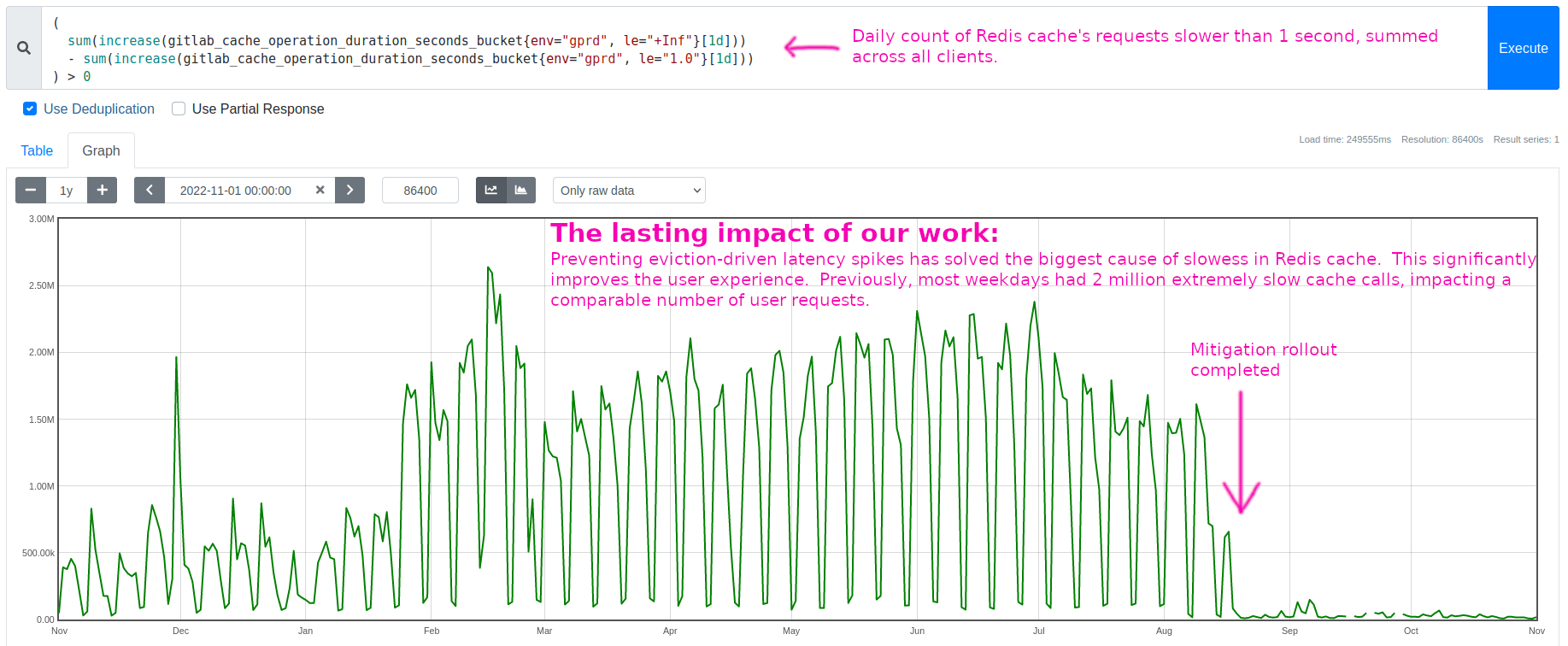

These eviction-driven latency spikes had been the biggest cause of slowess in Redis cache.

这些由驱逐引起的延迟峰值是导致 Redis 缓存变慢的最主要原因。

Solving this source of slowness significantly improved the user experience. This 1-year lookback shows only the long-tail portion of the improvement, not even the full benefit. Each weekday had roughly 2 million Redis requests slower than 1 second, until our fix in mid-August:

解决这一导致缓慢的问题显著改善了用户体验。这一年的回顾只显示了改善的长尾部分,甚至没有全部好处。直到在八月中旬修复之前,每个工作日大约有 200 万次 Redis 请求超过 1 秒。

Conclusions 结论

We solved a long-standing latency problem that had been worsening as the workload grew, and we learned a lot along the way. This article focuses mostly on the Redis discoveries, since those are general behaviors that some of you may encounter in your travels. We also developed some novel tools and analytical methods and uncovered several useful environment-specific facts about our workload, infrastructure, and observability, leading to several additional improvements and proposals not mentioned above.

我们解决了一个长期存在的延迟问题,随着工作量的增加而恶化,并且在这个过程中学到了很多。本文主要关注 Redis 的发现,因为这些是一些人在旅程中可能会遇到的普遍行为。我们还开发了一些新颖的工具和分析方法,并发现了一些关于我们的工作量、基础设施和可观测性的有用的特定环境事实,从而实现了若干其他未提及的改进和建议。

Overall, we made several efficiency improvements and broke the cycle that was driving the pathology. Memory demand now stays well below the saturation point, eliminating the latency spikes that were burning error budgets for the development teams and causing intermittent slowness for users. All stakeholders are happy, and we came away with deeper domain knowledge and sharper skills!

总体来说,我们进行了几项效率改进,并打破了导致病理的循环。内存需求现在保持在饱和点以下,消除了为开发团队消耗错误预算并导致用户时有时无的延迟峰值的问题。所有利益相关者都很满意,我们也获得了更深入的领域知识和更锐利的技能!

Key insights summary 关键见解摘要

The following notes summarize what we learned about Redis eviction behavior (current as of version 6.2):

以下笔记总结了我们关于 Redis 驱逐行为的学习(截至版本 6.2)。

- The same memory budget (

maxmemory) is shared by key storage and client connection buffers. A spike in demand for client connection buffers counts towards themaxmemorylimit, in the same way that a spike in key inserts or key size would.

相同的内存预算(maxmemory)由键存储和客户端连接缓冲区共享。对客户端连接缓冲区需求的激增计入maxmemory限制,就像键插入或键大小的激增一样。 - Redis performs evictions in the foreground on its main thread. All time spent in

performEvictionsis time not spent handling client requests. Consequently, during an eviction burst, Redis has a lower throughput ceiling.

Redis 在主线程上前台执行淘汰操作。在执行淘汰操作的时间内无法处理客户端请求。因此,在淘汰操作高峰期间,Redis 的吞吐量上限较低。 - If eviction overhead saturates the main thread’s CPU, then response rate falls below request arrival rate. Redis accumulates a request backlog (which consumes memory), and clients experience this as slowness.

如果驱逐开销饱和了主线程的 CPU,那么响应速率会低于请求到达速率。Redis 会积累请求积压(消耗内存),客户端会感觉到响应缓慢。 - The memory used for pending requests requires more evictions, driving the eviction burst until enough clients are stalled that arrival rate falls back below response rate. At that equilibrium point, evictions stop, eviction overhead vanishes, Redis rapidly handles its request backlog, and that backlog’s memory gets freed.

待处理请求所使用的内存需要更多的逐出,驱动逐出突发直到足够多的客户端被阻塞,从而使到达速率恢复到响应速率以下。在那个平衡点,逐出停止,逐出开销消失,Redis 快速处理其请求积压,并释放该积压的内存。 - Triggering this cycle requires all of the following:

触发这一循环需要以下所有条件:- Redis is configured with a

maxmemorylimit, and its memory demand exceeds that size. This memory saturation causes evictions to begin.

Redis 配置了maxmemory限制,而其内存需求超过了该大小。这种内存饱和导致开始进行驱逐。 - Redis main thread’s CPU utilization is high enough under its normal workload that having to also perform evictions drives it to CPU saturation. This reduces response rate below request rate, causing a growing request backlog and high latency.

Redis 主线程的 CPU 利用率在正常工作负载下已经足够高,而必须执行清理操作则使其达到 CPU 饱和状态。这会导致响应速率低于请求速率,引起不断增长的请求积压和高延迟。 - Many active clients are connected. The duration of the eviction burst and the size of memory spent on client connection buffers increases proportionally to the number of active clients.

许多活跃客户端已连接。驱逐突发事件的持续时间和用于客户端连接缓冲区的内存大小与活跃客户端数量成比例增加。

- Redis is configured with a

- Prevent this cycle by avoiding either memory or CPU saturation. In our case, avoiding memory saturation was easier (mainly by reducing cache TTL).

通过避免内存或 CPU 饱和来防止这种循环。在我们的情况下,避免内存饱和更容易(主要通过减少缓存 TTL)。

Further reading 进一步阅读

The following lists summarize the analytical tools and methods cited in this article. These tools are all highly versatile and any of them can provide a massive level-up when working on performance engineering problems.

以下列表总结了本文中提到的分析工具和方法。所有这些工具都非常通用,任何一个都可以在解决性能工程问题时提供巨大的提升。

Tools: 工具

- perf - A Linux performance analysis multitool. In this article, we used

perfas a sampling profiler, capturing periodic stack traces of theredis-serverprocess's main thread when it is actively running on a CPU.

perf - 一个 Linux 性能分析多功能工具。在本文中,我们使用perf作为一种采样分析器,在处理器上主动运行时捕获redis-server进程主线程的定期栈跟踪。 - Flamescope - A visualization tool for rendering a

perfprofile (and other formats) into an interactive subsecond heat map. This tool invites the user to explore the timeline for microbursts of activity or inactivity and render flamegraphs of those interesting timespans to explore what code paths were active.

Flamescope - 一种可将剖析文件(及其他格式)转换成交互式亚秒级热图的可视化工具。该工具邀请用户探索时间线以查看活动或静止的微缕,并渲染出这些有趣时间段的火焰图,以便探索哪些代码路径是活跃的。 - BCC - BCC is a framework for building BPF tools, and it ships with many useful tools out of the box. In this article, we used

funclatencyto measure the call durations of a specific Redis function and render the results as a histogram.

BCC - BCC 是一个构建 BPF 工具的框架,并且默认提供了许多实用工具。在本文中,我们使用funclatency来测量特定 Redis 函数的调用持续时间,并将结果渲染为直方图。 - bpftrace - Another BPF framework, ideal for answering ad-hoc questions about your system's behavior. It uses an

awk-like syntax and is quick to learn. In this article, we wrote a custombpftracescript for observing the variables used in computing how much memory to free during each round of evictions. This script's instrumentation points are specific to our particular build ofredis-server, but the approach is able to be generalized and illustrates how versatile this tool can be.

bpftrace - 另一个 BPF 框架,适合回答与系统行为有关的临时问题。它使用类似于< b0 > 的语法,易于学习。在本文中,我们编写了一个用于观察计算每轮驱逐过程中要释放多少内存的自定义< b1 > 脚本。该脚本的插装点是针对我们特定版本的< b2 > 构建的,但这种方法可以泛化,并展示了这个工具的多功能性。

Usage examples: 使用示例:

- Example - Walkthrough of using

perfandflamescopeto capture, filter, and visualize the stack sampling CPU profiles of the Redis main thread.

使用perf和flamescope来捕获、过滤和可视化 Redis 主线程的堆栈采样 CPU 性能剖析的操作演示。 - Example - Walkthrough (including safety check) of using

funclatencyto measure the durations of the frequent calls to functionperformEvictions.

使用funclatency来测量对函数performEvictions频繁调用的持续时间的示例步骤(包括安全检查)。 - Example - Experiment for adjusting Redis settings

lazyfree-lazy-evictionandmaxmemory-eviction-tenacityand observing the results usingperf,funclatency,funcslower, and the Redis metrics for eviction count and memory usage.

示例 - 调整 Redis 设置的实验lazyfree-lazy-eviction和maxmemory-eviction-tenacity,并使用perf,funclatency,funcslower观察结果,以及清除计数和内存使用量的 Redis 指标。 - Example - This is a working example (script included) of using

bpftraceto observe the values of a function's variables. In this case we inspected themem_tofreecalculation at the start ofperformEvictions. Also, these companion notes discuss some build-specific considerations.

示例 - 这是一个工作示例(包含脚本),演示如何使用bpftrace来观察函数变量的值。在这种情况下,我们检查了mem_tofree在performEvictions开始时的计算。此外,这些附注讨论一些构建特定的注意事项。 - Example - Describes the latency injection experiment (the first of the three ideas). This experiment confirmed that memory demand increases at the predicted rate when we slow response rate to below request arrival rate, in the same way evictions do. This result confirmed the request queuing itself is the source of the memory pressure that amplifies the eviction burst once it begins.

例子 - 描述了延迟注入实验(三个想法中的第一个)。这个实验证实,当我们将响应速度减慢到低于请求到达速率时,内存需求会按预测速度增加,就像驱逐一样。这个结果证实了请求排队本身就是内存压力的来源,一旦开始,它就会加剧驱逐爆发。