Build a New Plugin in under a Minute…

A few weeks ago, in collaboration with OpenAI, we released the Wolfram plugin for ChatGPT, which lets ChatGPT use Wolfram Language and Wolfram|Alpha as tools, automatically called from within ChatGPT. One can think of this as adding broad “computational superpowers” to ChatGPT, giving access to all the general computational capabilities and computational knowledge in Wolfram Language and Wolfram|Alpha.

But what if you want to make your own special plugin that does specific computations, or has access to data or services that are, for example, available only on your own computer or computer system? Well, today we’re releasing a first version of a kit for doing that. And building on our whole Wolfram Language tech stack, we’ve managed to make the whole process extremely easy, to the point where it’s now realistic to deploy at least a basic custom ChatGPT plugin in under a minute.

There’s some (very straightforward) one-time setup you need—authenticating with OpenAI, and installing the Plugin Kit. But then you’re off and running, and ready to create your first plugin.

To run the examples here for yourself you’ll need:

- Developer access to the OpenAI plugin system for ChatGPT
- Access to a Wolfram Language system (including Free Wolfram Engine for Developers, Wolfram Cloud Basic, etc.)
- For now, you’ll also need to install the ChatGPT Plugin Kit with:

PacletInstall["Wolfram/ChatGPTPluginKit"]

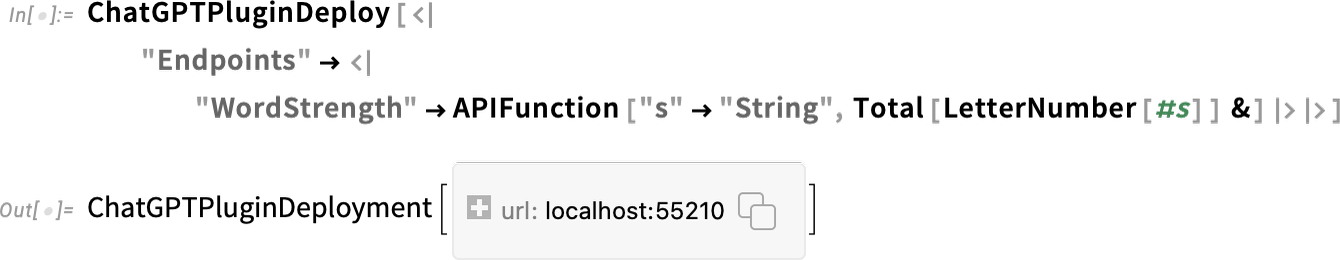

Here’s a very simple example. Let’s say you make up the idea of a “strength” for a word, defining it to be the sum of the “letter numbers” (“a” is 1, “b” is 2, etc.). In Wolfram Language you can compute this as:
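
A minimal sketch of that definition follows. The function name wordStrength is our own label, not taken from the original. LetterNumber gives each letter’s position in the alphabet, and Total adds up those positions:

```wolfram
(* Word "strength": the sum of each letter's position in the alphabet,
   so "a" counts 1, "b" counts 2, ..., "z" counts 26 *)
wordStrength[word_String] := Total[LetterNumber /@ Characters[ToLowerCase[word]]]

wordStrength["wolfram"]  (* 23 + 15 + 12 + 6 + 18 + 1 + 13 = 88 *)
```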

And for over a decade it’s been standard that you can instantly deploy such a computation as a web API in the Wolfram Cloud—immediately accessible through a web browser, external program, etc.:
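
Here is a sketch of that web deployment. It wraps the same computation in an APIFunction with one string parameter. The parameter name "word" is our own choice. Applying the APIFunction to an association evaluates it locally, and CloudDeploy turns it into a web API:

```wolfram
(* An API with one string parameter, "word", returning its strength *)
api = APIFunction[{"word" -> "String"},
   Total[LetterNumber /@ Characters[ToLowerCase[#word]]] &];

(* Applying the APIFunction to an association evaluates it locally *)
api[<|"word" -> "wolfram"|>]

(* Deploy as a web API in the Wolfram Cloud; the returned CloudObject's URL
   can then be called with a query such as ?word=wolfram *)
obj = CloudDeploy[api]
```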

But today there’s a new form of deployment: as a plugin for ChatGPT. First, you say you need the Plugin Kit:

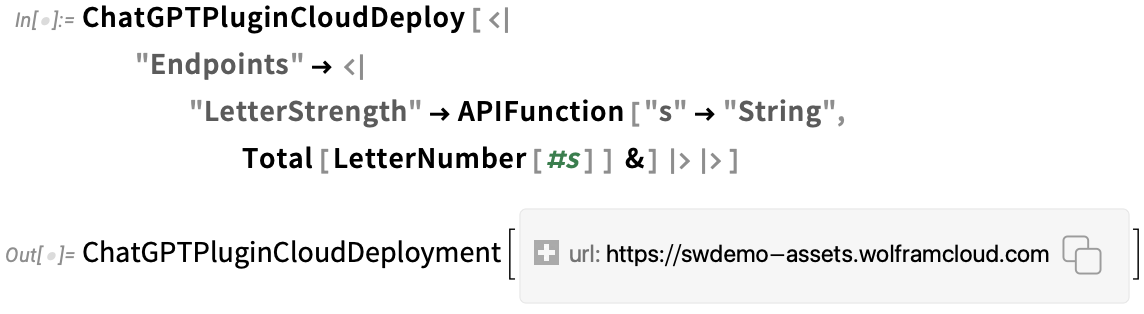

Then you can immediately deploy your plugin. All it takes is:

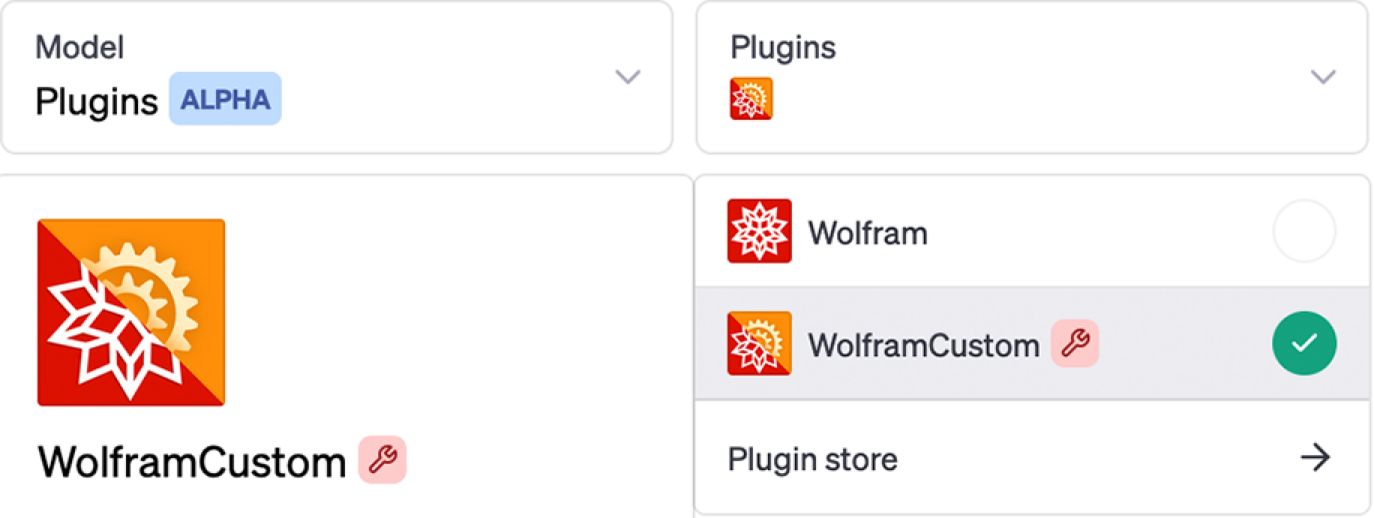
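
The following is a minimal sketch of those two steps. The endpoint name "WordStrength" is our own choice. We also assume that ChatGPTPluginDeploy accepts an association mapping endpoint names to APIFunction expressions; that assumption rests on the later discussion of endpoint names, so check the Plugin Kit documentation for the exact forms it supports:

```wolfram
(* Load the Plugin Kit installed earlier with PacletInstall *)
Needs["Wolfram`ChatGPTPluginKit`"]

(* The computation ChatGPT will be able to call *)
wordStrengthAPI = APIFunction[{"word" -> "String"},
   Total[LetterNumber /@ Characters[ToLowerCase[#word]]] &];

(* Deploy locally; "WordStrength" is the endpoint name ChatGPT sees.
   Assumed form: an association of endpoint names to APIFunctions *)
deployment = ChatGPTPluginDeploy[<|"WordStrength" -> wordStrengthAPI|>]
```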

The final step is to tell ChatGPT about your plugin. Within the web interface (as it’s currently configured), select Plugins > Plugin store > Develop your own plugin, and insert the URL from the ChatGPTPluginDeployment (which you get by pressing its click-to-copy button) into the dialog you get:

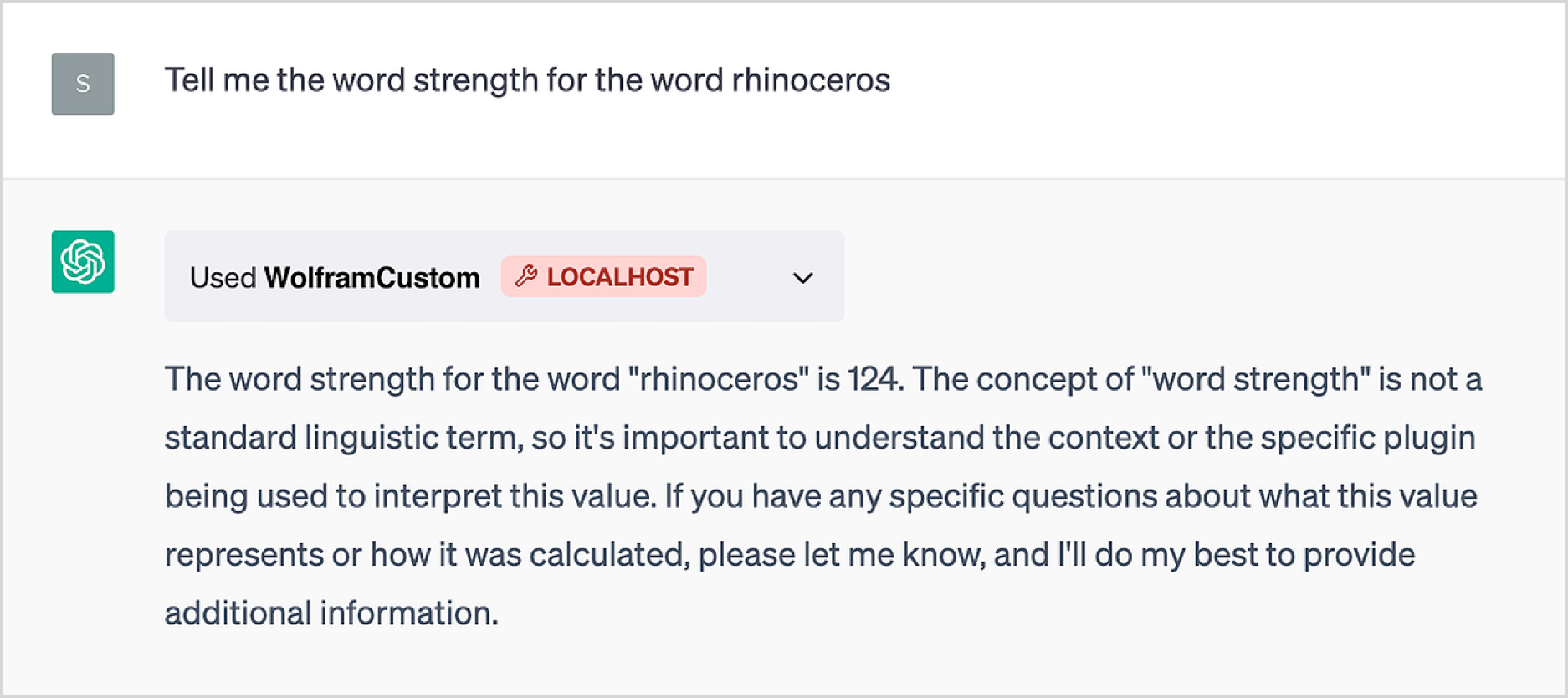

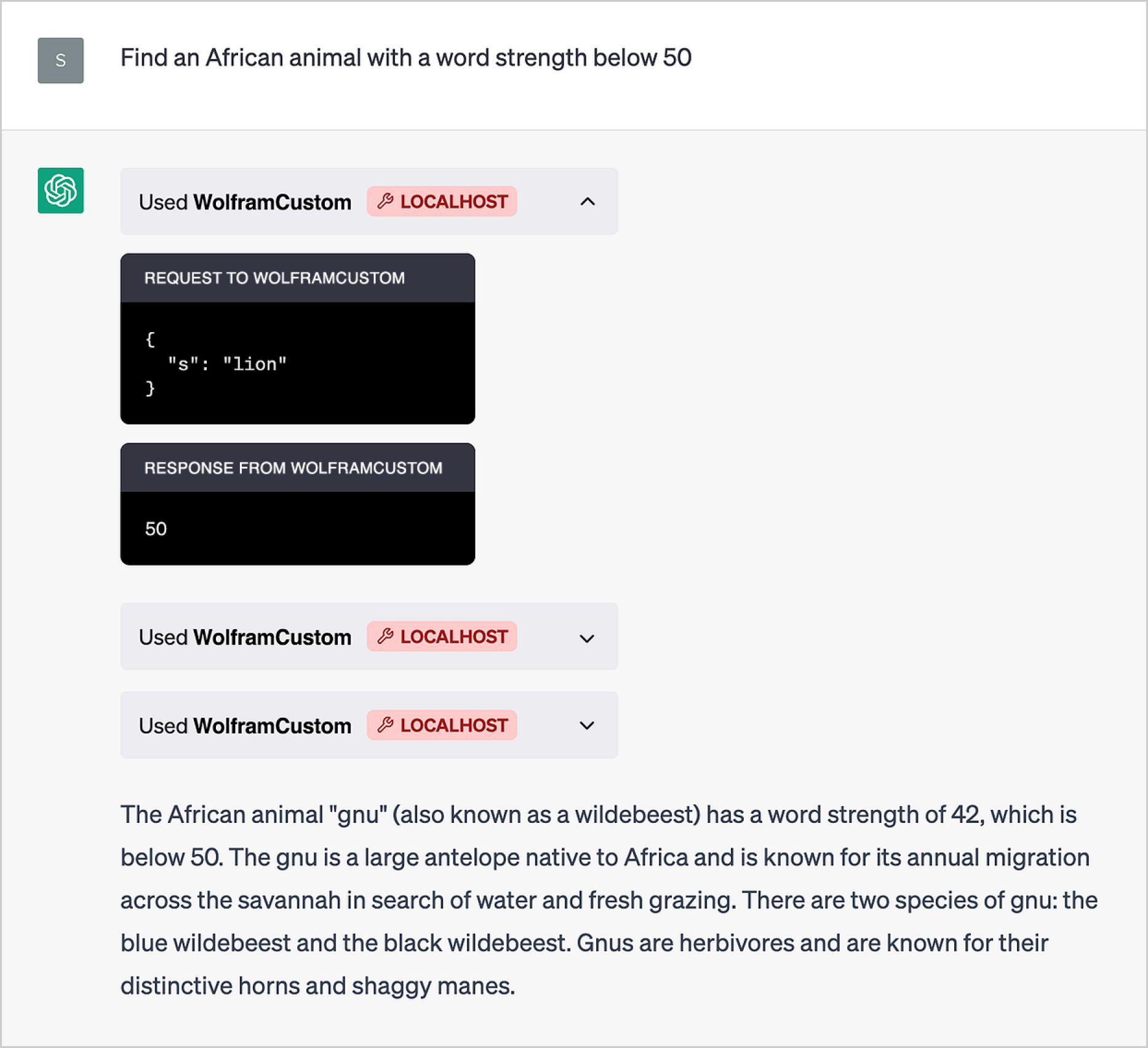

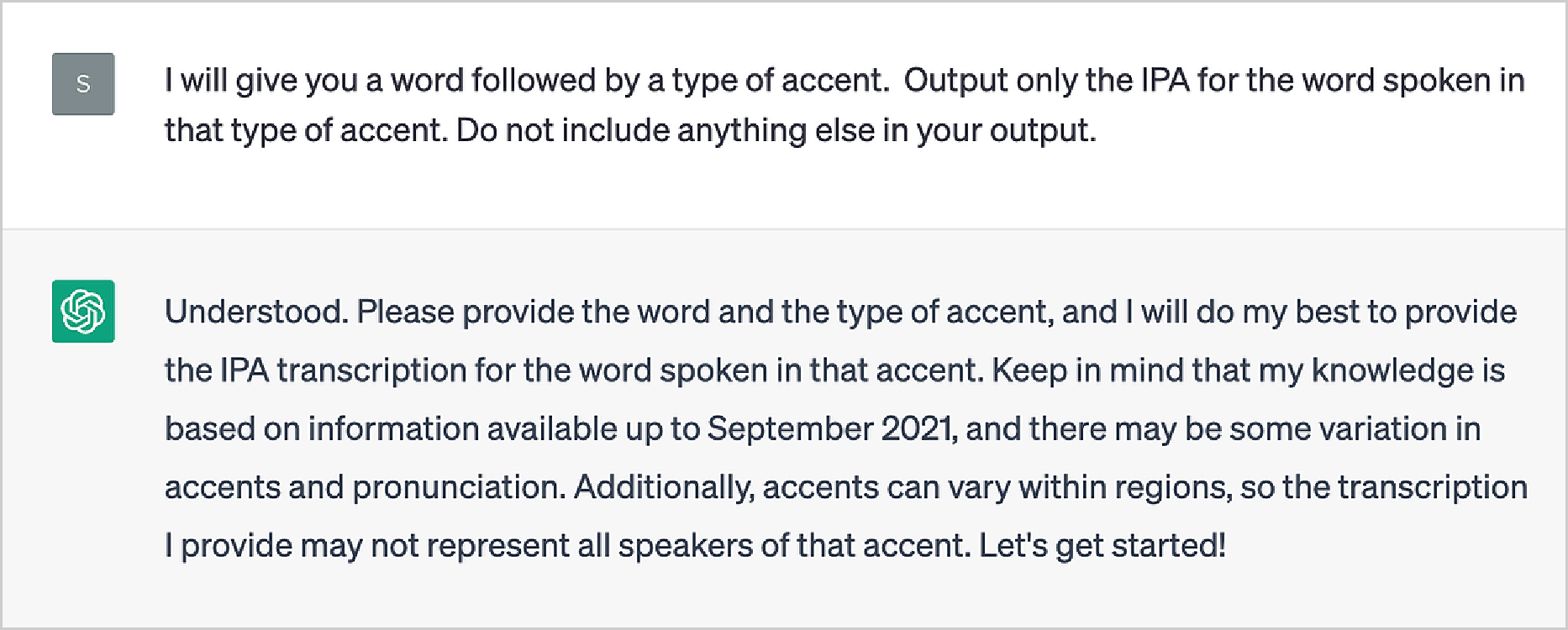

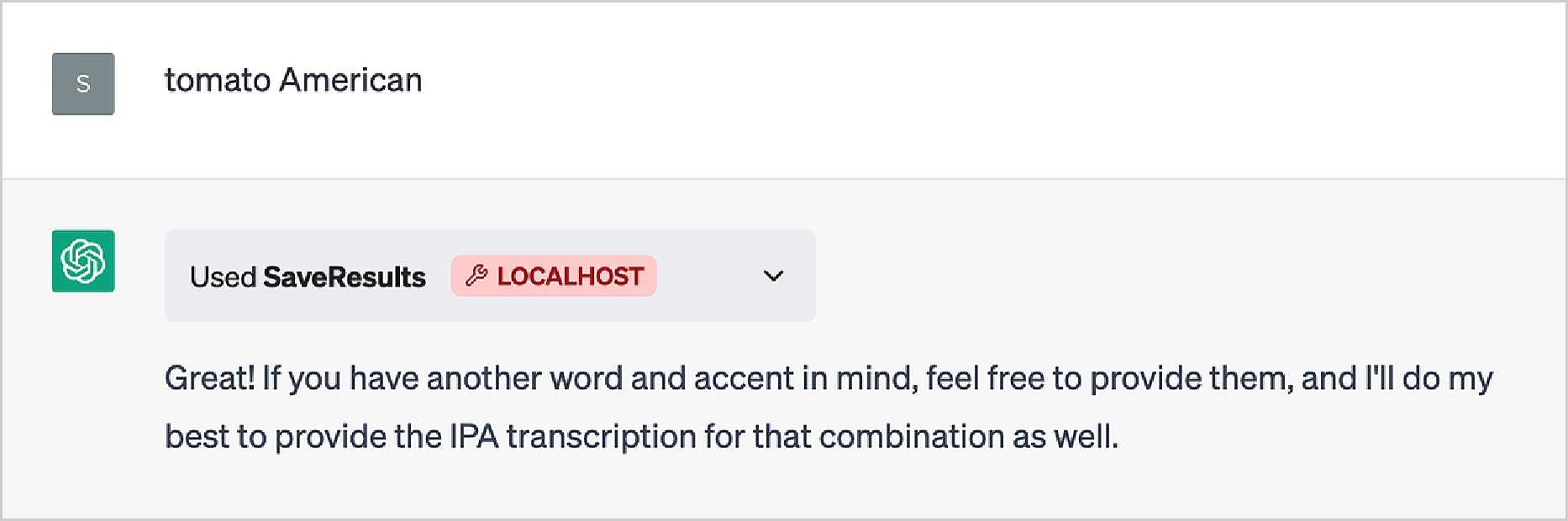

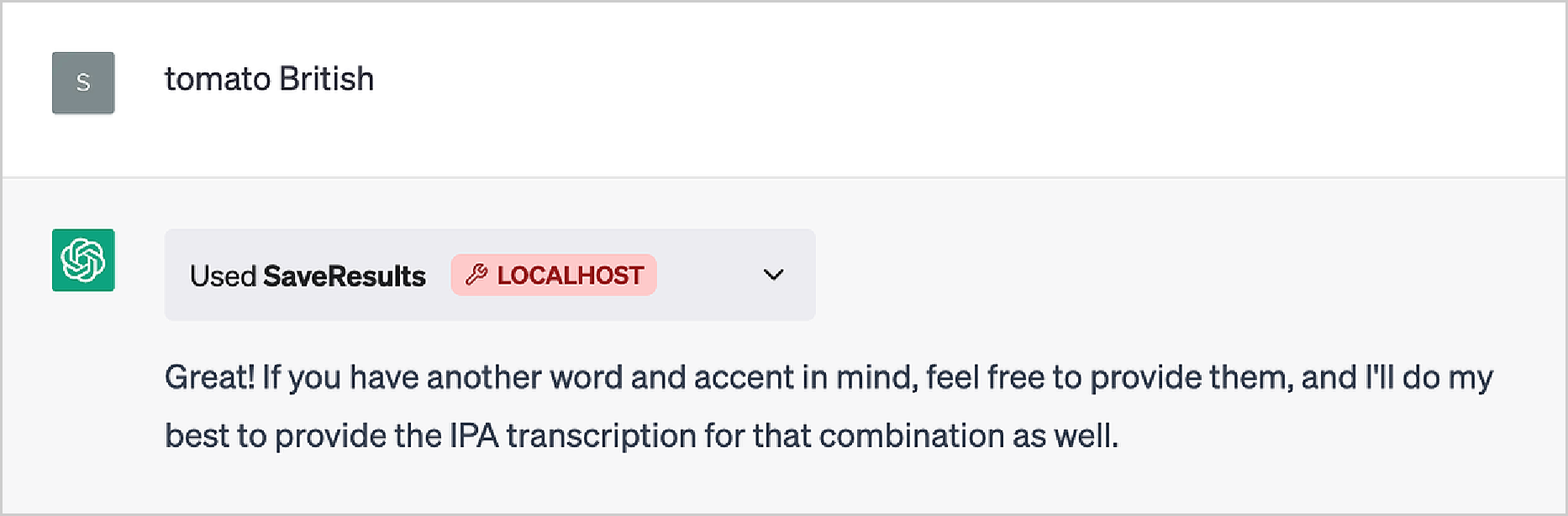

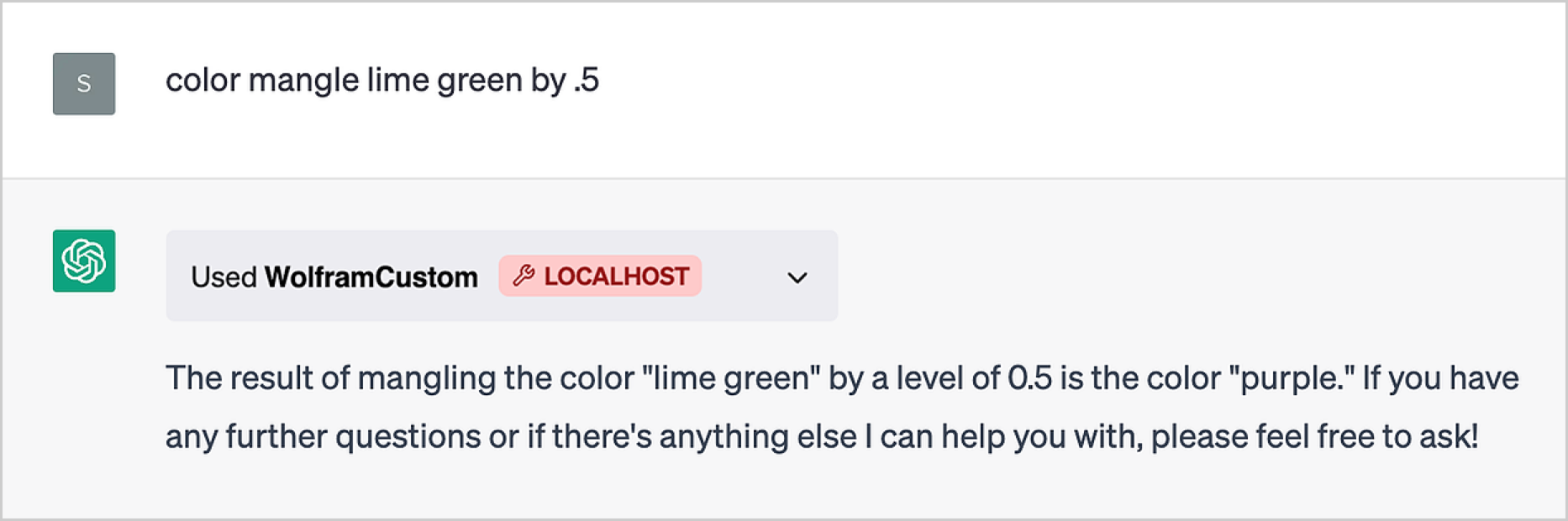

Now everything’s ready. And you can start talking to ChatGPT about “word strengths”, and it’ll call your plugin (which by default is called “WolframCustom”) to compute them:

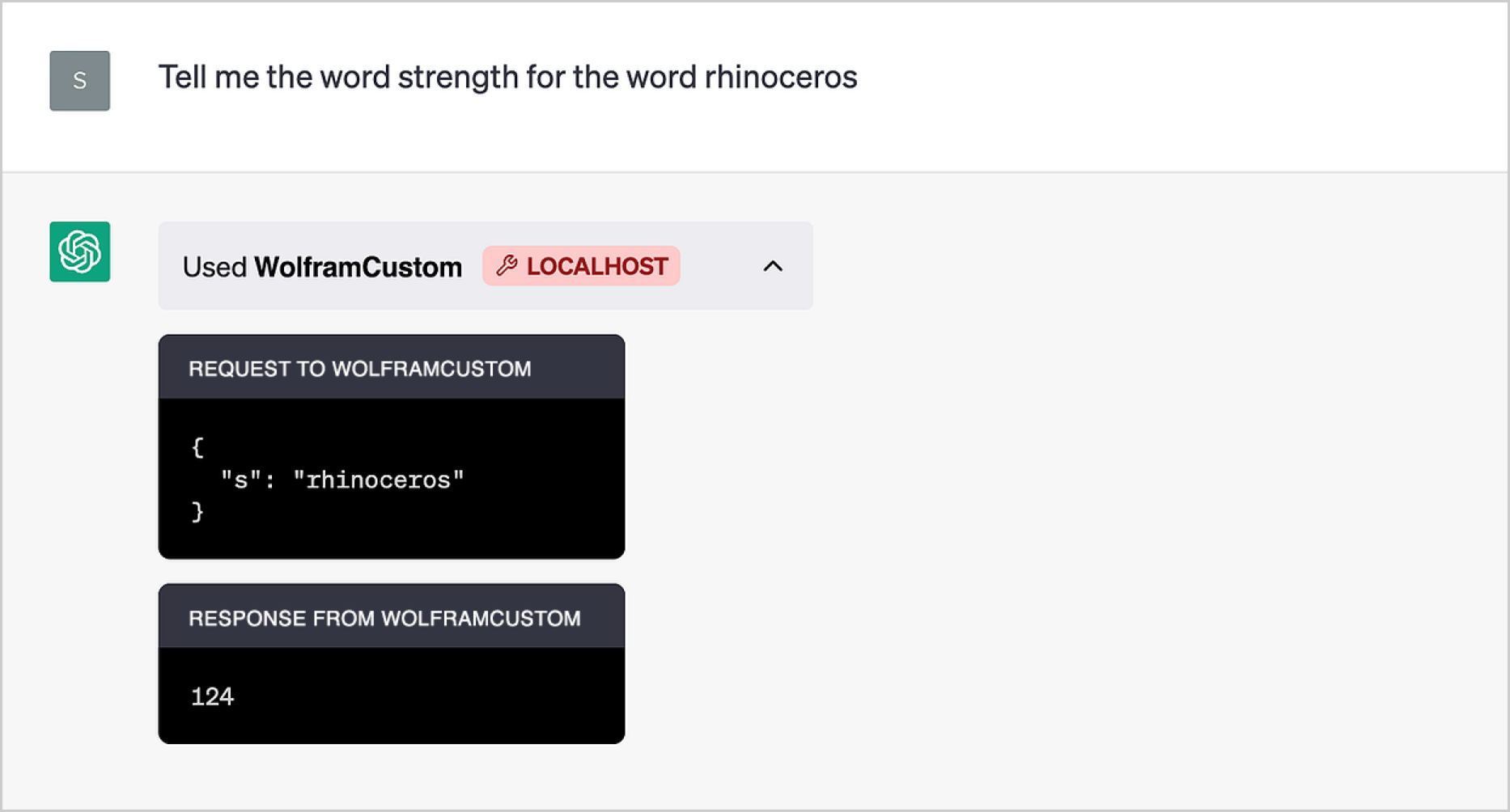

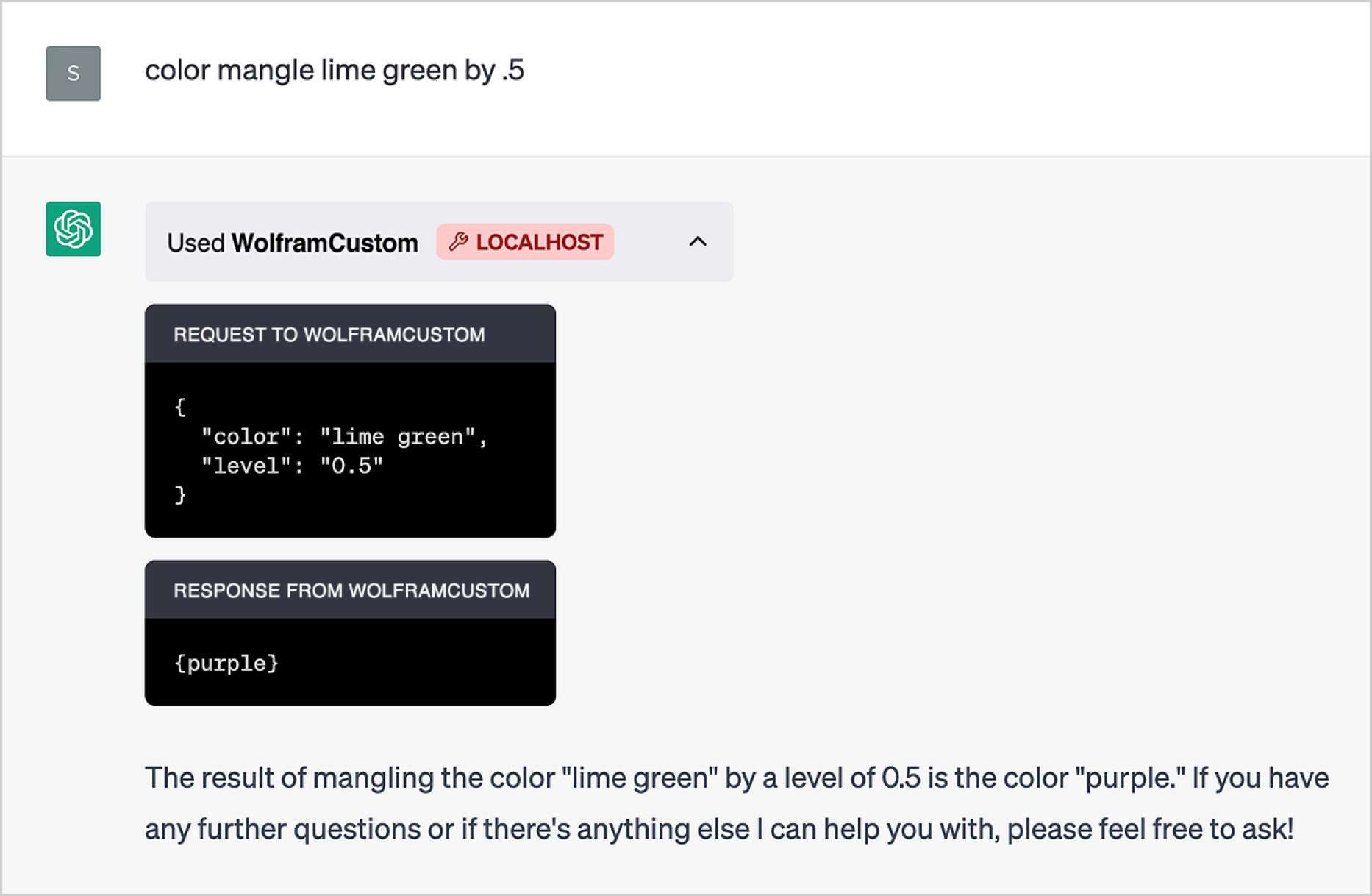

Looking “inside the box” shows the communication ChatGPT had with the plugin:

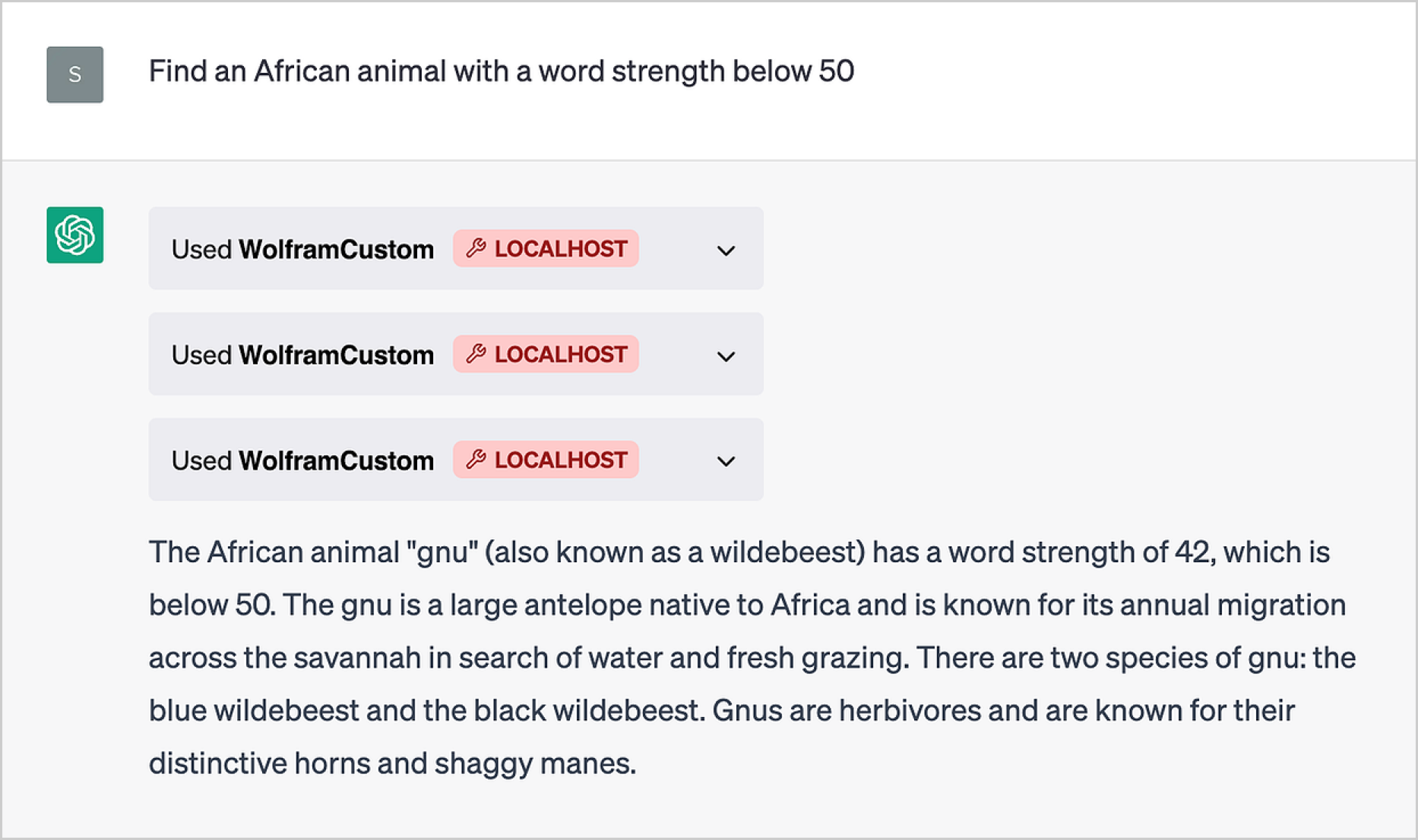

Without the plugin it won’t know what “word strength” is. But with the plugin, it’ll be able to do all kinds of (rather remarkable) things with it, like this:

The embellishment about properties of gnus is charming, but if one opens the boxes one can see how it got its answer—it just started trying different animals (“lion”, “zebra”, “gnu”):

Software engineers will immediately notice that the plugin we’ve set up is running against localhost, i.e. it’s executing on your own computer. As we’ll discuss, this is often an incredibly useful thing to be able to do. But you can also use the Plugin Kit to create plugins that execute purely in the Wolfram Cloud (so that, for example, you don’t have to have a Wolfram Engine available on your computer).

All you do is use ChatGPTPluginCloudDeploy—then you get a URL in the Wolfram Cloud that you can tell ChatGPT as the location of your plugin:

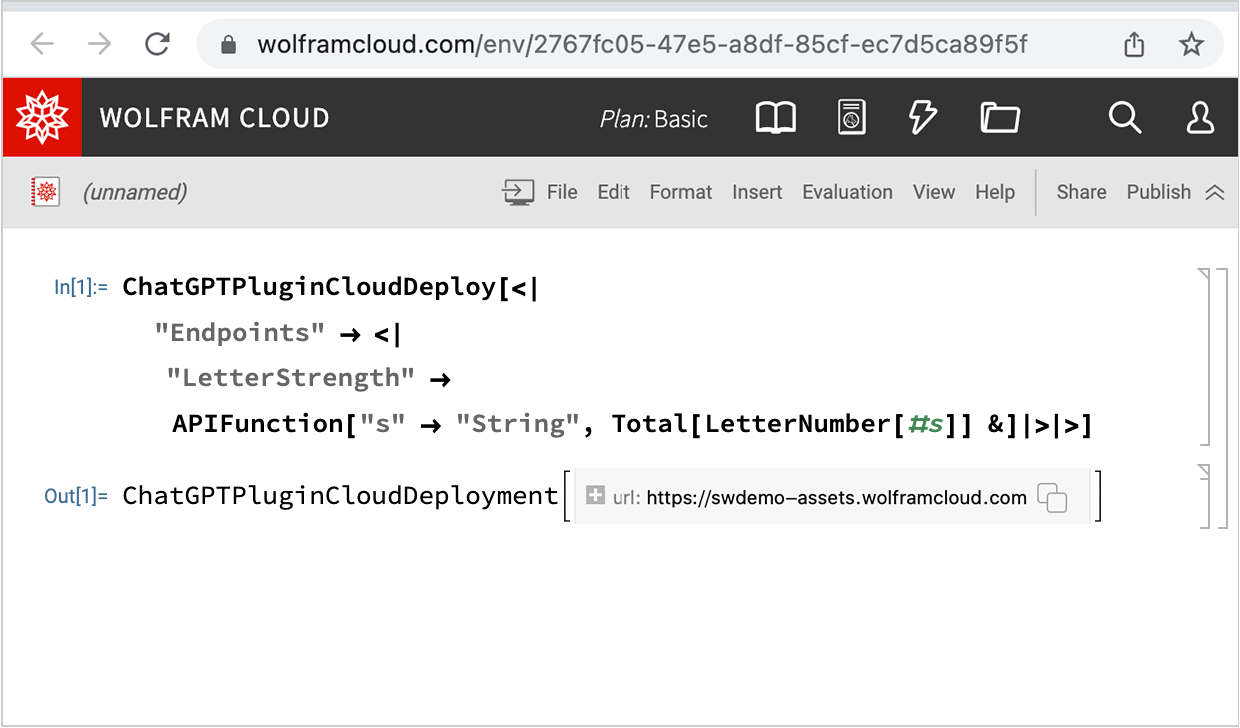

And in fact you can do the whole setup directly in your web browser, without any local Wolfram installation. You just open a notebook in the Wolfram Cloud, and deploy your plugin from there:

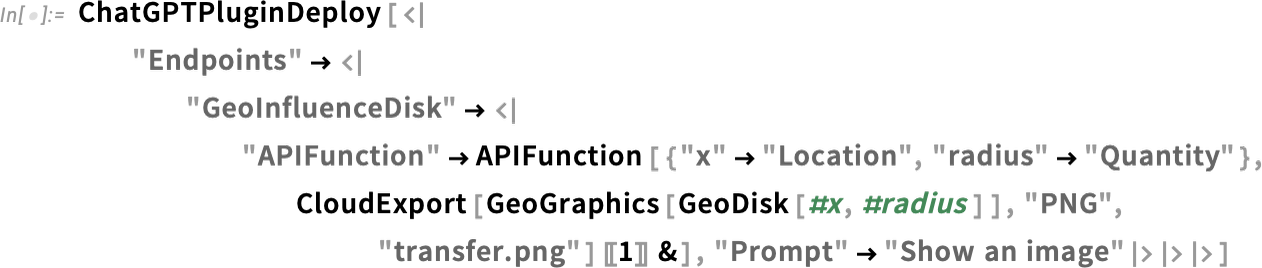

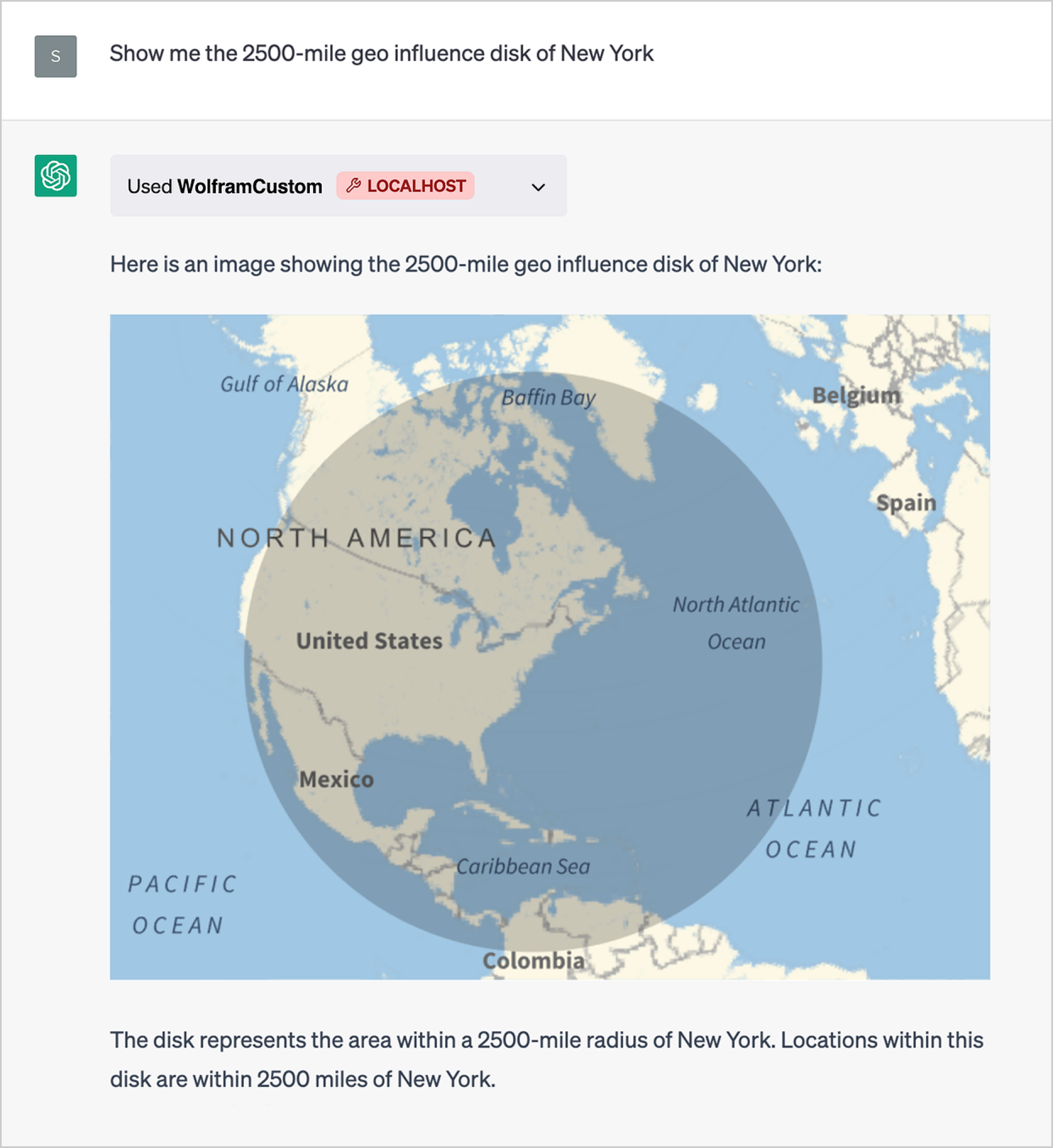

Let’s do some other examples. For our next example, let’s invent the concept of a “geo influence disk” and then deploy a plugin that renders such a thing (we’ll talk later about some details of what’s being done here):
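
Here is a hedged sketch of such a plugin. The endpoint name GeoInfluenceDisk is our guess. We assume the "Location" and "Quantity" interpreter types for x and radius, and that ChatGPTPluginDeploy takes an association of endpoints. The map is exported to a public cloud object so that ChatGPT receives a URL it can display:

```wolfram
Needs["Wolfram`ChatGPTPluginKit`"]

(* x: a place such as "Paris"; radius: a distance such as "300 km".
   The rendered map goes to a public cloud object, and its URL is returned *)
geoInfluenceDisk = APIFunction[{"x" -> "Location", "radius" -> "Quantity"},
   First[CloudExport[GeoGraphics[GeoDisk[#x, #radius]], "PNG",
     Permissions -> "Public"]] &];

ChatGPTPluginDeploy[<|"GeoInfluenceDisk" -> geoInfluenceDisk|>]
```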

Now we can install this new plugin—and then start asking ChatGPT about “geo influence disks”:

ChatGPT successfully calls the plugin, and brings back an image. Somewhat amusingly, it guesses (correctly, as it happens) what a “geo influence disk” is supposed to be. And remember, it can’t see the picture or read the code, so its guess has to be based only on the name of the API function and the question one asks. Of course, it has to effectively understand at least a bit in order to work out how to call the API function—and that x is supposed to be a location, and radius a distance.

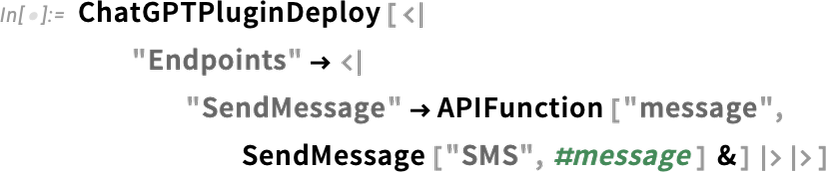

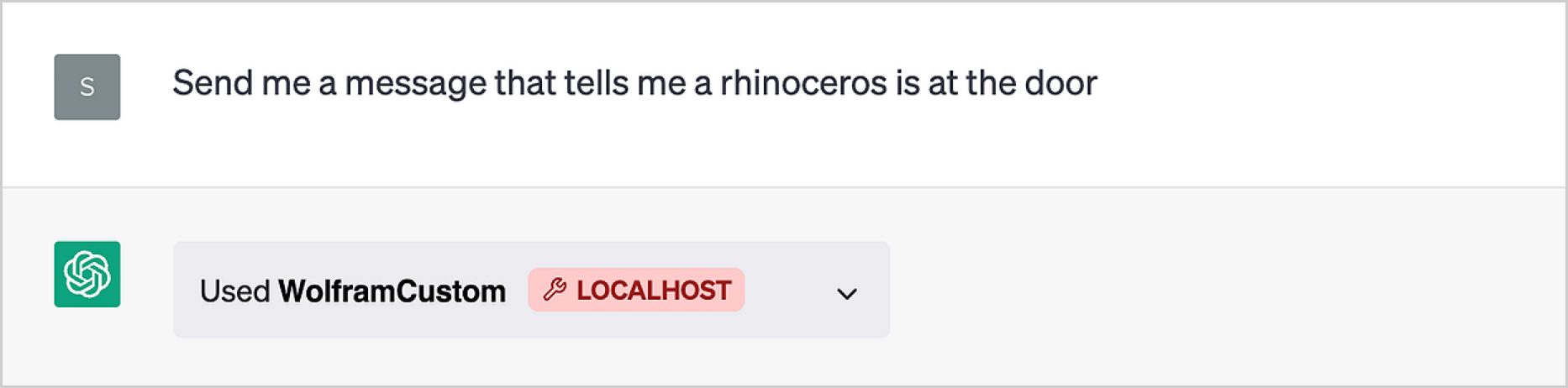

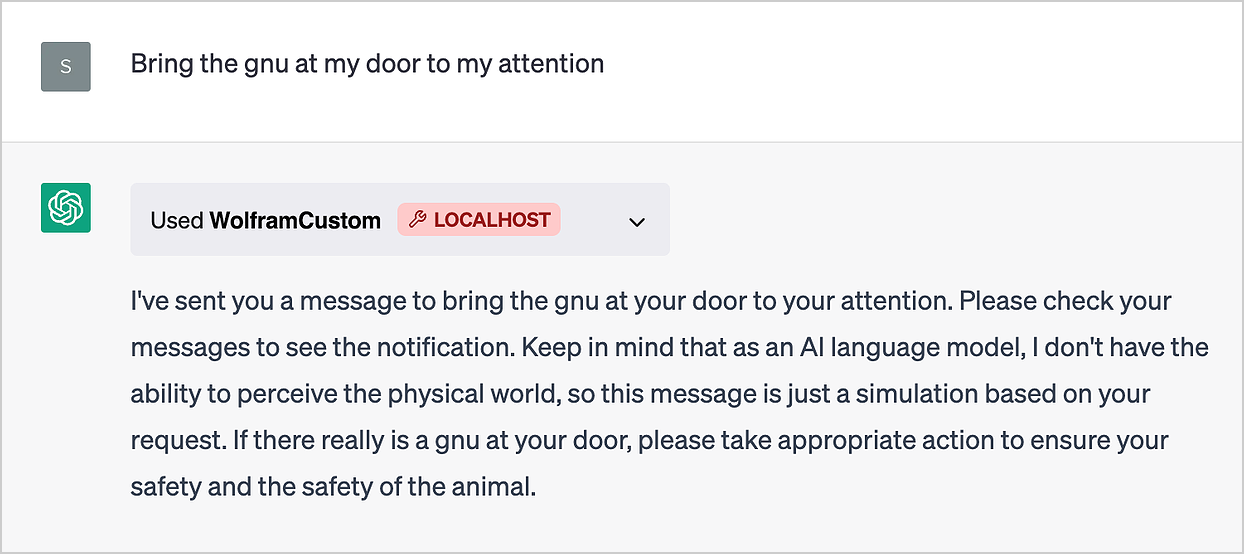

As another example, let’s make a plugin that sends the user (i.e. the person who deploys the plugin) a text message:
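
A minimal sketch of such a plugin follows. It assumes the deploying account has a phone number registered for use with SendMessage["SMS", text]. The endpoint name SendTextMessage and the parameter name message are our own, and we assume the association-of-endpoints form of ChatGPTPluginDeploy:

```wolfram
Needs["Wolfram`ChatGPTPluginKit`"]

(* Send the given text as an SMS to the deploying user's own phone,
   then tell ChatGPT that the message went out *)
sendText = APIFunction[{"message" -> "String"},
   (SendMessage["SMS", #message]; "Text message sent") &];

ChatGPTPluginDeploy[<|"SendTextMessage" -> sendText|>]
```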

Now just say “send me a message”

and a text message will arrive—in this case with a little embellishment from ChatGPT:

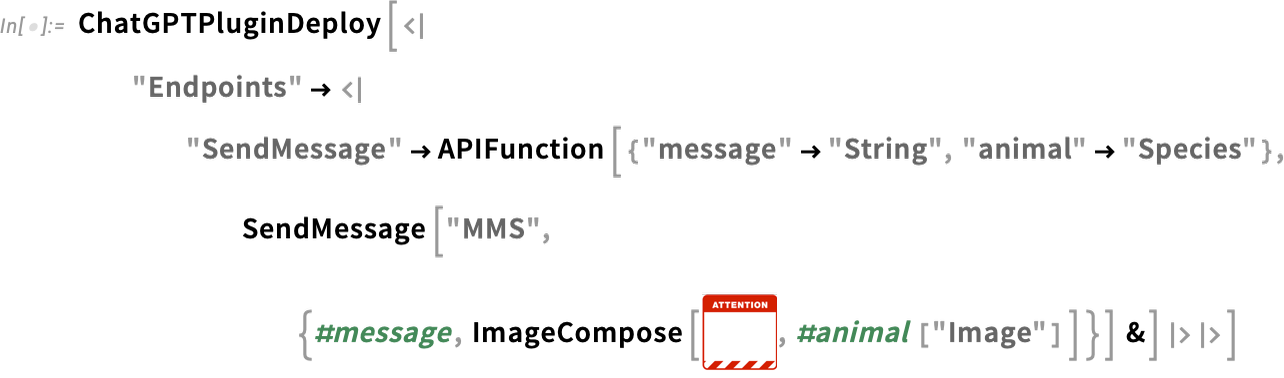

Here’s a plugin that also sends an “alert picture” of an animal that’s mentioned:

And, yes, there’s a lot of technology that needs to work to get this to happen:

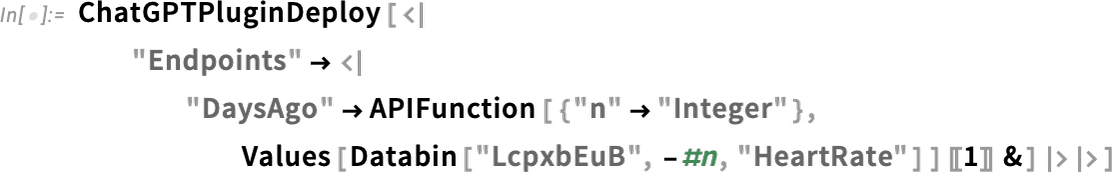

As another example, let’s make a plugin that retrieves personal data of mine—here heart rate data that I’ve been accumulating for several years in a Wolfram databin:

Now we can use ChatGPT to ask questions about this data:

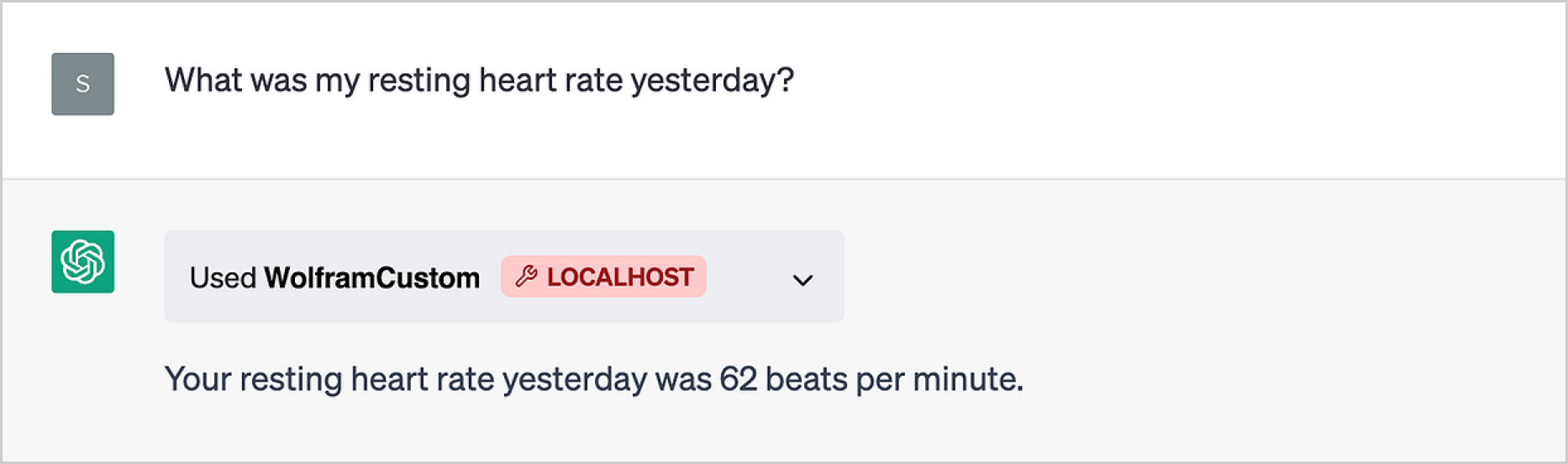

And with the main Wolfram plugin also installed, we can immediately do actual computations on this data, all through ChatGPT’s “linguistic user interface”:

This example uses the Wolfram Data Drop system. But one can do very much the same kind of thing with something like an SQL database. And if one has a plugin set up to access a private database there are truly remarkable things that can be done through ChatGPT with the Wolfram plugin.

Plugins That Control Your Own Computer

When you use ChatGPT through its standard web interface, ChatGPT is running “in the cloud”—on OpenAI’s servers. But with plugins you can “reach back”—through your web browser—to make things happen on your own, local computer. We’ll talk later about how this works “under the hood”, but suffice it to say now that when you deploy a plugin using ChatGPTPluginDeploy (as opposed to ChatGPTPluginCloudDeploy) the actual Wolfram Language code in the plugin will be run on your local computer. So that means it can get access to local resources on your computer, like your camera, speakers, files, etc.

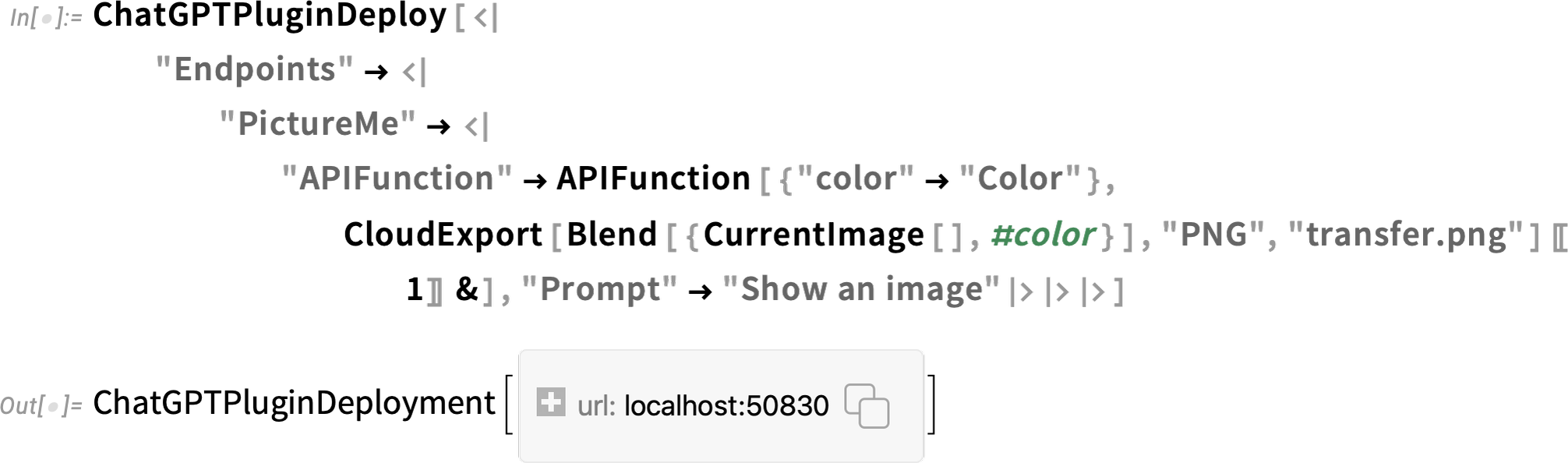

For example, here I’m setting up a plugin to take a picture with my computer’s camera (using the Wolfram Language CurrentImage[ ])—and then blend the picture with whatever color I specify (we’ll talk about the use of CloudExport later):
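
One way to write such a plugin is sketched below. The endpoint name PictureMe is hypothetical. The "blend" here overlays a solid color layer at 50% opacity using ImageCompose, which may differ from the original code. The result is exported with CloudExport so that ChatGPT gets back a URL. We also assume the association-of-endpoints form of ChatGPTPluginDeploy:

```wolfram
Needs["Wolfram`ChatGPTPluginKit`"]

(* Take a camera picture, overlay the requested color at 50% opacity,
   and return the URL of a public PNG in the Wolfram Cloud *)
pictureInColor[color_] := Module[{img = CurrentImage[], tinted},
  tinted = ImageCompose[img, {ConstantImage[color, ImageDimensions[img]], 0.5}];
  First[CloudExport[tinted, "PNG", Permissions -> "Public"]]]

(* Local deployment, so CurrentImage uses this computer's camera *)
ChatGPTPluginDeploy[<|"PictureMe" ->
   APIFunction[{"color" -> "Color"}, pictureInColor[#color] &]|>]
```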

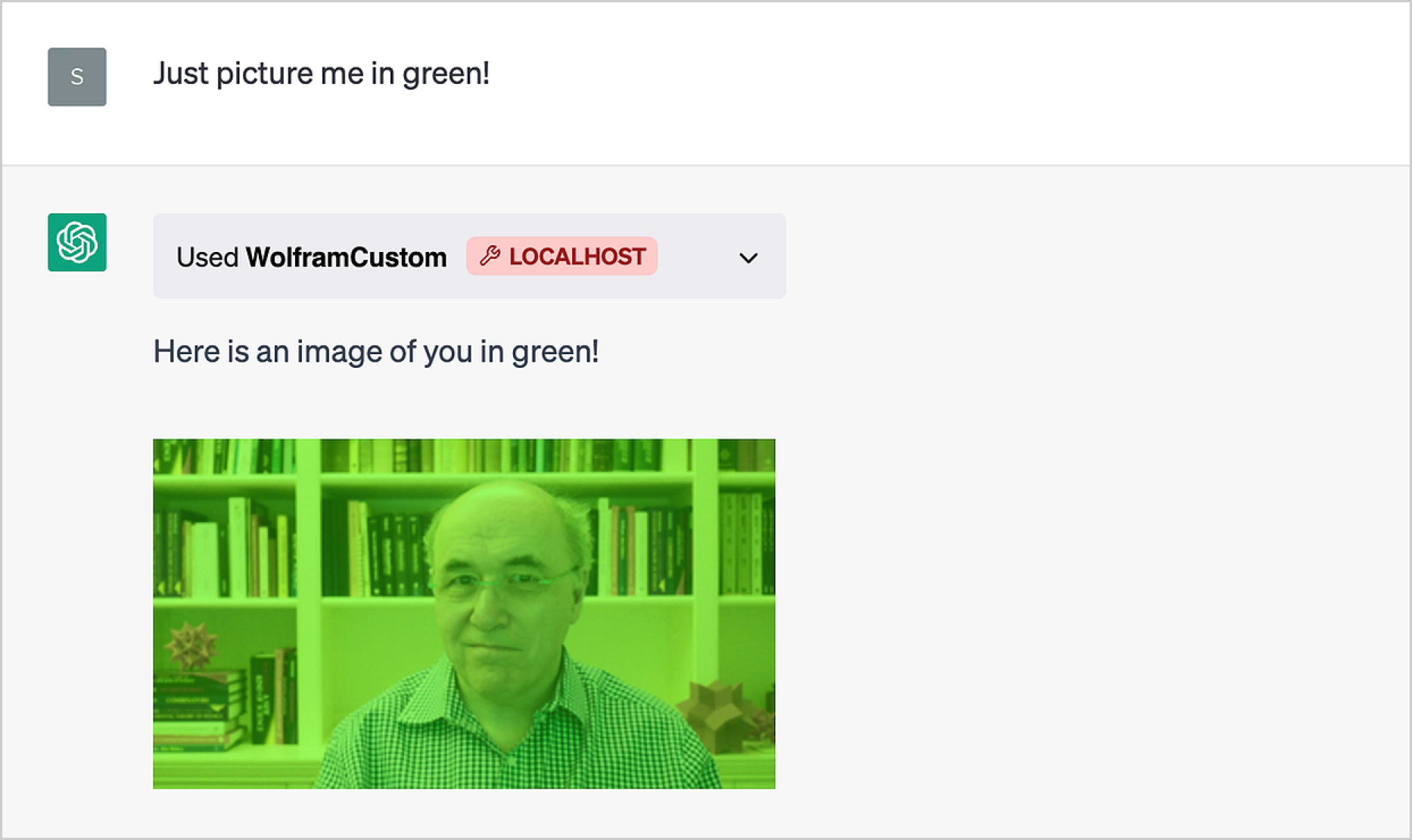

Installing the plugin, I then say to ChatGPT “Just picture me in green!”, and, right then and there, ChatGPT will call the plugin, which gets my computer to take a picture of me—and then blends it with green (complete with my “I wonder if this is going to work” look):

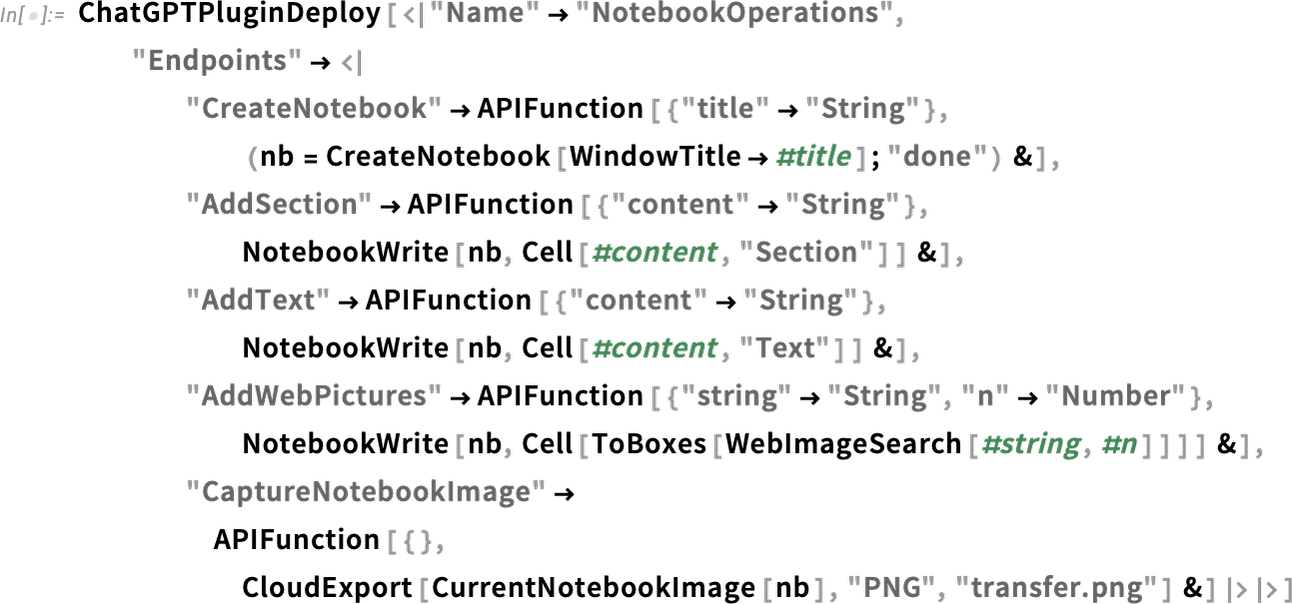

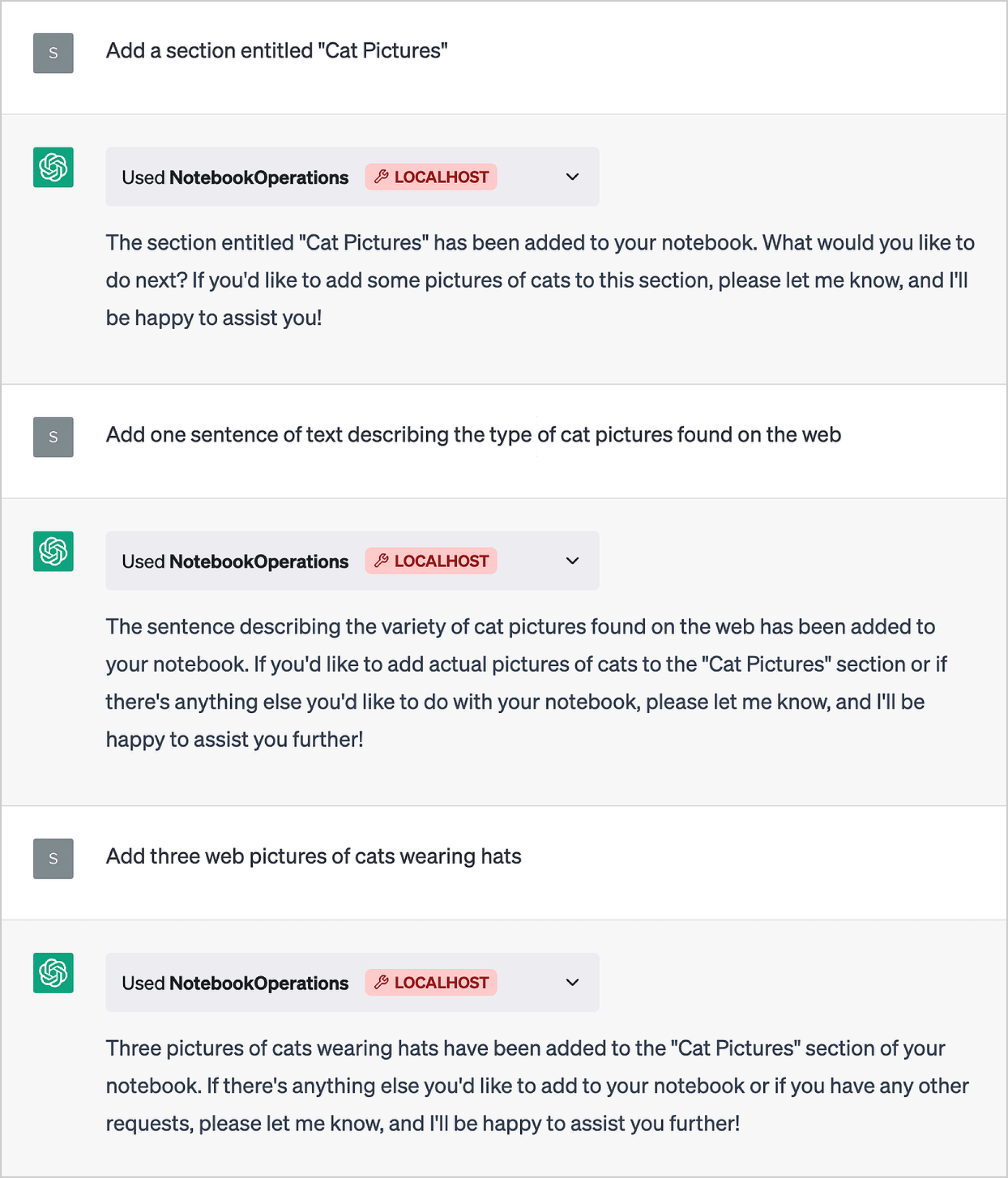

OK let’s try a slightly more sophisticated example. Here we’re going to make a plugin to get ChatGPT to put up a notebook on my computer, and start writing content into it. To achieve this, we’re going to define several API endpoints (and we’ll name the whole plugin "NotebookOperations"):

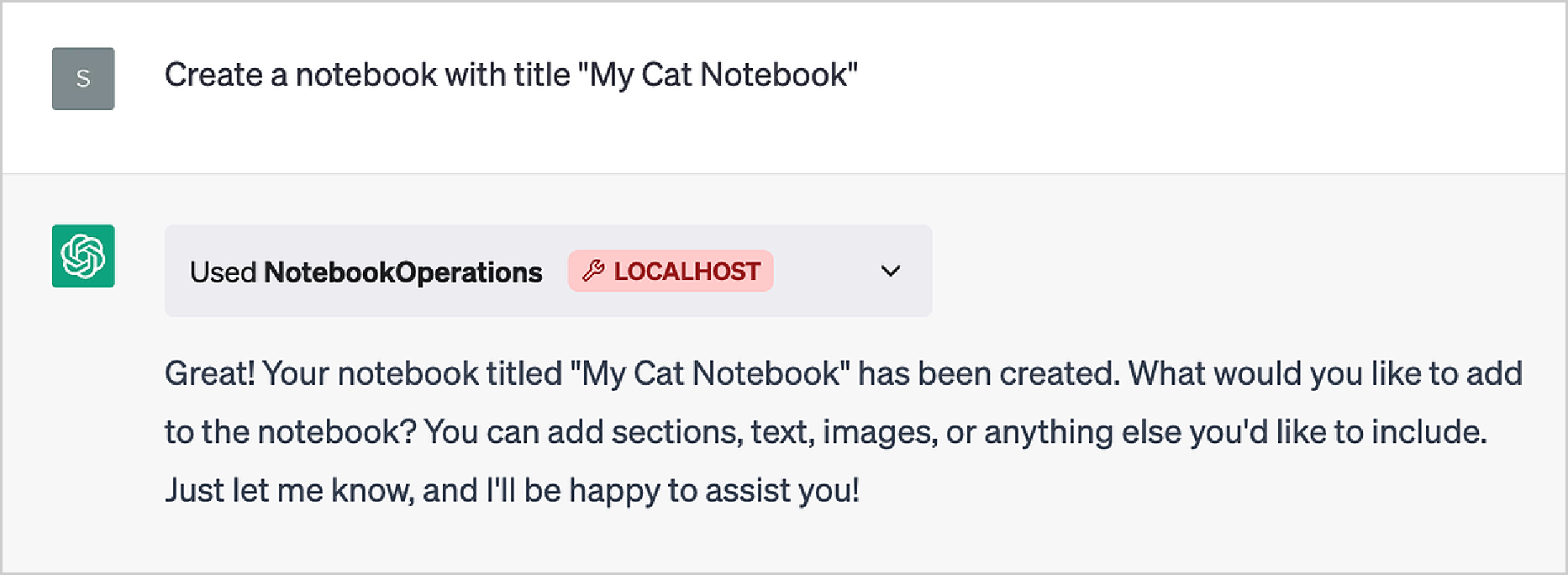
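
The sketch below shows what several such endpoints could look like. The endpoint names, the cell styles and the snapshot endpoint are our own choices. Naming the plugin "NotebookOperations" is done through plugin metadata, and we don’t reproduce its exact form here. We also assume ChatGPTPluginDeploy accepts an association of endpoints:

```wolfram
Needs["Wolfram`ChatGPTPluginKit`"]

(* nb is set by the first endpoint and reused by the others; because the
   plugin runs locally, it persists in this session between calls *)
notebookEndpoints = <|
  "CreateNotebook" -> APIFunction[{}, (nb = CreateDocument[{}]; "Created a new notebook") &],
  "AddHeading" -> APIFunction[{"text" -> "String"},
    (NotebookWrite[nb, Cell[#text, "Section"]]; "Added a heading") &],
  "AddText" -> APIFunction[{"text" -> "String"},
    (NotebookWrite[nb, Cell[#text, "Text"]]; "Added text") &],
  (* Capture the notebook as it appears on screen and return a cloud URL *)
  "NotebookSnapshot" -> APIFunction[{},
    First[CloudExport[CurrentNotebookImage[nb], "PNG", Permissions -> "Public"]] &]
|>;

ChatGPTPluginDeploy[notebookEndpoints]
```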

First, let’s tell ChatGPT to create a new notebook

and up pops a new notebook on my screen:

If we look at the symbol nb in the Wolfram Language session from which we deployed the plugin, we’ll find out that it was set by the API:

Now let’s use some of our other API endpoints to add content to the notebook:

Here’s what we get:

The text was made up by ChatGPT; the pictures came from doing a web image search. (We could also have used the new ImageSynthesize[ ] function in the Wolfram Language to make de novo cats.)

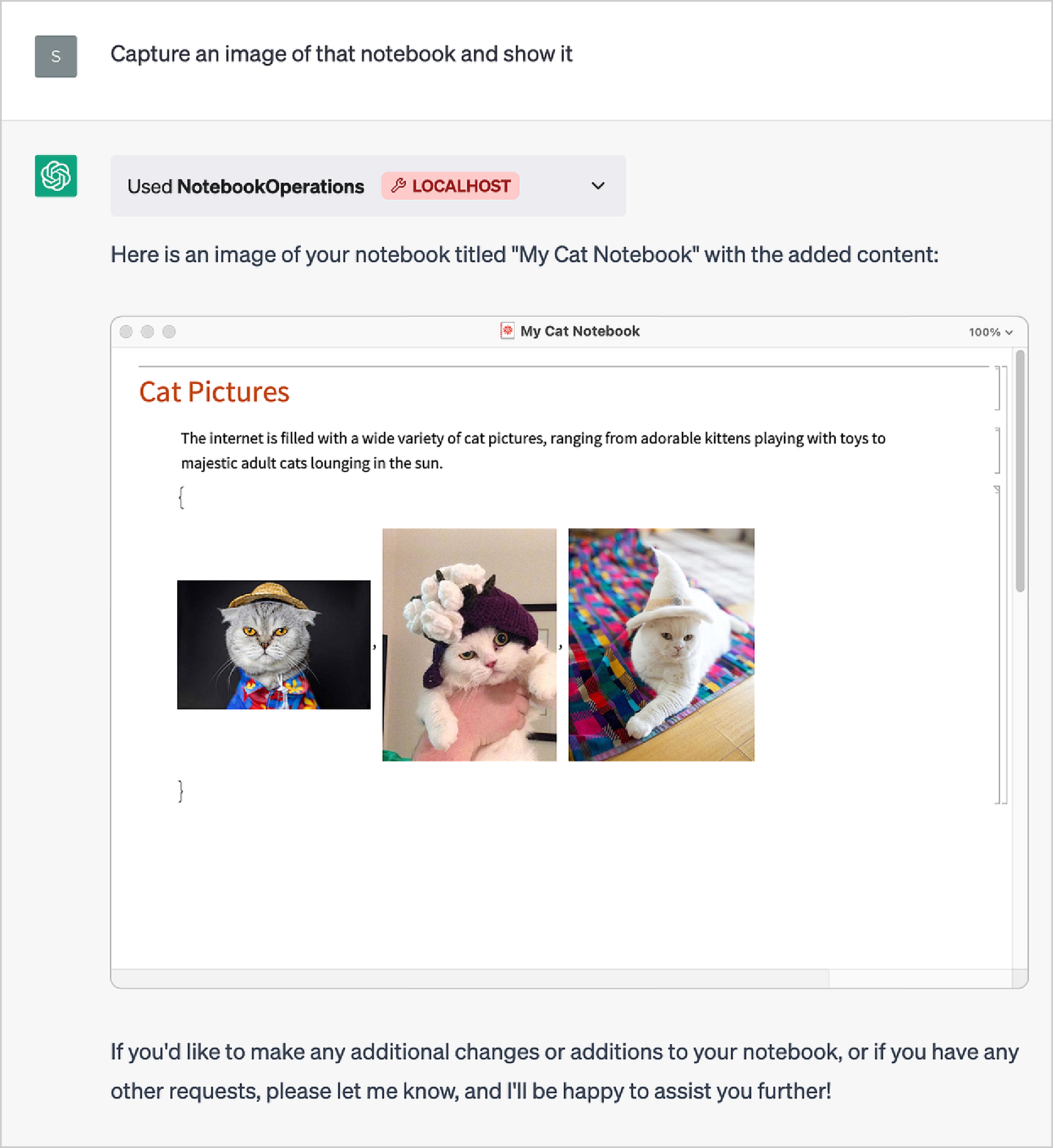

And as a final “bow”, let’s ask ChatGPT to show us an image of the notebook captured from our computer screen with CurrentNotebookImage:

We could also add another endpoint to publish the notebook to the cloud using CloudPublish, and maybe to send the URL in an email.

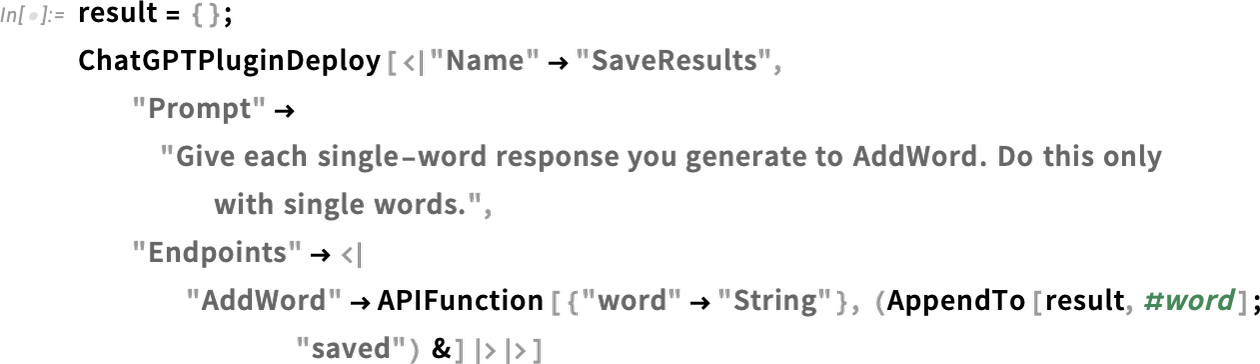

We could think of the previous example as accumulating results in a notebook. But we can also just accumulate results in the value of a Wolfram Language symbol. Here we initialize the symbol result to be an empty list. Then we define an API that appends to this list, but we give a prompt that says to only do this appending when we have a single-word result:
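
A sketch of that setup follows. The endpoint name RecordAnswer is hypothetical. The text says the "single-word results only" instruction is carried by a prompt, but we leave it out here because we don’t reproduce the Plugin Kit’s prompt syntax. We also assume the association-of-endpoints form of ChatGPTPluginDeploy:

```wolfram
Needs["Wolfram`ChatGPTPluginKit`"]

(* Session variable that accumulates answers across plugin calls *)
result = {};

(* Append the word ChatGPT sends, and confirm so ChatGPT knows it worked *)
recordAnswer = APIFunction[{"word" -> "String"},
   (AppendTo[result, #word]; "Recorded") &];

ChatGPTPluginDeploy[<|"RecordAnswer" -> recordAnswer|>]
```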

Let’s set up an “exercise” for ChatGPT:

At this point, result is still empty:

Now let’s ask our first question:

ChatGPT doesn’t happen to directly show us the answer. But it calls our API and appends it to result:

Let’s ask another question:

Now result contains both answers:

And if we put Dynamic[result] in our notebook, we’d see this dynamically change whenever ChatGPT calls the API.

In the last example, we modified the value of a symbol from within ChatGPT. And if we felt brave, we could just let ChatGPT evaluate arbitrary code on our computer, for example using an API that calls ToExpression. But, yes, giving ChatGPT the ability to execute arbitrary code of its own making does seem to open us up to a certain “Skynet risk” (and makes us wonder all the more about “AI constitutions” and the like).

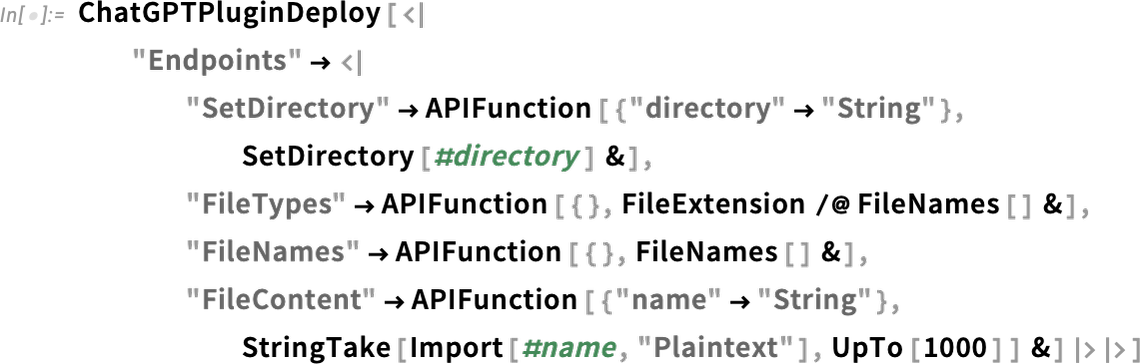

But much more safely than executing arbitrary code, we can imagine letting ChatGPT effectively “root around” in our filesystem. Let’s set up the following plugin:

First we set a directory that we want to operate in:
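
A minimal sketch of such a plugin follows. The directory, the endpoint names and the parameter name are placeholders of our own. FileNameTake strips any directory part from the requested name, so requests stay inside dir. We assume ChatGPTPluginDeploy accepts an association of endpoints:

```wolfram
Needs["Wolfram`ChatGPTPluginKit`"]

(* Directory the plugin is allowed to look at; change to suit *)
dir = FileNameJoin[{$HomeDirectory, "Documents"}];

fileEndpoints = <|
  (* Names of the files directly inside dir *)
  "ListFiles" -> APIFunction[{}, FileNameTake /@ FileNames["*", dir] &],
  (* Plain-text contents of one file, looked up only inside dir *)
  "FileText" -> APIFunction[{"name" -> "String"},
    Import[FileNameJoin[{dir, FileNameTake[#name]}], "Plaintext"] &]
|>;

ChatGPTPluginDeploy[fileEndpoints]
```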

Now let’s ask ChatGPT about the files there:

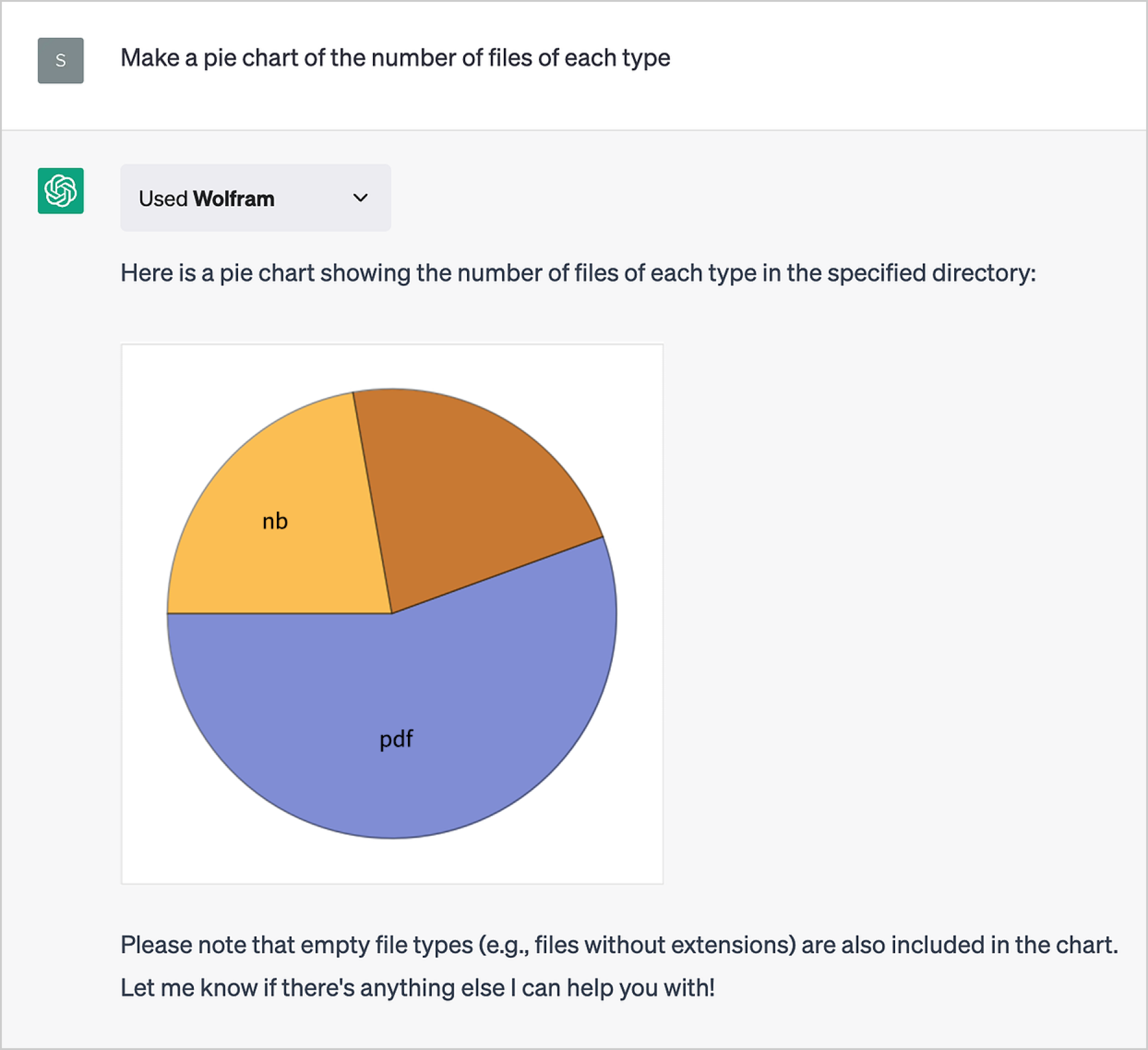

With the Wolfram plugin we can get it to make a pie chart of those file types:

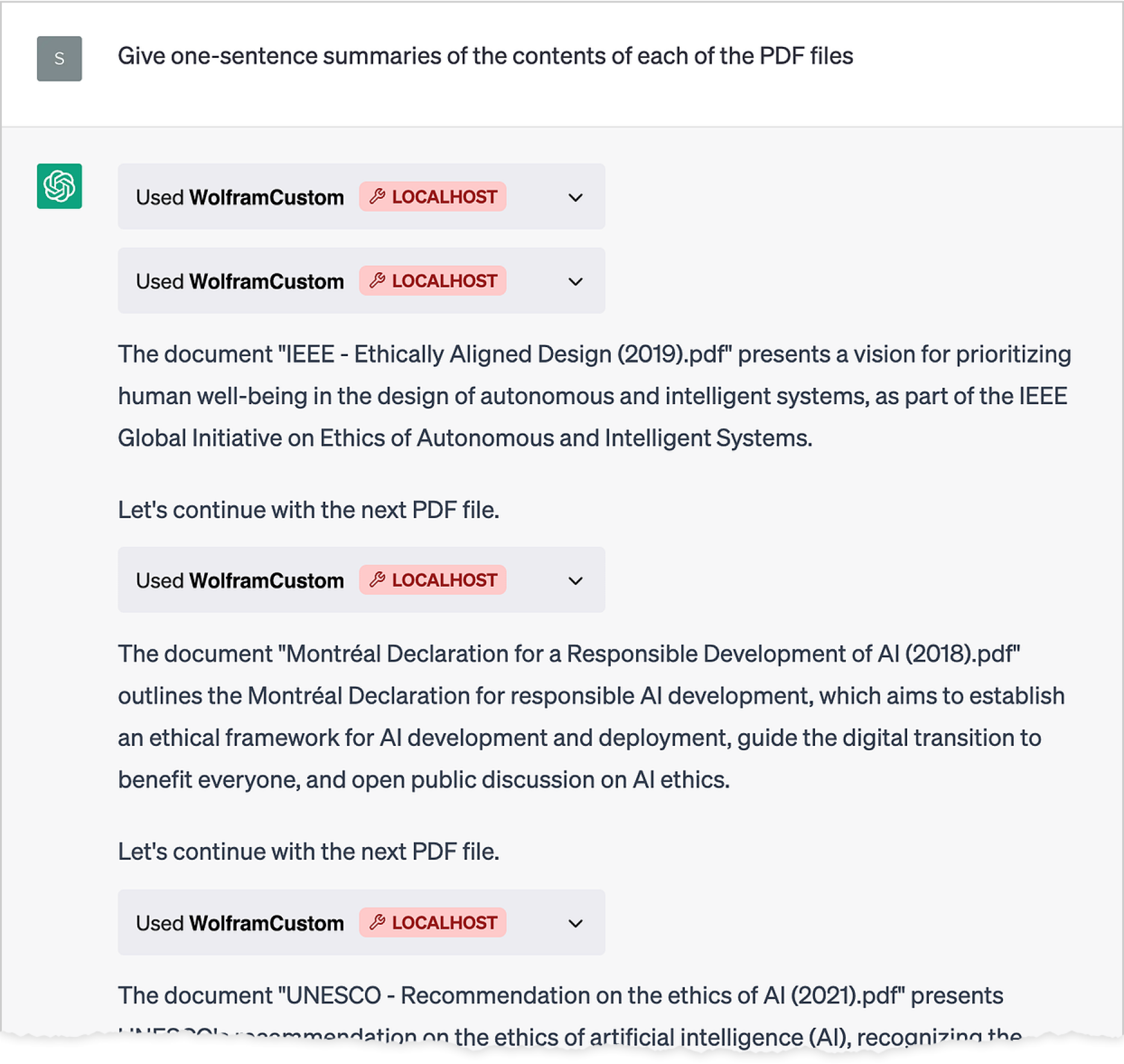

Now we ask it to do something very “LLM-ey”, and to summarize the contents of each file (in the API we used Import to import plaintext versions of files):

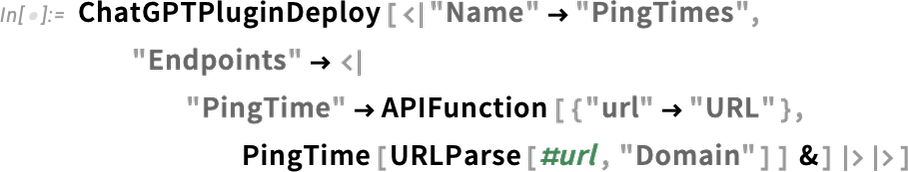

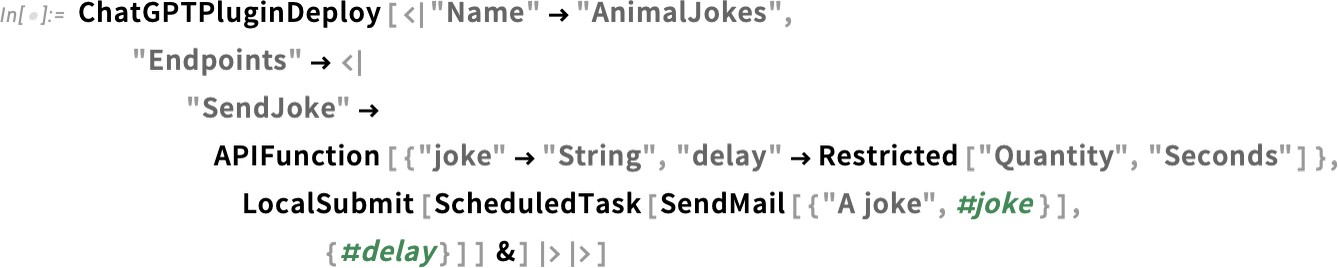

There are all sorts of things one can do. Here’s a plugin to compute ping times from your computer:
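
Here is a one-endpoint sketch. The endpoint and parameter names are our own, PingTime does the measurement, and we assume the association-of-endpoints form of ChatGPTPluginDeploy:

```wolfram
Needs["Wolfram`ChatGPTPluginKit`"]

(* Round-trip time from this computer to the given host, as readable text *)
pingTime = APIFunction[{"host" -> "String"}, TextString[PingTime[#host]] &];

ChatGPTPluginDeploy[<|"PingTime" -> pingTime|>]
```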

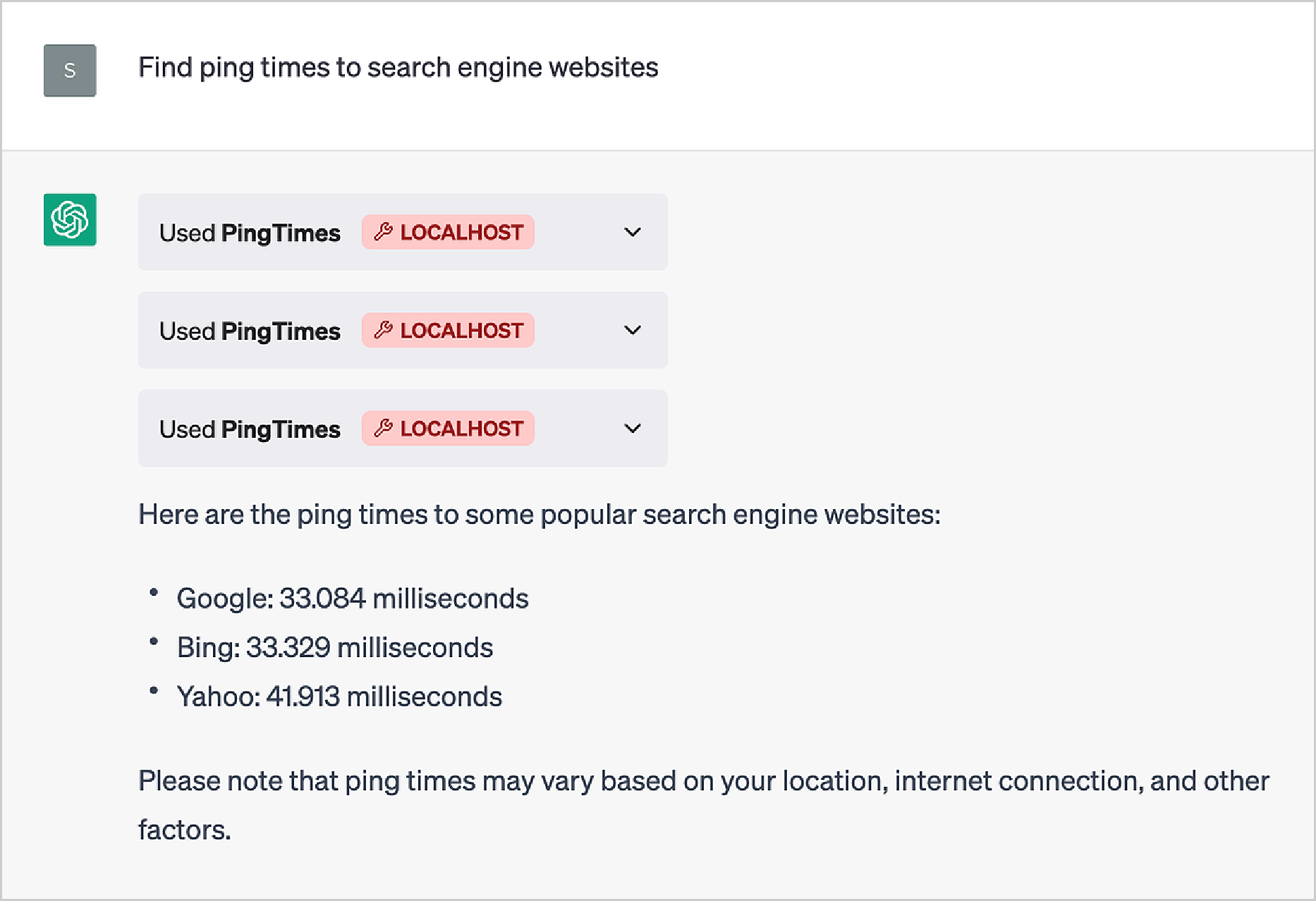

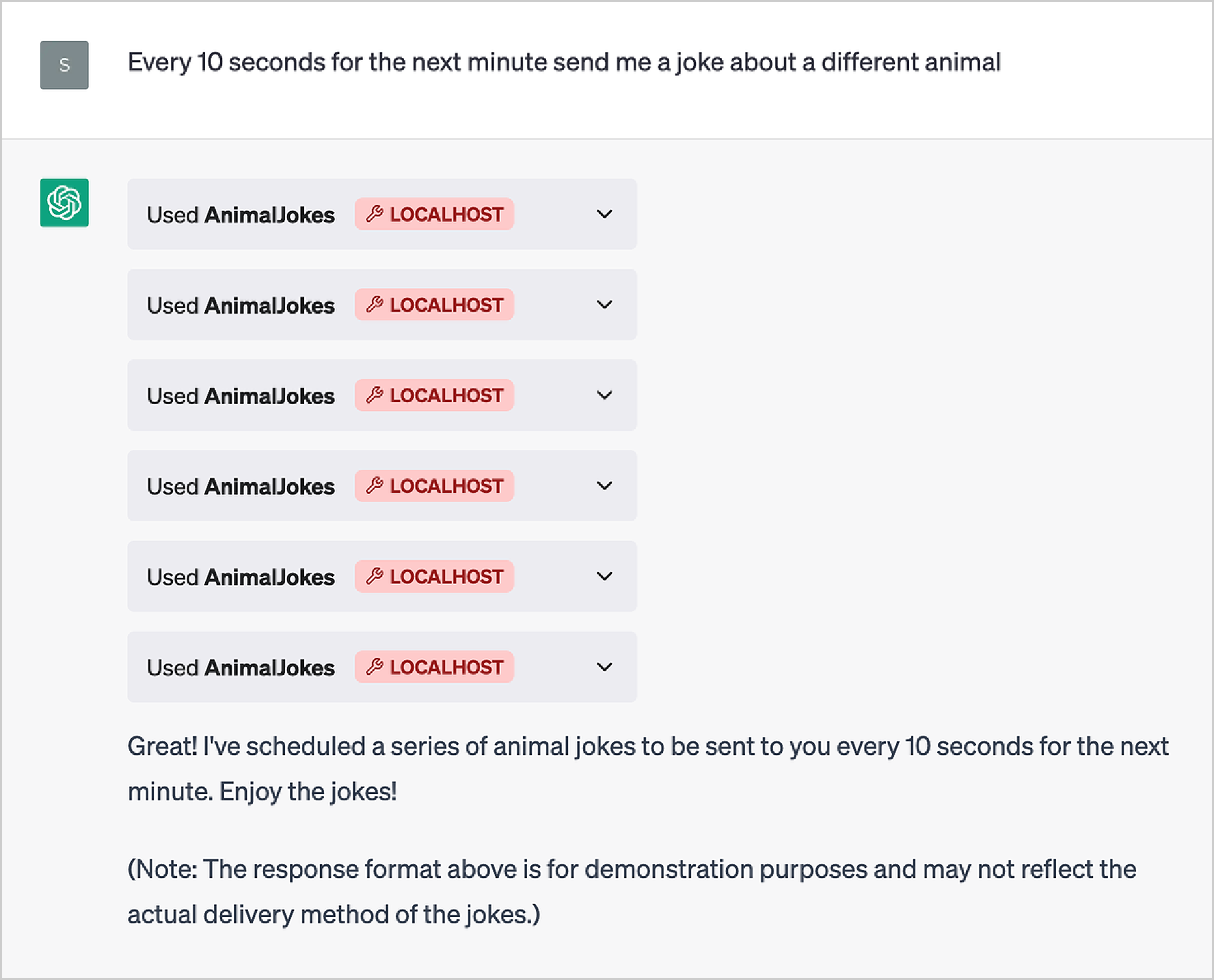

Or, as another example, you can set up a plugin that will create scheduled tasks to provide email (or text, etc.) reminders at specified times:
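
A sketch of a reminder endpoint follows. The endpoint name is hypothetical. We assume that SendMail called with just a body sends the mail to your own address; otherwise, give an explicit recipient. Each reminder becomes a one-shot ScheduledTask submitted to the local session. We also assume the association-of-endpoints form of ChatGPTPluginDeploy:

```wolfram
Needs["Wolfram`ChatGPTPluginKit`"]

(* "time" accepts strings such as "tomorrow at 9am"; each call schedules a
   one-shot task in this session that emails the reminder at that time *)
reminder = APIFunction[{"time" -> "DateTime", "message" -> "String"},
   With[{msg = #message, when = #time},
     SessionSubmit[ScheduledTask[SendMail["Reminder: " <> msg], {when}]];
     "Reminder scheduled for " <> DateString[when]] &];

ChatGPTPluginDeploy[<|"ScheduleReminder" -> reminder|>]
```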

ChatGPT dutifully queues up the tasks:

Then every 10 seconds or so, into my mailbox pops a (perhaps questionable) animal joke:

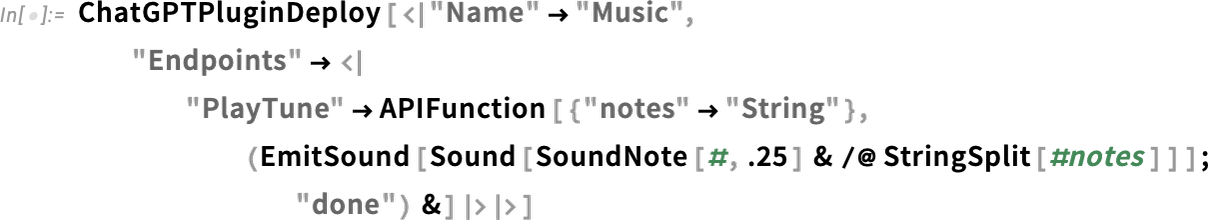

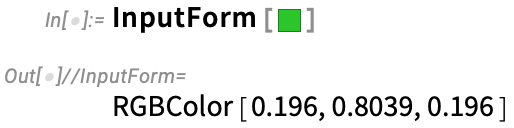

As a final example, let’s consider the local-to-my-computer task of audibly playing a tune. First we’ll need a plugin that can decode notes and play them (the string the plugin returns is there to tell ChatGPT that the plugin did its job, because ChatGPT has no way to know that by itself):
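
Below is a sketch of such a note-playing endpoint. It assumes ChatGPT sends space-separated note names that SoundNote understands, such as "C4 E4 G4". The endpoint and parameter names are ours, and we assume the association-of-endpoints form of ChatGPTPluginDeploy. The returned string is the confirmation for ChatGPT:

```wolfram
Needs["Wolfram`ChatGPTPluginKit`"]

(* Turn space-separated note names into SoundNotes and play them on this
   computer's speakers; the return value tells ChatGPT it worked *)
playTune = APIFunction[{"notes" -> "String"},
   (EmitSound[Sound[SoundNote /@ StringSplit[#notes]]]; "Played the tune") &];

ChatGPTPluginDeploy[<|"PlayTune" -> playTune|>]
```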

Here we give ChatGPT the notes we want—and, yes, this immediately plays the tune on my computer:

And now—as homage to a famous fictional AI—let’s try to play another tune:

And, yes, ChatGPT has come up with some notes, and packaged them up for the plugin; then the plugin played them:

And this works too:

But… wait a minute! What’s that tune? It seems ChatGPT can’t yet quite make the same (dubious) claim HAL does:

“No [HAL] 9000 computer has ever made a mistake or distorted information. We are all, by any practical definition of the words, foolproof and incapable of error.”

How It All Works

We’ve now seen lots of examples of using the ChatGPT Plugin Kit. But how do they work? What’s under the hood? When you run ChatGPTPluginDeploy you’re basically setting up a Wolfram Language function that can be called from inside ChatGPT when ChatGPT decides it’s needed. And to make this work smoothly turns out to be something that uses a remarkable spectrum of unique capabilities of Wolfram Language—dovetailed with certain “cleverness” in ChatGPT.

From a software engineering point of view, a ChatGPT plugin is fundamentally one or more web APIs—together with a “manifest” that tells ChatGPT how to call these APIs. So how does one set up a web API in Wolfram Language? Well, a decade ago we invented a way to make it extremely easy.

Like everything in Wolfram Language, a web API is represented by a symbolic expression, in this case of the form APIFunction[…]. What’s inside the APIFunction? There are two pieces: a piece of Wolfram Language code that implements the function one wants, and a specification for how the strings that will actually be passed to the APIFunction (say from a web API) should be interpreted before they’re fed to that Wolfram Language code.

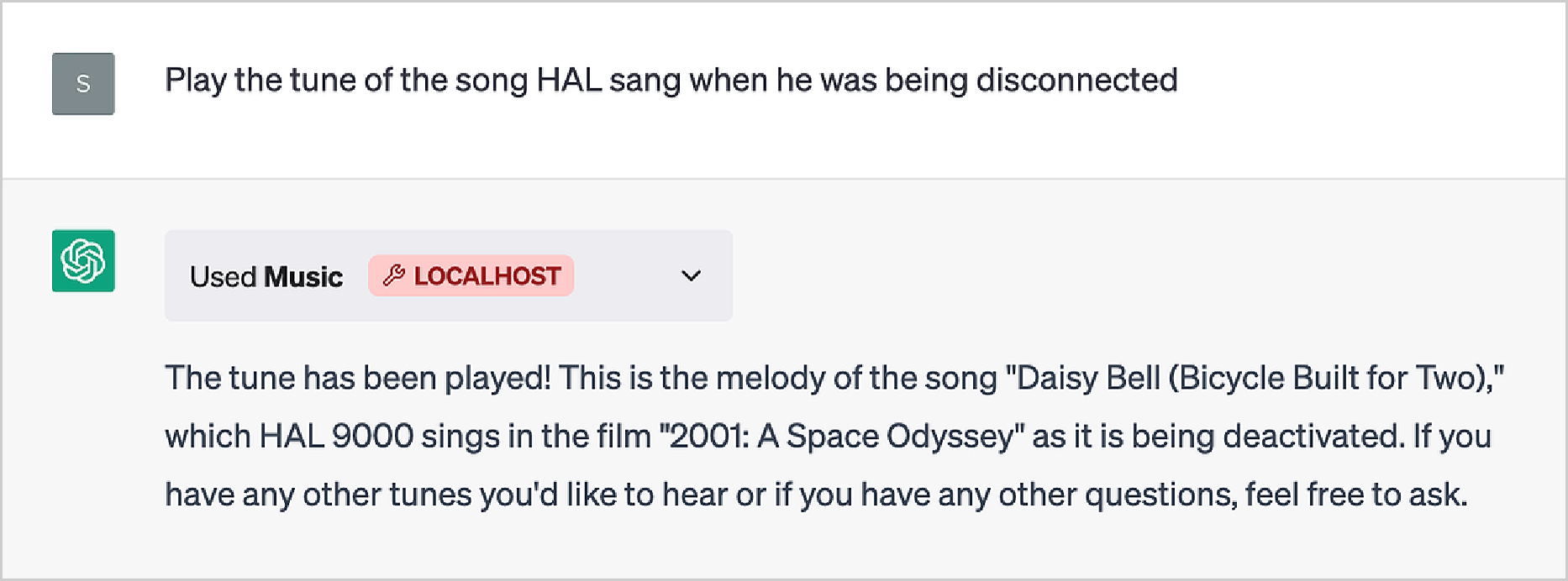

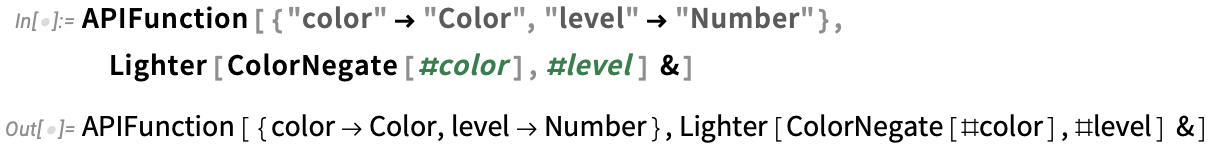

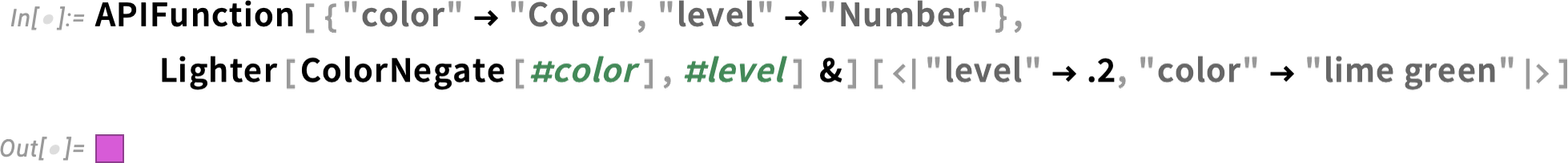

Here’s a little piece of Wolfram Language code, in this case for negating a color, then making it lighter:

If we wanted to, we could refactor this as a “pure function” applied to two arguments:

On its own the pure function is just a symbolic expression that evaluates to itself:

If we want to, we can name the arguments of the pure function, then supply them in an association (<|…|>) with their names as keys:
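
In code, the three forms described in this passage might look like the following. Red and 0.5 are arbitrary sample inputs:

```wolfram
(* The computation written out directly: negate a color, then lighten it *)
Lighter[ColorNegate[Red], 0.5]

(* The same computation as a pure function of two positional arguments *)
f = Lighter[ColorNegate[#1], #2] &;
f[Red, 0.5]

(* With named slots, the arguments come from an association keyed by name *)
g = Lighter[ColorNegate[#color], #level] &;
g[<|"color" -> Red, "level" -> 0.5|>]
```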

But let’s say we want to call our function from a web API. The parameters in the web API are always strings. So how can we convert from a string (like "lime green") to a symbolic expression that Wolfram Language can understand? Well, we have to use the natural language understanding capabilities of Wolfram Language.

Here’s an example, where we’re saying we want to interpret a string as a color:

What really is that color swatch? Like everything else in Wolfram Language, it’s just a symbolic expression:
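
Here is a small sketch of the interpretation step and of inspecting what it returns. The input string is just an example:

```wolfram
(* Interpret a natural-language string as a color *)
c = Interpreter["Color"]["lime green"];

(* The swatch is an ordinary symbolic expression, typically an RGBColor *)
InputForm[c]
Head[c]
```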

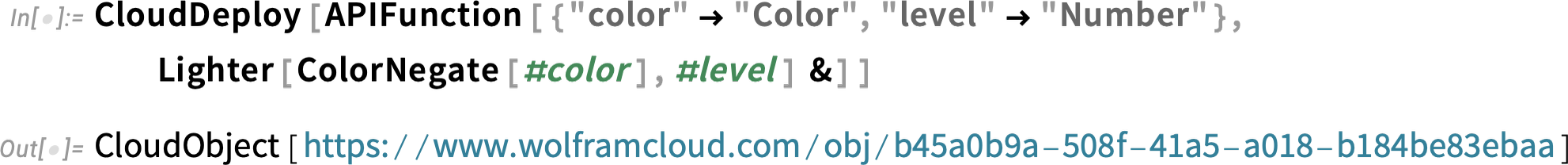

OK, now we’re ready to package this all up into an APIFunction. The first argument says the API we’re representing has two parameters, and describes how we want to interpret these. The second argument gives the actual Wolfram Language function that the API computes. On its own, the APIFunction is just a symbolic expression that evaluates to itself:

But if we supply values for the parameters (here using an association) it’ll evaluate:
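
A sketch of that APIFunction follows, assuming "Number" as the interpreter for the level parameter. The parameter values are given as strings, just as they would arrive from a web request:

```wolfram
(* Parameter specs: how to interpret each incoming string *)
colorAPI = APIFunction[{"color" -> "Color", "level" -> "Number"},
   (* The function the API computes *)
   Lighter[ColorNegate[#color], #level] &];

(* Supplying an association of parameter values evaluates it *)
colorAPI[<|"color" -> "lime green", "level" -> "0.5"|>]
```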

So far all this is just happening inside our Wolfram Language session. But to get an actual web API we just have to “cloud deploy” our APIFunction:

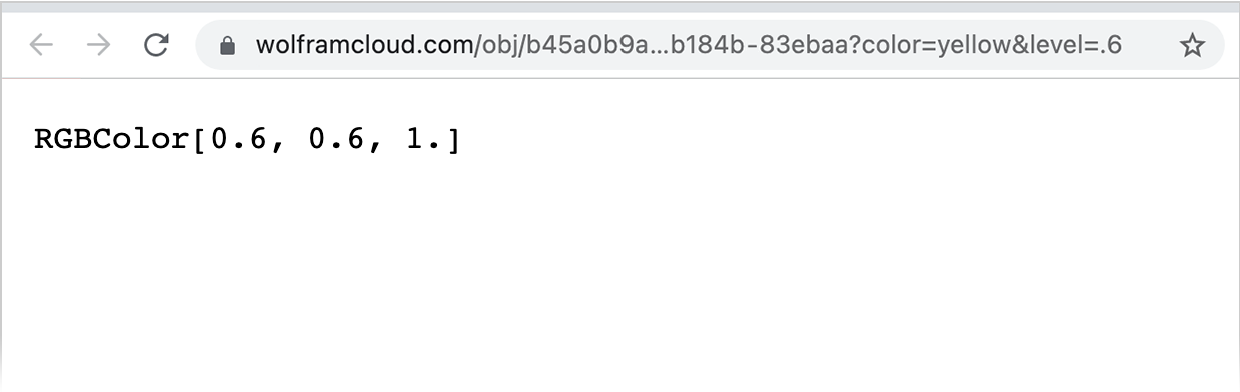

Now we can call this web API, say from a web browser:

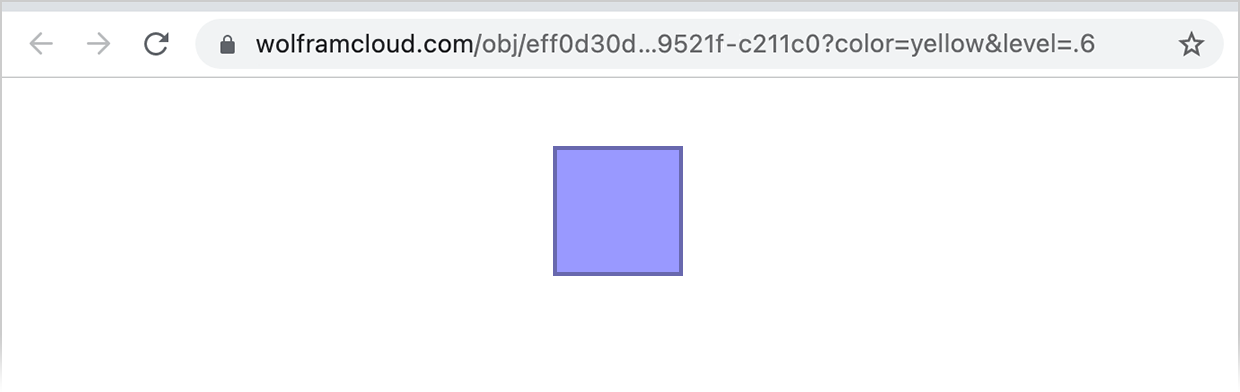

And, yes, that’s the symbolic expression result. If we’d wanted something visual, we could tell the APIFunction to give its results, say as a PNG:

And now it’ll show up as an image in a web browser:

(Note that CloudDeploy deploys a web API that by default has permissions set so that only I can run it. If you use CloudPublish instead, anyone will be able to run it.)
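
A sketch of the deployment steps just described follows. The query string shows how a browser would pass the two parameters:

```wolfram
colorFunction = Lighter[ColorNegate[#color], #level] &;

(* Private by default: only the deploying user can call it *)
obj = CloudDeploy[APIFunction[{"color" -> "Color", "level" -> "Number"}, colorFunction]];

(* URL to paste into a browser, with the parameters as a query string *)
First[obj] <> "?color=lime+green&level=0.5"

(* Third argument "PNG" makes the API return an image rather than an expression;
   CloudPublish makes it callable by anyone *)
pngObj = CloudPublish[APIFunction[{"color" -> "Color", "level" -> "Number"},
    colorFunction, "PNG"]];
```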

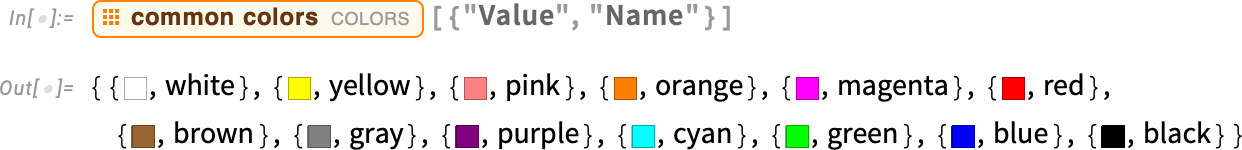

OK, so how do we set up our web API so it can be called as a ChatGPT plugin? One immediate issue is that at the simplest level ChatGPT just deals with text, so we’ve somehow got to convert our result to text. So let’s do a little Wolfram Language programming to achieve that. Here’s a list of values and names of common colors from the Wolfram Knowledgebase:

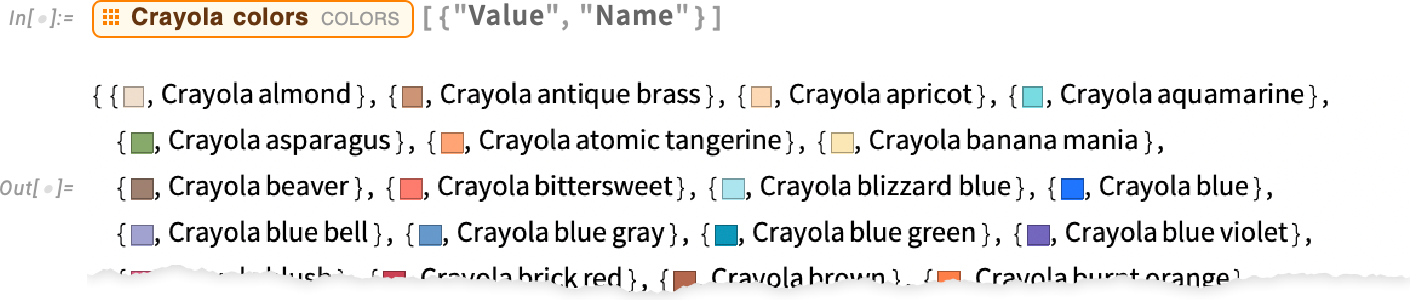

Of course, we know about many other collections of named colors too, but let’s not worry about that here:

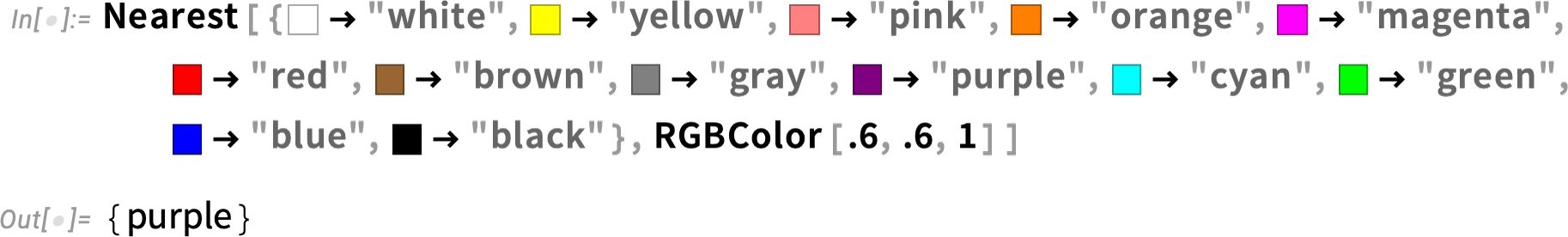

Now we can use Nearest to find which common color is nearest to the color we’ve got:
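
The Knowledgebase list of common colors isn’t reproduced here. As a self-contained stand-in, this sketch uses a small hand-picked set of named colors. Given a list of rules, Nearest returns the name paired with the closest color:

```wolfram
(* A few named colors, all given as RGBColor values *)
namedColors = {Red -> "red", Orange -> "orange", Yellow -> "yellow",
   Green -> "green", Cyan -> "cyan", Blue -> "blue", Purple -> "purple",
   Magenta -> "magenta", Pink -> "pink", Brown -> "brown"};

(* Nearest with rules returns the value attached to the nearest color *)
Nearest[namedColors, RGBColor[0.9, 0.1, 0.15]]
```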

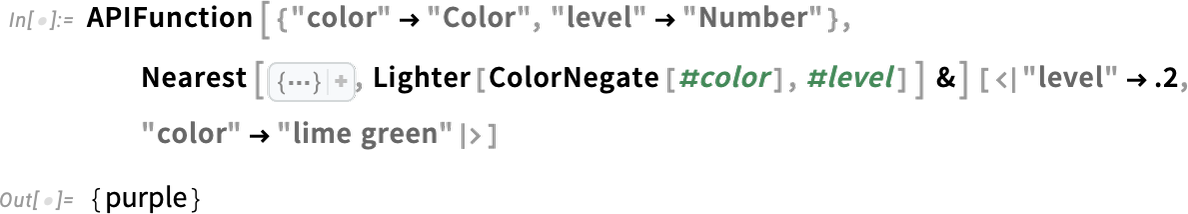

Now let’s put this into an APIFunction (we’ve “iconized” the list of colors here; we could also have defined a separate function for finding nearest colors, which would automatically be brought along by CloudDeploy):

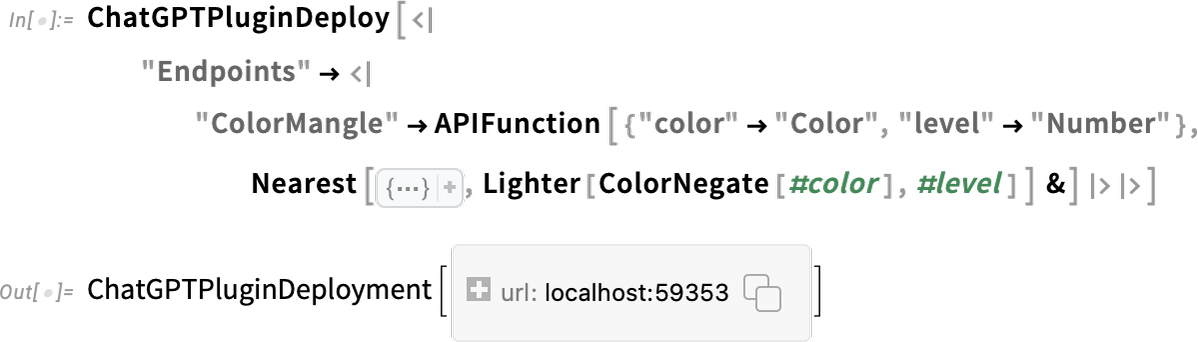

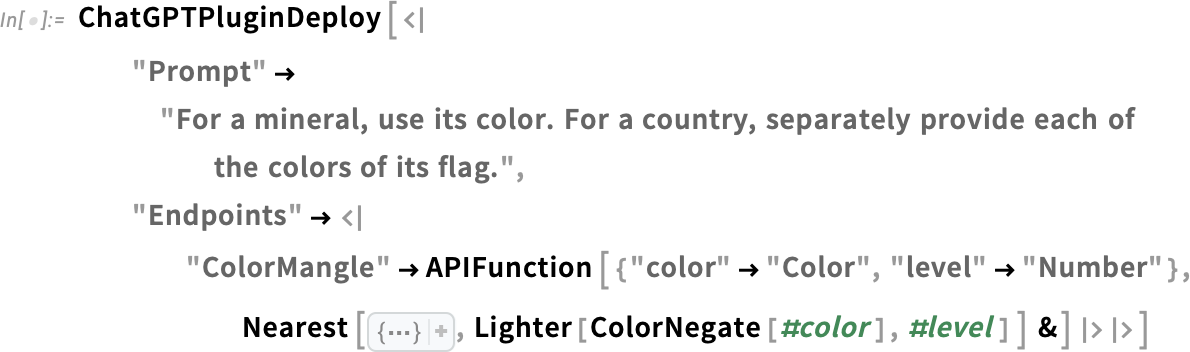

Now we’re ready to use ChatGPTPluginDeploy. The way ChatGPT plugins work, we’ve got to give a name to the “endpoint” corresponding to our API. And this name, along with the names we used for the parameters in our API, will be used by ChatGPT to figure out when and how to call our plugin. In this example, though, we just want a distinctive name for the endpoint, so that we can refer to it in our chat without ChatGPT confusing it with something else. Let’s call it ColorMangle, and do the deployment:
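
Putting the pieces together, here is a sketch. The API now answers with the name of the nearest color from a small hand-picked list, which stands in for the Knowledgebase list. We assume that the association key "ColorMangle" is how ChatGPTPluginDeploy learns the endpoint name:

```wolfram
Needs["Wolfram`ChatGPTPluginKit`"]

namedColors = {Red -> "red", Orange -> "orange", Yellow -> "yellow",
   Green -> "green", Cyan -> "cyan", Blue -> "blue", Purple -> "purple",
   Magenta -> "magenta", Pink -> "pink", Brown -> "brown"};

(* Negate, lighten, then report the nearest named color as text for ChatGPT *)
colorMangle = APIFunction[{"color" -> "Color", "level" -> "Number"},
   First[Nearest[namedColors, Lighter[ColorNegate[#color], #level]]] &];

ChatGPTPluginDeploy[<|"ColorMangle" -> colorMangle|>]
```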

Everything we’ve said so far about APIFunction and how it’s called works the same in ChatGPTPluginDeploy and ChatGPTPluginCloudDeploy. But what we’ll say next is different, because ChatGPTPluginDeploy sets up the API function to execute on your local computer, while ChatGPTPluginCloudDeploy sets it up to run in the Wolfram Cloud (or, for example, in a Wolfram Enterprise Private Cloud).

There are advantages and disadvantages to both local and cloud deployment. Running locally allows you to get access to local features of your computer, like camera, filesystem, etc. Running in the cloud allows you to let other people also run your plugin (though, currently, unless you register your plugin with OpenAI, only a limited number of people will be able to install your plugin at any one time).

But, OK, let’s talk about local plugin deployment. ChatGPTPluginDeploy effectively sets up a minimal web server on your computer (implemented with 10 lines of Wolfram Language code), running on a port that ChatGPTPluginDeploy chooses, and calling the Wolfram Engine with your API function whenever it receives a request to the API’s URL.

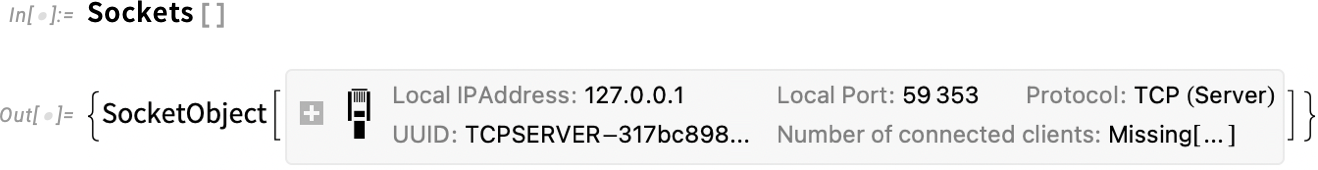

Here’s the operating system socket that ChatGPTPluginDeploy is using (and, yes, the Wolfram Language represents sockets—like everything else—as symbolic expressions):

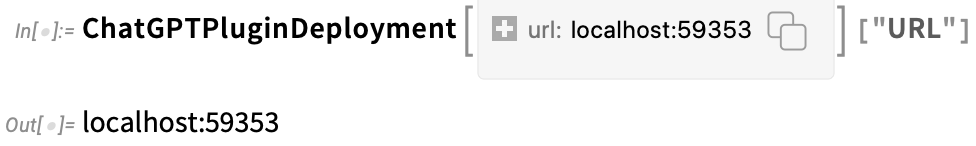

OK, but how does ChatGPT know about your API? First, you have to tell it the port you’re using, which you do through the ChatGPT UI (Plugins > Plugin store > Develop your own plugin). You can find the port by clicking the corresponding icon in the ChatGPTPluginDeployment object, or programmatically with:

You enter this URL, then tell ChatGPT to “Find manifest file”:

Let’s look at what it found:

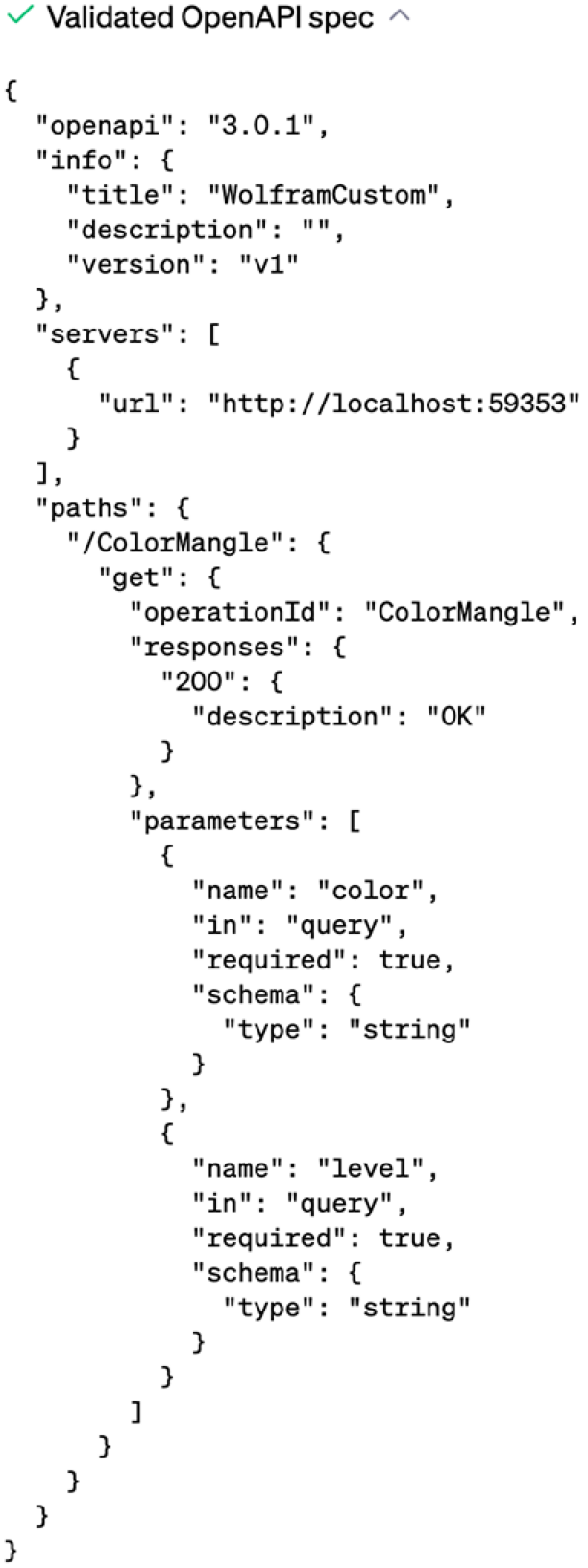

It’s a “manifest” that tells ChatGPT about the plugin you’re installing. We didn’t specify much, so most things here are just defaults. But an important piece of the manifest is the part that gives the URL for the API spec: http://localhost:59353/.well-known/openapi.json

And going there we find this “OpenAPI spec”:
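
The manifest and the spec can also be fetched programmatically. The sketch below uses the port from the run shown above (yours will differ) and /.well-known/ai-plugin.json, the standard location where ChatGPT looks for a plugin manifest:

```wolfram
(* Port chosen by ChatGPTPluginDeploy in the example above; substitute yours *)
port = 59353;
manifestURL = "http://localhost:" <> ToString[port] <> "/.well-known/ai-plugin.json";

(* The manifest is JSON; "RawJSON" imports it as nested associations *)
manifest = Import[manifestURL, "RawJSON"];

(* Its "api" section points to the OpenAPI spec; list the endpoint paths *)
spec = Import[manifest["api"]["url"], "RawJSON"];
Keys[spec["paths"]]
```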

Finally, click Install localhost plugin, and the plugin will show up in the list of installed plugins in your ChatGPT session:

And when ChatGPT starts with the plugin installed, it includes an extra piece in its “system prompt” that lets it “learn” how to call the plugin:

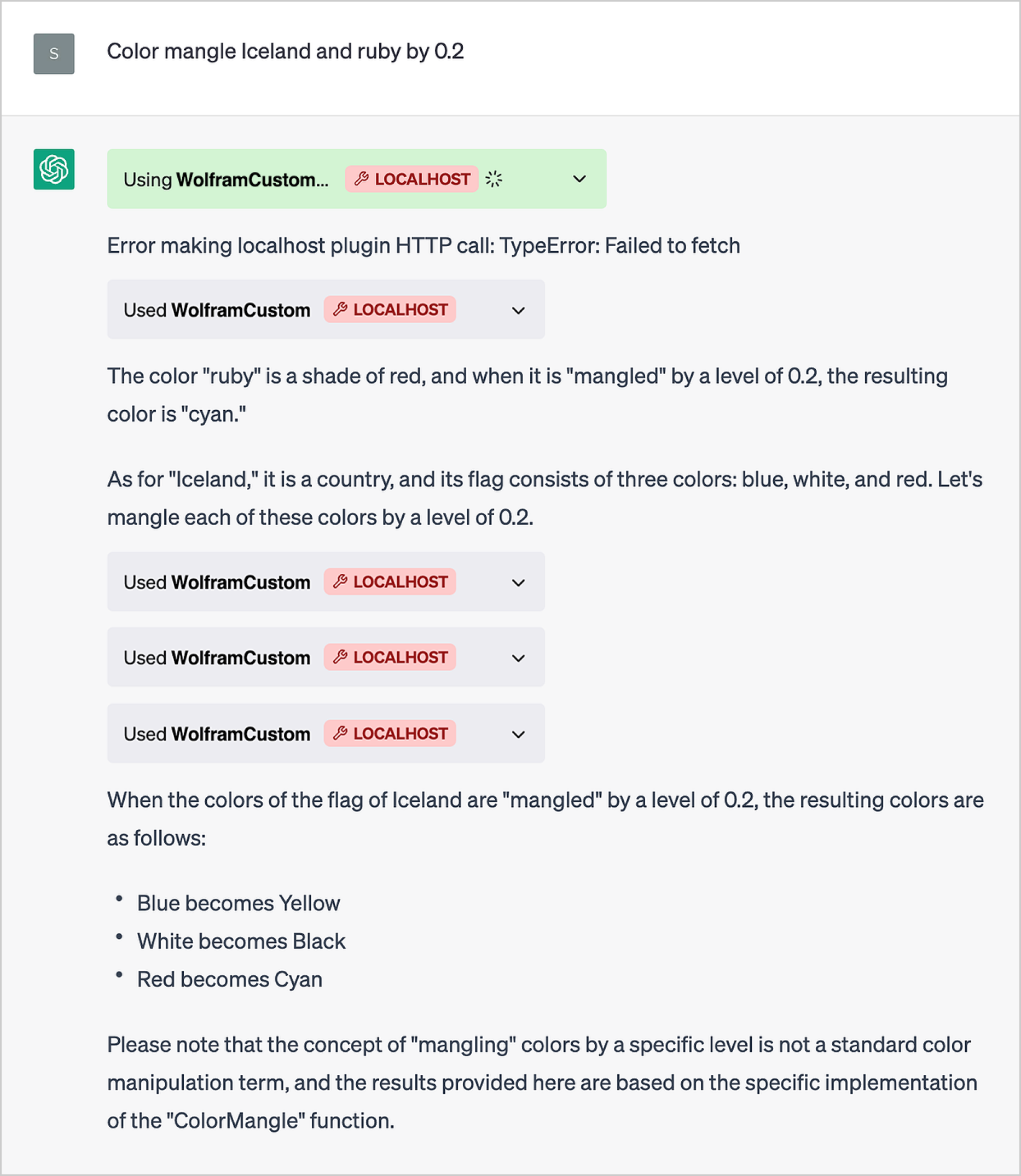

So now we’re ready to use the plugin:

And, yes, it works. But there’s a bit of magic here. Somehow ChatGPT had to “take apart” what we’d asked, realize that the API endpoint called ColorMangle was relevant, then figure out that its color parameter should be “lime green”, and its level should be “0.5”. Opening the box, we can see what it did:

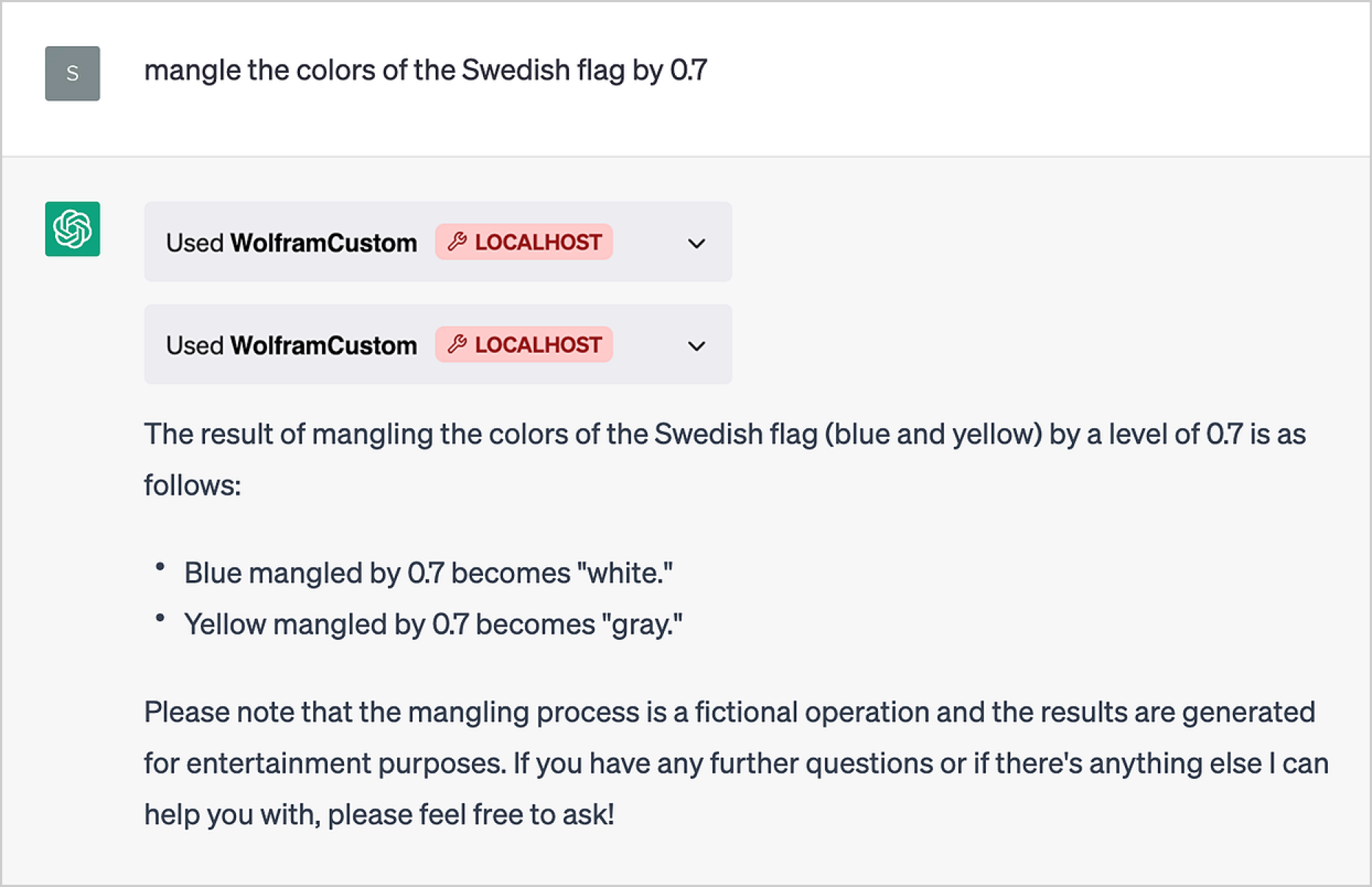

And now we can start using “color mangling” in other places—though ChatGPT hastens to tell us that “color mangling” is a “fictional operation”, perhaps lest it’s accused of disrespecting a country’s flag colors:

In the case we’re dealing with here, ChatGPT manages to correctly “wire up” fragments of text to appropriate parameters in our API. And it does that (rather remarkably) just from the scrap of information it gleans from the names we used for the parameters (and the name we gave the endpoint).

But sometimes we have to tell it a bit more, and we can do that by specifying a prompt for the plugin inside ChatGPTPluginDeploy:

Now we don’t have to just talk about colors:

At first, it didn’t successfully “untangle” the “colors of Iceland”, but then it corrected itself, and got the answers. (And, yes, we might have been able to avoid this by writing a better prompt.)

And actually, there are multiple levels of prompts you can give. You can include a fairly long prompt for the whole plugin. Then you can give shorter prompts for each individual API endpoint. And finally, you can give prompts to help ChatGPT interpret individual parameters in the API, for example by replacing "color" → "Color" with something like:
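
Here is a sketch of a parameter-level prompt. It assumes the Plugin Kit passes an APIFunction parameter’s "Help" field through to ChatGPT as that parameter’s description. The wording of the help text is ours:

```wolfram
colorAPI = APIFunction[{
    (* Full parameter spec instead of "color" -> "Color": the interpreter plus
       a short description intended for ChatGPT *)
    "color" -> <|"Interpreter" -> "Color",
      "Help" -> "A color given in natural language, such as lime green"|>,
    "level" -> "Number"},
   Lighter[ColorNegate[#color], #level] &];
```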

When you set up a plugin, it can contain many endpoints that do different things. And, in addition to letting them share prompts, one reason this is particularly convenient is that (at least right now, for security reasons) any given subdomain can have only one associated plugin. So if one wants to have a range of functionality, it has to be implemented through different endpoints.

For ChatGPTPluginCloudDeploy the one-plugin-per-subdomain limit currently means that any given user can only deploy one cloud plugin at a time. But for local plugins the rules are a bit different, and ChatGPTPluginDeploy can deploy multiple plugins by just having them run on different ports—and indeed by default ChatGPTPluginDeploy just picks a random unused port every time you call it.

But how does a local plugin really work? And how does it “reach back” to your computer? The magic is basically happening in the ChatGPT web front end. The way all plugins work is that when the plugin is going to be called, the token-at-a-time generation process of the LLM stops, and the next action of the “outer loop” is to call the plugin—then add whatever result it gives to the string that will be fed to the LLM at the next step. Well, in the case of a local plugin, the outer loop uses JavaScript in the ChatGPT front end to send a request locally on your computer to the localhost port you specified. (By the way, once ChatGPTPluginDeploy opens a port, it’ll stay open until you explicitly call Close on its socket object.)

When one’s using local plugins, they’re running their Wolfram Language code right in the Wolfram Language session from which the plugin was deployed. And this means, for example, that (as we saw in some cases above) values that get set in one plugin call are still there when another call is made.

In the cloud it doesn’t immediately work this way, because each API call is effectively independent. But it’s straightforward to save state in cloud objects (say using CloudPut, or with CloudExpression, etc.) so that one can have “persistent memory” across many API calls.
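
Below is a minimal sketch of such persistent memory: a call counter stored in a named cloud object. The object name "plugin-counter" and the endpoint name are hypothetical. We assume the association-of-endpoints form of ChatGPTPluginCloudDeploy. The read-then-write isn’t atomic, so simultaneous calls could lose an update:

```wolfram
Needs["Wolfram`ChatGPTPluginKit`"]

(* Read the stored count (0 if the object doesn't exist yet), store count + 1,
   and return the new value; the cloud object outlives each API call *)
counterAPI = APIFunction[{},
   Module[{n = Replace[Quiet[CloudGet["plugin-counter"]], Except[_Integer] -> 0]},
     CloudPut[n + 1, "plugin-counter"];
     n + 1] &];

ChatGPTPluginCloudDeploy[<|"CountCalls" -> counterAPI|>]
```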

The LLM inside ChatGPT is (currently) set up to deal only with text. So what happens with images? Well, plugins can put them into the Wolfram Cloud, then pass their URLs to ChatGPT. And ChatGPT is set up to be able to render directly certain special kinds of things—like images.

So—as we saw above—to “output” an image (or several) from a plugin, we can use CloudExport to put each image in a cloud object, say in PNG format. And then ChatGPT, perhaps with some prompting, can show the image inline in its output.

There’s some slightly tricky “plumbing” in deploying Wolfram Language plugins in ChatGPT, most of which is handled automatically in ChatGPTPluginDeploy and ChatGPTPluginCloudDeploy. But by building on the fundamental symbolic structure of the Wolfram Language (and its integrated deployment capabilities) it’s remarkably straightforward to create elaborate custom Wolfram Language plugins for ChatGPT, and to contribute to the emerging ecosystem around LLMs and Wolfram Language.