A complete guide to AI accelerators for deep learning inference — GPUs, AWS Inferentia and Amazon Elastic Inference

Learn about CPUs, GPUs, AWS Inferentia, and Amazon Elastic Inference and how to choose the right AI accelerator for inference deployment

How to choose: GPUs, AWS Inferentia and Amazon Elastic Inference for inference (illustration by author)

Let’s start by answering the question “What is an AI accelerator?”

An AI accelerator is a dedicated processor designed to accelerate machine learning computations. Machine learning, and particularly its subset deep learning, consists primarily of a large number of linear algebra computations (i.e. matrix-matrix and matrix-vector operations), and these operations can be easily parallelized. AI accelerators are specialized hardware designed to accelerate these basic computations in order to improve performance, reduce latency and reduce the cost of deploying machine learning based applications.
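To make the "easily parallelized" point concrete, here is a minimal sketch in plain Python. It assumes nothing about any particular accelerator; the helper names `dot`, `matvec_sequential` and `matvec_parallel` are made up for illustration. Each output element of a matrix-vector product depends only on one row and the input vector, so the rows can be processed independently.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """Dot product of one matrix row with the input vector."""
    return sum(a * b for a, b in zip(row, vec))


def matvec_sequential(matrix, vec):
    """Compute the matrix-vector product one row at a time."""
    return [dot(row, vec) for row in matrix]


def matvec_parallel(matrix, vec, workers=4):
    """Compute the same product with rows handed out to a worker pool.

    No row needs the result of any other row, which is the property
    that parallel hardware exploits.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vec), matrix))


matrix = [[1, 2], [3, 4], [5, 6]]
vec = [10, 1]
print(matvec_sequential(matrix, vec))
print(matvec_parallel(matrix, vec))
```

Python threads will not make this faster; the sketch only shows that the work splits cleanly into independent pieces, which an accelerator can run on thousands of hardware lanes at once.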

Do I need an AI accelerator for machine learning (ML) inference?

Let’s say you have an ML model as part of your software application. The prediction step (or inference) is often the most time-consuming part of your application, and it directly affects user experience. A model that takes several hundred milliseconds to generate text translations, apply filters to images or generate product recommendations can drive users away from your “sluggish”, “slow”, “frustrating to use” app.

By speeding up inference, you can reduce the overall application latency and deliver an app experience that can be described as “smooth”, “snappy”, and “delightful to use”. And you can speed up inference by offloading ML model prediction computation to an AI accelerator.

With great market needs comes great many product alternatives, so naturally there is more than one way to accelerate your ML models in the cloud.

In this blog post, I’ll explore three popular options:

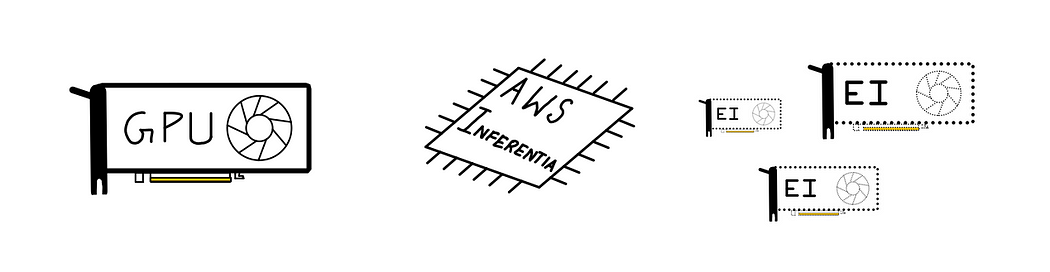

- GPUs: Particularly, the high-performance NVIDIA T4 and NVIDIA V100 GPUs
- AWS Inferentia: A custom-designed machine learning inference chip by AWS
- Amazon Elastic Inference (EI): An accelerator that saves cost by giving you access to variable-size GPU acceleration, for models that don’t need a dedicated GPU

Choosing the right type of hardware acceleration for your workload can be difficult. Through the rest of this post, I’ll walk you through various considerations such as target throughput, latency, cost budget, model type and size, choice of framework, and others to help you make your decision. I’ll also present plenty of code examples and discuss the developer friendliness and ease of use of each option.

Disclaimer: Opinions and recommendations in this article are my own and do not reflect the views of my current or past employers.

A little bit of hardware accelerator history

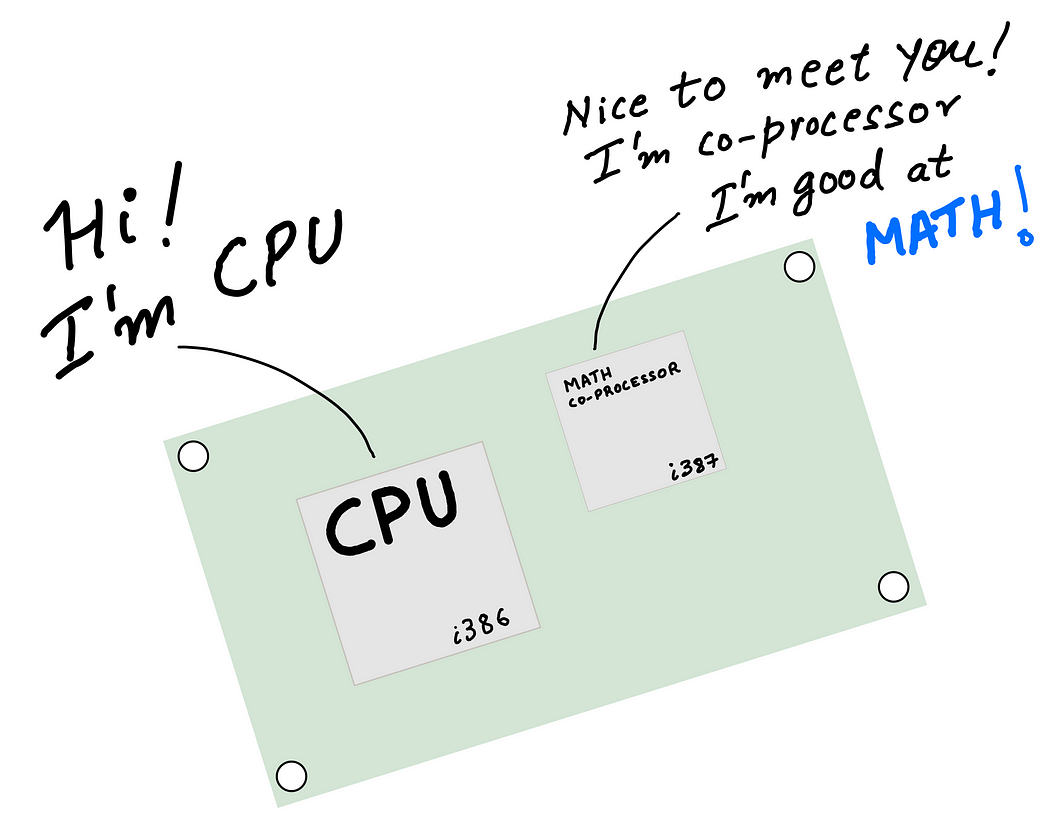

In the early days of computing (in the 70s and 80s), to speed up math computations on your computer, you paired a CPU (Central Processing Unit) with an FPU (floating-point unit) aka math coprocessor. The idea was simple — allow the CPU to offload complex floating point mathematical operations to a specially designed chip, so that the CPU could focus on executing the rest of the application program, run the operating system etc. Since the system had different types of processors (the CPU and the math coprocessor) the setup was sometimes referred to as heterogeneous computing.

Fast forward to the 90s, and the CPUs got faster, better and more efficient, and started to ship with integrated floating-point hardware. The simpler system prevailed, and coprocessors and heterogeneous computing fell out of fashion for the regular user.

Around the same time, specific types of workloads started to get more complex. Designers demanded better graphics, and engineers and scientists demanded faster computers for data processing, modeling and simulations. This meant there was a need (and a market) for high-performance processors that could run “special programs” much faster than a CPU, freeing up the CPU to do other things. Computer graphics was an early example of a workload being offloaded to a special processor. You may know this special processor by its common name, the venerable GPU.

The early 2010s saw yet another class of workloads that needed hardware acceleration to be viable, much like computer graphics: deep learning, or machine learning with deep neural networks. GPUs were already on the market and, unlike the early fixed-function GPUs, had become highly programmable over the years. Naturally, ML practitioners started using GPUs to accelerate deep learning training and inference.

A CPU can offload complex machine learning operations to an AI accelerator (illustration by author)

Today’s deep learning inference acceleration landscape is much more interesting. CPUs acquired support for advanced vector extensions (AVX-512) to accelerate matrix math computations common in deep learning. GPUs acquired new capabilities such as support for reduced precision arithmetic (FP16 and INT8) further accelerating inference.

In addition to CPUs and GPUs, today you also have access to specialized hardware, with custom-designed silicon built just for deep learning inference. These specialized processors, also called Application Specific Integrated Circuits or ASICs, can be far more performant and cost effective than general purpose processors, provided your workload is supported by the processor. A great example of such specialized processors is AWS Inferentia, a custom-designed ASIC by AWS for accelerating deep learning inference.

The right choice of hardware acceleration for your application may not be obvious at first. In the next section, we’ll discuss the benefits of each approach and considerations such as throughput, latency, cost and other factors that will affect your choice.

AI accelerators and how to choose the right option

It’s hard to answer general questions such as “is GPU better than CPU?” or “is CPU cheaper than a GPU” or “is an ASIC always faster than a GPU”. There really isn’t a single hardware solution that works well for every use case and the answer depends on your workload and several considerations:

- Model type and programmability: model size, custom operators, supported frameworks
- Target throughput, latency and cost: deliver a good customer experience within a budget
- Ease of use of compiler and runtime toolchain: should be quick to learn and shouldn’t require hardware knowledge

While considerations such as model support and target latency are objective, ease of use can be very subjective. Therefore, I caution against general recommendations that don’t consider all of the above for your specific application. Such high-level recommendations tend to be biased.

Let’s review these key considerations.

1. Model type and programmability

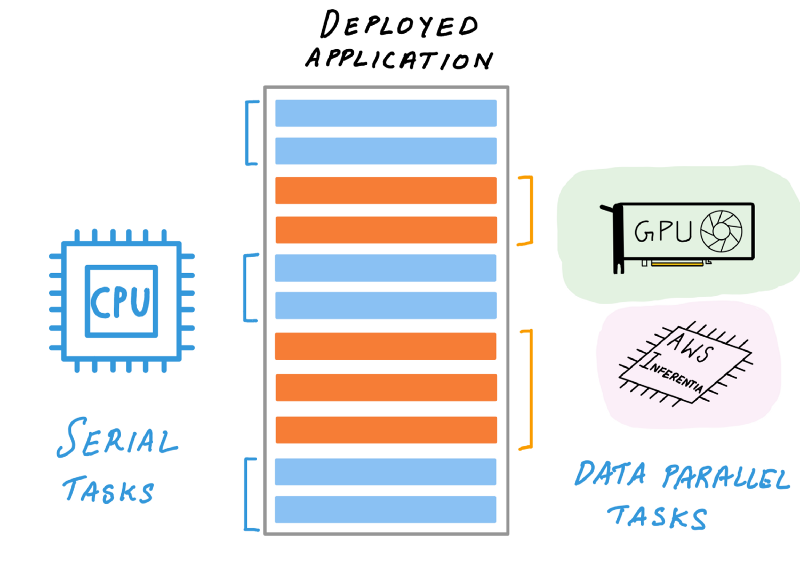

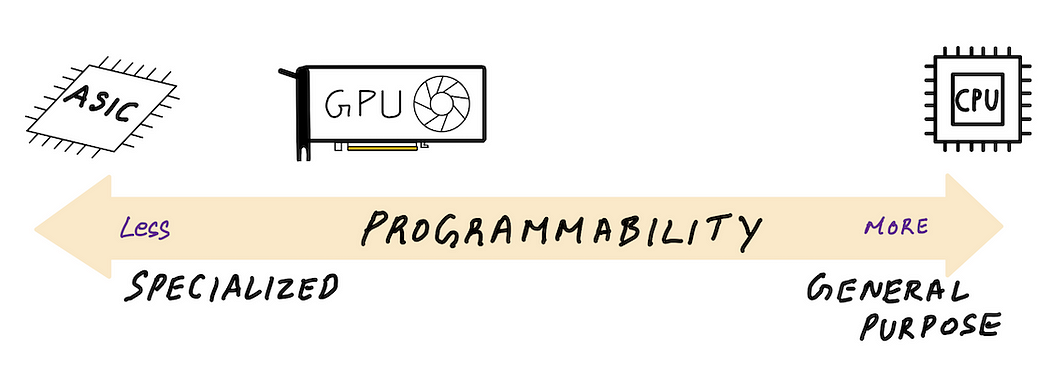

One way to categorize AI accelerators is based on how programmable they are. On the “fully programmable” end of the spectrum there are CPUs. Because they are general purpose processors, you can write pretty much any custom code for your machine learning model, with custom layers, architectures and operations.

On the other end of the spectrum are ASICs such as AWS Inferentia, which have a fixed set of supported operations exposed via the AWS Neuron SDK compiler. Somewhere in between, but closer to ASICs, are GPUs, which are far more programmable than ASICs but far less general purpose than CPUs. There is always going to be some trade-off between being general purpose and delivering performance.

Custom code may fall back to CPU execution, reducing overall throughput (illustration by author)

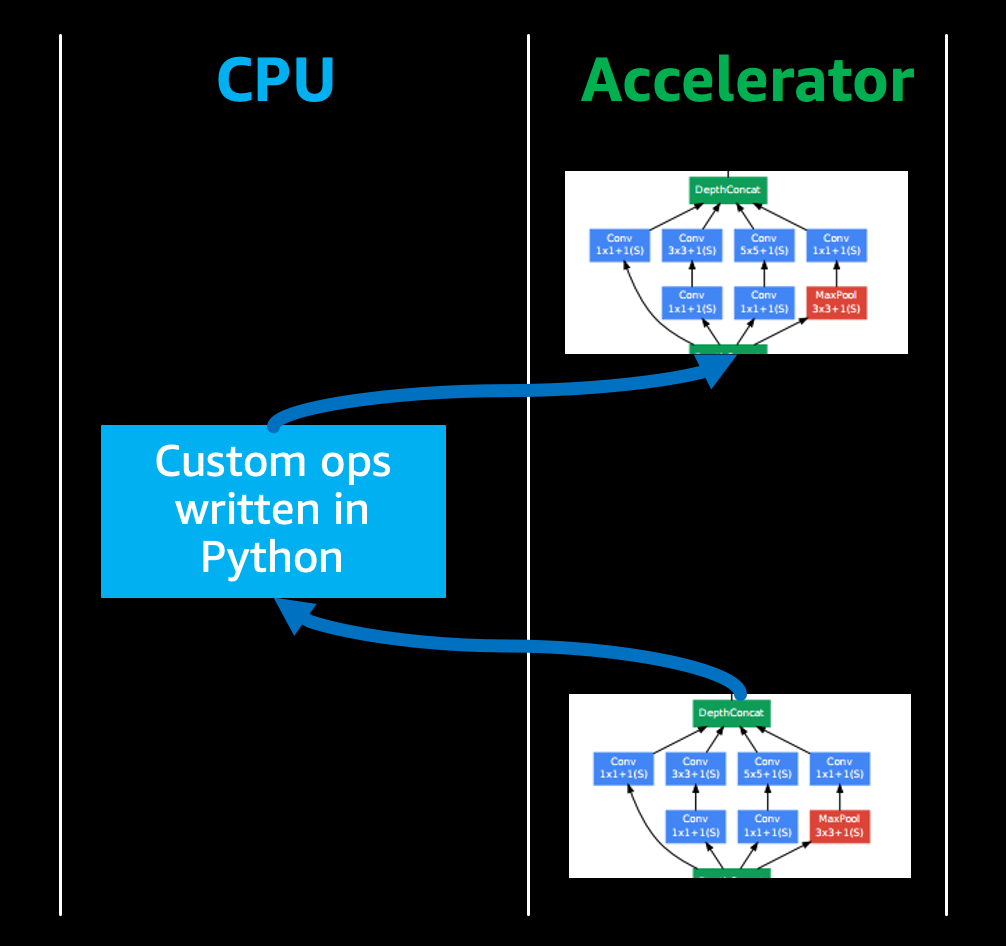

If you’re pushing the boundaries of deep learning research with custom neural network operations, you may need to author custom code for custom operations. And you’d typically do this in high level languages like Python.

Most AI accelerators can’t automatically accelerate custom code written in high level languages and therefore that piece of code will fall back to CPUs for execution, reducing the overall inference performance.
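How much a CPU fallback hurts can be estimated with an Amdahl's-law style calculation. The sketch below is a simplification that ignores the cost of moving data between the CPU and the accelerator; the function name and the numbers are illustrative assumptions, not measurements.

```python
def overall_speedup(accelerated_fraction, accelerator_speedup):
    """Estimate end-to-end speedup when only part of the model is accelerated.

    accelerated_fraction: share of the original CPU run time spent in
        operations the accelerator supports (0.0 to 1.0).
    accelerator_speedup: how much faster those operations run on the
        accelerator than on the CPU.
    """
    cpu_fraction = 1.0 - accelerated_fraction
    return 1.0 / (cpu_fraction + accelerated_fraction / accelerator_speedup)


# Fully supported model: the whole 10x accelerator speedup is realized.
print(overall_speedup(1.0, 10.0))
# 10% of the run time falls back to the CPU: the gain roughly halves.
print(round(overall_speedup(0.9, 10.0), 2))
```

Even a small unaccelerated portion caps the benefit, which is why operator support matters so much when picking an accelerator.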

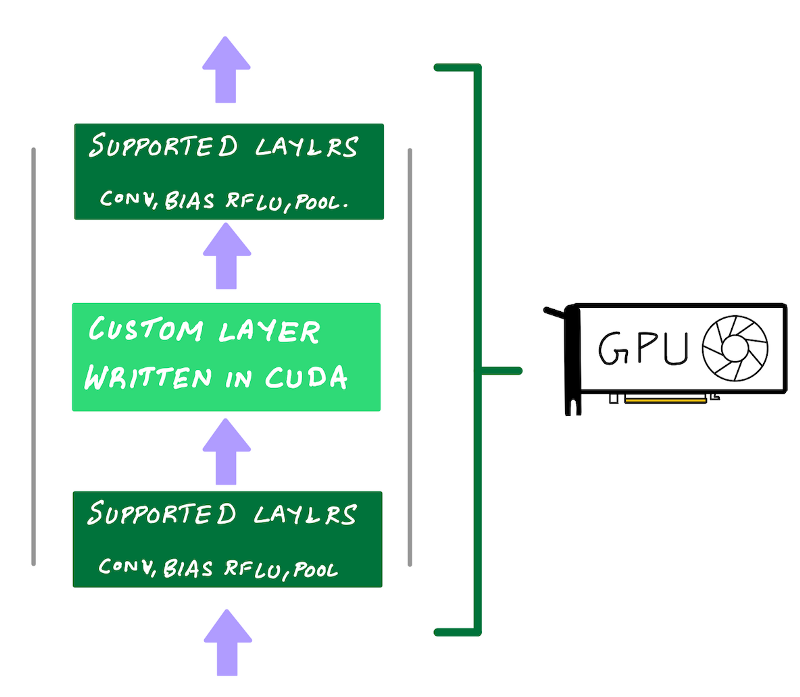

NVIDIA GPUs have the advantage that if you want more performance out of your custom code, you can reimplement it in CUDA and run it on the GPU. But if your ASIC’s compiler doesn’t support the operations you need, the CPU fallback may result in lower performance.

2. Target throughput, latency and cost

In general, specialized processors such as AWS Inferentia tend to offer a better price/performance ratio (lower cost for the same performance) and lower latency than general purpose processors. But in the world of AI acceleration, all solutions can be competitive, depending on the type of workload.

GPUs are throughput processors, and can deliver high throughput for a specified latency. If latency is not critical (batch processing, offline inference) then GPU utilization can be kept high, making them the most cost effective option in the cloud. CPUs are not parallel throughput devices, but for real-time inference of smaller models, CPUs can be the most cost effective, as long as the inference latency is under your target latency budget. AWS Inferentia’s performance and lower cost could make it the most cost effective and performant option vs. both CPUs and GPUs, if your model is fully supported by the AWS Neuron SDK compiler for acceleration on AWS Inferentia.
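One way to turn this trade-off into a decision is to filter candidate options by your latency budget and then compare cost per million inferences. The sketch below is only an illustration: the option names and all prices, throughputs and latencies are placeholder values I made up, not benchmark results, so substitute your own measurements.

```python
def cost_per_million(hourly_price_usd, throughput_per_sec):
    """Cost in USD of serving one million inferences at full utilization."""
    inferences_per_hour = throughput_per_sec * 3600
    return hourly_price_usd / inferences_per_hour * 1_000_000


def cheapest_within_budget(options, latency_budget_ms):
    """Return the lowest-cost option that meets the latency budget, or None."""
    eligible = [o for o in options if o["latency_ms"] <= latency_budget_ms]
    if not eligible:
        return None
    return min(
        eligible,
        key=lambda o: cost_per_million(o["hourly_price_usd"], o["throughput_per_sec"]),
    )


# Placeholder numbers for illustration only.
options = [
    {"name": "option_a", "hourly_price_usd": 0.20, "throughput_per_sec": 20, "latency_ms": 45},
    {"name": "option_b", "hourly_price_usd": 0.50, "throughput_per_sec": 400, "latency_ms": 8},
    {"name": "option_c", "hourly_price_usd": 0.25, "throughput_per_sec": 300, "latency_ms": 6},
]

best = cheapest_within_budget(options, latency_budget_ms=10)
print(best["name"] if best else "no option meets the latency budget")
```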

This is indeed a nuanced topic and is very workload dependent. In the subsequent sections we’ll take a closer look at performance, latency and cost for each accelerator. If a specific choice doesn’t work for you, no problem, it’s easy to switch options in the cloud till you find the right option for you.

3. Compiler and runtime toolchain and ease of use

To accelerate your models on AI accelerators, you typically have to go through a compilation step that analyzes the computational graph and optimizes it for the target hardware to get the best performance. When deploying on a CPU, the deep learning framework has everything you need, so additional SDKs and compilers are typically not required.

If you’re deploying to a GPU, you can rely on a deep learning framework to accelerate your model for inference, but you’ll be leaving performance on the table. To get the most out of your GPU, you’ll have to use a dedicated inference compiler such as NVIDIA TensorRT.

In some cases, you can get over 10 times the performance compared to using the deep learning framework alone (see figure). We’ll see later, in the code examples section, how you can reproduce these results.

NVIDIA TensorRT is two things: an inference compiler and a runtime engine. By compiling your model with TensorRT, you can get better performance and lower latency, since it performs a number of optimizations such as graph optimization and quantization. Likewise, when targeting AWS Inferentia, the AWS Neuron SDK compiler will perform similar optimizations to get the most out of your AWS Inferentia processor.

Let’s dig a little deeper into each of these AI accelerator options.

Accelerator Option 1: GPU-acceleration for inference

You train your model on GPUs, so it’s natural to consider GPUs for inference deployment. After all, GPUs substantially speed up deep learning training, and inference is just the forward pass of your neural network that’s already accelerated on GPU. This is true, and GPUs are indeed an excellent hardware accelerator for inference.

First, let’s talk about what GPUs really are.

GPUs are first and foremost throughput processors, as this blog post from NVIDIA explains. They were designed to exploit inherent parallelism in algorithms and accelerate them by computing them in parallel. GPUs started out as specialized processors for computer graphics, but today’s GPUs have evolved into programmable processors, also called General Purpose GPU (GPGPU). They are still specialized parallel processors, but also highly programmable for a narrow range of applications which can be accelerated with parallelization.

As it turns out, the high-performance computing (HPC) community had been using GPUs to accelerate linear algebra calculations long before deep learning. Deep neural network computations are primarily composed of similar linear algebra computations, so a GPU for deep learning was a solution looking for a problem. It is no surprise that Alex Krizhevsky’s AlexNet, the deep neural network that won the ImageNet 2012 competition and (re)introduced the world to deep learning, was trained on readily available, programmable consumer GPUs by NVIDIA.

GPUs have gotten much faster since then and I’ll refer you to NVIDIA’s website for their latest training and inference benchmarks for popular models. While these benchmarks are a good indication of what a GPU is capable of, your decision may hinge on other considerations discussed below.

1. GPU inference throughput, latency and cost

Since GPUs are throughput devices, if your objective is to maximize sheer throughput, they can deliver best-in-class throughput for a desired latency, depending on the GPU type and the model being deployed. An example of a use case where GPUs absolutely shine is offline or batch inference. GPUs will also deliver some of the lowest prediction latencies for small batches, but if you are unable to keep your GPU utilization at its maximum at all times, due to, say, sporadic inference requests (fluctuating customer demand), your cost per inference request goes up (because you are serving fewer requests for the same GPU instance cost). For these situations you’re better off using Amazon Elastic Inference, which lets you access just enough GPU acceleration at a lower cost.
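The effect of utilization on cost can be written down in a couple of lines. This is a back-of-the-envelope sketch: the function name is hypothetical and the price and throughput are made-up placeholder values rather than real instance figures.

```python
def cost_per_request(hourly_price_usd, peak_throughput_per_sec, utilization):
    """Cost of one request when the instance runs at a fraction of its peak load.

    utilization is the share of peak throughput actually used (0 < u <= 1).
    The hourly price is fixed, so fewer served requests means each costs more.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    requests_per_hour = peak_throughput_per_sec * utilization * 3600
    return hourly_price_usd / requests_per_hour


# Placeholder values: same instance, fully busy vs. mostly idle.
busy = cost_per_request(1.0, 100, utilization=1.0)
idle = cost_per_request(1.0, 100, utilization=0.1)
print(idle / busy)  # ratio of per-request cost at 10% vs. 100% utilization
```

At 10% utilization each request costs ten times as much as on a fully busy instance, which is the situation a right-sized accelerator is meant to avoid.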

In the example section we’ll see a comparison of GPU performance across different precisions (FP32, FP16, INT8).

2. GPU inference supported model size and options

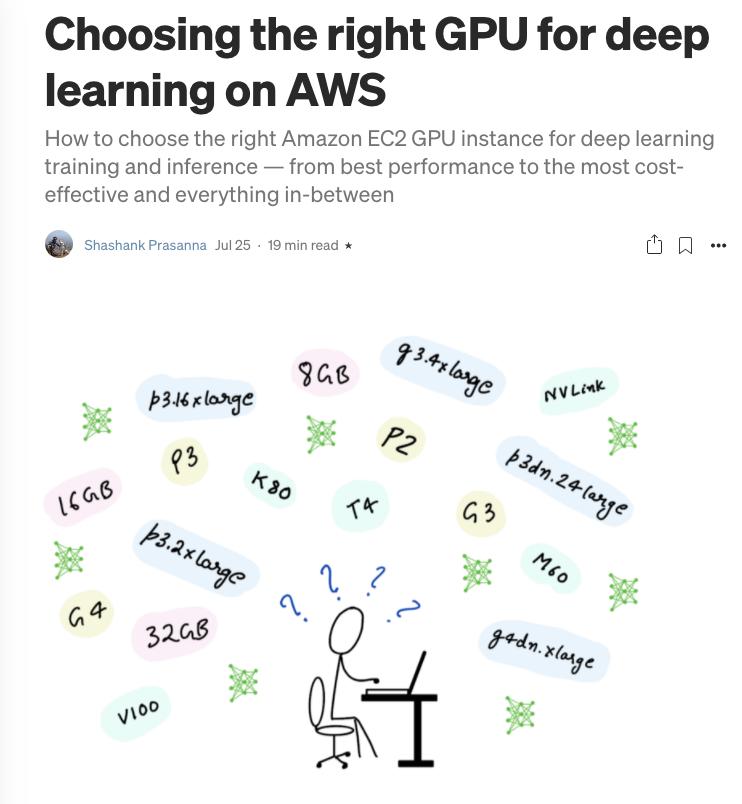

On AWS you can launch 18 different Amazon EC2 GPU instances with different NVIDIA GPUs, number of vCPUs, system memory and network bandwidth. Two of the most popular GPUs for deep learning inference are the NVIDIA T4 GPUs offered by G4 EC2 instance type and NVIDIA V100 GPUs offered by P3 EC2 instance type.

Blog post: Choosing the right GPU for deep learning on AWS (screenshot by author)

For a full summary of all GPU instance types on AWS, read my earlier blog post: Choosing the right GPU for deep learning on AWS

The G4 instance type should be your go-to GPU instance for deep learning inference deployment.

Based on the NVIDIA Turing architecture, NVIDIA T4 GPUs feature FP64, FP32, FP16, Tensor Cores (mixed-precision), and INT8 precision types. They also have 16 GB of GPU memory which, combined with reduced precision support, can be plenty for most models.

If you need more throughput or more memory per GPU, then P3 instance types offer the more powerful NVIDIA V100 GPU. With the p3dn.24xlarge instance size, you get access to NVIDIA V100 GPUs with up to 32 GB of GPU memory each, for large models, large images or other large datasets.

3. GPU inference model type, programmability and ease of use

Unlike ASICs such as AWS Inferentia, which are fixed-function processors, NVIDIA GPUs let a developer use the CUDA programming model to code up custom layers that can be accelerated on the GPU. This is exactly what Alex Krizhevsky did with AlexNet in 2012. He hand-coded custom CUDA kernels to train his neural network on GPUs. He called his framework cuda-convnet, and you could say cuda-convnet was the very first deep learning framework. If you’re pushing the boundary of deep learning and don’t want to leave performance on the table, a GPU is the best option for you.

Programmability with performance is one of GPUs’ greatest strengths

Use NVIDIA’s CUDA programming model to code custom layers that can be accelerated on an NVIDIA GPU (illustration by author)

Of course, you don’t need to write low-level GPU code to do deep learning. NVIDIA has made neural network primitives available via libraries such as cuDNN and cuBLAS. Deep learning frameworks such as TensorFlow, PyTorch and MXNet use these libraries under the hood, so you get GPU acceleration for free by simply using these frameworks. This is why GPUs score high marks for ease of use and programmability.

4. GPU performance with NVIDIA TensorRT

If you really want to get the best performance out of your GPUs, NVIDIA offers TensorRT, a model compiler for inference deployment. It performs additional optimizations on a trained model, and a full list is available on NVIDIA’s TensorRT website. The key optimizations to note are:

- Quantization: reduce model precision from FP32 (single precision) to FP16 (half precision) or INT8 (8-bit integer precision), thereby speeding up inference due to a reduced amount of computation
- Graph fusion: fuse multiple layers/ops into a single function call to a CUDA kernel on the GPU. This reduces the overhead of making a separate function call for each layer/op

Deploying with FP16 is straightforward with NVIDIA TensorRT. The TensorRT compiler will automatically quantize your model during the compilation step.

To deploy with INT8 precision, the weights and activations of the model need to be quantized so that floating point values can be converted into integers using appropriate ranges. You have two options.

- Option 1: Perform quantization-aware training. In quantization-aware training, the error from quantizing weights and tensors to INT8 is modeled during training, allowing the model to adapt and mitigate this error. This requires additional setup during training.
- Option 2: Perform post-training quantization. In post-training quantization, no changes to training are required. You provide a trained model in full precision (FP32), along with a sample from your training dataset that the TensorRT compiler can use to run a calibration step to generate quantization ranges. In Example 1 below, we’ll take a look at implementing Option 2.
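To give a feel for what "generating quantization ranges" from a calibration sample means, here is a minimal sketch of symmetric INT8 quantization in plain Python. It uses a simple max-absolute-value rule to pick the scale, which is an assumption made for brevity; it is not the calibration algorithm TensorRT itself uses, and the function names are hypothetical.

```python
def calibrate_scale(calibration_values, num_bits=8):
    """Pick a scale so the largest calibration magnitude maps to the top integer."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
    max_abs = max(abs(v) for v in calibration_values)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, num_bits=8):
    """Convert floats to integers, clamping anything outside the calibrated range."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def dequantize(quantized, scale):
    """Map integers back to approximate float values."""
    return [q * scale for q in quantized]


# A made-up calibration sample standing in for real activations.
scale = calibrate_scale([-2.0, 0.5, 1.27])
q = quantize([2.0, -2.0, 0.0, 10.0, 0.5], scale)
print(q)                     # 10.0 lies outside the calibrated range and is clamped
print(dequantize(q, scale))  # in-range values come back with a small rounding error
```

The clamped value shows why the calibration sample needs to be representative: anything outside the range seen during calibration loses information.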

Examples of GPU-accelerated inference

The following examples were tested on Amazon EC2 g4dn.xlarge using the following AWS Deep Learning AMI: Deep Learning AMI (Ubuntu 18.04) Version 35.0. To run TensorRT, I used the following NVIDIA TensorFlow Docker image: nvcr.io/nvidia/tensorflow:20.08-tf2-py3

Dataset: ImageNet Validation dataset with 50000 test images, converted to TFRecord

Model: TensorFlow implementation of ResNet50

You can find the full implementation for the examples below on this Jupyter Notebook:

Example 1: Deploying a ResNet50 TensorFlow model with (1) the framework’s native GPU support and (2) NVIDIA TensorRT

TensorFlow’s native GPU acceleration support just works out of the box, with no additional setup. You won’t get the additional performance you can get with NVIDIA TensorRT, but you can’t argue with how easy life becomes when things just work.

Running inference with frameworks’ native GPU support takes all of 3 lines of code:

    model = tf.keras.models.load_model(saved_model_dir)
    for i, (validation_ds, batch_labels, _) in enumerate(dataset):
        pred_prob_keras = model(validation_ds)

But you’re really leaving performance on the table (sometimes 10x the performance). To increase the performance and utilization of your GPU, you have to use an inference compiler and runtime like NVIDIA TensorRT.

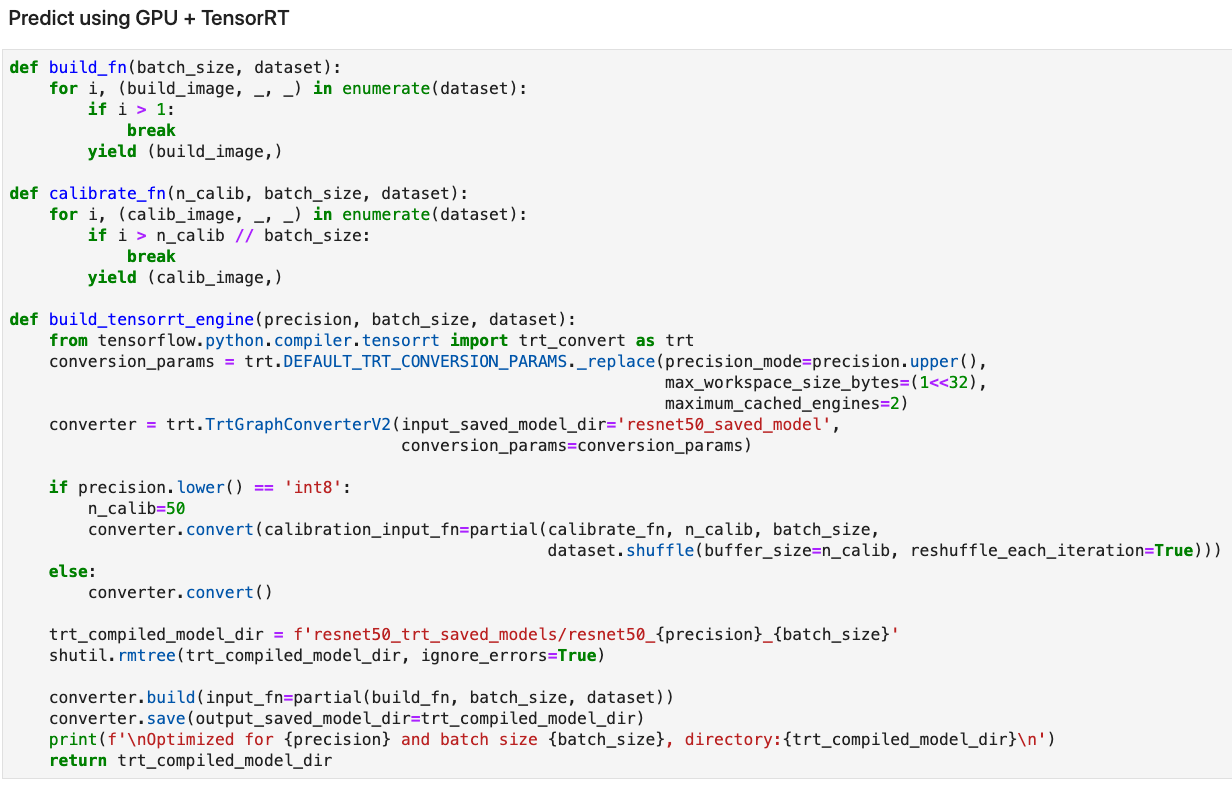

The following code shows how to compile your model with TensorRT. You can find the full implementation on GitHub

Excerpt from: https://github.com/shashankprasanna/ai-accelerators-examples/blob/main/gpu-tf-tensorrt-resnet50.ipynb (screenshot by author)

TensorRT compilation has the following steps:

- Provide TensorRT’s `TrtGraphConverterV2` (for TensorFlow 2) with your uncompiled TensorFlow saved model
- Specify TensorRT compilation parameters. The most important parameter is the precision (FP32, FP16, INT8). If you’re compiling with INT8 support, TensorRT expects you to provide it with a representative sample from your training set to calibrate scaling factors. You’ll do this by passing a Python generator to the `calibration_input_fn` argument when you call `converter.convert()`. You don’t need to provide additional data for FP32 and FP16 optimizations.
- TensorRT compiles your model and saves it as a TensorFlow saved model that includes special TensorRT operators, which accelerate inference on the GPU and run it more efficiently.
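The steps above can be sketched roughly as follows. This is my own minimal reconstruction, not the code from the notebook: it assumes the TF-TRT Python API as shipped around TensorFlow 2.3 (the version in the container mentioned earlier), which may differ in newer releases. The function names, the directory arguments and the 1 GB workspace size are assumptions for illustration.

```python
def build_conversion_settings(precision="FP16", workspace_bytes=1 << 30):
    """Validate the precision and collect the TF-TRT conversion settings."""
    precision = precision.upper()
    if precision not in ("FP32", "FP16", "INT8"):
        raise ValueError("precision must be FP32, FP16 or INT8")
    return {"precision_mode": precision, "max_workspace_size_bytes": workspace_bytes}


def compile_with_tensorrt(saved_model_dir, output_dir, precision="FP16",
                          calibration_input_fn=None):
    """Compile a TensorFlow saved model with TF-TRT and save the result."""
    settings = build_conversion_settings(precision)
    if settings["precision_mode"] == "INT8" and calibration_input_fn is None:
        raise ValueError("INT8 needs a calibration_input_fn that yields sample batches")

    # Imported here so the helpers above work without a GPU-enabled TensorFlow.
    from tensorflow.python.compiler.tensorrt import trt_convert as trt

    # Step 1 and 2: hand the saved model and compilation parameters to the converter.
    params = trt.DEFAULT_TRT_CONVERSION_PARAMS._replace(**settings)
    converter = trt.TrtGraphConverterV2(
        input_saved_model_dir=saved_model_dir,
        conversion_params=params,
    )

    # INT8 runs a calibration pass over the sample data; FP32/FP16 need no data.
    if settings["precision_mode"] == "INT8":
        converter.convert(calibration_input_fn=calibration_input_fn)
    else:
        converter.convert()

    # Step 3: write out a saved model containing the TensorRT operators.
    converter.save(output_saved_model_dir=output_dir)
    return output_dir
```

A call such as `compile_with_tensorrt("resnet50_saved_model", "resnet50_trt_fp16", precision="FP16")` would need a GPU machine with TensorRT available; the directory names here are placeholders.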

Below is a comparison of accuracy and performance of TensorFlow ResNet50 inference with:

- TensorFlow native GPU acceleration
- TensorFlow + TensorRT FP32 precision
- TensorFlow + TensorRT FP16 precision
- TensorFlow + TensorRT INT8 precision

I measured not just performance but also accuracy, since reducing precision means there is information loss. On the ImageNet test dataset we see negligible loss in accuracy across all precisions, with a minor boost in throughput. Your mileage may vary for your model.

Example 2: Hosting a ResNet50 TensorFlow model using Amazon SageMaker

In Example 1, we tested the performance offline, but in most cases you’ll be hosting your model in the cloud as an endpoint that client applications can submit inference requests to. One of the simplest ways of doing this is to use Amazon SageMaker hosting capabilities.

This example was tested on Amazon SageMaker Studio Notebook. Run this notebook using the following Amazon SageMaker Studio conda environment: TensorFlow 2 CPU Optimized. The full implementation is available here:

Hosting a model endpoint with SageMaker involves the following simple steps:

- Create a tar.gz archive file using your TensorFlow saved model and upload it to Amazon S3
- Use the Amazon SageMaker SDK API to create a TensorFlowModel object
- Deploy the TensorFlowModel object to a G4 EC2 instance with NVIDIA T4 GPU

Create model.tar.gz with the TensorFlow saved model:

    $ tar cvfz model.tar.gz -C resnet50_saved_model .

Upload model to S3 and deploy:
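The original code for this step is not reproduced here, so the following is a hedged sketch of what the three hosting steps might look like with the SageMaker Python SDK (version 2). The function names, the `resnet50-model` key prefix, the `ml.g4dn.xlarge` instance type and the framework version are my assumptions; check the SDK documentation for the archive layout the TensorFlow serving container expects.

```python
import os
import tarfile


def make_model_archive(saved_model_dir, archive_path="model.tar.gz"):
    """Pack the contents of a saved model directory into a tar.gz archive.

    Roughly a Python equivalent of the tar command shown above.
    """
    with tarfile.open(archive_path, "w:gz") as tar:
        for name in sorted(os.listdir(saved_model_dir)):
            tar.add(os.path.join(saved_model_dir, name), arcname=name)
    return archive_path


def upload_and_deploy(archive_path, instance_type="ml.g4dn.xlarge",
                      framework_version="2.3"):
    """Upload the archive to S3 and deploy it as a SageMaker endpoint."""
    # Imported here so the archive helper works without the SageMaker SDK.
    import sagemaker
    from sagemaker.tensorflow import TensorFlowModel

    session = sagemaker.Session()
    role = sagemaker.get_execution_role()  # works inside SageMaker notebooks

    # Step 1: upload model.tar.gz to the session's default S3 bucket.
    model_data = session.upload_data(archive_path, key_prefix="resnet50-model")

    # Step 2: create the TensorFlowModel object.
    model = TensorFlowModel(model_data=model_data, role=role,
                            framework_version=framework_version)

    # Step 3: deploy to a G4 instance; returns a predictor for the endpoint.
    return model.deploy(initial_instance_count=1, instance_type=instance_type)
```

Calling `upload_and_deploy(make_model_archive("resnet50_saved_model"))` creates a billable endpoint, so remember to delete it when you are done testing.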

You can test the model by invoking the endpoint as follows:

Output:

Accelerator Option 2: AWS Inferentia for inference

AWS Inferentia is custom silicon designed by Amazon for cost-effective, high-throughput, low-latency inference.

James Hamilton (VP and Distinguished Engineer at AWS) goes into further depth about ASICs, general purpose processors, AWS Inferentia and the economics surrounding them in his blog post: AWS Inferentia Machine Learning Processor, which I encourage you to read if you’re interested in AI hardware.

The idea of using specialized processors for specialized workloads is not new. The chip in your noise-cancelling headphones and the video decoder in your DVD player are examples of specialized chips, sometimes also called Application Specific Integrated Circuits (ASICs).

ASICs have one job (or limited responsibilities) and are optimized to do it well. Unlike general purpose processors (CPUs) or programmable accelerators (GPUs), they do not dedicate large parts of the silicon to running arbitrary code.

AWS Inferentia was purpose built to offer high inference performance at the lowest cost in the cloud. AWS Inferentia chips can be accessed via Amazon EC2 Inf1 instances, which come in different sizes, from 1 AWS Inferentia chip per instance all the way up to 16 AWS Inferentia chips per instance. Each AWS Inferentia chip has 4 NeuronCores and supports FP16, BF16 and INT8 data types. Each NeuronCore is a high-performance systolic-array matrix-multiply engine with a two-stage memory hierarchy and a very large on-chip cache.

In most cases, AWS Inferentia might be the best AI accelerator for your use case, if your model:

- Was trained in MXNet, TensorFlow or PyTorch, or has been converted to ONNX

- Has operators that are supported by the AWS Neuron SDK

If your model has operators that are not supported by the AWS Neuron SDK, you can still deploy it successfully on Inf1 instances, but those operators will run on the host CPU and won’t be accelerated on AWS Inferentia. As I stated earlier, every use case is different, so compile your model with the AWS Neuron SDK and measure performance to make sure it meets your latency and throughput needs.

1. AWS Inferentia throughput, latency and cost

AWS has compared the performance of AWS Inferentia against GPU instances and reports lower cost for popular models such as YOLOv4 and OpenPose. It has also provided examples for BERT and SSD with TensorFlow, MXNet and PyTorch. For real-time applications, AWS Inf1 instances are among the least expensive of all the acceleration options available on AWS, and AWS Inferentia can deliver higher throughput at a target latency, and at lower cost, than GPUs and CPUs. Ultimately your choice may depend on the other factors discussed below.

2. AWS Inferentia supported models, operators and precisions

The AWS Inferentia chip supports a fixed set of neural network operators exposed via the AWS Neuron SDK. When you compile a model to target AWS Inferentia using the AWS Neuron SDK, the compiler checks your model for operators that are supported for your framework. If an operator isn’t supported, or if the compiler determines that a specific operator is more efficient to execute on the CPU, it partitions the graph into CPU partitions and AWS Inferentia partitions. The same is also true for Amazon Elastic Inference, which we’ll discuss in the next section. If you’re using TensorFlow with AWS Inferentia, here is a list of all TensorFlow ops accelerated on AWS Inferentia.

Custom operations will become part of the CPU partition and will run on the host instance’s CPU (illustration by author)
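
To make the partitioning idea concrete, here is a minimal sketch in plain Python. It is not how the AWS Neuron SDK compiler is implemented: it assumes the model is a simple linear list of ops (real graphs are not), and the `supported` set and the `MyCustomOp` name are made up for illustration rather than taken from the SDK’s actual operator list.

```python
from itertools import groupby


def partition_ops(ops, supported):
    """Group consecutive ops into accelerator and CPU partitions."""
    partitions = []
    # groupby yields one run per stretch of consecutive ops with the same key
    for is_supported, run in groupby(ops, key=lambda op: op in supported):
        target = "inferentia" if is_supported else "cpu"
        partitions.append((target, list(run)))
    return partitions


# Hypothetical op list and supported set, for illustration only.
ops = ["Conv2D", "Relu", "MyCustomOp", "MatMul", "Softmax"]
supported = {"Conv2D", "Relu", "MatMul", "Softmax"}

for target, run in partition_ops(ops, supported):
    print(target, run)
```

In this toy example a single unsupported op splits the model into three partitions. Each switch between partitions implies handing data back and forth between the accelerator and the host, which is one more reason to measure a compiled model rather than assume it will be fast.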

If you trained your model in FP32 (single precision), the AWS Neuron SDK compiler will automatically cast your FP32 model to BF16 to improve inference performance. If you instead prefer to provide a model in FP16, either by training in FP16 or by performing post-training quantization, the AWS Neuron SDK will use your FP16 weights directly. While INT8 is supported by the AWS Inferentia chip, the AWS Neuron SDK compiler currently does not provide a way to deploy with INT8 support.
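
If you’re wondering what an FP32 to BF16 cast does to your numbers, the sketch below emulates it in plain Python. BF16 keeps the sign bit and the 8 exponent bits of FP32 but only the top 7 mantissa bits, so the range is preserved while precision drops. This sketch simply truncates the lower 16 bits; that is my simplifying assumption, and the Neuron compiler may well round differently. The function names are hypothetical.

```python
import struct


def fp32_to_bf16_bits(x: float) -> int:
    """Return the 16-bit BF16 pattern of x by truncating its FP32 encoding."""
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    return bits >> 16  # keep sign, 8 exponent bits, top 7 mantissa bits


def bf16_bits_to_float(b: int) -> float:
    """Expand a 16-bit BF16 pattern back to a Python float."""
    (value,) = struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))
    return value


def bf16_round_trip(x: float) -> float:
    """Value of x after a (truncating) cast to BF16 and back."""
    return bf16_bits_to_float(fp32_to_bf16_bits(x))


for value in (1.0, 3.14159, 1e-3):
    print(value, "->", bf16_round_trip(value))
```

Values that need few mantissa bits, like 1.0, survive unchanged, while others lose a few decimal digits. Whether that loss matters for your model’s accuracy is something you should check on your own validation data.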

3. AWS Inferentia flexibility and control over how Inferentia NeuronCores are used

In most cases, AWS Neuron SDK makes AWS Inferentia really easy to use. A key difference in the user experience of using AWS Inferentia and GPUs is that AWS Inferentia lets you have more control over how each core is used.

AWS Neuron SDK supports two ways to improve performance by utilizing all the NeuronCores: (1) batching and (2) pipelining. Since the AWS Neuron SDK compiler is an ahead-of-time compiler, you have to enable these options explicitly during the compilation stage.

Let’s take a look at what these are and how these work.

a. Use batching to maximize throughput for larger batch sizes

When you compile a model with the AWS Neuron SDK compiler using a batch_size greater than one, batching is enabled. During inference your model weights are stored in external memory, and as the forward pass is initiated, a subset of layer weights, as determined by the Neuron runtime, is copied to the on-chip cache. With the weights of this layer in the cache, the forward pass is computed on the entire batch.

After that, the next set of layer weights is loaded into the cache, and the forward pass is computed on the entire batch. This process continues until all weights have been used for inference computations. Batching amortizes the cost of reading weights from external memory by running inference on large batches while the layers are still in the cache.
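
A back-of-the-envelope model shows why this amortization helps. The sketch below assumes that each batch pays a fixed cost to load weights plus a per-sample compute cost. Both numbers are invented for illustration and are not measurements from AWS Inferentia.

```python
def per_sample_time_ms(batch_size, weight_load_ms, compute_ms_per_sample):
    """Average time per sample when the weight load is shared by the batch."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return weight_load_ms / batch_size + compute_ms_per_sample


# Hypothetical costs: 4 ms to load weights, 1 ms of compute per sample.
for batch_size in (1, 2, 4, 8):
    avg = per_sample_time_ms(batch_size, weight_load_ms=4.0,
                             compute_ms_per_sample=1.0)
    print(f"batch_size={batch_size}: {avg} ms per sample")
```

In this toy model the per-sample time falls from 5.0 ms at batch size 1 to 1.5 ms at batch size 8, with diminishing returns as the compute term starts to dominate. Keep in mind that the latency of a single request still grows with batch size, which is why batching favors throughput.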

All of this happens behind the scenes and as a user, you just have to set a desired batch size using an example input, during compilation.

Even though the batch size is set at the compilation phase, with dynamic batching enabled the model can accept variable-sized batches. Internally, the Neuron runtime breaks the user batch down into batches of the compiled size and runs inference on them.
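
Conceptually, that splitting looks something like the sketch below. This is only an illustration of the idea, not the Neuron runtime’s code. In particular, padding the last chunk up to the compiled batch size is my assumption about how a remainder could be handled, and `split_into_compiled_batches` is a hypothetical helper.

```python
from typing import Any, List, Sequence


def split_into_compiled_batches(samples: Sequence[Any],
                                compiled_batch_size: int,
                                pad_value: Any = None) -> List[list]:
    """Split a user batch into chunks of exactly compiled_batch_size."""
    if compiled_batch_size < 1:
        raise ValueError("compiled_batch_size must be at least 1")
    chunks = []
    for start in range(0, len(samples), compiled_batch_size):
        chunk = list(samples[start:start + compiled_batch_size])
        # Assumption: pad the final chunk so every chunk has the compiled size
        chunk += [pad_value] * (compiled_batch_size - len(chunk))
        chunks.append(chunk)
    return chunks


# A user batch of 7 samples against a model compiled for batch size 3.
print(split_into_compiled_batches(list(range(7)), compiled_batch_size=3))
```

A user batch of 7 turns into three compiled-size batches here, the last one mostly padding, so picking a compiled batch size that divides your typical request size wastes less work.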

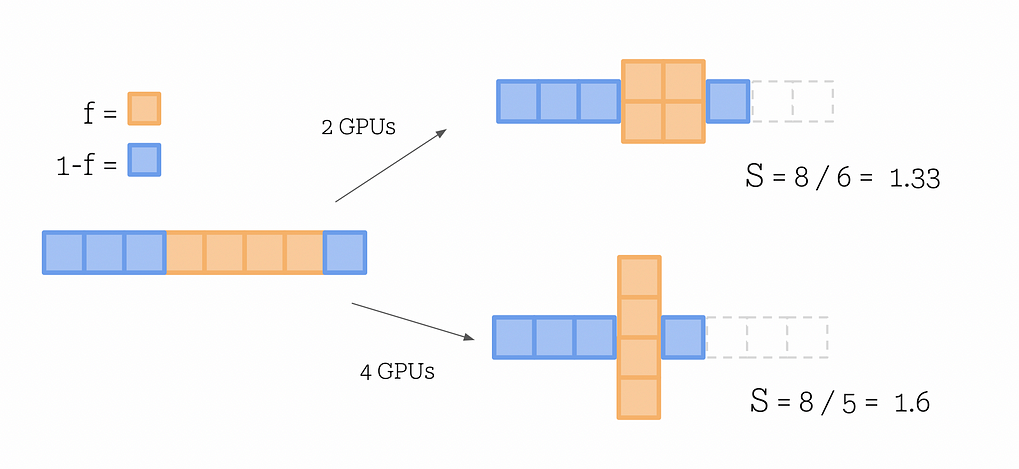

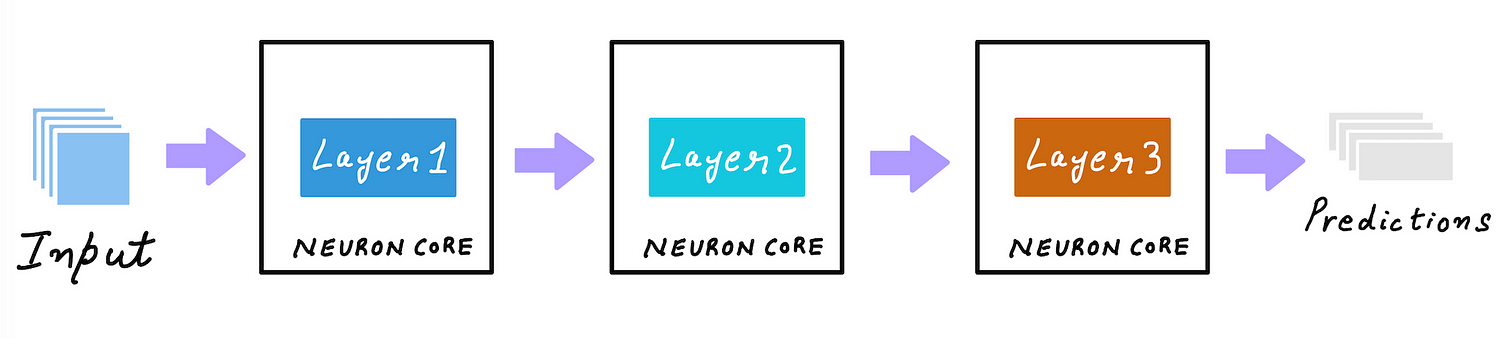

b. Using pipelining to improve latency by caching the model across multiple NeuronCores

During batching, model weights are loaded into the on-chip cache from external memory layer by layer. With pipelining, you can load the entire model’s weights into the on-chip caches of multiple cores. This can reduce latency, since the Neuron runtime does not have to load the weights from external memory. Again, all of this happens behind the scenes; as a user, you just set the desired number of cores using --num-neuroncores during the compilation phase.

Batching and pipelining can be used together. However, you have to try different combinations of pipelining cores and compiled batch sizes to determine what works best for your model.

During the compilation step, not every combination of batch size and number of NeuronCores (for pipelining) will work. You will have to determine the working combinations by running a sweep over different values and monitoring compiler errors.
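
A sweep like this is easy to script. The sketch below takes any callable that compiles for a given batch size and core count and records which combinations succeed. `fake_compile` is a stand-in I made up so the example runs anywhere; in practice you would pass a function that wraps your actual AWS Neuron SDK compile call.

```python
import itertools


def sweep_compile_options(compile_fn, batch_sizes, core_counts):
    """Try every (batch_size, num_cores) pair and sort them by outcome."""
    working, failed = [], []
    for batch_size, num_cores in itertools.product(batch_sizes, core_counts):
        try:
            compile_fn(batch_size, num_cores)
        except Exception as err:  # compiler errors surface as exceptions here
            failed.append((batch_size, num_cores, str(err)))
        else:
            working.append((batch_size, num_cores))
    return working, failed


def fake_compile(batch_size, num_cores):
    """Hypothetical stand-in for a real compile call; large configs fail."""
    if batch_size * num_cores > 8:
        raise RuntimeError("compilation failed")


working, failed = sweep_compile_options(fake_compile, [1, 4], [1, 4])
print("working:", working)
print("failed:", failed)
```

Once you have the list of working combinations, benchmark each compiled model for latency and throughput before you settle on one.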

Using all NeuronCores on your Inf1 instances

Depending on how you compiled your model you can either:

- Compile your model to run on a single NeuronCore with a specific batch size

- Compile your model by pipelining to multiple NeuronCores with a specific batch size

The lowest-cost Amazon EC2 Inf1 instance type, inf1.xlarge, has 1 AWS Inferentia chip with 4 NeuronCores. If you compiled your model to run on a single NeuronCore, tensorflow-neuron will automatically perform data-parallel execution on all 4 NeuronCores. This is equivalent to replicating your model 4 times, loading one copy into each NeuronCore, and running 4 Python threads to feed input data to each core. Automatic data-parallel execution does not work beyond 1 AWS Inferentia chip. If you want to replicate your model to all 16 NeuronCores on an inf1.6xlarge, for example, you have to spawn multiple threads to feed all AWS Inferentia chips with data. In Python you can use concurrent.futures.ThreadPoolExecutor.
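
Here is a minimal sketch of that threading pattern. To keep it runnable without Inf1 hardware, the predictors are plain Python functions created by `make_fake_predictor`, a hypothetical stand-in for loading one copy of your compiled model per NeuronCore. Only the ThreadPoolExecutor usage is the point here.

```python
from concurrent.futures import ThreadPoolExecutor


def make_fake_predictor(replica_id):
    """Hypothetical stand-in for one loaded copy of a compiled model."""
    def predict(batch):
        return [(replica_id, x * 2) for x in batch]
    return predict


def run_data_parallel(predictors, batches):
    """Feed batches to model replicas round-robin, one thread per replica."""
    def run(indexed_batch):
        index, batch = indexed_batch
        predictor = predictors[index % len(predictors)]
        return predictor(batch)

    with ThreadPoolExecutor(max_workers=len(predictors)) as pool:
        # pool.map returns results in the same order as the input batches
        return list(pool.map(run, enumerate(batches)))


predictors = [make_fake_predictor(i) for i in range(4)]
results = run_data_parallel(predictors, [[1], [2], [3], [4], [5]])
print(results)
```

On an inf1.6xlarge you would create 16 predictors instead of 4. The round-robin assignment is just one simple choice; a queue that hands the next batch to whichever replica is free would be a reasonable alternative.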

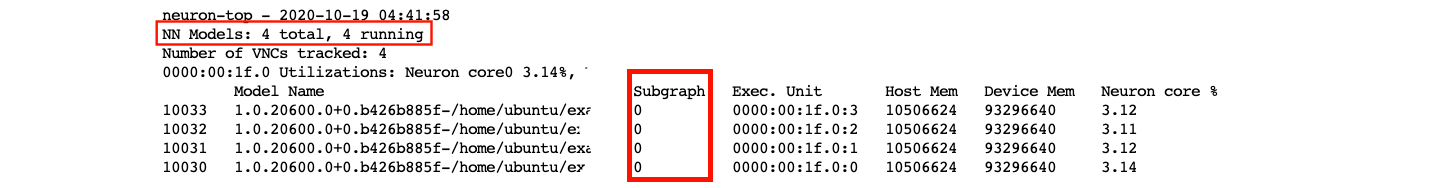

When you compile a model for multiple NeuronCores, the runtime will allocate different subgraphs to each NeuronCore (screenshot by author)

4. Deploying multiple models on Inf1 instances

The AWS Neuron SDK allows you to group NeuronCores into logical groups. Each group can have 1 or more NeuronCores and can run a different model. For example, if you’re deploying on an inf1.6xlarge EC2 Inf1 instance, you have access to 4 Inferentia chips with 4 NeuronCores each, i.e. a total of 16 NeuronCores. You could divide the 16 NeuronCores into, let’s say, 3 groups. Group 1 has 8 NeuronCores and runs a model that uses pipelining across all 8 cores. Group 2 uses 4 NeuronCores and runs 4 copies of a model compiled for 1 NeuronCore. Group 3 uses 4 NeuronCores and runs 2 copies of a model compiled for 2 NeuronCores with pipelining. You can specify this configuration using the NEURONCORE_GROUP_SIZES environment variable, which you’d set to NEURONCORE_GROUP_SIZES=8,1,1,1,1,2,2

After that, you simply have to load the models in the specified sequence within a single Python process, i.e. load the model that’s compiled to use 8 cores first, then load the model that’s compiled to use 1 core four times, and then load the model that’s compiled to use 2 cores two times. The appropriate cores will be assigned to each model.
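
If you’d rather not write that string by hand, a small helper can build and sanity-check it. The sketch below is my own convenience code, not part of the AWS Neuron SDK. It describes each group as (cores per model copy, number of copies) and checks that the total fits on the instance. I’m also assuming the variable needs to be set before any models are loaded in the process.

```python
import os


def neuroncore_group_sizes(groups, total_cores):
    """Build a NEURONCORE_GROUP_SIZES value from (cores_per_copy, copies)."""
    sizes = []
    for cores_per_copy, copies in groups:
        sizes.extend([cores_per_copy] * copies)
    if sum(sizes) > total_cores:
        raise ValueError(
            f"groups need {sum(sizes)} NeuronCores, only {total_cores} available"
        )
    return ",".join(str(size) for size in sizes)


# 1 model on 8 cores, 4 copies on 1 core each, 2 copies on 2 cores each.
value = neuroncore_group_sizes([(8, 1), (1, 4), (2, 2)], total_cores=16)
os.environ["NEURONCORE_GROUP_SIZES"] = value  # assumed: set before loading
print(value)
```

The order of the entries matters, because it is the order in which you then have to load the models.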

Examples of AWS Inferentia accelerated inference on Amazon EC2 Inf1 instances

AWS Neuron SDK comes pre-installed on AWS Deep Learning AMI, and you can also install the SDK and the neuron-accelerated frameworks and libraries TensorFlow, TensorFlow Serving, TensorBoard (with neuron support), MXNet and PyTorch.

The following examples were tested on an Amazon EC2 inf1.xlarge instance with Deep Learning AMI (Ubuntu 18.04) Version 35.0.

You can find the full implementation for the examples below on this Jupyter Notebook:

Example 1: Deploying ResNet50 TensorFlow model with AWS Neuron SDK on AWS Inferentia

In this example I compare 3 different options:

- No batching, no pipelining: Compile ResNet50 model with batch size = 1 and number of cores = 1

- With batching, no pipelining: Compile ResNet50 model with batch size = 5 and number of cores = 1

- No batching, with pipelining: Compile ResNet50 model with batch size = 1 and number of cores = 4

You can find the full implementation in this Jupyter Notebook. I’ll just review the results here.

The comparison below shows that you get the best throughput with option 2 (batch size = 5, no pipelining) on inf1.xlarge instances. You can repeat this experiment with other combinations on larger Inf1 instances.

Accelerator Option 3: Amazon Elastic Inference (EI) acceleration for inference

Amazon Elastic Inference (illustration by author)

Amazon Elastic Inference (EI) allows you to add cost-effective variable-size GPU acceleration to a CPU-only instance without provisioning a dedicated GPU instance. To use Amazon EI, you simply provision a CPU-only instance such as Amazon EC2 C5 instance type, and choose from 6 different EI accelerator options at launch.

The EI accelerator is not part of the hardware that makes up your CPU instance. Instead, it is attached through the network using an AWS PrivateLink endpoint service, which routes traffic from your instance to the Elastic Inference accelerator configured with your instance. All of this happens seamlessly behind the scenes when you use an EI-enabled serving framework such as TensorFlow Serving.

With Elastic Inference, you can access variable-size GPU acceleration (illustration by author)

Amazon EI uses GPUs to provide acceleration, but unlike dedicated GPU instances, it comes in 6 different accelerator sizes, which you can choose by tera (trillion) floating-point operations per second (TFLOPS) or by GPU memory.

Why choose Amazon EI over dedicated GPU instances?

As I discussed earlier, GPUs are primarily throughput devices, and when dealing with smaller batches, common with real-time applications, GPUs tend to get underutilized when you deploy models that don’t need the full processing power or full memory of a GPU. Also, if you don’t have sufficient demand or multiple models to serve and share the GPU, then a single GPU may not be cost effective as cost/inference would go up.

You can choose from 6 different EI accelerators that offer 1–4 TFLOPS and 1–8 GB of GPU memory. Let’s say you have a less computationally demanding model with a small memory footprint, you can attach the smallest EI accelerator such as eia1.medium that offers 1 TFLOPS of FP32 performance and 1 GB of GPU memory to a CPU instance. If you have a more demanding model, you could attach an eia2.xlarge EI accelerator with 4 TFLOPS performance and 8 GB GPU memory to a CPU instance.

The cost of the CPU instance + EI accelerator would still be cheaper than a dedicated GPU instance, and can lower inference costs. You don’t have to worry about maximizing the utilization of your GPU since you’re adding just enough capacity to meet demand, without over-provisioning.

When to choose Amazon EI over GPU, and what EI accelerator size to choose?

Let’s consider the following hypothetical scenario. Let’s say your application can deliver a good customer experience if your total latency (app + network + model predictions) is under 200 ms. And let’s say that with a G4 instance type you can get total latency down to 40 ms, which is well within your target latency. You’ve also tried deploying with a CPU-only C5 instance type, but you can only get total latency down to 400 ms, which does not meet your SLA requirements and results in a poor customer experience.

With Elastic Inference, you can network-attach just enough GPU acceleration to a CPU instance. After exploring different EI accelerator sizes (say eia2.medium, eia2.large, eia2.xlarge), you can get your total latency down to 180 ms with an eia2.large EI accelerator, which is under the desired 200 ms mark. Since EI is significantly cheaper than provisioning a dedicated GPU instance, you save on your total deployment costs.
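
The decision in this scenario boils down to “pick the cheapest option that meets the latency target”, which is easy to express in code. In the sketch below, the 40 ms, 400 ms and 180 ms latencies come from the hypothetical scenario above. The other latencies and all of the cost figures are placeholders in arbitrary relative units that I made up for illustration; they are not AWS prices or benchmark results.

```python
def pick_cheapest_option(options, target_latency_ms):
    """Return the lowest-cost option that meets the latency target."""
    meeting_target = [o for o in options
                      if o["latency_ms"] <= target_latency_ms]
    if not meeting_target:
        return None
    return min(meeting_target, key=lambda o: o["relative_cost"])


# Placeholder numbers for illustration only (see the note above).
options = [
    {"name": "c5 (CPU only)",    "latency_ms": 400, "relative_cost": 1.0},
    {"name": "c5 + eia2.medium", "latency_ms": 260, "relative_cost": 1.4},
    {"name": "c5 + eia2.large",  "latency_ms": 180, "relative_cost": 1.7},
    {"name": "c5 + eia2.xlarge", "latency_ms": 150, "relative_cost": 2.0},
    {"name": "g4 (GPU)",         "latency_ms": 40,  "relative_cost": 2.6},
]

chosen = pick_cheapest_option(options, target_latency_ms=200)
print(chosen["name"])
```

To use this for real, replace the placeholder entries with latencies you measured for your own model and with current pricing for your region.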

1. Amazon Elastic Inference performance

Since the GPU acceleration is added via the network, EI adds some latency compared to a dedicated GPU instance, but it will still be faster than a CPU-only instance and more cost-effective than a dedicated GPU instance. A dedicated GPU instance will still deliver better inference performance than EI, but if the extra performance doesn’t improve your customer experience, EI lets you stay under the target latency SLA, deliver a good customer experience, and save on overall deployment costs. AWS has a number of blog posts that discuss performance and cost savings compared to CPUs and GPUs using popular deep learning frameworks.

2. Supported model types, programmability and ease of use

Amazon EI supports models trained in TensorFlow, Apache MXNet and PyTorch, as well as ONNX models. After you launch an Amazon EC2 instance with Amazon EI attached, you need an EI-enabled framework such as TensorFlow, PyTorch or Apache MXNet to access the accelerator.

EI-enabled frameworks come pre-installed on the AWS Deep Learning AMI, but if you prefer to install them manually, a Python wheel file has also been made available.

Most popular models such as Inception, ResNet, SSD, RCNN and GNMT have been tested to deliver cost-saving benefits when deployed with Amazon EI. If you’re deploying a custom model with custom operators, the EI-enabled framework partitions the graph to run unsupported operators on the host CPU and all supported ops on the EI accelerator attached via the network. This makes using EI very simple.

Example: Deploying ResNet50 TensorFlow model using Amazon EI

This example was tested on an Amazon EC2 c5.2xlarge instance with the following AWS Deep Learning AMI: Deep Learning AMI (Ubuntu 18.04) Version 35.0

You can find the full implementation on this Jupyter Notebook here:

https://github.com/shashankprasanna/ai-accelerators-examples/blob/main/ei-tensorflow-resnet50.ipynb

Amazon EI-enabled TensorFlow offers APIs that let you accelerate your models using EI accelerators and that behave just like the TensorFlow API. As a developer, you have to make only minimal code changes.

To load the model, you just have to run the following code:

    from ei_for_tf.python.predictor.ei_predictor import EIPredictor

    eia_model = EIPredictor(saved_model_dir, accelerator_id=0)

If you have more than one EI accelerator attached to your instance, you can choose between them using the accelerator_id argument. Simply replace your TensorFlow model object with eia_model; the rest of your script remains the same, and your model is now accelerated on Amazon EI.

The following figure compares CPU-only inference vs. EI-accelerated inference on the same CPU instance. In this example you see a speedup of over 6 times with an EI accelerator.

Summary

If there is one thing I want you to take away from the blog post, it is this: Deployment needs are unique and there really is no one size fits all. Review your deployment goals, compare them with the discussions in the article, and test out all options. Cloud makes it easy to try before you commit.

Keep these considerations in mind as you choose:

- Model type and programmability (model size, custom operators, supported frameworks)

- Target throughput, latency and cost (to deliver good customer experience at a budget)

- Ease of use of compiler and runtime toolchain (fast learning curve, doesn’t require hardware knowledge)

If programmability is very important, and you have low performance targets, then CPU might just work for you.

If programmability and performance are important, then you can develop custom CUDA kernels for custom ops that are accelerated on GPUs.

If you want the lowest cost option, and your model is supported on AWS Inferentia, you can save on overall deployment costs.

Ease of use is subjective, but nothing can beat native framework experience. But with a little bit of extra effort both AWS Neuron SDK for AWS Inferentia and NVIDIA TensorRT for NVIDIA GPUs can deliver higher performance, thereby reducing cost / inference.

Thank you for reading. In this article I was only able to give you a glimpse of the sample code we discussed. If you want to reproduce the results, visit the following GitHub repo:

https://github.com/shashankprasanna/ai-accelerators-examples

If you found this article interesting, please check out my other blog posts on Medium.

Want me to write on a specific machine learning topic? I’d love to hear from you! Follow me on Twitter (@shshnkp), LinkedIn or leave a comment below.