TMTB Weekly: Deepseek-ing for answers on the AI narrative going forward

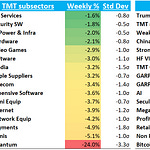

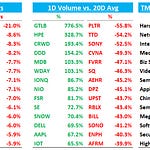

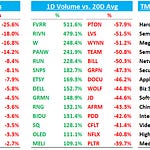

What a fun week! It was only 4 days, but we got plenty to keep us Tech investors entertained: Trump was inaugurated Monday with Tech CEOs huddled together, NFLX crushed subs and hit ATHs, $500B Stargate sucked all of the air out of the room, driving big rallies in ORCL and anything AI Capex levered, followed by some hot debate around where the funding was going to come from, a big positive pre-announcement from TWLO and a massively negative one from EA (along with a TXN guide down), and OTAs selling off on the OpenAI Operator release. If that wasn’t enough for 3 days, on Friday META came out swinging with a $60-$65B capex # only to be overshadowed as Deepseek stole the spotlight, raising concerns about whether the race for more and more compute had peaked this week. You want more? NPR reported this weekend that Trump is in talks to have Oracle and U.S. investors take over TikTok. And earnings season has barely begun…

It’s only January and things are just beginning to get good. So grab the popcorn, boot up your favorite Chinese LLM, and let TMTB help you navigate the last couple years of investing before the robots take over.

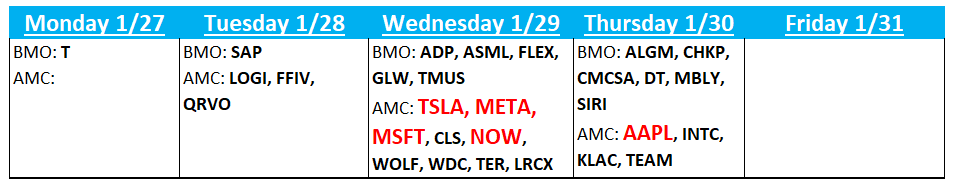

We’ll touch on the macro briefly, some quick thoughts on Deepseek and what it means for Tech Stocks, then dive into some previews/bogeys for the week ahead.

The bullish window that opened post-CPI without any big macro releases has played out, with the QQQs up 4%+ since the softer CPI print a week and a half ago. Yields have pulled back, and as long as they stay below previous highs, we think fears surrounding any bond tantrum will be subdued.

The dollar is rolling over a bit and is now 2.5% below highs, which should help Big Tech guides and sentiment going through earnings season.

This week brings some big macro releases: we have the Fed on Wednesday and PCE on Friday. Fed expectations continue to hover around 40bps worth of easing for the rest of the year. We continue to think macro releases will play an outsized role in determining the narrative and direction of the market. We don’t think we’ll hear any big surprises from the Fed this week. PCE is more of a wildcard so we’ll have to watch that closely. Our feeling is the market wants to continue to go up, so inline-ish prints/releases will continue to strengthen the current goldilocks narrative: subdued inflation, steady econ growth, fed easing cycle near the end but still intact, new biz friendly administration helping drive deregulation/increased biz + consumer sentiment, oil under $80, and AI supercycle still intact (yes, despite all the Deepseek noise and likely digestion in AI capex levered plays - more on this below).

Under the covers, price action in Tech supports the view that the market wants to continue higher. GOOGL AMZN NFLX META are at all-time highs and the narratives/valuations of those names still feel good to us (we prefer AMZN/META and think NFLX likely grinds higher; GOOGL we’re a bit more mixed on, but price action has been surprisingly positive despite some mixed 3p search checks so far in Q1). Semi leaders like AVGO TSM MRVL are within a few % of highs. SAP NOW near ATHs. Big move from ORCL. Names with good stories like CHWY, DASH, RBLX are hitting new highs. Pockets of animal spirits showing life. BTC still over $100k.

As we wrote about last week, we think 2025 will provide plenty of opportunities on both the long and short side, and this week’s big moves from TWLO/NFLX (beats) and EA/TXN (misses) showed that.

Deepseek AI - what does it mean for Tech stocks/AI narrative going forward?

We covered some initial takes in our Morning and EOD wraps on Friday and I won’t bore you with more 101 on it, as I’m sure you can read the 1,000s of takes on X this weekend if you want more. If you’re still catching up, I’d encourage you to take a look there. Here are two quick paragraphs to get you up to speed:

It is amazing to me how well DeepSeek’s infrastructure has held up given it’s now the #2 app in the App Store, behind ChatGPT.

Here’s my synthesis of what’s important for the AI narrative:

Whether or not Deepseek is being honest on development costs for their model, the reality is that the issue of LLMs headed for commoditization has been around for a while. It’s likely why Satya put the lid on increasing amounts of capex this week and has talked about value shifting to the app layer. It’s why the main take coming out of AMZN’s re:Invent conf was that their strategy was “predicated on the ideas that AI becomes a commodity not something eternally special.” Here’s how Ben from Stratechery put it in December, quoting AMZN’s AWS CEO Garman:

Here’s the argument on why everyone still needs more compute going forward:

"The space will continue evolving, but this doesn't change the fundamental advantage of having more GPUs rather than fewer. Consider an unlikely extreme scenario: we've reached the absolute best possible reasoning model - R10/o10, a superintelligent model with hundreds of trillions of parameters. Even if that's the smallest possible version while maintaining its intelligence - the already-distilled version - you'll still want to use it in multiple real-world applications simultaneously. You wouldn't want to choose between using it for improving cyber capabilities, helping with homework, or solving cancer. You'd want to do all of these things. This requires running many copies in parallel, generating hundreds or thousands of attempts at solving difficult problems before selecting the best solution. Even in this extreme case of total distillation and parity, export controls remain critically important. To make a human-AI analogy, consider Einstein or John von Neumann as the smartest possible person you could fit in a human brain. You would still want more of them. You'd want more copies. That's basically what inference compute or test-time compute is - copying the smart thing. It's better to have an hour of Einstein's time than a minute, and I don't see why that wouldn't be true for AI."

One positive tea leaf that this isn’t slowing spend is META announcing $60-65B in capex on Friday. Zuck and co have known about Deepseek for a while. I’m sure Altman/Ellison/Masa knew about Deepseek when Stargate was announced. But I’m sure those guys are feeling some heat as well this weekend - I would be, especially after watching some demos online this weekend of Deepseek re-creating OpenAI’s Operator, which costs $200/month.

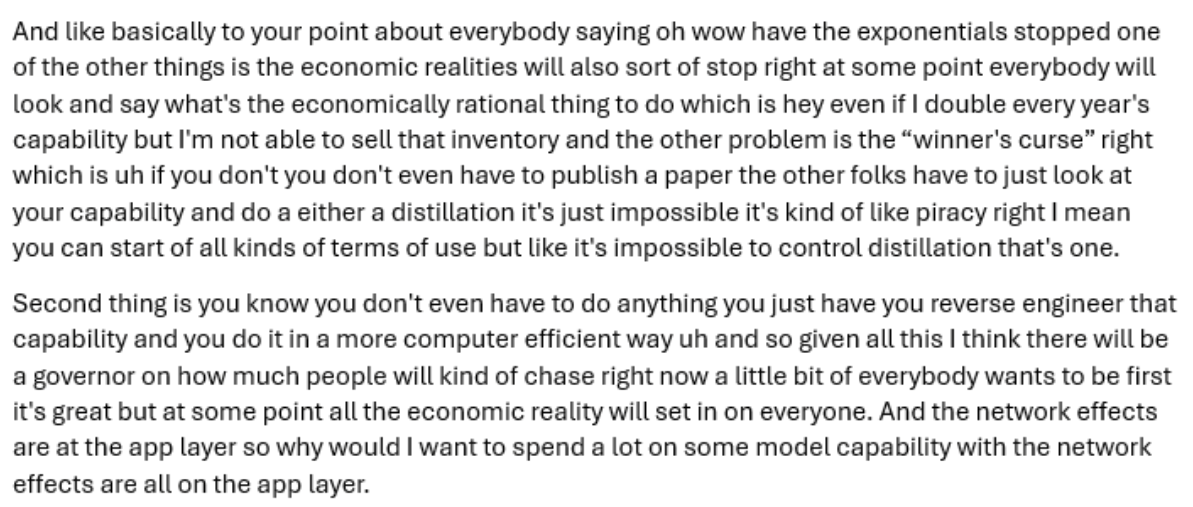

At the same time, Satya was one of the first who helped drive this whole AI ramp by funding and partnering with OpenAI. What does it mean that he’s now the first one to say hey, we’re going to slow down and think more economically here at MSFT? Here’s him on BG2 a couple weeks ago about the impossibility of preventing distillation and economic realities settling in:

Putting aside stocks for a second, this is massively bullish for the AI supercycle! Deepseek just improved efficiency by a ton. Every other top LLM will adopt Deepseek’s methods and improve their models faster and do it all with more compute. That means better AI apps and AI agents are coming sooner, faster, and better than previously thought. It means that innovation is occurring at more than just the compute level driving the exponential curve up not down. And that means a lot more inference spend. Eventually.

Back to stocks: that last word is the key for me when thinking about what this means for the AI narrative/stocks. While I think the news is massively bullish for the AI supercycle, the path and narrative never move in a straight line, especially when it comes to AI levered capex plays.

When it comes to AI capex levered names (NVDA, ASICs, AI Power, Memory, AI Infra, Nuclear, etc.): Near-term, investors likely need to update their priors on how much training compute is needed and how fast pricing on inference spend falls. The reality is we just don’t have a hit AI app yet outside of the LLMs. MSFT’s Copilot isn’t it. OpenAI’s Operator has promise but this version isn’t it. There are still some basic simple tasks, like working with Excel, that ChatGPT is frustratingly bad at. We haven’t seen any of the big medical breakthroughs promised. Robotics/FSD still not fully here. YET. I don’t doubt we’ll eventually see some really big breakthroughs and multiple hit AI apps take hold - and that might be just months away, but it’s not here NOW. That means inference spend hasn’t hit the inflection that would get investors excited enough not to worry about training spend and inference pricing coming down.

It’s funny how stocks work: early in the week Stargate sucked the air out of the room as everyone bid up anything AI capex related. It was a crescendo that now looks like a local peak. In hindsight, will we remember the picture of Trump, Altman, Ellison, and Masa on stage (what some would say is the ultimate “promotional hype squad”) as basically the meme that signaled a local top in AI capex levered names?

NVDA is the center of this debate and had already morphed into a bit of a battleground stock given: 1) the ASICs vs GPU debate as hyperscalers build out their own chips, 2) the Peak Capex/scaling laws debate, and 3) risk to the Feb-Q and Apr-Q guide given weaker Hopper checks/Blackwell delays. This news will only fuel that, and sentiment has already turned more negative over the weekend. This stock is still very well-owned and investors need to update their priors after this weekend, which means very likely more selling early in the week. The debate about whether this leads to more compute or less is unlikely to be solved in the near-term. What is clear: taken at face value, a company needing to spend $40k on an NVDA chip now only needs to spend $3k for the same amount of horsepower. That’s big.
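For a sense of scale, here is a quick back-of-envelope sketch of that $40k vs $3k comparison. It simply takes the two face-value figures above as given; the function name is made up for illustration and none of this is a measured cost.

```python
# Back-of-envelope only: $40k and $3k are the face-value figures quoted
# in the text, not verified hardware costs. `cost_reduction` is a
# hypothetical helper name used for this illustration.
def cost_reduction(old_cost: float, new_cost: float) -> tuple[float, float]:
    """Return (how many times cheaper, fractional saving) for equal compute."""
    if old_cost <= 0 or new_cost <= 0:
        raise ValueError("costs must be positive")
    ratio = old_cost / new_cost        # e.g. 40k / 3k
    saving = 1 - new_cost / old_cost   # share of spend no longer needed
    return ratio, saving


ratio, saving = cost_reduction(40_000, 3_000)
print(f"{ratio:.1f}x cheaper, {saving:.1%} less spend per unit of compute")
```

Put differently, if those numbers held, total dollars spent on compute would stay flat only if demand for compute grew by roughly that same multiple, which is the crux of the more-compute-or-less debate.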

MRVL/AVGO/ASICs - Inference costs, which were already going down, are going to take a big step function down. What does that mean for how much custom silicon hyperscalers need? Do they alter their plans? ISI’s Kevin Rippey also put it well this weekend: “Flexibility trumping TCO is where NVDA maintains its core edge against ASIC competition and when the playing field is moving as fast as we continue to observe, the optionality implicit in the NVDA ecosystem is hard to understate.”

What does this mean for MSFT? Mixed, as the OpenAI investment is arguably worth less and Azure gets revs/credits from OpenAI’s API. Check out this quote from The Information over the weekend:

Steve Hsu, a co-founder at enterprise AI agent developer SuperFocus, has been using the DeepSeek model released last month, DeepSeek-V3, and says it performs at a level similar to or better than that of OpenAI’s previous flagship model, GPT-4, which currently powers most of SuperFocus’ generative AI features.

His startup is likely to switch to DeepSeek in the upcoming weeks, he said. And because DeepSeek is available to download for free, SuperFocus can store and run it on its own servers, an important consideration for customers concerned about OpenAI having access to their corporate data, he said. OpenAI says it saves API queries for 30 days before deleting them.

Because the cloud API of DeepSeek V3 is sold at a fraction of the cost of GPT-4, it will also “increase our profit margins in the products we sell, or we can sell them more cheaply,” said Hsu, who is also a professor of theoretical physics and computational mathematics, science and engineering at Michigan State University.

On the flip-side, shifting value to the app layer is good for MSFT. Less capex spend on compute is good for MSFT. And Satya now seen again as the guy leading from the front.

Levered AI Capex names are some of the most crowded areas of the market. Given we still think the macro narrative is supportive for stocks to go up and we think that this news actually accelerates the AI Supercycle, we think that $ goes elsewhere instead of just being sold and kept in cash. So where does it go?

AI Agentic Software Names - Yes, on one hand you can argue AI going into hyperdrive means that software disintermediation is closer than previously thought, but we think that’s unlikely to be a 1H’25 story. We still think we’re in a golden “3 to 6 to 9 month window” for AI Agentic software names. Take CRM for example - CRM wanted to charge $2 per query for Agentforce to pay for inference costs. Now they can charge a fraction of that and accelerate demand significantly. Other names to watch: HUBS, TEAM, NOW.

Internet/AI Adopters: We think AI Adopters like META, RBLX, and RDDT stand to benefit the most. Yes, META just guided to $60-$65B capex, a big y/y accel in plans, but any sign that capex growth can slow would be a big boon to META’s FCF. Better, faster, cheaper AI = better ROI for META’s ad tech. Despite some catching up to do, META’s open-source approach will be seen as validated.

AMZN: As mentioned above, the main takeaway coming out of AMZN’s re:Invent conf was that their strategy was “predicated on the ideas that AI becomes a commodity not something eternally special.” That supports the view we wrote about in December that the narrative on AWS has changed from “AI loser” to potentially “AI leader.” Better AI is also likely to drive improvements in ad-tech, logistics, robotics, and cost-to-serve.

Speculative Tech: While AI capex levered names will be hit, we think other areas of speculative tech, in particular robotics and FSD (TSLA one of them) will see flows. Does accelerating FSD timelines hit UBER? Possibly.

Ok, I’ve spent way too much time on Deepseek. Tomorrow I’ll send out some bogeys for names that report this week.