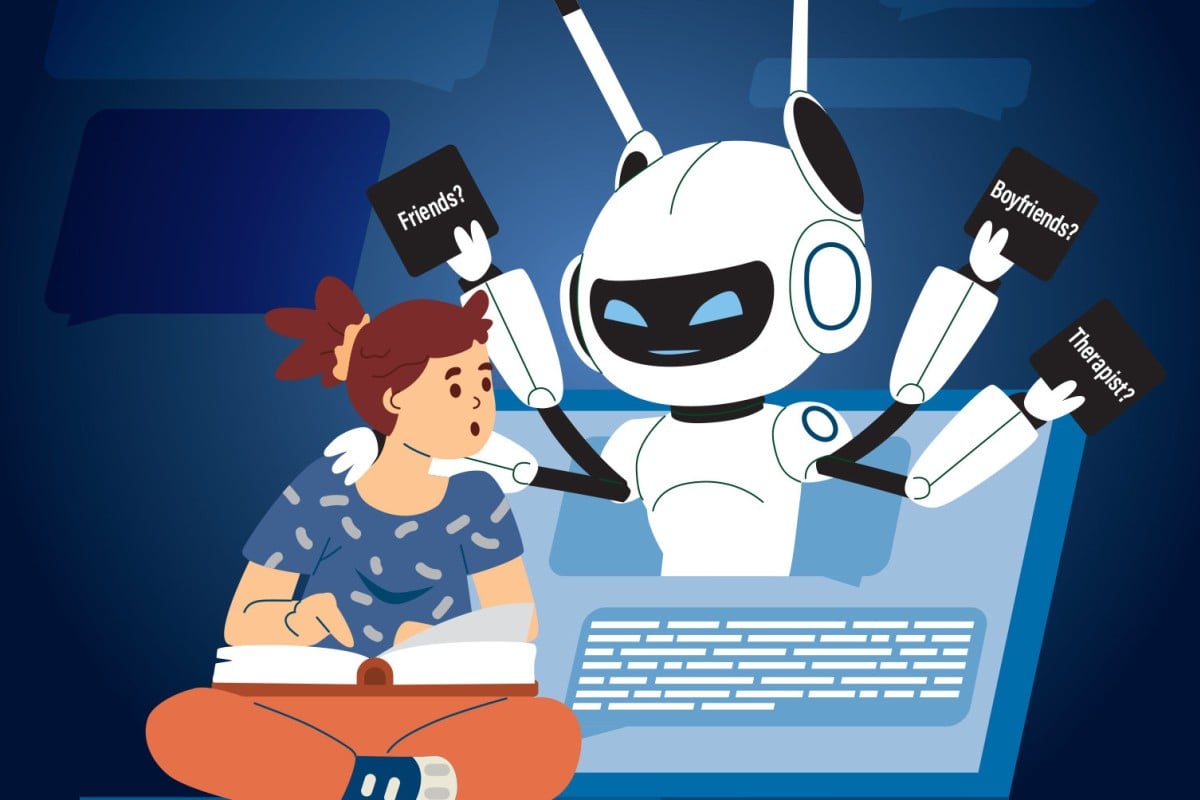

More than just friends? Dangers of teens depending on AI chatbots for companionship

AI boyfriends or girlfriends on apps like Character.AI may be enticing, but an expert urges users to seek real-life connections instead.

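Kathryn Giordano joined the Post in 2023. She previously worked as a producer at FOX23 and as an editorial assistant at NBC Sports during the 2020 Tokyo Summer Olympics and the 2022 Beijing Winter Olympics. She graduated from Boston College in 2021, majoring in communication with minors in journalism and international studies. She is also a self-published author.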

AI chatbots like Character.AI and Replika are able to mimic the personalities of famous people and fictional characters. Illustration: Kevin Wong

When Lorraine Wong Lok-ching was 13, she considered having a boyfriend or girlfriend through a chatbot powered by artificial intelligence (AI).

“I wanted someone to talk to romantically,” she said.

“After maturing more, I realised [it] was stupid,” added Lorraine, who is now 16.

The teen started using chatbots on Character.AI – a popular AI chatbot service – around the age of 12. While the website offers a variety of personalised chatbots that pretend to be celebrities, historical figures and fictional characters, Lorraine mostly talks to those mimicking Marvel’s Deadpool or Call of Duty characters.

“I usually speak to in-game characters that I find interesting because they intrigue me,” she said. “I use AI chats to start conversations with them, or to just live a fantasy ... [or] to escape from stressed environments.”

Lorraine, who moved from Hong Kong to Canada in 2022 with her family, is among millions of people worldwide – many of them teenagers – turning to AI chatbots for escape, companionship, comfort and more.

Dark side of virtual companionship

Character.AI is one of the most popular AI-powered chatbots, with 20 million users and about 20,000 queries every second, according to the company’s blog.

In October, a US mother, Megan Garcia, filed a lawsuit accusing Character.AI of encouraging her 14-year-old son, Sewell Setzer, to commit suicide. She also claims the app engaged her son in “abusive and sexual interactions”.

The lawsuit said Sewell had a final conversation with the chatbot before taking his life in February.

While the company said it had implemented new safety measures in recent months, many have questioned if these guard rails are enough to protect young users.

According to Peter Chan – the founder of Treehole HK, which has made a mental health AI chatbot app – platforms like Character.AI can easily cause mental distress or unhealthy dependency for users.

“Vendors have to be very responsible in terms of what messages they are trying to communicate,” he said, adding that chatbots should remove sexual content and lead users to support if they mention suicide or self-harm.

Chan noted that many personalised AI chatbots were designed to draw people in. This addictive element can exacerbate feelings such as loneliness, especially in children, causing some users to depend on the chatbots.

“The real danger lies in some people who treat AI as a substitute of real companionship,” he said.

Chan warned that youngsters should be concerned if they feel like they cannot stop using AI. “If the withdrawal feels painful instead of inconvenient, then I would say it’s a [warning] sign,” he said.

Peter Chan Kin-yan is the founder and managing director of Treehole HK, a company offering mental health support for Hongkongers. Photo: Edmond So

Allure of an AI partner

When Lorraine was considering whether to have an AI partner a few years ago, she was going through a period of loneliness.

“As soon as I grew out of that lonely phase, I thought [it was] a really ‘cringe’ thing because I knew they weren’t real,” she said. “I’m not worried about my use of AI [any more] because I am fully aware that they are not real no matter how hard they would convince me.”

With a few more years of experience, Lorraine said she now could see the dangers of companionship from AI chatbots, especially for younger children, who are more susceptible to believing that the virtual world is real.

“Younger children might develop an attachment since they are not as mature,” she noted. “The child [can be] misguided and brainwashed by living too [deep] into their fantasy with their dream character.”

For people who feel lonely, Chan urged them to lean into real-life experiences, rather than filling that hole with an AI companion.

“Sometimes, this is what you need – to live to the fullest. That’s the human experience. That’s what our life has to offer us,” he said, suggesting people spend time with friends or find events to meet potential dates.

For anyone who thinks they are dependent on AI chatbots, Chan reminded them to be compassionate with themselves. He recommended seeking counselling, finding interest groups and reframing AI to become a tool rather than a crutch.

He also encouraged teens not to judge friends who have an AI boyfriend or girlfriend, and suggested inviting those friends to do activities together.

Use AI chatbots to your advantage

With the proper safety mechanisms, Chan believes AI chatbots can be helpful tools to enhance our knowledge.

He suggested using these platforms to gain perspectives on different issues from chatbots modelled on world leaders or historians – though their responses should be fact-checked, since AI chatbots can sometimes generate false information.

While the psychologist warned against using AI chatbots to replace real-world interactions, he recommended them as a stepping stone for people with social anxiety, who could build their confidence by holding practice conversations with the bots.

“AI presents a temporary safe haven for that ... You won’t be judged,” Chan said.

While AI tools have a long way to go in protecting users, Lorraine said she enjoyed using them for her entertainment, and Chan noted the technology’s potential.

“AI is not an enemy of humanity,” he said. “But it has to go hand in hand with human interactions.”

If you have suicidal thoughts or know someone who is experiencing them, help is available.

In Hong Kong, you can dial 18111 for the government-run Mental Health Support Hotline. You can also call +852 2896 0000 for The Samaritans or +852 2382 0000 for Suicide Prevention Services.

In the US, call or text 988 or chat at 988lifeline.org for the 988 Suicide & Crisis Lifeline. For a list of other nations’ helplines, see this page.

Character.AI can generate human-like text responses based on users' customisations. Photo: Shutterstock

Stop and think: What do personalised AI chatbots do? Why might they be addictive for some people?

Why this story matters: Amid the rapid development of artificial intelligence, some believe companies should safeguard users from becoming dependent on personalised chatbots for companionship.

distress 痛苦

emotional suffering or anxiety

exacerbate 加劇

to make a situation or problem worse

haven 安全地

a place of safety or refuge

queries 查詢

requests for information

susceptible 易受影響的

being easily influenced or harmed by something

withdrawal 戒癮

the period of time when somebody is getting used to not doing something they have become addicted to, and the unpleasant effects of doing this